Update: Greg Brockman quit.

Update: Sam and Greg say:

Sam and I are shocked and saddened by what the board did today.

Let us first say thank you to all the incredible people who we have worked with at OpenAI, our customers, our investors, and all of those who have been reaching out.

We too are still trying to figure out exactly what happened. Here is what we know:

- Last night, Sam got a text from Ilya asking to talk at noon Friday. Sam joined a Google Meet and the whole board, except Greg, was there. Ilya told Sam he was being fired and that the news was going out very soon.

- At 12:19pm, Greg got a text from Ilya asking for a quick call. At 12:23pm, Ilya sent a Google Meet link. Greg was told that he was being removed from the board (but was vital to the company and would retain his role) and that Sam had been fired. Around the same time, OpenAI published a blog post.

- As far as we know, the management team was made aware of this shortly after, other than Mira who found out the night prior.

The outpouring of support has been really nice; thank you, but please don’t spend any time being concerned. We will be fine. Greater things coming soon.

Update: three more resignations including Jakub...

Perhaps worth noting: one of the three resignations, Aleksander Madry, was head of the preparedness team which is responsible for preventing risks from AI such as self-replication.

Also seems pretty significant:

As a part of this transition, Greg Brockman will be stepping down as chairman of the board and will remain in his role at the company, reporting to the CEO.

The remaining board members are:

OpenAI chief scientist Ilya Sutskever, independent directors Quora CEO Adam D’Angelo, technology entrepreneur Tasha McCauley, and Georgetown Center for Security and Emerging Technology’s Helen Toner.

Has anyone collected their public statements on various AI x-risk topics anywhere?

Adam D'Angelo via X:

Oct 25

This should help access to AI diffuse throughout the world more quickly, and help those smaller researchers generate the large amounts of revenue that are needed to train bigger models and further fund their research.

Oct 25

We are especially excited about enabling a new class of smaller AI research groups or companies to reach a large audience, those who have unique talent or technology but don’t have the resources to build and market a consumer application to mainstream consumers.

Sep 17

This is a pretty good articulation of the unintended consequences of trying to pause AI research in the hope of reducing risk: [citing Nora Belrose's tweet linking her article]

Aug 25

We (or our artificial descendants) will look back and divide history into pre-AGI and post-AGI eras, the way we look back at prehistoric vs "modern" times today.

Aug 20

It’s so incredible that we are going to live through the creation of AGI. It will probably be the most important event in the history of the world and it will happen in our lifetimes.

Has anyone collected their public statements on various AI x-risk topics anywhere?

A bit, not shareable.

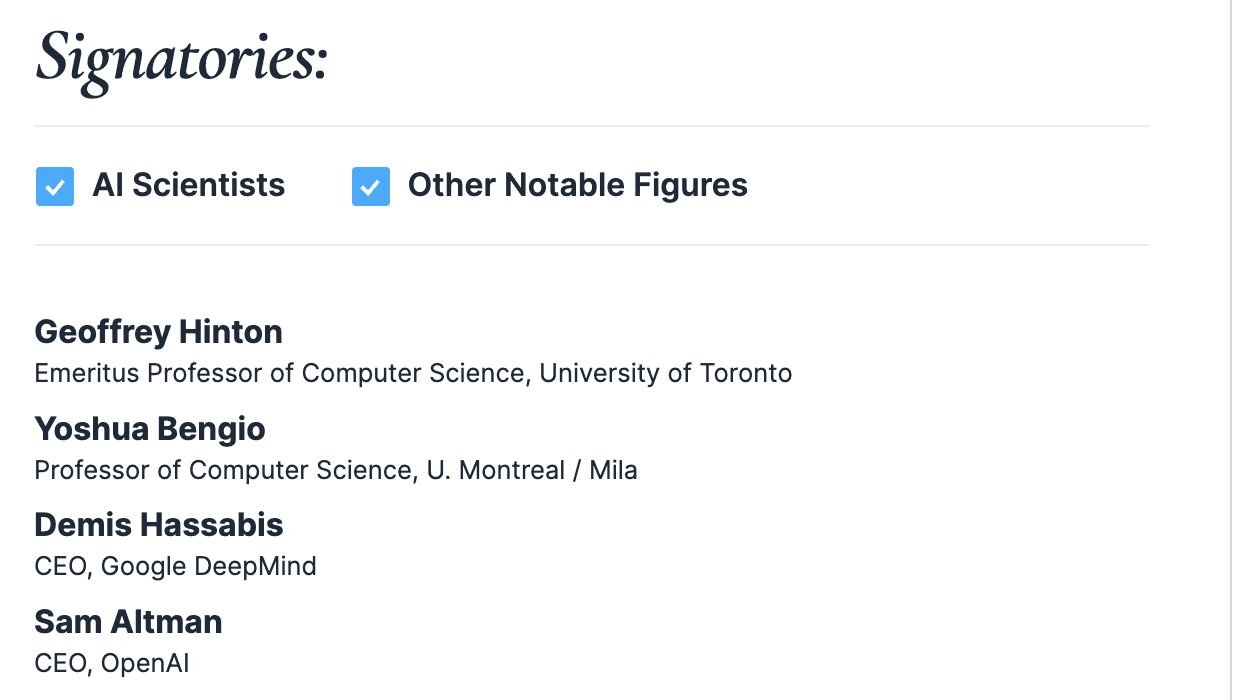

Helen is an AI safety person. Tasha is on the Effective Ventures board. Ilya leads superalignment. Adam signed the CAIS statement.

For completeness: in addition to Adam D’Angelo, Ilya Sutskever and Mira Murati also signed the CAIS statement.

Also D'Angelo is on the board of Asana, Moskovitz's company (Moskovitz who funds Open Phil).

Judging from his tweets, D'Angelo does not seem very concerned with AI risk, so I was quite taken aback to find out he was on the OpenAI board. I might be misinterpreting his views based on vibes, though.

I can't remember where, but I know that Ilya Sutskever at least takes x-risk seriously. I remember him recently going public about how failing at alignment would essentially mean doom. I think it was published as an article on a news site rather than as an interview, which is his usual format. Someone with a much better memory than me could find it.

"OpenAI’s ouster of CEO Sam Altman on Friday followed internal arguments among employees about whether the company was developing AI safely enough, according to people with knowledge of the situation.

Such disagreements were high on the minds of some employees during an impromptu all-hands meeting following the firing. Ilya Sutskever, a co-founder and board member at OpenAI who was responsible for limiting societal harms from its AI, took a spate of questions.

At least two employees asked Sutskever—who has been responsible for OpenAI’s biggest research breakthroughs—whether the firing amounted to a “coup” or “hostile takeover,” according to a transcript of the meeting. To some employees, the question implied that Sutskever may have felt Altman was moving too quickly to commercialize the software—which had become a billion-dollar business—at the expense of potential safety concerns."

Kara Swisher also tweeted:

"More scoopage: sources tell me chief scientist Ilya Sutskever was at the center of this. Increasing tensions with Sam Altman and Greg Brockman over role and influence and he got the board on his side."

"The developer day and how the store was introduced was an inflection moment of...

Kara Swisher (@karaswisher): https://twitter.com/karaswisher/status/1725678898388553901

Sources tell me that the profit direction of the company under Altman and the speed of development, which could be seen as too risky, and the nonprofit side dedicated to more safety and caution were at odds. One person on the Sam side called it a “coup,” while another said it was the right move.

I think this makes sense as an incentive structure for AI acceleration: even if someone is trying to accelerate AI for altruistic reasons, e.g. differential tech development (maybe they calculate that LLMs give interpretability better odds of succeeding because they think in English), they should still lose access to their AI lab shortly after accelerating AI.

Accelerating AI brings so much personal profit that only people prepared to personally lose it all within 3 years would be willing to sacrifice enough to do something as extreme as burning the remaining timeline.

I'm generally not on board with leadership shakeups in the AI safety community, because the disrupted alliance webs create opportunities for resourceful outsiders to worm their way in. I worry especially about incentives for the US natsec community to do this. But when I look at it from the game theory/moloch perspective, it might be worth the risk, if it means setting things up so that the people who accelerate AI always fail to be the ones who profit off of it, and therefore can only accelerate because they think it will benefit the world.

Looks like Sam Altman might return as CEO.

OpenAI board in discussions with Sam Altman to return as CEO - The Verge

I expect investors will take the nonprofit status of these companies more seriously going forward.

I hope Ilya et al. realize what they’ve done.

Edit: I think I’ve been vindicated a bit. As I expected, money is poised to flock to for-profit AGI labs right now. I hope OpenAI remains a nonprofit, but I think Ilya played with fire.

So, Meta disbanded its responsible AI team. I hope this story reminds everyone about the dangers of acting rashly.

Firing Sam Altman was really a one-time-use card.

Microsoft probably threatened to pull its investment and compute, which would let Sam Altman's new competitor pull ahead regardless, since OpenAI would be eviscerated in terms of both funding and human capital. This move makes sense if you’re at the precipice of AGI, but not before that.

Now he’s free to run for governor of California in 2026:

...I was thinking about it because I think the state is in a very bad place, particularly when it comes to the cost of living and specifically the cost of housing. And if that doesn’t get fixed, I think the state is going to devolve into a very unpleasant place. Like one thing that I have really come to believe is that you cannot have social justice without economic justice, and economic justice in California feels unattainable. And I think it would take someone with no loyalties to sort of very powerful

Aside from the obvious questions of how this will affect OpenAI's alignment approach and whether it is a factional war of some sort, I really hope this has nothing to do with Sama's sister. Both options are very bad: "she is wrong but something convinced the OpenAI leadership that she's right" and "she is actually right and finally gathered some proof of her claims". ...On the other hand, as cynical and grim as that is, sexual harassment probably won't spell disaster down the line, unlike a power struggle at the top of an AGI-pursuing company.

How surprising is this to alignment professionals (e.g. people at MIRI, Redwood Research, or similar)? From an outside view, the volatility/flexibility and the movement away from pure growth and commercialization seem unexpected and could benefit alignment researchers (although the repercussions are hard to see at this point). It is surprising to me because I don't know the inner workings of OpenAI, but I'm surprised that it seems just as surprising to the LW/alignment community.

Perhaps the insiders are stil...

It seems this was a surprise to almost everyone even at OpenAI, so I don’t think it is evidence that there isn’t much information flow between LW and OpenAI.

Someone writes anonymously, "I feel compelled as someone close to the situation to share additional context about Sam and company. . . ."

https://www.reddit.com/r/OpenAI/comments/17xoact/comment/k9p7mpv/

I wonder what changes will happen after Sam and Greg's exit. I hope they set a better direction toward AI safety.

Hmmm. Given the way Sam behaves, I can't see him leading an AI company toward safety. I interpreted his world tour (22 countries?), talking about OpenAI and AI in general, as him trying to occupy the mindspace of those countries. The CEO I wish OpenAI had is someone who stays at the office, ensuring that we stay on track in safely steering arguably the most revolutionary tech ever created, not someone promoting the company or the tech. I think a world tour is unnecessary if one is developing and deploying AI safely.

(But I could be wrong too. Well, let's all see what's going to happen next.)

So I guess OpenAI will keep pushing ahead on both safety and capabilities, but not so much on commercialization?

Typical speculations:

- Annie Altman charges

- Undisclosed financial interests (AGI, Worldcoin, or YC)

Potentially relevant information:

OpenAI insiders seem to also be blindsided and apparently angry at this move.

I personally think there were likely better ways for Ilya's faction to get Sam's faction to negotiate, but this firing makes sense given some reviews describing the company as having communication issues overall and potentially a toxic work environment.

edit: link source now available in replies

I'm just a guy, but the impression I get from occasionally reading the Money Stuff newsletter is that basically anything bad you do at a public company is securities fraud, because if you do a bad thing and don't tell investors, then people who buy the securities you offer are doing so without full information because of you.

A wild (probably wrong) theory: Sam Altman announcing custom GPTs was the thing that pushed the board to fire him.

customizable AI -> user can override RLHF (maybe, probably) -> we are at risk from AIs that have been fine-tuned by bad actors

Basically just the title; see the OAI blog post for more details.

EDIT:

Also, Greg Brockman is stepping down from his board seat:

The remaining board members are:

EDIT 2:

Sam Altman tweeted the following.

Greg Brockman has also resigned.