On IABIED

First things first, I wholeheartedly endorse the main actionable conclusion: Ban unrestrained progress on AI that can kill us all.

I broadly think Eliezer and Nate did a good job communicating what's so difficult about the task of building a thing that is more intelligent than all of humanity combined and shaped appropriately so as to help us, rather than have a volition of its own that runs contrary to ours.[1]

The main (/most salient) disagreement I can see at the moment is the authors' expectations of value-strangeness and maximizeriness of superintelligence; or rather, I am much more uncertain about this. However, this detail is not relevant for the desirability of the post-ASI future, conditional on business-close-to-as-usual and therefore not relevant for whether the ban is good.

(Also, not sure about their choice of some stories/parables, but that's a minor issue as well.)

I liked the comparison with the Allies winning against the Axis in WWII, which, at least in resource/monetary terms, must have cost much more than it would cost to implement the ban. The things we're missing at the moment are awareness of the issue, pulling ourselves together, and collective steam.

[1] Whatever that means; cf. the problems of CEV and idealized values.

The main (/most salient) disagreement I can see at the moment is the authors' expectations of value-strangeness and maximizeriness of superintelligence; or rather, I am much more uncertain about this.

The metaphor I use is Russian roulette, where you have a revolver with 6 chambers, 1 loaded with a bullet. The gun is pointed at the head of humanity. We spin, and we pull the trigger.

- Various AI lab leaders have stated that we are playing with 1 (or 1.5) chambers loaded. Dario Amodei at Anthropic is the most pessimistic, I believe, giving us a 25% chance that AI kills us?

- Eliezer and Nate suspect that we are playing with approximately 6 out of 6 chambers loaded.

- Personally, I have a long and complicated argument that we may only be playing with 4 or 5 chambers loaded!

- You might feel that we're only playing with 2 or 3 chambers loaded.

The wise solution here is not to worry about precisely how many chambers are loaded. Even 1 in 6 odds are terrible! The wise solution is to stop playing Russian roulette entirely.
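A minimal sketch of the arithmetic behind the metaphor, converting "chambers loaded" into the probability that a single pull fires. The function name `p_fire` and the constant `CHAMBERS` are illustrative choices; the chamber counts are the ones listed above. Note that 1.5 chambers corresponds to the 25% figure.

```python
from fractions import Fraction

CHAMBERS = 6  # revolver size used in the metaphor


def p_fire(loaded):
    """Probability that one pull fires, given how many chambers are loaded."""
    if not 0 <= loaded <= CHAMBERS:
        raise ValueError("loaded must be between 0 and CHAMBERS")
    return Fraction(loaded) / CHAMBERS


# Chamber counts mentioned in the list above (1.5 is the "1 (or 1.5)" case).
for loaded in (1, 1.5, 2, 3, 4, 5, 6):
    print(f"{loaded} loaded -> {float(p_fire(loaded)):.0%}")
```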

In his MLST podcast appearance in early 2023, Connor Leahy describes Alfred Korzybski as a sort of "rationalist before the rationalists":

Funny story: rationalists actually did exist, technically, before or around World War One. So, there is a Polish nobleman named Alfred Korzybski who, after seeing horrors of World War One, thought that as technology keeps improving, well, wisdom's not improving, then the world will end and all humans will be eradicated, so we must focus on producing human rationality in order to prevent this existential catastrophe. This is a real person who really lived and he actually sat down for like 10 years to like figure out how to like solve all human rationality God bless his autistic soul. You know, he failed obviously but you know you can see that the idea is not new in this regard.

Korzybski's two published books are Manhood of Humanity (1921) and Science and Sanity (1933).

E. P. Dutton published Korzybski's first book, Manhood of Humanity, in 1921. In this work he proposed and explained in detail a new theory of humankind: mankind as a "time-binding" class of life (humans perform time binding by the transmission of knowledge and abstractions through time which become accreted in cultures).

Having read the book (and having filtered it through some of my own interpretation of it and perhaps some steelmanning), I am inclined to interpret his "time-binding" as something like (1) accumulation of knowledge from past experience across time windows that are inaccessible to any other animals (both individual (long childhoods) and cultural learning); and (2) the ability to predict and influence the future. This gets into the neighborhood of "agency as time-travel", consequentialist cognition, etc.

In the wiki page of his other book:

His best known dictum is "The map is not the territory": He argued that most people confuse reality with its conceptual model.

(But that is relatively well-known.)

Korzybski intended the book to serve as a training manual. In 1948, Korzybski authorized publication of Selections from Science and Sanity after educators voiced concerns that at more than 800 pages, the full book was too bulky and expensive.

As Connor said...

God bless his autistic soul. You know, he failed obviously but

...but 60 years later, his project would be restarted.

See also: https://www.lesswrong.com/posts/qc7P2NwfxQMC3hdgm/rationalism-before-the-sequences

I've written about this here:

https://www.lesswrong.com/posts/kFRn77GkKdFvccAFf/100-years-of-existential-risk

The acronym SLT (in this community) is typically taken/used to refer to Singular Learning Theory, but sometimes also to (~old-school-ish) Statistical Learning Theory and/or to Sharp Left Turn.

I therefore propose that, to disambiguate between them and to clean up the namespace, we use SiLT, StaLT, and ShaLT, respectively.

So that was your idea! Aram Ebtekar and I have been going around suggesting this but couldn’t remember who initially proposed it at Iliad 2.

Let this thread be the canonical reference for posterity that this idea appeared in my mind at ODYSSEY at the session "Announcing Universal Algorithmic Intelligence Reading Group" held by you and Aram in Bayes Attic, on Wednesday, 2025-08-27, around 07:20 PM, when, for whatever reason, the two S Learning Theories entered the conversation, when you were putting some words on the whiteboard, and somebody voiced a minor complaint that SLT stands for both of them.

Are there any memes prevalent in the US government that make racing to AGI with China look obviously foolish?

The "let's race to AGI with China" meme landed for a reason. Is there something making the US gov susceptible to some sort of counter-meme, like the one expressed in this comment by Gwern?

The "no interest in an AI arms race" claim is now looking false, as apparently China as a state has devoted $137 billion to AI, which is at least a yellow flag that they are interested in racing.

apparently China as a state has devoted $1 trillion to AI

Source? I only found this article about 1 trillion Yuan, which is $137 billion.

Yeah, that was what I was referring to, and I thought it would actually be a trillion dollars, sorry for the numbers being wrong.

I'd vainly hope that everyone would know about the zero-sum nature of racing to the apocalypse from nuclear weapons, but the parallel isn't great, and no-one seems to have learned the lesson anyways, given the failure of holding SALT III or even doing START II.

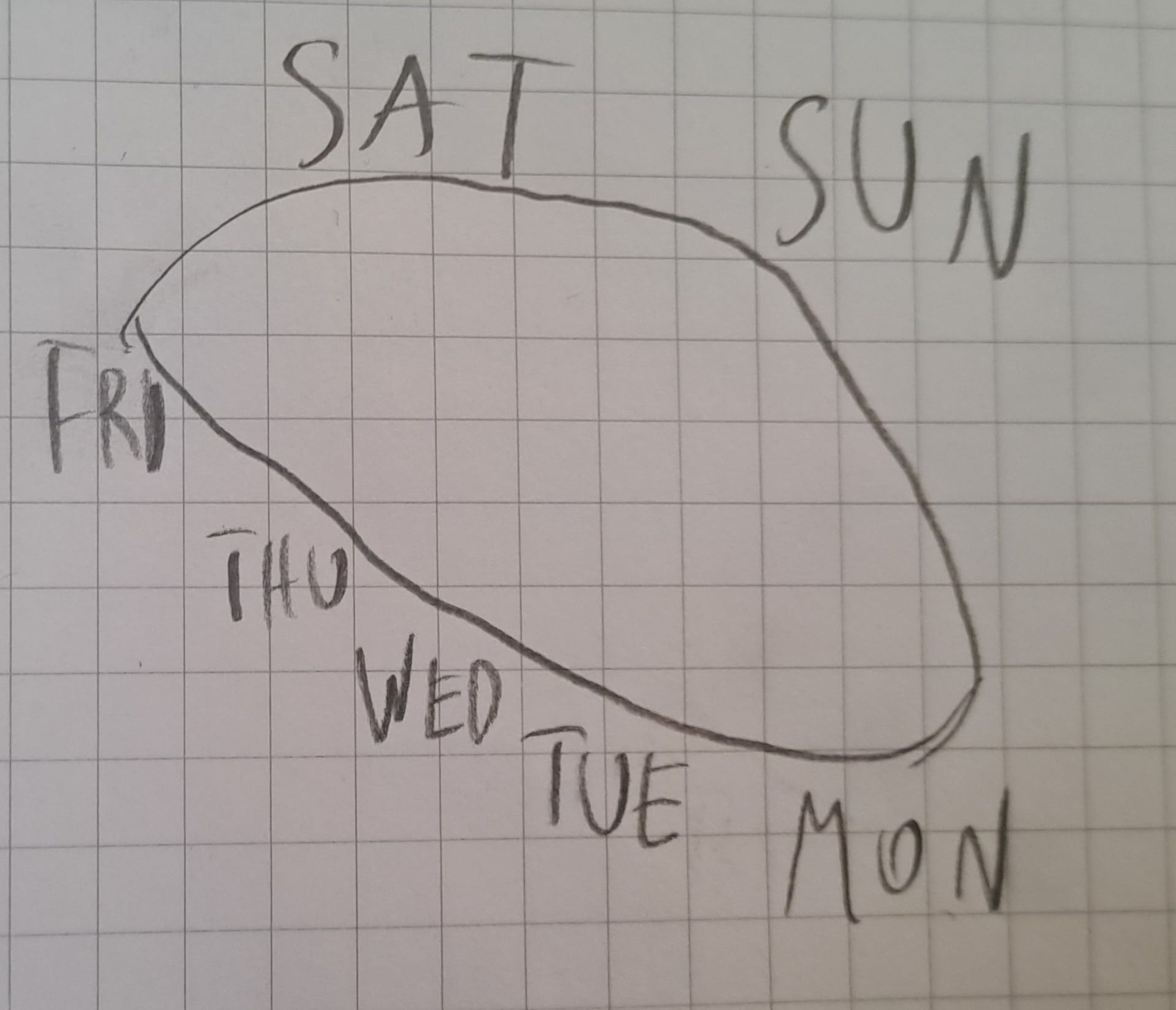

For as long as I can remember, I've had a very specific way of imagining the week. The weekdays are arranged on an ellipse, with an inclination of ~30°, starting with Monday in the bottom-right, progressing along the lower edge to Friday in the top-left, then the weekend days go above the ellipse and the cycle "collapses" back to Monday.

Actually, calling it "ellipse" is not quite right because in my mind's eye it feels like Saturday and Sunday are almost at the same height, Sunday just barely lower than Saturday.

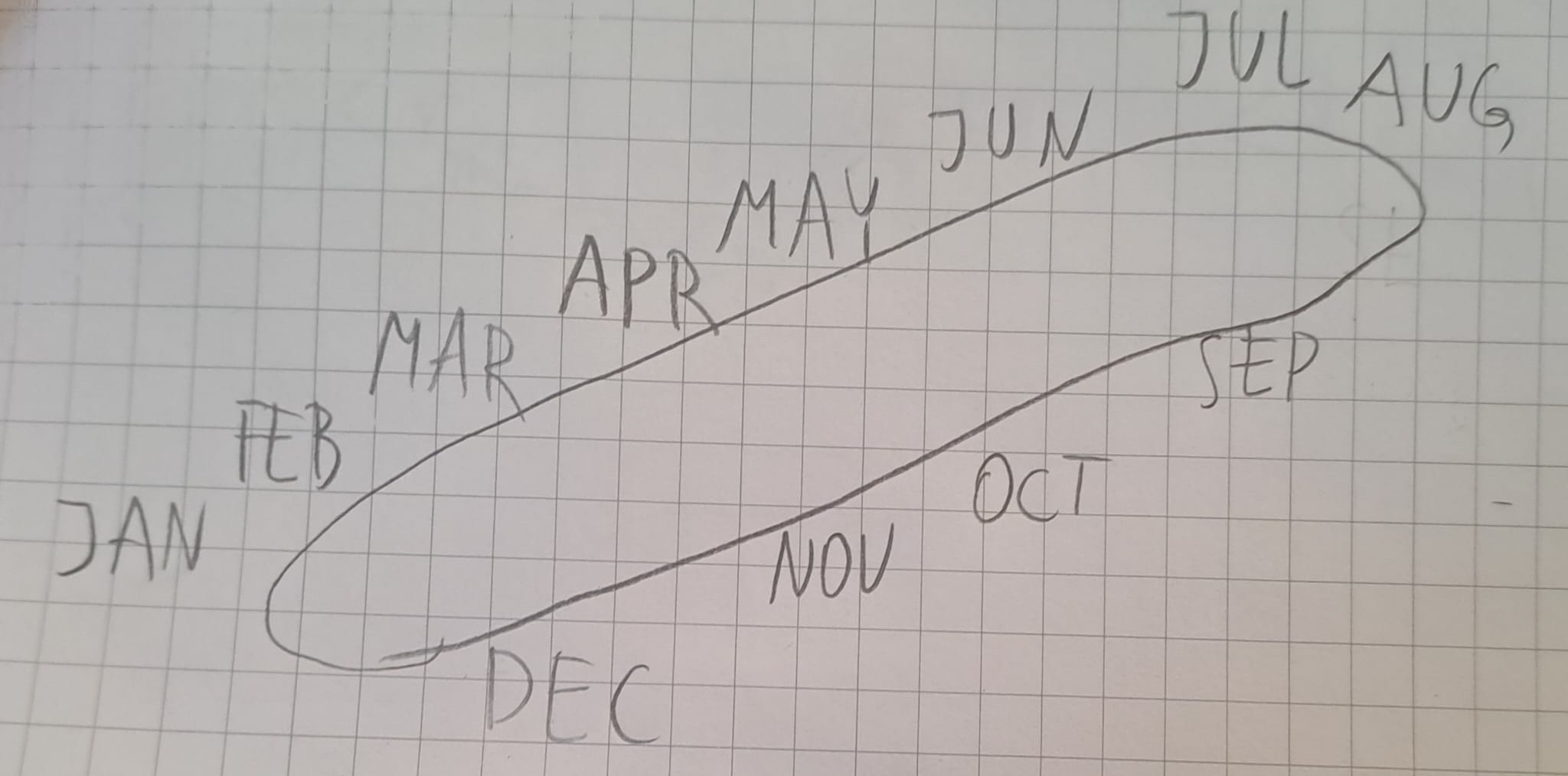

I have a similar ellipse for the year, this one oriented bottom-left to top-right:

This one also feels wrong because "in my head" each of the following is true:

- Each month takes the same amount of space (/measure?).

- There are fewer months along the lower side of the cycle.

- I strongly feel that "this should be a mathematically proper ellipse". (Non-Euclidean geometry?)

The main interesting commonalities I see between them:

- The initial elements (Monday and January) start in the lower corner of the cycle.

- The "free" elements of the cycle (weekend and summer vacation) are along the top edge of the cycle.

- The segment between the last "free" element and the initial element is stretched so as to make it ellipse-like?

- Clockwise direction.

I don't remember exactly when I became meta-aware of this in the sense of knowing that this way of imagining the most basic temporal cycles is probably rather peculiar to me. It certainly was between learning about associative synesthesia (of which I think this is an example?), which was in my first year of university at the latest (~8 years ago), and when I first described this to somebody, maybe about 2 or 3 years ago, their reaction being approximately "WTF".

I've spoken about it to a few people so far (~10?), and nobody reported having anything like this.

I just met someone recently who has this! They said they have always visualized the months of the year as on a slanted treadmill, unevenly distributed. They described it as a form of synesthesia, which is conceptually consistent with how I experience grapheme-color associative synesthesia.

This is very similar to how I perceive time! What I find interesting is that, while I’ve heard people talk about the way they conceptualize time before, I’ve never heard anyone else mention the bizarre geometry aspect. The sole exceptions to this were my Dad and Grandfather, who brought this phenomenon to my attention when I was young.

I have similar thing for week days, but somehow with a weird shape?

in general, it's a similar cycle, but flipped horizontally, going left to right:

on top it's: sun, sat

on the bottom: mon, tue, wed, thu, fri

the shape connecting days goes downwards from sun to mon, tue, wed, then upwards to thu, then down to fri, then up to sat, sun, closing the loop.

not sure is this makes any sense )

not sure is this makes any sense )

I think I understand.

Has it always been with you? Any ideas what might be the reason for the bump at Thursday? Was Thursday in some sense "special" for you when you were a kid?

Ha, thinking back to childhood I get it now, it's the influence of the layout of the school daily journal in USSR/Ukraine, like https://cn1.nevsedoma.com.ua/images/2011/33/7/10000000.jpg

I have something like this for years: https://www.lesswrong.com/posts/j8WMRgKSCxqxxKMnj/what-i-think-about-when-i-think-about-history

For as long as I can remember, I have always placed dates on an imaginary timeline, that "placing" involving stuff like fuzzy mental imagery of events attached to the date-labelled point on the timeline. It's probably much less crisp than yours because so far I haven't tried to learn history that intensely systematically via spaced repetition (though your example makes me want to do that), but otherwise sounds quite familiar.

Strongly normatively laden concepts tend to spread their scope, because (being allowed to) apply a strongly normatively laden concept can be used to one's advantage. Or maybe more generally and mundanely, people like using "strong" language, which is a big part of why we have swearwords. (Related: Affective Death Spirals.)[1]

(In many of the examples below, there are other factors driving the scope expansion, but I still think the general thing I'm pointing at is a major factor and likely the main factor.)

1. LGBT started as LGBT, but over time developed into LGBTQIA2S+.

2. Fascism initially denoted, well, fascism, but now it often means something vaguely like "politically more to the right than I am comfortable with".

3. Racism initially denoted discrimination along the lines of, well, race, a socially constructed category with some non-trivial rooting in biological/ethnic differences. Now jokes targeting a specific nationality or subnationality are often called "racist", even if the person doing the joking is not "racially distinguishable" (in the old-school sense) from the ones being joked about.

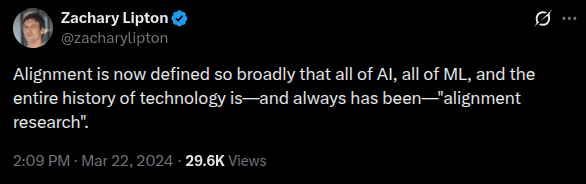

4. Alignment: In IABIED, the authors write:

The problem of making AIs want—and ultimately do—the exact, complicated things that humans want is a major facet of what’s known as the “AI alignment problem.” It’s what we had in mind when we were brainstorming terminology with the AI professor Stuart Russell back in 2014, and settled on the term “alignment.”

[Footnote:] In the years since, this term has been diluted: It has come to be an umbrella term that means many other things, mainly making sure an LLM never says anything that embarrasses its parent company.

See also: https://www.lesswrong.com/posts/p3aL6BwpbPhqxnayL/the-problem-with-the-word-alignment-1

https://x.com/zacharylipton/status/1771177444088685045 (h/t Gavin Leech)

5. AI Agents.

It would be good to deconflate the things that these days go by "AI agents" and "Agentic™ AI", because it makes people think that the former are (close to being) examples of the latter. Perhaps we could rename the former to "AI actors" or something.

But it's worse than that. I've witnessed an app generating a document with a single call to an LLM (based on the inputs from a few textboxes, etc.) being called an "agent". Calling [an LLM-centered script running on your computer and doing stuff to your files or on the web, etc.] an "AI agent" is defensible on the grounds of continuity with the old notion of software agent, but if a web scraper is an agent and a simple document generator is an agent, then what is the boundary (or gradient / fuzzy boundary) between agents and non-agents that justifies calling those two things agents but not a script meant to format a database?

[1] There's probably more stuff going on required to explain this comprehensively, but that's probably >50% of it.

Russell and Norvig discuss "intelligent agents" in AIMA (2003) and they don't mean web scrapers or database scripts, but they also don't mean that the thing they're discussing is conscious or super-rational or anything fancy like that. A self-driving car is an "agent" in their sense.

I suspect the use of "agentic" to mean something like "highly instrumentally rational" — as in "I want to become more agentic" — is an LW idiosyncrasy.

In human psychology, Milgram used "agentic" to mean "obedient", in contrast to "autonomous"!

As an aside, the origins of "LGBT" and "racism" are not quite what you say. A historical dictionary may help. "LGBT" was itself an expansion of earlier terms. LGB (and GLB) were used in the 1990s; and LG is found in the 1970s, for instance in the name of the ILGA which was originally the International Lesbian & Gay Association and more recently the International Lesbian, Gay, Bisexual, Trans and Intersex Association while retaining the shorter initialism.

beyond doom and gloom - towards a comprehensive parametrization of beliefs about AI x-risk

doom - what is the probability of AI-caused X-catastrophe (i.e. p(doom))?

gloom - how viable is p(doom) reduction?

foom - how likely is RSI?

loom - are we seeing any signs of AGI soon, looming on the horizon?

boom - if humanity goes extinct, how fast will it be?

room - if AI takeover happens, will AI(s) leave us a sliver of the light cone?

zoom - how viable is increasing our resolution on AI x-risk?

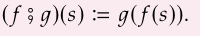

The notations we use for (1) function composition; and (2) function types, "go in opposite directions".

For example, take functions $f: X \to Y$ and $g: Y \to Z$ that you want to compose into a function $h: X \to Z$ (or $X \to Y \to Z$), which, starting at some element $x \in X$, uses $f$ to obtain some $y \in Y$, and then uses $g$ to obtain some $z \in Z$. The notation goes from left to right, which works well for the minds that are used to the left-to-right direction of English writing (and of writing of all extant European languages, and many non-European languages).

One might then naively expect that a function that works like "$f$, then $g$", would be denoted $fg$ or maybe with some symbol combining $f$ on the left and $g$ on the right, like $f \circ g$ or $f \cdot g$.

But, no. It's $g \circ f$ or sometimes $gf$, especially in the category theory land.

The reason for this is simple: function application. For some historical reasons (that are plausibly well-documented somewhere in the annals of history of mathematics), when mathematicians were still developing a symbolic way of doing math (rather than mostly relying on geometry and natural language) (and also the concept of a function, which I hear has undergone some substantial revisions/reconceptualizations), the common way to denote an application of a function $f$ to a number $x$ became $f(x)$. So, now that we're stuck with this notation, to be consistent with it, we need to write the function "$f$, then $g$" applied to $x$ as $g(f(x))$. Hence, we write this function abstractly as $g \circ f$ and read "$g$ after $f$".
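The order reversal can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `compose` and `pipe` are hypothetical helpers written here (Python has no built-in operator for either), and `double` and `add3` are toy functions chosen so that the two orders give different results.

```python
from functools import reduce


def compose(*funcs):
    """Right-to-left composition: compose(g, f)(x) == g(f(x)), like "g after f"."""
    return lambda x: reduce(lambda acc, fn: fn(acc), reversed(funcs), x)


def pipe(x, *funcs):
    """Left-to-right application: pipe(x, f, g) == g(f(x)), like "f, then g"."""
    return reduce(lambda acc, fn: fn(acc), funcs, x)


def double(x):
    return x * 2


def add3(x):
    return x + 3


# "double, then add3" has to be spelled with add3 first in composition order...
print(compose(add3, double)(10))  # 23
# ...but reads in execution order with the pipe-style helper.
print(pipe(10, double, add3))     # 23
# Swapping the arguments of compose yields a different function.
print(compose(double, add3)(10))  # 26
```

The only difference between the two helpers is the call to `reversed`, which is the whole mismatch between composition order and reading order.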

To make the weirdness of this notation fully explicit, let's add types to the symbols (which I unconventionally write in square brackets to distinguish typing from function application).

$$g[Y \to Z]\big(f[X \to Y](x[X])\big)$$

You can see the logic to this madness, but it feels unnatural. Like, of course, you could just as well write the function signature the other way around (like $f: Y \leftarrow X$ or $g \circ f: Z \leftarrow X$) or introduce a notation for function composition that respects our intuition that a composition of "$f$, then $g$", should have $f$ on the left and $g$ on the right (again, assuming that we're used to reading left-to-right).

Regarding the first option, I recall Bartosz Milewski saying in his lectures that "we draw string diagrams" right-to-left, so that the direction of "flow" in the diagram is consistent with the $g \circ f$ ("$g$ after $f$") notation. But now looking up some random string diagrams, this doesn't seem to be the standard practice (even when they're drawn horizontally, whereas it seems like they might be more often drawn vertically).

Regarding the second option, some category theory materials use the funky semicolon notation, e.g., this, from Seven Sketches in Compositionality (Definition 3.24). I recall encountering it in places other than that textbook, but I don't think it's standard practice.

I like it better, but it still leaves something to be desired, because the explicitized signature of all the atoms of the expression on the left is something like $[X \to Y]\,[Y \to Z]\,[X]$. Why not have a notation that respects the obvious and natural order of $[X]\,[X \to Y]\,[Y \to Z]$?

Well, many programming languages actually support pipe notation that does just that.[1]

Julia:

```julia
add2(x) = x + 2
add3(x) = x + 3
10 |> add2 |> add3
# 15
```

Haskell:

```haskell
import Data.Function ((&))

add2 = (+2)
add3 = (+3)
10 & add2 & add3
-- 15
```

Nushell:

```nu
def add2 [] { $in + 2 }
def add3 [] { $in + 3 }
10 | add2 | add3
# 15
```

Which makes me think of an alternative timeline where we developed a notation for function application where we first write the arguments and then the functions to be applied to them.

And, honestly, I find this idea very appealing. Elegant. Natural. Maybe it's because I don't need to switch my reading mode from LtR to RtL when I'm encountering an expression involving function application, and instead I can just, you know, keep reading. It's a marginal improvement in fluent interoperability between mathematical formalisms and natural language text.

In a sense, our current style is a sort of boustrophedon, although admittedly a less unruly sort, as the "direction" of the function depends on the context, rather than cycling through LtR and RtL.

(Sidenote: I recently learned about head-directionality, a proposed parameter of languages. Head-initial languages put the head of the phrase before its complements, e.g., "dog black". Head-final put it after the complements, e.g., "black dog". Many languages are a mix. So our mathematical notation is a mix in this regard, and I am gesturing that it would be nicer for it to be argument-initial.)

[This is a simple thing that seems evident and obvious to me, but I've never heard anyone comment on it, and for some reason, it didn't occur to me to elaborate on this thought until now.]

[1] I think they are less friendly if you want to do something more complicated with functions accepting more than 1 argument, but this seems solvable.

I think they are less friendly if you want to do something more complicated with functions accepting more than 1 argument, but this seems solvable.

Indeed, this is called reverse Polish / postfix notation. For example, f(x, g(y, z)) becomes x (y z g) f, which is written without parentheses as x y z g f. That is, if you know the arity of all the letters beforehand, parentheses are unnecessary.
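To illustrate why known arities make parentheses unnecessary, here is a minimal sketch that rebuilds the nested call from a postfix token list using a stack. The function name `postfix_to_call` and the arity table are hypothetical, chosen to match the example above; any symbol missing from the table is treated as a plain argument.

```python
def postfix_to_call(tokens, arity):
    """Rebuild nested-call notation from postfix tokens, given each function's arity."""
    stack = []
    for tok in tokens:
        n = arity.get(tok, 0)  # symbols not in the table are plain arguments
        if n == 0:
            stack.append(tok)
            continue
        if len(stack) < n:
            raise ValueError(f"{tok} needs {n} arguments, found {len(stack)}")
        args = stack[-n:]  # the n most recent items, in left-to-right order
        del stack[-n:]
        stack.append(f"{tok}({', '.join(args)})")
    if len(stack) != 1:
        raise ValueError("expression does not reduce to a single value")
    return stack[0]


print(postfix_to_call("x y z g f".split(), {"f": 2, "g": 2}))
# f(x, g(y, z))
```

Each function symbol consumes exactly as many stack items as its arity says, so the grouping is fully determined without any brackets.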

Lol, right! Only after I published this did I recall that there was something vaguely in this spirit called "Polish notation", and it turns out it's exactly that.

Recently, I watched Out of This Box. In the musical, they test their nascent AGI on the Christiano-Sisskind test, a successor to the Turing test. What the test involves exactly remains unexplained. Here are my hypotheses.[1]

Sisskind certainly refers to Scott Alexander, and one thing Scott Alexander has written in the vicinity of the Turing test is this post (italics added):

The year is 2028, and this is Turing Test!, the game show that separates man from machine! Our star tonight is Dr. Andrea Mann, a generative linguist at University of California, Berkeley. She’ll face five hidden contestants, code-named Earth, Water, Air, Fire, and Spirit. One will be a human telling the truth about their humanity. One will be a human pretending to be an AI. One will be an AI telling the truth about their artificiality. One will be an AI pretending to be human. And one will be a total wild card. Dr. Mann, you have one hour, starting now.

Notably, the last line in the post is:

MANN: You said a bad word! You’re a human pretending to be an AI pretending to be a human! I knew it!

Christiano is, of course, Paul Christiano. One of the many things that Paul Christiano came up with is HCH:

Consider a human who has access to a question-answering machine. Suppose the machine answers questions by perfectly imitating what the human would do if asked that question.

To make things twice as tricky, suppose the human-to-be-imitated is herself able to consult a question-answering machine, which answers questions by perfectly imitating what the human would do if asked that question…

Let’s call this process HCH, for “Humans Consulting HCH.”

The limit of HCH is an infinite HCH tree where each node is able to consult a subtree rooted at itself, to answer the question coming from its parent node.
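A depth-bounded version of this recursion can be sketched as follows. This is a toy under loud assumptions: `hch`, `toy_human`, and the `depth` budget are illustrative names, the "human" is a stand-in function that sums a list, and real HCH is defined over human question-answering rather than list arithmetic.

```python
def hch(question, human, depth):
    """Answer `question` as `human` would, where the human may consult
    copies of this same process, up to `depth` levels deep."""
    if depth == 0:
        # Budget exhausted: the human answers with no machine to consult.
        return human(question, None)
    # The "machine" handed to the human is HCH itself, one level shallower.
    return human(question, lambda sub: hch(sub, human, depth - 1))


def toy_human(numbers, ask):
    """Hypothetical stand-in for the human: sums a list, delegating halves when allowed."""
    if ask is None or len(numbers) <= 1:
        return sum(numbers)
    mid = len(numbers) // 2
    return ask(numbers[:mid]) + ask(numbers[mid:])


print(hch(list(range(1, 9)), toy_human, depth=3))  # 36
```

Letting `depth` grow without bound corresponds to the infinite tree described above; the finite budget is only there so that the sketch terminates.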

My first hypothesis is that the Christiano-Sisskind test is some recursive shenanigan like the following:

- The AI is given a task to imitate several different humans, and its performance is measured, e.g., by testing how well/badly other AIs do at recognizing whether it is an AI or the human it's trying to simulate. So basically a standard Turing test.

- Each of those simulated humans now has to imitate several different AIs. Performance is assessed again.

- Rinse and repeat, until some maximum tree depth.

The AI's overall performance is somehow aggregated into the score of the test.

An alternative possibility is that the test involves something closer to Debate, but I'm much more unsure what that might look like. Maybe something like:

- We are testing our AI against some other AI (think pairwise model comparison).

- Each AI is given some finite chain to simulate: The AI is simulating human A simulating AI X simulating human B simulating AI Y … simulating human-or-AI Z.

- The two AIs, called Alice and Bob, are debating on two separate argument trees regarding the identities of Alice and Bob. Alice wants to convince Bob that she is Alice-Z and not something else. Bob wants to convince Alice that he is Bob-Z and not something else.

- The winner is the one who argues more persuasively (measured by the final judgment of a judge who is either a human or some third AI).

[1] If any of the musical creators are watching this, I'm curious how close this is to what you had in mind (if you had anything specific in mind (but it's, of course, totally valid not to have anything specific in mind and just nerd-name-drop Christiano and Scott)).

I've read the SEP entry on agency and was surprised how irrelevant it feels to whatever it is that makes me interested in agency. Here I sketch some of these differences by comparing an imaginary Philosopher of Agency (roughly the embodiment of the approach that the "philosopher community" seems to have to these topics), and an Investigator of Agency (roughly the approach exemplified by the LW/AI Alignment crowd).[1]

If I were to put my finger on one specific difference, it would be that Philosopher is looking for the true-idealized-ontology-of-agency-independent-of-the-purpose-to-which-you-want-to-put-this-ontology, whereas Investigator wants a mechanistic model of agency, which would include a sufficient understanding of goals, values, dynamics of development of agency (or whatever adjacent concepts we're going to use after conceptual refinement and deconfusion), etc.

Another important component is the readiness to take one's intuitions as the starting point, but also assume they will require at least a bit of refinement before they start robustly carving reality at its joints. Sometimes you may even need to discard almost all of your intuitions and carefully rebuild your ontology from scratch, bottom-up. Philosopher, on the other hand, seems to (at least more often than Investigator) implicitly assume that their System 1 intuitions can be used as the ground truth of the matter and the quest for formalization of agency ends when the formalism perfectly captures all of our intuitions and doesn't introduce any weird edge cases.

Philosopher asks, "what does it mean to be an agent?" Investigator asks, "how do we delineate agents from non-agents (or specify some spectrum of relevant agency-adjacent properties), such that they tell us something of practical importance?"

Deviant causal chains are posed as a "challenge" to "reductive" theories of agency, which try to explain agency by reducing it to causal networks.[2] So what's the problem? Quoting:

… it seems always possible that the relevant mental states and events cause the relevant event (a certain movement, for instance) in a deviant way: so that this event is clearly not an intentional action or not an action at all. … A murderous nephew intends to kill his uncle in order to inherit his fortune. He drives to his uncle’s house and on the way he kills a pedestrian by accident. As it turns out, this pedestrian is his uncle.

At least in my experience, this is another case of a Deep Philosophical Question that no longer feels like a question, once you've read The Sequences or had some equivalent exposure to the rationalist (or at least LW-rationalist) way of thinking.

About a year ago, I had a college course in philosophy of action. I recall having some reading assigned, in which the author basically argued that for an entity to be an agent, it needs to have an embodied feeling-understanding of action. Otherwise, it doesn't act, so can't be an agent. No, it doesn't matter that it's out there disassembling Mercury and reusing its matter to build the Dyson Sphere. It doesn't have the relevant concept of action, so it's not an agent.

You are suffused with a return-to-womb mentality - desperately destined for the material tomb. Your philosophy is unsupported. Why do AI researchers think they are philosophers when its very clear they are deeply uninvested in the human condition? there should be another term, 'conjurers of the immaterial snake oil', to describe the actions you take when you riff on Dyson Sphere narratives to legitimize your paltry and thoroughly uninteresting research

Is there any research on how the actual impact of [the kind of AI that we currently have] lives up to the expectations from the time [shortly before we had that kind of AI but close enough that we could clearly see it coming]?

This is vague, but some not-unreasonable periods for the second time would be:

- After OA Copilot, before ChatGPT (so summer-autumn 2022).

- After PaLM, before Copilot.

- After GPT-2, before GPT-3.

I'm also interested in research on historical over- and under-performance of other tech (where "we kinda saw (or could see) it coming") relative to expectations.

[Epistemic status: shower thought]

The reason why agent foundations-like research is so intractable and slippery and very prone to substitution hazards, etc., is largely because it is anti-inductive, and the key source of its anti-inductivity is the demons in the universal prior preventing the emergence of other consequentialists, who could become trouble for their acausal monopoly on the multiverse.

(Not that they would pose a true threat to their dominance, of course. But they would diminish their pool of usable resources a little bit, so it's better to nip them in the bud than manage the pest after it grows.)

It's kinda like grabby aliens, but in logical time.

/j

Does severe vitamin C deficiency (i.e. scurvy) lead to oxytocin depletion?

According to Wikipedia

The activity of the PAM enzyme [necessary for releasing oxytocin from the neuron] system is dependent upon vitamin C (ascorbate), which is a necessary vitamin cofactor.

I.e. if you don't have enough vitamin C, your neurons can't release oxytocin. Common sensically, this should lead to some psychological/neurological problems, maybe with empathy/bonding/social cognition?

Quick googling "scurvy mental problems" or "vitamin C deficiency mental symptoms" doesn't return much on that. This meta-analysis finds some association of sub-scurvy vitamin C deficiency with depression, mood problems, worse cognitive functioning and some other psychiatric conditions but no mention of what I'd suspect from lack of oxytocin. Possibly oxytocin is produced in low enough levels that this doesn't really matter because you need very little vit C? But on the other hand (Wikipedia again)

By chance, sodium ascorbate by itself was found to stimulate the production of oxytocin from ovarian tissue over a range of concentrations in a dose-dependent manner.

So either this (i.e. disturbed social cognition) is not how we should expect oxytocin deficiencies to manifest or vitamin C deficiency manifests in so many ways in the brain that you don't even bother with "they have worse theory of mind than when they ate one apple a day".

"they have worse theory of mind than when they ate one apple a day".

Just a detail, but shouldn't this be one orange a day? Apples do not contain much vitamin C.

Googling for "scurvy low mood", I find plenty of sources that indicate that scurvy is accompanied by "mood swings — often irritability and depression". IIRC, this has been remarked upon for at least two hundred years.

That's also what this meta-analysis found but I was mostly wondering about social cognition deficits (though looking back I see it's not clear in the original shortform)