I feel $5 trillion must be a misprint. This is on the order of a full year's worth of US federal tax revenue. Conditional on this being true, I would take this as significant evidence that what they have internally is unbelievably good. Perhaps even an AI with super-persuasion!

It is such a ridiculous figure that I suspect it must be off by at least an order of magnitude (OOM).

It is not a misprint.

This kind of strategy is rational once you're sure that The Singularity is going to happen and it's just a matter of waiting out Moore's Law: there are benefits to being first.

Conditional on this being true, he must be very certain we are close to AI reaching median human performance, on the order of one to three years. I don't think this amount of capital can be efficiently expended in the chip industry unless human capital is far less important than it once was. And it will not be profitable, basically, unless he thinks Winning is on the table in the very near term.

Yeah, if your product can multiply GDP by a huge amount in the long run, basically any amount of money we can produce is an insignificant sum.

This article from the Wall Street Journal (linked in TFA) says human-level AI could cost trillions of dollars to build, which I believe is reasonable (it could even be a good deal). It does not say that Mr. Altman expects to raise trillions of dollars on short order.

That's a much more reasonable claim, though it might still be too high.

Sure!

Sam Altman was already trying to lead the development of human-level artificial intelligence. Now he has another great ambition: raising trillions of dollars to reshape the global semiconductor industry.

The OpenAI chief executive officer is in talks with investors including the United Arab Emirates government to raise funds for a wildly ambitious tech initiative that would boost the world’s chip-building capacity, expand its ability to power AI, among other things, and cost several trillion dollars, according to people familiar with the matter. The project could require raising as much as $5 trillion to $7 trillion, one of the people said.

If it's not a misprint, it sounds like a door-in-the-face sales tactic. I'm sure everyone involved knows full well that they're not going to raise more than $500B in any sane world. But perhaps they can raise $150B if they make $40B look small in comparison.

Wasn't there a bet on when the first $1T training run will happen? I'm not saying this will be it, but I don't think it's absurd to see a very large number in the next 20 years.

I agree on a large number, but $5 to $7 trillion? Nobody has even done a $200B training run. The US military budget is about $850 billion. I don't think this number is plausible. It's just throwing stones at the Overton window and hoping it breaks, so they can scoot over to a number much bigger than last time.

We can reason back from the quantity of money to how much Altman expects to do with it.

Suppose we know for a fact that it will soon be possible to replace some percentage of labor with an AI that has negligible running cost. How much should we be willing to pay for this? It gets rid of opex (operating expenses, i.e. wages) in exchange for capex (capital expenses, i.e. building chips and data centers). The trade between opex and capex depends on the long-term interest rate and the uncertainty of the project. I will pull a reasonable number from the air and say that the project should pay back in ten years. In other words, the capex is ten times the avoided annual opex. Seven trillion dollars in capex is enough to employ 10,000,000 people for ten years (to within an order of magnitude).
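The arithmetic above can be run in both directions as a sanity check. This is a minimal sketch that assumes, as the paragraph does, a flat ten-year payback with no discounting; the function names are hypothetical. It shows that the figure implies about $70,000 of avoided labor cost per worker per year.

```python
# Back-of-envelope check of the capex/opex arithmetic.
# Assumption (from the comment): capex = payback_years x avoided annual opex,
# with no discounting or uncertainty premium.


def implied_salary(capex_usd: float, workers: int, payback_years: float) -> float:
    """Annual cost per replaced worker that the capex would cover."""
    annual_opex_avoided = capex_usd / payback_years
    return annual_opex_avoided / workers


def implied_workers(capex_usd: float, salary_usd: float, payback_years: float) -> float:
    """Number of workers whose annual cost the capex would cover."""
    return capex_usd / (salary_usd * payback_years)


CAPEX = 7e12          # seven trillion dollars
PAYBACK_YEARS = 10    # the number "pulled from the air"

print(implied_salary(CAPEX, 10_000_000, PAYBACK_YEARS))   # 70000.0
print(implied_workers(CAPEX, 70_000, PAYBACK_YEARS))      # 10000000.0
```

Changing `PAYBACK_YEARS` is the easiest way to see how sensitive the headcount is to the assumed interest rate and risk.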

That’s a surprisingly modest number of people, easily absorbed by the churn of the economy. When I first saw the number “seven trillion dollars,” I boggled and said “that can’t possibly make sense”. But thinking about it, it actually seems reasonable. Is my math wrong?

This analysis is so highly decoupled I would feel weird posting it most places. But Less Wrong is comfy that way.

I think this would only work if the investors themselves would save the operating costs of those 10^7 people, which seems unlikely. If Sam wants to create AGI and sell that as a service, he can only capture a certain fraction of the gains, while the customers will realize the remaining share.

You’re right. My analysis only works if a monopoly can somehow be maintained, so the price of AI labor is set to epsilon under the price of human labor. In a market with free entry, the price of AI labor drops to the marginal cost of production, which is putatively negligible. All the profit is dissipated into consumer surplus. Which is great for the world, but now the seven trillion doesn’t make sense again.
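The monopoly-versus-free-entry point can be made concrete by computing the payback period from operating margin under each pricing regime. This is a minimal sketch under loudly hypothetical figures: a $70,000 human cost per worker-year, a $1 monopolist undercut standing in for epsilon, and AI marginal cost taken as exactly zero. It ignores discounting, taxes, and depreciation.

```python
import math


def payback_years(capex_usd: float, workers: int,
                  price_per_worker_year: float,
                  marginal_cost_per_worker_year: float) -> float:
    """Years of operating margin needed to recover the capex (no discounting)."""
    annual_margin = workers * (price_per_worker_year - marginal_cost_per_worker_year)
    if annual_margin <= 0:
        return math.inf  # the capex is never recovered
    return capex_usd / annual_margin


CAPEX = 7e12
WORKERS = 10_000_000
HUMAN_WAGE = 70_000.0     # hypothetical cost of a human worker-year
AI_MARGINAL_COST = 0.0    # "putatively negligible", taken as zero here
EPSILON = 1.0             # hypothetical token undercut by the monopolist

# Monopoly: price sits just under the price of human labor.
monopoly = payback_years(CAPEX, WORKERS, HUMAN_WAGE - EPSILON, AI_MARGINAL_COST)
# Free entry: price falls to marginal cost, leaving no margin.
competitive = payback_years(CAPEX, WORKERS, AI_MARGINAL_COST, AI_MARGINAL_COST)

print(round(monopoly, 2))  # 10.0
print(competitive)         # inf
```

Under these assumptions the monopoly case reproduces the ten-year payback, while the free-entry case never pays back at all, which is the sense in which the profit is dissipated into consumer surplus.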

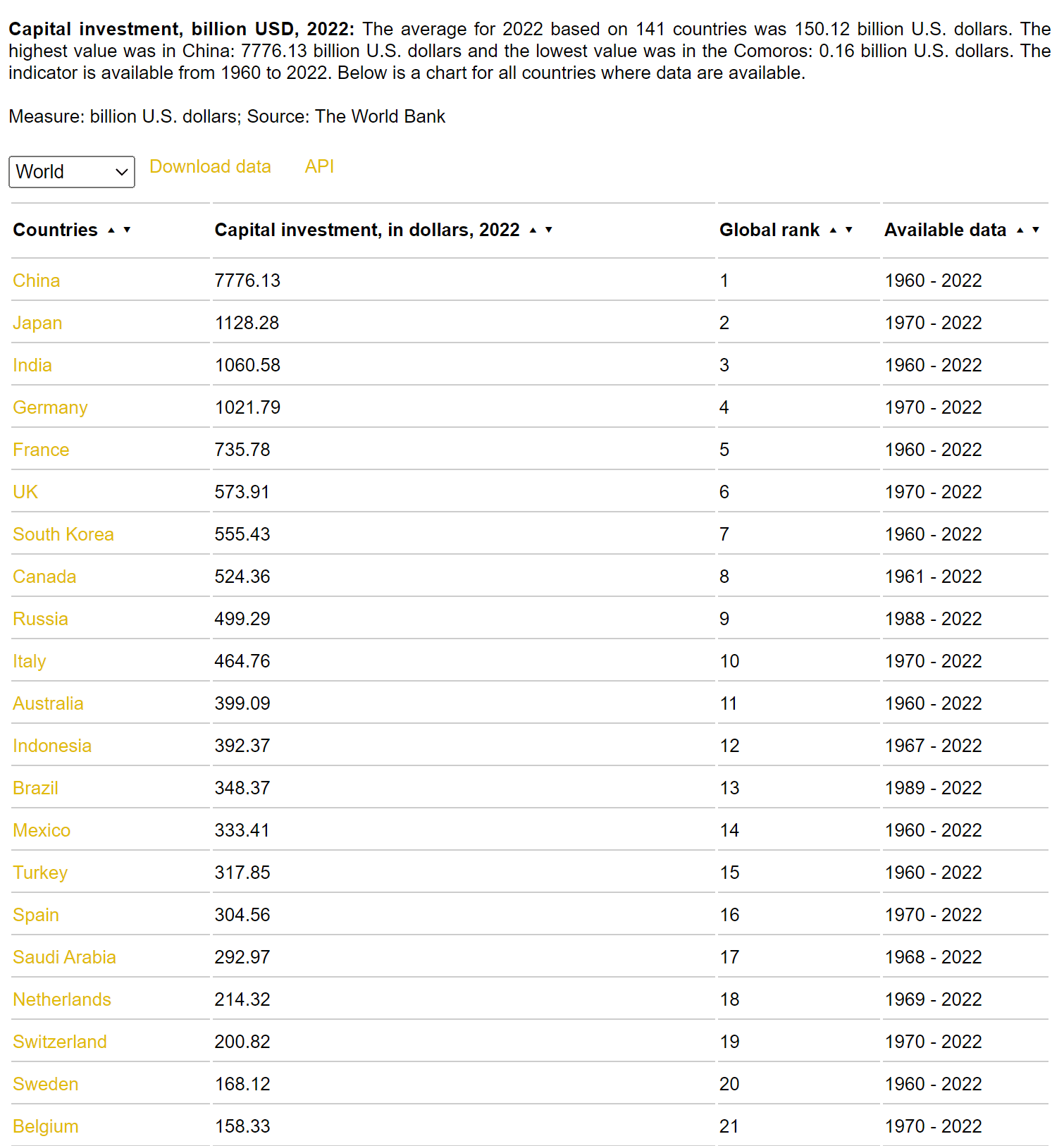

For all the reasons mentioned, this seems unlikely, but I was curious:

So yes, it is possible. Essentially, this would mean putting the equivalent of all of China's 2022 capital investment (defined as acquisition of physical goods) into AI hardware.

It is also, if all you care about is ROI and nothing else, probably the right decision. It's just a bad idea to invest it all in one company that may be inefficient or corrupt; this would be the investment for the entire AI sector.

I imagine it's a sales tactic. Ask for $7 trillion, people assume you believe you're worth that much, and if you've got such a high opinion of yourself, maybe you're right...

In other news, I'm looking to sell a painting of mine for £2 million ;)

My $300 against your $30 that OpenAI's next round of funding raises less than $40 billion of investor money. In case OpenAI raises no investment money in the next 36 months, the bet expires. Any takers?

I make the same offer with "OpenAI" replaced with "any startup with Sam Altman as a founder".
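For anyone weighing these offers, the stakes imply a probability threshold. This is a minimal sketch of the standard break-even calculation, assuming both sides simply maximize expected dollars (no risk aversion, no counterparty risk); the function name is hypothetical.

```python
from fractions import Fraction


def breakeven_probability(my_stake: int, their_stake: int) -> Fraction:
    """Probability my side must exceed for the bet to have positive expected value.

    Risking my_stake to win their_stake gives
    EV = p * their_stake - (1 - p) * my_stake, which is zero at
    p = my_stake / (my_stake + their_stake).
    """
    return Fraction(my_stake, my_stake + their_stake)


p = breakeven_probability(300, 30)
print(p, float(p))  # 10/11 0.9090909090909091
print(1 - p)        # 1/11, the taker's break-even probability
```

So offering $300 against $30 only makes sense if you put more than about 91% on the raise coming in under $40 billion, and taking the other side only makes sense if you put more than about 9% on it reaching $40 billion or more. The same thresholds apply to the "any startup with Sam Altman as a founder" version.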

I'm retracting this comment and both offers. I still think it is very unlikely, but I realized that I would be betting against people who are vastly more efficient than I am at using the internet to access timely information, and I might even be betting against people with private information from Altman or OpenAI.

Someone should publicly bet like I did as an act of altruism to discourage this unprofitable line of inquiry, but I'm not feeling that altruistic today.

At this point, I'd rather spend $5-7 trillion on a Butlerian Jihad to stop OpenAI's reckless hubris.

If you view what they’re doing as an inevitability, I think OpenAI is one of the safest players to be starting this.

Within the set that could actually be doing it? Yeah, like, maybe. I'd rather it be Anthropic. But across all the orgs that could have existed with a not-too-crazy amount of counterfactual, not even fuckin' close.

Well, for starters, get the Altman henchman off the board. Then, generally, be more like Anthropic. I'd want a lot of deep changes, generally with the aim of making it impossible for Altman to back out of the things he says are a good idea.

Well, I'm seeing no signs whatsoever that OpenAI would ever seriously consider slowing, pausing, or stopping its quest for AGI, no matter what safety concerns get raised. Sam Altman seems determined to develop AGI at all costs, despite all risks, ASAP. I see OpenAI as betraying virtually all of its founding principles, especially since the strategic alliance with Microsoft and the prospect of colossal wealth for its leaders and employees.

This $5-7 trillion is to expand GPU production, and he also sees revenue doubling from the current $2 billion to $4 billion next year.

I'm guessing GPT-5 training is going well?