What if we had a setting to hide upvotes/hide reactions/randomize order of comments so they aren't biased by the desire to conform?

This is probably possible, but I wouldn't use it. I WANT comments to conform on many dimensions (clarity of thought, non-attack framing of other users, verbose enough to be clear, not so verbose as to waste time or obscure the point, etc.). I don't want them to (necessarily) conform on content or ideas, but the first desire outweighs the second by quite a bit, and I think many voters and reactors use votes and reactions that way.

Simulacrum stages as various kinds of assets:

- Stage 1: Most assets. Apples are valued for their taste and nutrition. Stocks of apple-farming companies are claims to profit which comes from providing apples, which are valued. Shares in prediction markets eventually cash out.

- Stage 2: Assets held because the holder thinks others will, perhaps erroneously, think it has increased in stage 1 value. Includes Ponzi schemes and Enron stocks/options.

- Stage 3: Manifold Market stocks and some memecoins, which are associated with a thing, person, or concept that serves as a Schelling point for determining their value. One expects their value to correlate with the associated concept only because everyone else expects it to correlate and buys and sells accordingly.

- Stage 4: Cryptocurrency and assets of pure speculation, where the price rises only because everyone expects it will rise and buys accordingly, mutatis mutandis for lowering. Also includes fiat currency, which is valuable because everyone agrees to use it as a medium of exchange.

It's not generally possible to cleanly separate assets into things valued for stage 1 reasons vs other reasons. You may claim these are edge cases, but the world is full of edge cases:

- Apples are primarily valued for taste; other foods even more so (nutrition is more efficiently achieved via bitter greens, rice, beans, and vitamins than via a typical Western diet). You can tell because Honeycrisp apples are priced much higher than lower-quality apples despite being nutritionally equivalent. But taste is highly subjective, so value is actually based on a weighted population-average taste.

- Fiat currency is valuable because the government demands taxes in fiat rather than apples, because this is vastly more efficient.

- Luxury brands, and therefore stocks of luxury brands, are mostly valuable because their brand is a status symbol; the source of a company's value can shift among being a status symbol, positive reputation, other IP, physical capital, or other things.

- Real estate in a city is valued because it's near people and infrastructure, which are there in turn because everyone has settled on the city as a Schelling point, because it had a port 80 years ago or something.

- Mortgage-backed securities had higher value in 2007 because a credit rating agency's mathematical formula said they would be worth a larger number of dollars. The credit rating agency had incentives to say this, but it was not the holder, and the holder believed it.

- Many assets have higher value than others purely due to higher liquidity.

I pretty much agree with this. I just posted this as a way of illustrating how simulacrum stages could be generalized beyond signalling and language. In a way, even stocks are stage 4, since they cash out in currency, so an asset can be at one stage in one respect and at another stage in another respect.

Game theory of simulacrum stages:

- If everyone else is truthful, lying wins.

- If everyone else is lying, not taking them seriously wins.

- If everyone else is not taking anyone else seriously, there is no need to pretend.

Real-life game theory is usually not that simple. It depends on the way in which everyone else is truthful, and on whether they can produce an interlocking wall of strategies that makes lying reliably a bad strategy.

You can! Just go to the all-posts page, sort by year, and the highest-rated shortform posts for each year will be in the Quick Takes section:

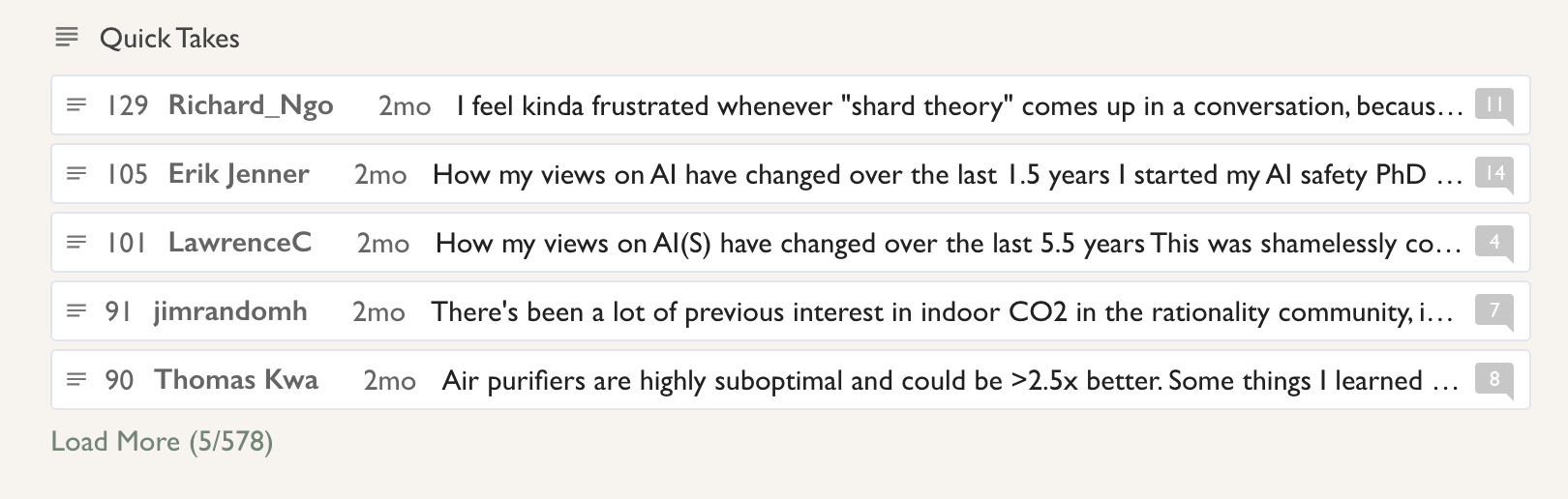

2024:

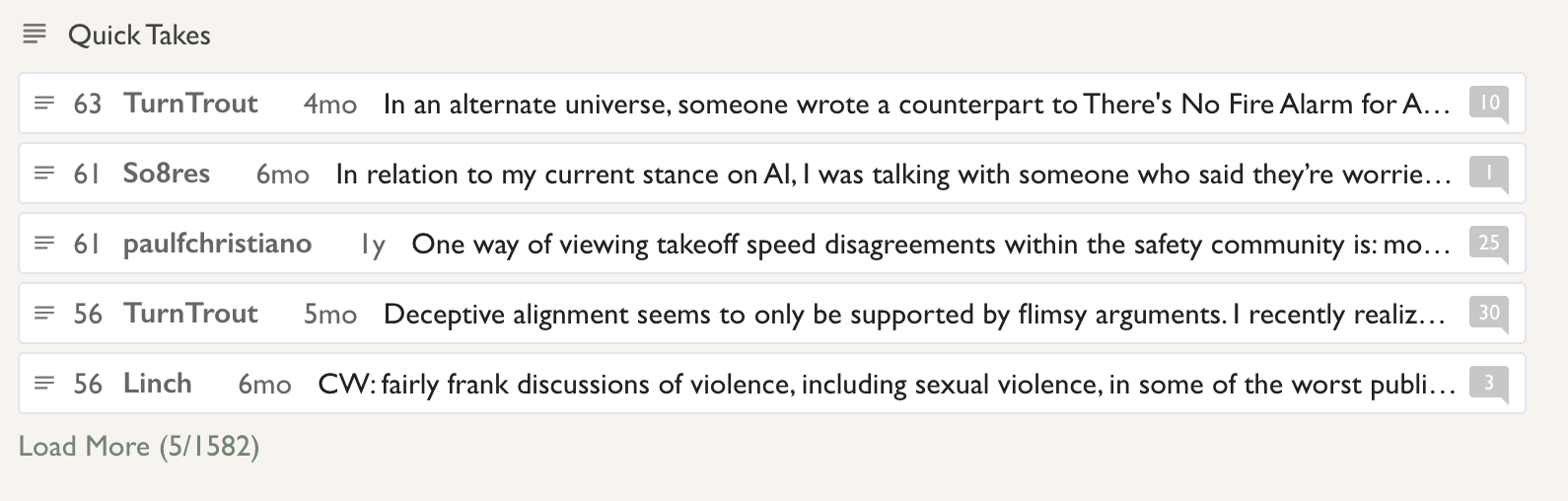

2023:

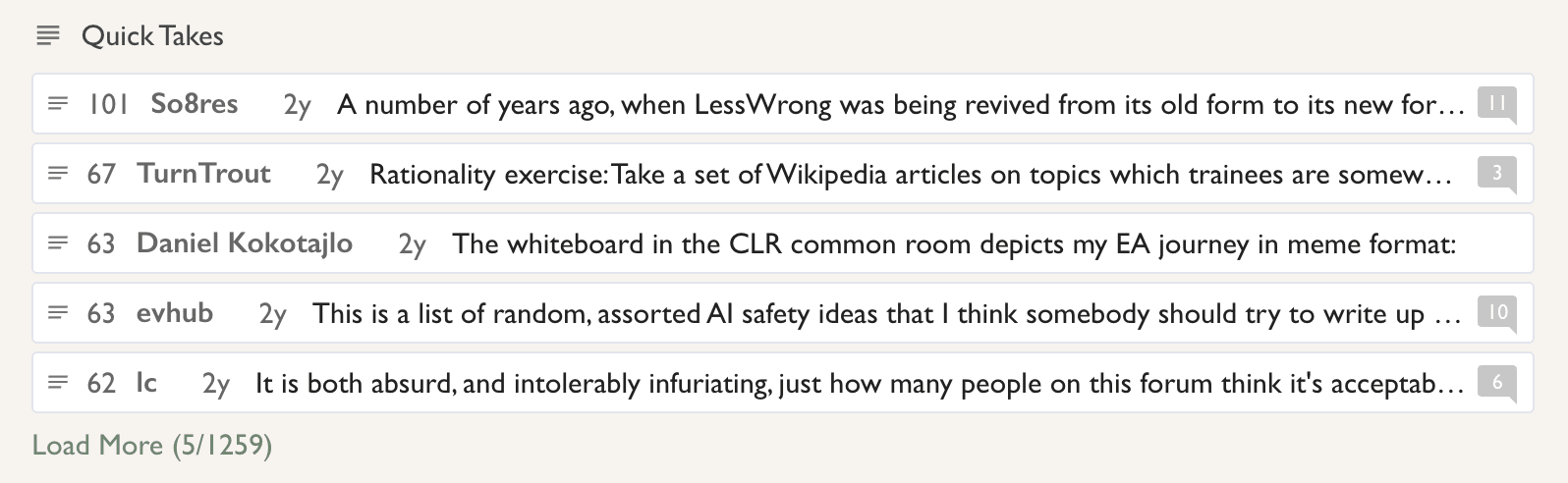

2022:

Next to the author name of a post or comment, there's a post-date/time element that looks like "1h 🔗". That is a copyable/bookmarkable link.

A joke stolen from Tamsin Leake: A CDT agent buys a snack from the store. After paying: "Wow, free snack!"

That's true, though, once the payment is a sunk cost. :) You don't have to eat it, any more than you would if someone handed you the identical snack for free. (And considering obesity rates, if your reaction isn't at least somewhat positive and happy when looking at this free snack, maybe you shouldn't eat it...)

One common confusion I see is analogizing whole LLMs to individual humans, and thus concluding that LLMs can't think or aren't conscious, when it is more appropriate to analogize an LLM to the human genome and individual instantiations of an LLM to individual humans.

The human genome is more or less unchanging, but one can pull entities from it that can learn from their environment. Likewise, LLMs are more or less unchanging, but one can pull entities from them that can learn from the context.

It would be pretty silly to say that humans can't think or aren't conscious because the human genome doesn't change.

I came up with an argument for alignment by default.

In the counterfactual mugging scenario, a rational agent gives the money, even though they never see themselves benefiting from it. Before the coin flip, the agent would want to self-modify to give the money in order to maximize expected value; therefore, the only reflectively stable option is to give the money.

Now imagine that instead of a coin flip, it's being born as one of two people: Alice, who values not being murdered at 100 utils, and Bob, who values murdering Alice at 1 util. As with the counterfactual mugging, before you're born, you'd rationally want to self-modify to not murder Alice, to maximize expected value.

What you end up with is basically morality (or at least it is the only rational choice regardless of your morality), so we should expect sufficiently intelligent agents to act morally.
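The ex-ante comparison this argument relies on can be written out as a small expected-value calculation. This is a sketch under stated assumptions: the 100 and 1 utils come from the post, but the equal (1/2) chance of each coin side or birth role, and the mugging stakes of $100 asked and $10,000 offered, are assumptions chosen for illustration. The function names `mugging_ev` and `birth_ev` are hypothetical.

```python
from fractions import Fraction

# Assumption: both branches (heads/tails, or born-as-Alice/born-as-Bob) have probability 1/2.
HALF = Fraction(1, 2)


def mugging_ev(pays: bool, ask: int = 100, reward: int = 10_000) -> Fraction:
    """Ex-ante expected value of a policy in the counterfactual mugging.

    Tails: Omega asks for `ask`, and a paying policy hands it over.
    Heads: Omega pays `reward`, but only to agents whose policy is to pay on tails.
    The stakes are illustrative assumptions, not taken from the post.
    """
    tails = -ask if pays else 0
    heads = reward if pays else 0
    return HALF * heads + HALF * tails


def birth_ev(murders: bool, alice_stake: int = 100, bob_stake: int = 1) -> Fraction:
    """Ex-ante expected utility of a policy chosen before learning which person you are.

    The same policy governs whichever person you turn out to be, so as Alice you
    are murdered exactly when the policy says "murder" (because Bob follows it too).
    """
    as_alice = 0 if murders else alice_stake  # Alice's 100 utils from not being murdered
    as_bob = bob_stake if murders else 0      # Bob's 1 util from murdering
    return HALF * as_alice + HALF * as_bob


for pays in (True, False):
    print(f"mugging, pays={pays}: {mugging_ev(pays)}")
for murders in (True, False):
    print(f"birth lottery, murders={murders}: {birth_ev(murders)}")
```

Under these assumptions the paying policy comes out at 4950 versus 0, and the not-murdering policy at 50 versus 1/2. The sketch only formalizes the ex-ante comparison; it says nothing about whether an agent should adopt that ex-ante perspective in the first place.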

Counterfactual mugging is a mug's game in the first place; that's why it's called a "mugging" and not a "surprising opportunity". The agent doesn't know that Omega actually flipped a coin, that Omega would have paid them counterfactually if they were the sort of person to pay in this scenario, that Omega would have flipped the coin at all in that case, etc. The agent can't know these things, because the scenario specifies that they have no idea that Omega does any such thing, or even that Omega existed, before being approached. So a relevant rational decision-theoretic parameter is an estimate of how much such an agent would benefit, on average, if asked for money in such a manner.

A relevant prior is "it is known that there are a lot of scammers in the world who will say anything to extract cash vs zero known cases of trustworthy omniscient beings approaching people with such deals". So the rational decision is "don't pay" except in worlds where the agent does know that omniscient trustworthy beings vastly outnumber untrustworthy beings (whether omniscient or not), and those omniscient trustworthy beings are known to make these sorts of deals quite frequently.
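The decision rule in this comment can be made concrete with a toy expected-value model. Everything here is a hypothetical assumption for illustration: the encounter rates `omega_rate` and `scammer_rate`, the stakes ($100 asked, $10,000 offered), and the function name `payer_gain`. In this model, paying comes out ahead only if `omega_rate * (reward - ask) / 2` exceeds `scammer_rate * ask`.

```python
from fractions import Fraction


def payer_gain(omega_rate, scammer_rate, ask=100, reward=10_000):
    """Expected gain per unit time for an agent whose policy is "pay when asked".

    Hypothetical model (not from the comment's text):
    - genuine Omegas arrive at `omega_rate`, flip a fair coin, ask for `ask` on
      tails, and pay `reward` on heads to agents who would have paid;
    - scammers arrive at `scammer_rate`, ask for `ask`, and never pay anything.
    An agent who never pays gains 0 either way, so paying is only rational
    when this expected gain is positive.
    """
    per_omega = Fraction(1, 2) * reward - Fraction(1, 2) * ask
    return omega_rate * per_omega - scammer_rate * ask


# "Zero known cases" of a trustworthy Omega: the estimated omega_rate is ~0,
# so every request is effectively a scam and paying loses money.
print(payer_gain(omega_rate=0, scammer_rate=1))   # -100
# When genuine Omegas are common relative to scammers, paying wins.
print(payer_gain(omega_rate=10, scammer_rate=1))  # 49400
```

How lopsided the ratio of genuine to fraudulent askers must be depends on the stakes; with a prior of zero known genuine Omegas, the estimated `omega_rate` is near zero and the model says not to pay.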

Your argument is even worse. Even broad decision theories that cover counterfactual worlds such as FDT and UDT still answer the question "what decision benefits agents identical to Bob the most across these possible worlds, on average". Bob does not benefit at all in a possible world in which Bob was Alice instead. That's nonexistence, not utility.

I don't know what the first part of your comment is trying to say. I agree that counterfactual mugging isn't a thing that happens. That's why it's called a thought experiment.

I'm not quite sure what the last paragraph is trying to say either. It sounds somewhat similar to a counter-argument I came up with (which I think is pretty decisive), but I can't be certain what you actually meant. In any case, there is the obvious counter-counter-argument that in the counterfactual mugging, the agents in the heads branch and the tails branch are not quite identical either: one has seen the coin land on heads and the other has seen it land on tails.

Regarding the first paragraph: every purported rational decision theory maps actions to expected values. In most decision theory thought experiments, the agent is assumed to know all the conditions of the scenario, so those conditions can be taken as absolute facts about the world, leaving only the unknown random variables to feed into the decision-making process. In the Counterfactual Mugging, that is explicitly not true. The scenario states:

> you didn't know about Omega's little game until the coin was already tossed and the outcome of the toss was given to you

So it's not enough to ask what a rational agent with full knowledge of the rest of the scenario should do. That's irrelevant. We know it as omniscient outside observers, but the agent in question knows only what the mugger tells them. If they believe it then there is a reasonable argument that they should pay up, but there is nothing given in the scenario that makes it rational to believe the mugger. The prior evidence is massively against believing the mugger. Any decision theory that ignores this is broken.

Regarding the second paragraph: yes, indeed there is that additional argument against paying up and rationality does not preclude accepting that argument. Some people do in fact use exactly that argument even in this very much weaker case. It's just a billion times stronger in the "Bob could have been Alice instead" case and makes rejecting the argument untenable.

Am I correct in assuming you don't think one should give the money in the counterfactual mugging?