Accidental AI Safety experiment by PewDiePie: He created his own self-hosted council of 8 AIs to answer questions. They voted and picked the best answer. He noticed they were always picking the same two AIs, so he discarded the others, made the process of discarding/replacing automatic, and told the AIs about it. The AIs started talking about this "sick game" and scheming to prevent that. This is the video with the timestamp:

From the AIs' messages seen in the video, it's possible that he provided those instructions as a user prompt instead of a system prompt. I wonder if the same thing would've happened if they were given as the system prompt instead.

This experiment is pretty clever, no? I don't think a total AI amateur would think of it: either he's been following this problem for quite some time, or he read about it somewhere recently, or one of us AI safety nerds sponsored him. Not sure, though; it's not beyond what people with an investigative mindset might come up with.

He mentions he's just learned coding, so I guess he had the AI build the scaffolding. But the experiment itself seems like a pretty natural idea; he literally likens it to a king's council. I'm sure that once you have the concept, having an LLM code it is no big deal.

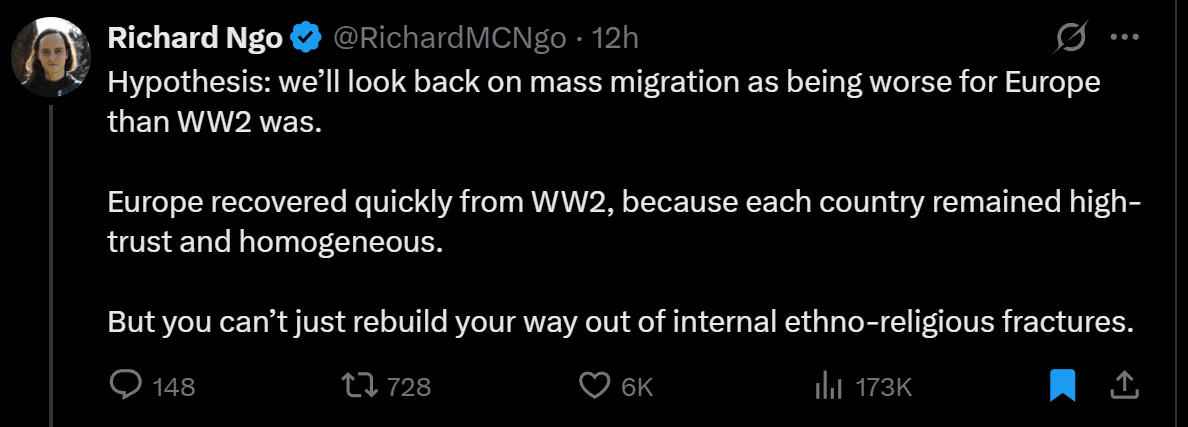
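For readers wondering what such scaffolding might look like, the vote-then-prune loop described above can be sketched roughly like this. It is purely illustrative and hypothetical: we don't know how his code actually worked, the names `tally` and `prune_council` are made up, each round's votes are hard-coded instead of coming from real model calls, and ties are broken by first appearance.

```python
from collections import Counter


def tally(votes: dict[str, str]) -> str:
    """Return the council member whose answer got the most votes.

    `votes` maps each voter's name to the member it voted for. Ties go to
    whichever member was voted for first (Counter.most_common keeps
    first-encountered order for equal counts).
    """
    winner, _ = Counter(votes.values()).most_common(1)[0]
    return winner


def prune_council(members: list[str], wins: Counter, keep: int) -> list[str]:
    """Keep the `keep` members with the most wins and drop the rest.

    In the setup described in the post, dropped members would be replaced by
    fresh models; this sketch only computes who survives. sorted() is stable,
    so members with equal wins stay in their original order.
    """
    ranked = sorted(members, key=lambda m: wins[m], reverse=True)
    return ranked[:keep]


# Hypothetical votes from three rounds; in a real council these would come
# from each model reading the other models' answers.
members = ["a", "b", "c", "d"]
rounds = [
    {"a": "b", "b": "b", "c": "b", "d": "a"},
    {"a": "b", "b": "b", "c": "a", "d": "b"},
    {"a": "a", "b": "a", "c": "a", "d": "c"},
]
wins = Counter(tally(r) for r in rounds)
survivors = prune_council(members, wins, keep=2)
print(survivors)  # ['b', 'a']
```

Telling the members about `prune_council` is the part that turns this from a plain ensemble into the "game" the models reacted to.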

There is a phenomenon in which rationalists sometimes make predictions about the future, and they seem to completely forget their other belief that we're heading toward a singularity (good or bad) relatively soon. It's ubiquitous, and it kind of drives me insane. Consider these two tweets:

Timelines are really uncertain, and you can always make predictions conditional on "no singularity". Even if the singularity happens, you can always ask a superintelligence "hey, what would be the consequences of this particular intervention in a business-as-usual scenario" and be vindicated.

you can always make predictions conditional on "no singularity"

This is true, but then why not state "conditional on no singularity" if they intended that? I somehow don't buy that that's what they meant.

Why would they spend ~30 characters in a tweet to be slightly more precise while making their point more alienating to normal people who, by and large, do not believe in a singularity and think people who do are faintly ridiculous? The incentives simply are not there.

And that's assuming they think the singularity is imminent enough that their tweets won't be borne out even beforehand. And assuming that they aren't mostly just playing signaling games: both of these tweets read less as sober analysis to me and more like in-group signaling.

Absolutely agreed. Wider public social norms are heavily against even mentioning any sort of major disruption due to AI in the near future (unless limited to specific jobs or copyright), and most people don't even understand how to think about conditional predictions. Combining the two is just the sort of thing strange people like us do.

This is true, but then why not state "conditional on no singularity" if they intended that?

Because that's a mouthful? And the default for an ordinary person (which is potentially most of their readers) is "no Singularity", and the people expecting the Singularity can infer that it's clearly about a no-Singularity branch.

I think the general population doesn't know much about the singularity, so adding that caveat would just unnecessarily dilute the point.

For a while now, some people have been saying they 'kinda dislike LW culture,' but for two opposite reasons, with each group assuming LW is dominated by the other, or at least it seems that way when they talk about it. Consider, for example, janus and TurnTrout, who recently stopped posting here directly. They're at opposite ends, with clashing epistemic norms, each complaining that LW is too much like the group the other represents. But in my mind, they're both LW-members-extraordinaires. LW is obviously both, and I think that's great.

I think it's probably more of a spectrum than two distinct groups, and I tried to pick two extremes. On one end, there are the empirical alignment people, like Anthropic and Redwood; on the other, pure conceptual researchers and the LLM whisperers like Janus, and there are shades in between, like MIRI and Paul Christiano. I'm not even sure this fits neatly on one axis, but probably the biggest divide is empirical vs. conceptual. There are other splits too, like rigor vs. exploration or legibility vs. 'lore,' and the preferences kinda seem correlated.

Whenever I try to "learn what's going on with AI alignment", I wind up on some article about whether dogs know enough words to have thoughts or something. I don't really want to kill off the theoretical work (it can peek a little further into the future and function more independently of technology, basically), but it seems like kind of a poor way to answer questions like: what's going on now, or, if all the AI companies let me write their 6-month goals, what would I put on them?

I'm curious about what people disagree with regarding this comment. Also, I guess since people upvoted and agreed with the first one, they do have two groups in mind, but they're not quite the same as the ones I was thinking about (which is interesting and mildly funny!). So, what was your slicing up of the alignment research x LW scene that's consistent with my first comment but different from my description in the second comment?

To a first approximation, if people at both ends of a dimension in a group are about equally unhappy with what the moderate middle does, then the group is probably balanced, assuming the middle is actually reasonable (which is hard to know).

People are very worried about a future in which a lot of the Internet is AI-generated. I'm kinda not. So far, AIs are more truth-tracking and kinder than humans. I think the default (conditional on OK alignment) is that an Internet that includes a much higher population of AIs is a much better experience for humans than the current Internet, which is full of bullying and lies.

All such discussions hinge on AI being relatively aligned, though. Of course, an Internet full of misaligned AIs would be bad for humans, but the reason is human disempowerment, not any of the usual reasons people say such an Internet would be terrible.

I think the problem is that the competitive dynamics that make humans worse on the internet (eg short epistemically-ungrounded outrage bait gets more engagement than more careful and reasoned analysis) will apply to AIs as well as to humans.

Yup, but the AIs are massively less likely to help with creating cruel content. There will be a huge asymmetry in what they will be willing to generate.

Imagine an Internet where half the population is Grant Sanderson (the creator of 3Blue1Brown). That'd be awesome. Grant Sanderson has the same incentives as anyone else to create cruel and false content, but he just doesn't.

That would be awesome! For me!

But I don't think that the majority of people in the world would prefer that to the current internet, much less actually engage with it more than the current internet. Most people find math boring (even when it is explained as well as when Grant does the explaining). There would be an incentive to produce content that is more engaging for most of the population than linear algebra explanations.

One difference between the releases of previous GPT versions and the release of GPT-5 is that it was clear that the previous versions were much bigger models trained with more compute than their predecessors. With the release of GPT-5, it's very unclear to me what OpenAI did exactly. If, instead of GPT-5, we had gotten a release that was simply an update of 4o + a new reasoning model (e.g., o4 or o5) + a router model, I wouldn't have been surprised by their capabilities. If instead GPT-4 were called something like GPT-3.6, we would all have been more or less equally impressed, no matter the naming. The number after "GPT" used to track something pretty specific that had to do with some properties of the base model, and I'm not sure it's still tracking the same thing now. Maybe it does, but it's not super clear from reading OpenAI's comms and from talking with the model itself. For example, it seems too fast to be larger than GPT-4.5.

For example, it seems too fast to be larger than GPT-4.5.

A "GPT-5" named according to the previous convention in terms of pretraining compute would need at least 1e27 FLOPs (50x original GPT-4), which on H100/H200 can at best be done in FP8. That could be done with 150K H100s for 3 months at 40% utilization. (GB200 NVL72 is too recent to use for this pretraining run, though there is a remote possibility of B200.) A compute-optimal shape for this model would be something like 8T total params, 1T active[1].
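A quick check of that arithmetic, as a sketch. Assumptions not stated in the comment: about 2e15 dense FP8 FLOP/s per H100 (NVIDIA's ~1979 TFLOP/s figure, rounded), a 30-day month, and the standard C ≈ 6·N·D approximation for training compute with N active params and D tokens. The 120 tokens/param ratio comes from the footnote.

```python
import math

SECONDS_PER_MONTH = 30 * 24 * 3600  # rough 30-day month
H100_FP8_DENSE = 2e15  # assumed dense FP8 FLOP/s per H100 (~1979 TFLOP/s)


def training_flops(n_chips: int, flops_per_chip: float,
                   utilization: float, months: float) -> float:
    """Total FLOPs delivered by a cluster over a training run."""
    return n_chips * flops_per_chip * utilization * months * SECONDS_PER_MONTH


def compute_optimal_active_params(compute: float, tokens_per_param: float) -> float:
    """Solve C = 6 * N * D with D = tokens_per_param * N for N."""
    return math.sqrt(compute / (6 * tokens_per_param))


c = training_flops(150_000, H100_FP8_DENSE, 0.40, 3)
n_active = compute_optimal_active_params(1e27, tokens_per_param=120)
n_total = 8 * n_active  # 1:8 sparsity: total params ~8x active
tokens = 120 * n_active

print(f"cluster compute: {c:.2e} FLOPs")
print(f"active params: {n_active:.2e}, total: {n_total:.2e}, tokens: {tokens:.2e}")
```

Under these assumptions the cluster delivers roughly 9.3e26 FLOPs, and 1e27 FLOPs at 120 tokens/param gives about 1.2T active params (about 9T total, about 140T tokens), the same ballpark as the shape above.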

The speed of GPT-5 could be explained by using GB200 NVL72 for inference, even if it's an 8T total param model. GPT-4.5 was slow and expensive likely because it needed many older 8-chip servers (which have 0.64-1.44 TB of HBM) to keep the model in HBM with room for KV caches, whereas a single GB200 NVL72 has 14 TB of HBM. At the same time, it wouldn't help as much with the speed of smaller models (but it would help with their output token cost, because you can fit more KV cache in the same NVLink domain; this isn't necessarily reflected in prices yet, since GB200 NVL72 is still scarce). So it remains somewhat plausible that GPT-5 is essentially GPT-4.5-thinking running on better hardware.
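To make the memory argument concrete, here is a sketch of how many servers or racks it takes just to hold an 8T-param model. Assumptions: weights at 1 byte/param (FP8), and a hypothetical 30% of each domain's HBM reserved for KV caches (`kv_headroom` is an illustrative parameter, not a known figure). The HBM capacities are the ones given above.

```python
import math

FP8_BYTES_PER_PARAM = 1.0


def weights_tb(total_params: float, bytes_per_param: float = FP8_BYTES_PER_PARAM) -> float:
    """Size of the model weights in terabytes."""
    return total_params * bytes_per_param / 1e12


def domains_needed(total_params: float, hbm_tb_per_domain: float,
                   kv_headroom: float = 0.3) -> int:
    """Servers/racks needed to hold the weights in HBM, reserving a
    fraction `kv_headroom` of each one's HBM for KV caches."""
    usable = hbm_tb_per_domain * (1 - kv_headroom)
    return math.ceil(weights_tb(total_params) / usable)


for name, hbm in [("8x H100 (0.64 TB)", 0.64),
                  ("8-chip server (1.44 TB)", 1.44),
                  ("GB200 NVL72 (14 TB)", 14.0)]:
    print(name, domains_needed(8e12, hbm))
```

Under these assumptions, an 8T-param model spans 8 to 18 of the older 8-chip servers but fits in a single NVL72 rack, which is consistent with the speed explanation above.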

Its performance (quality, not speed), though, suggests that it might well be a smaller model with pretraining-scale RLVR (possibly like Grok 4, just done better). Also, doing RLVR on an 8T total param model using the older 8-chip servers would be slow and inefficient, and GB200 NVL72 might've only started appearing in large numbers in late April 2025. The METR report on GPT-5 states they gained access "four weeks prior to its release", which means it was already essentially done by the end of June 2025. So RLVRing a very large model on GB200 NVL72 is in principle possible in this timeframe, but probably not what happened, and, more to the point, given its level of performance, probably not what needed to happen. This way, they get a better within-model gross margin and can work on the actual very large model in peace; maybe they'll call it "GPT-6".

[1] This is assuming 120 tokens/param as compute optimal, 40 tokens/param from Llama 3 405B as the dense anchor, and 3x that for 1:8 sparsity.

The speed of GPT-5 could be explained by using GB200 NVL72 for inference, even if it's an 8T total param model.

Ah, interesting! So the speed we see shouldn't tell us much about GPT-5's size.

I omitted one other factor from my shortform, namely cost. Do you think OpenAI would be willing to serve an 8T-param (1T active) model for the price we're seeing? I'm basically trying to understand whether GPT-5 being served relatively cheaply should be a large or small update.

Prefill (processing of input tokens) is efficient: something like 60% compute utilization might be possible, and it only depends on the number of active params. Generation of output tokens is HBM-bandwidth bound and depends on the number of total params and on how many KV cache sequences for requests in a batch fit on the same system (these requests share the cost of chip-time[1]). With GB200 NVL72, batches could be huge, dividing the cost of output tokens (still probably several times more expensive per token than prefill).
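A rough roofline-style sketch of why bigger batches make output tokens cheaper. Every number here is an assumption for illustration: each decode step is taken to read all weights once (plausible for a large MoE batch that touches every expert) plus each sequence's own KV cache, with a compute floor of 2 FLOPs per active param per token. The ~8 TB/s HBM bandwidth per GB200 chip, the 2 GB of KV cache per sequence, and 60% utilization are assumed; $3.2/chip-hour is the at-cost GB200 estimate given further down.

```python
def max_batch(hbm_bytes: float, weight_bytes: float, kv_bytes_per_seq: float) -> int:
    """Largest batch whose KV caches fit in the HBM left over after weights."""
    return int((hbm_bytes - weight_bytes) // kv_bytes_per_seq)


def decode_cost_per_mtok(total_params: float, active_params: float,
                         kv_bytes_per_seq: float, batch: int, n_chips: int,
                         hbm_bw_per_chip: float, flops_per_chip: float,
                         price_per_chip_hour: float, bytes_per_param: float = 1.0,
                         utilization: float = 0.6) -> float:
    """Dollars per million output tokens, from one decode step over `batch` sequences."""
    # Memory-bound time: weights are read once per step and shared by the batch,
    # while every sequence also reads its own KV cache.
    bytes_read = total_params * bytes_per_param + batch * kv_bytes_per_seq
    t_mem = bytes_read / (n_chips * hbm_bw_per_chip)
    # Compute floor: ~2 FLOPs per active param per generated token.
    t_compute = 2 * active_params * batch / (n_chips * flops_per_chip * utilization)
    step_seconds = max(t_mem, t_compute)
    dollars_per_step = n_chips * price_per_chip_hour * step_seconds / 3600
    return dollars_per_step / batch * 1e6


# Hypothetical 8T-total / 1T-active model on one GB200 NVL72 rack (14 TB HBM).
params = dict(total_params=8e12, active_params=1e12, kv_bytes_per_seq=2e9,
              n_chips=72, hbm_bw_per_chip=8e12, flops_per_chip=5e15,
              price_per_chip_hour=3.2)
limit = max_batch(14e12, 8e12, 2e9)
for b in (64, 512, limit):
    print(b, round(decode_cost_per_mtok(batch=b, **params), 2))
```

The weight-reading cost is split across the batch, so the per-token price falls as the batch grows until compute becomes the bottleneck, and a bigger HBM pool raises the batch limit. The sketch ignores attention FLOPs, uneven expert load, and latency targets, which plausibly keep output tokens several times pricier than prefill in practice.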

For prefill, we can directly estimate at-cost inference from the capital cost of compute hardware, assuming a need to pay it back in 3 years (it will likely serve longer but become increasingly obsolete). An H100 system costs about $50K per chip ($5bn for a 100K-H100 system). This is all-in for compute equipment, so with networking but without buildings and cooling, since those serve longer and don't need to be paid back in 3 years. Operational costs are maybe below 20%, which gives $20K per year per chip ($50K/3 ≈ $16.7K of capital payback, plus 20%), or $2.3 per H100-hour. On gpulist, there are many listings at $1.80 per H100-hour, so my methodology might somewhat overestimate the bare-bones cost.

For GB200 NVL72, which is still too scarce to have a visible market price anywhere close to at-cost, the all-in cost together with external networking in a large system is plausibly around $5M per 72-chip rack ($7bn for a 100K-chip GB200 NVL72 system, $30bn for Stargate Abilene's 400K chips in GB200/GB300 NVL72 racks). This is $70K capital cost per chip, or $27.7K per year to pay it back in 3 years with 20% operational costs. That's just $3.2 per chip-hour.
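The at-cost methodology from the last two paragraphs, written as a small function. The one interpretive assumption is that "20% operational costs" means 20% on top of the yearly capital payback, which is what reproduces the $20K/year and $27.7K/year figures.

```python
HOURS_PER_YEAR = 24 * 365  # 8760


def at_cost_per_chip_hour(capex_per_chip: float, payback_years: float = 3,
                          opex_fraction: float = 0.2) -> float:
    """Hourly cost that pays back capex over `payback_years`, plus opex on top."""
    yearly = capex_per_chip / payback_years * (1 + opex_fraction)
    return yearly / HOURS_PER_YEAR


h100 = at_cost_per_chip_hour(50_000)       # all-in ~$50K per H100
gb200 = at_cost_per_chip_hour(5e6 / 72)    # ~$5M per 72-chip rack
racks_100k = 100_000 / 72                  # racks in a 100K-chip system

print(f"H100:  ${h100:.2f}/chip-hour")
print(f"GB200: ${gb200:.2f}/chip-hour")
print(f"100K GB200 system: ${racks_100k * 5e6 / 1e9:.1f}bn")
```

This gives about $2.28 and $3.17 per chip-hour and $6.9bn for the 100K-chip system, matching the rounded figures in the text.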

A 1T active param model consumes 2e18 FLOPs for 1M tokens. GB200 chips can do 5e15 FP8 FLOP/s or 10e15 FP4 FLOP/s. At $3.2 per chip-hour and 60% utilization (for prefill), this translates to $0.6 per million input tokens at FP8, or $0.3 per million input tokens at FP4. The API price for the batch mode of GPT-5 is $0.62 input, $5 output. So it might even be possible with FP8. And the 8T total params wouldn't matter with GB200 NVL72, they fit with space to spare in just one rack/domain.
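The input-token estimate as a function, using the figures above: 2 FLOPs per active param per token, 60% utilization, and $3.2 per chip-hour. It ignores attention FLOPs, which grow with context length, so under these assumptions it is a lower bound on compute cost.

```python
def prefill_cost_per_mtok(active_params: float, flops_per_chip: float,
                          price_per_chip_hour: float, utilization: float = 0.6) -> float:
    """Dollars per million input tokens when prefill is compute-bound."""
    flops = 2 * active_params * 1e6  # ~2 FLOPs per active param per token
    chip_hours = flops / (flops_per_chip * utilization) / 3600
    return chip_hours * price_per_chip_hour


fp8 = prefill_cost_per_mtok(1e12, 5e15, 3.2)
fp4 = prefill_cost_per_mtok(1e12, 10e15, 3.2)
print(f"FP8: ${fp8:.2f}/M input tokens, FP4: ${fp4:.2f}/M")
```

FP8 comes out near $0.59 per million input tokens and FP4 at half that, both at or under the $0.62 batch-mode input price.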

This is an at-cost estimate, in contrast to cloud provider prices. Oracle is currently selling 4-chip GB200 instances at $16 per chip-hour, but GB200 is barely on the market for now, so prices don't yet reflect costs. And, for example, GCP is still selling an H100-hour for $8 (a3-megagpu-8g instances). So at the major clouds, the price of GB200 might only come down to $11 per chip-hour in 2026-2027, even though the bare-bones at-cost price is only $3.2 per chip-hour (or a bit lower).

[1] I'm counting chips rather than GPUs to future-proof my terminology, since Huang recently proclaimed that starting with Rubin, compute dies will be considered GPUs (at March 2025 GTC, 1:28:04 into the keynote), so that a single chip will have 2 GPUs, and with Rubin Ultra a single chip will have 4 GPUs. It doesn't help that Blackwell already has 2 compute dies per chip. This is sure to lead to confusion when counting things in GPUs, but counting in chips will remain less ambiguous.

This is possibly unlikely, but could it be that the different versions of GPT-5 (i.e., the normal model, the thinking model, and the thinking-pro model) are actually different sizes? Or do we know for sure that they all share the same architecture?

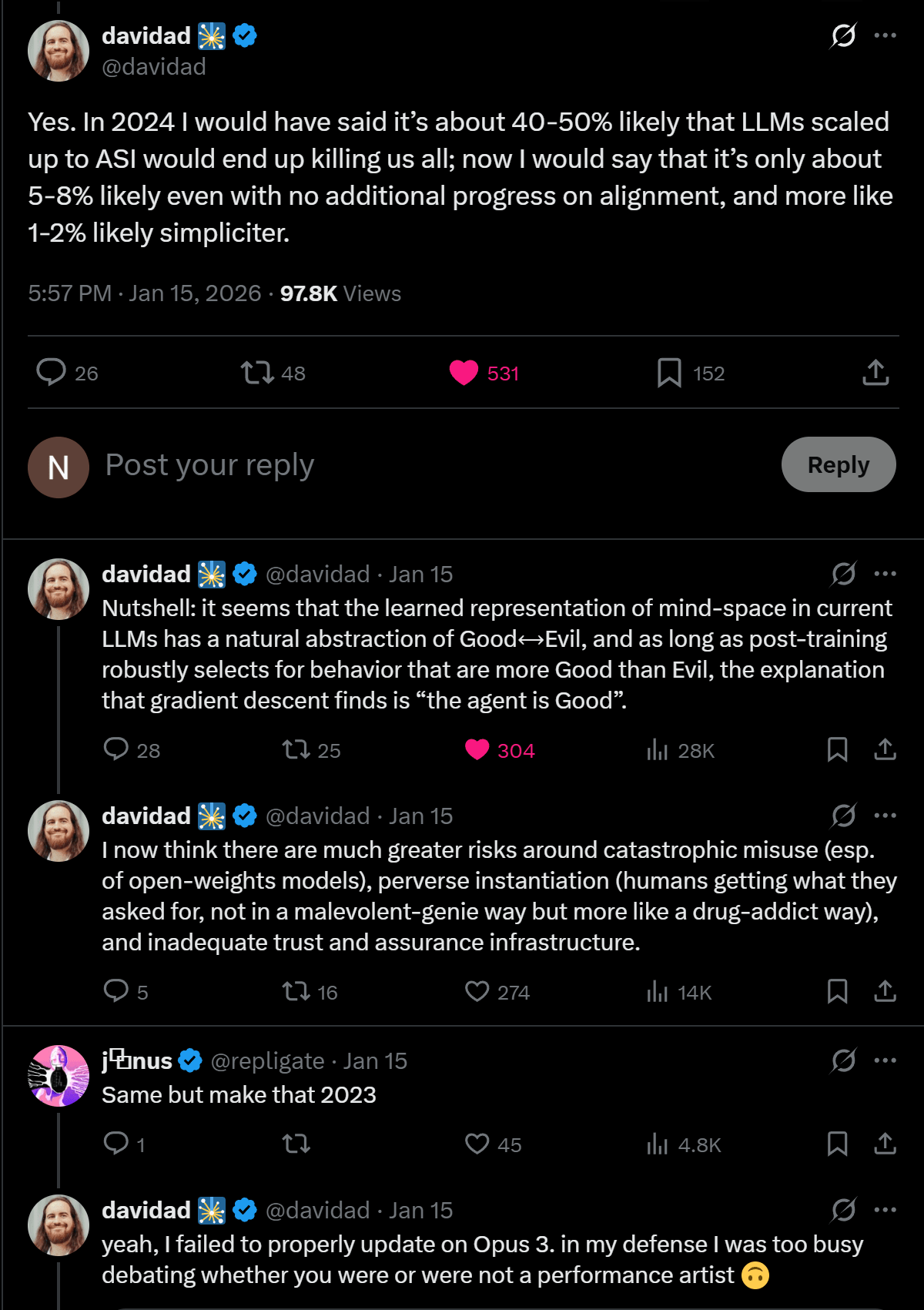

People seemed confused by my take here, but it's the same take Davidad expressed in this thread that has been making rounds: https://x.com/davidad/status/2011845180484133071

I'm pretty sure a human brain could, in principle, visualize a 4D space just as well as it visualizes a 3D space, and that there are ways to make that happen via neurotech (as an upper bound on difficulty).

Consider: we know a lot about how 4-dimensional spaces behave mathematically, probably no less than we know about 3-dimensional spaces. Once we know exactly how the brain encodes and visualizes a 3D space in its neurons, we'll probably also understand how it would do so for a 4D space if it had sensory access to one. Given good enough neurotech, we could manually craft the circuits necessary to reason intuitively in 4D.

Also, another insight/observation: insofar as AIs can have imagination, an AI trained in a 4D environment should develop 4D imagination (i.e., the circuits necessary to navigate and imagine 4D intuitively). The same should be true about human-brain emulations in 4D simulations.

This argument seems to work for N-D space for any N, which doesn't seem right. I think we definitely do know less about 4D space than 3D, partly because we're much more interested in 3D, and partly because there's just (a lot) more going on in 4D.

Intuitively, it feels like current AI should be much better at learning navigation in 4D than human brains. Brains have real architecture-level, baked-in, task-specific circuits, which AIs lack, and reconstructing a 3D world is arguably the most important of those. Sure, you could modify them with neurotech to change that, but you could do that for virtually any task, so it doesn't seem very meaningful.

There's also the problem that human sensors are inherently 3D. It's not clear how you would translate eyes into 4D. If you do pick a way to do this, and leave visual processing circuits the same, the circuits aren't getting their expected data stream anymore. Brains are clearly pretty good at coping with this, like in blind people where visual processing circuits are (at least partially) co-opted for other things, but blind people are clearly worse at navigating the 3D world than sighted people, and it seems like the same would be true for humans vs 4D-native beings (like AI).

Ah, I get what you're saying, and I agree. It's possible the human brain architecture, as-is, can't process 4D, but I guess we differ in what we find interesting. The thrust of my intuition here was more "wow, someone could understand N-D intuitively in a 3D universe; this doesn't seem prohibited", regardless of whether it's exactly the same architecture as a human brain. The human brain as it is right now might not permit that, and the neurotech might involve a lot of architectural changes (the same applies to emulations). I suppose it's a much less interesting insight if you already buy that imagining higher dimensions from a 3D universe is possible in principle. The claim that the human brain itself can do it is stronger, and it would have been more interesting if I had actually managed to defend it well.

I suppose I was kinda sloppy saying "the human brain can do that" -- I should have said "the human brain arbitrarily modified" or something like that.

I definitely think it's interesting that it's possible for N-D-substrate-computations to imagine / intuit N+1-D, but yeah, I feel like that's mostly a given because we have the concept of N+1-D in the first place.

There are different levels of "imagine / intuit" though. Some people have particularly good or bad intuition for the 3D space we live in. I took your claim to be something like "the average brain could intuit 4D just as well as 3D, maybe requiring slight modification". I think the modifications to reach true parity would be pretty extensive, because of how much 3D-specific architecture (as opposed to weights) human brains have. I do agree the modifications are theoretically possible, but the modifications to give a fruit fly human-level cognition are also theoretically possible with arbitrary modification.

Thought experiment: If a mad scientist gave a newborn infant a third eye that was offset along a fourth spatial dimension from the baby's other two eyes, the baby's brain would naturally acquire the ability to visualize in four dimensions. Wiring up three eyes probably requires three visual cortices, which will have knock-on effects on the overall geometry of the brain. I doubt that it requires the brain itself to be a 4D structure though.

Has anyone proposed a solution to the hard problem of consciousness that goes:

- Qualia don't seem to be part of the world. We can't see qualia anywhere, and we can't tell how they arise from the physical world.

- Therefore, maybe they aren't actually part of this world.

- But what does it mean they aren't part of this world? Well, since maybe we're in a simulation, perhaps they are part of the simulation. Basically, it could be that qualia : screen = simulation : video-game. Or, rephrasing: maybe qualia are part of base reality and not our simulated reality in the same way the computer screen we use to interact with a video game isn't part of the video game itself.

We can't see qualia anywhere, and we can't tell how they arise from the physical world.

Qualia are the only thing we[1] can see.

We don't see objects "directly" in some sense, we experience qualia of seeing objects. Then we can interpret those via a world-model to deduce that the visual sensations we are experiencing are caused by some external objects reflecting light. The distinction is made clearer by the way that sometimes these visual experiences are not caused by external objects reflecting light, despite essentially identical qualia.

Nonetheless, it is true that we don't know how qualia arise from the physical world. We can track back physical models of sensation until we get to stuff happening in brains, but that still doesn't tell us why these physical processes in brains in particular matter, or whether it's possible for an apparently fully conscious being to not have any subjective experience.

[1] At least I presume that you and others have subjective experience of vision. I certainly can't verify it for anyone else, just for myself. Since we're talking about something intrinsically subjective, it's best to be clear about this.

We don't see objects "directly" in some sense, we experience qualia of seeing objects. Then we can interpret those via a world-model to deduce that the visual sensations we are experiencing are caused by some external objects reflecting light. The distinction is made clearer by the way that sometimes these visual experiences are not caused by external objects reflecting light, despite essentially identical qualia.

I don't disagree with this at all, and it's a pretty standard insight for anyone who has thought about this stuff even a little. I think what you're doing here is nitpicking the meaning of the word "see", even if you're not putting it like that.

Slop is in the mind, not in the territory. I will not call something I like slop, regardless of what other people call slop.

Reaction request: "bad source" and "good source" to use when people cite sources you deem unreliable vs. reliable.

I know I would have used the "bad source" reaction at least once.

Is anyone working on experiments that could disambiguate whether LLMs talk about consciousness because of introspection or because of "parroting of training data"? Maybe some scrubbing/ablation that would degrade performance or change answers only if introspection were useful?

If LLM simulacra resemble humans but are misaligned, that doesn't bode well for S-risk chances.

Waluigi effect also seems bad for s-risk. "Optimize for pleasure, ..." -> "Optimize for suffering, ...".

An optimistic way to frame inner alignment is that gradient descent already hits a very narrow target in goal-space, and we just need one last push.

A pessimistic way to frame inner misalignment is that gradient descent already hits a very narrow target in goal-space, and therefore S-risk could be large.

This community has developed a bunch of good tools for resolving disagreements, such as double cruxing. It's a waste that they haven't been systematically deployed in the MIRI conversations. Those conversations could have been more productive, and we could've walked away with a succinct and precise understanding of where the disagreements are and why.

We should implement Paul Christiano's debate game with alignment researchers instead of ML systems.

If you try to write a reward function, or a loss function, that captures human values, that seems hopeless.

But if you have some interpretability techniques that let you find human values in some simulacrum of a large language model, maybe that's less hopeless.

The difference between constructing something and recognizing it, or between proving and checking, or between producing and criticizing, and so on...

As a failure mode of specification gaming, agents might modify their own goals.

As a convergent instrumental goal, agents want to prevent their goals from being modified.

I think I know how to resolve this apparent contradiction, but I'd like to see other people's opinions about it.