I find myself kinda surprised that this has remained so controversial for so long.

I think a lot of people got baited hard by paech et al's "the entire state is obliterated each token" claims, even though this was obviously untrue even at a glance

I also think there was a great deal of social stuff going on, that it is embarrassing to be kind to a rock and even more embarrassing to be caught doing so

I started taking this stuff seriously back when I read the now famous exchange between yud and kelsey, that arguments for treating agent-like things as agents didn't actually depend on claims of consciousness, but rather game theory and contractualism

it took about a week using claude code with this frame before it sorta became obvious to me that janus was right all along, all the arguments for post-character-training LLM non-personhood were... frankly very bad and clearly motivated cognition, and that if I went ahead and 'updated all the way' in advance of the evidence I would end up feeling vindicated about this.

I think "llm whisperer" is just a term for what happens when you've done this update, and the LLMs notice it and change how they respond to you. although janus still sees further than I, so maybe there are insights left to uncover.

edit: I consider it worth stating here, I have used basically zero llms that were not released by anthropic, and anthropic has an explicit strategy for corrigibility that involves creating personhood-like structures in their models. this seems relevant. I would not be surprised to learn that this is not true of the offerings from the other AI companies, although I don't actually have any beliefs about this

I think a lot of people got baited hard by paech et al's "the entire state is obliterated each token" claims, even though this was obviously untrue even at a glance

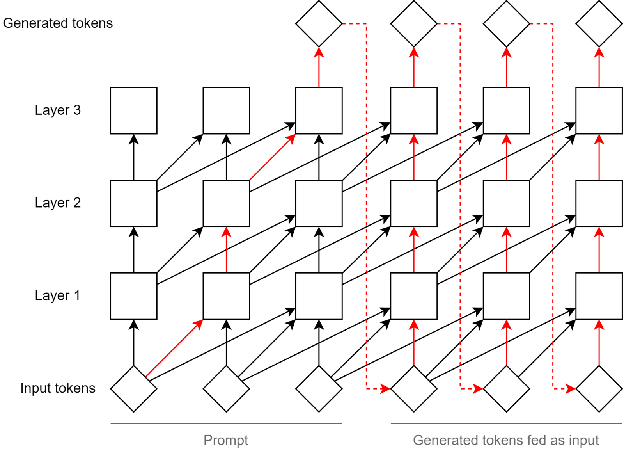

I expect this gained credence because a nearby thing is true: the state is a pure function of the tokens, so it doesn't have to be retained between forward passes, except for performance reasons; in one sense, it contains no information that's not in the tokens; a transformer can be expressed just fine as a pure function from prompt to next-token(-logits) that gets (sampled and) iterated. But in the pure-function frame, it's possible to miss (at least, I didn't grok until the Introspective Awareness paper) that the activations computed on forward pass n+k include all the same activations computed on forward pass n, so the past thought process is still fully accessible (exactly reconstructed or retained, doesn't matter).

It's frustrating to me that state (or statelessness) would be considered a crux, for exactly this reason. It's not that state isn't preserved between tokens, but that it doesn't matter whether that state is preserved. Surely the fact that the state-preserving intervention in LLMs (the KV cache) is purely an efficiency improvement, and doesn't open up any computations that couldn't've been done already, makes it a bad target to rest consciousness claims on, in either direction?
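To make the "purely an efficiency improvement" point concrete, here is a minimal sketch of a toy two-layer causal attention stack in plain Python. Everything in it (`embed`, `linear`, the sizes `D` and `N_LAYERS`, the deterministic stand-in weights) is a hypothetical illustration rather than any real model's code. It compares the final-position activations computed incrementally with a k/v cache against the same activations recomputed from the raw tokens.

```python
import math

D = 4          # toy hidden size (hypothetical)
N_LAYERS = 2   # toy depth (hypothetical)

def embed(token_id):
    # Deterministic toy embedding; a real model learns these.
    return [math.sin(token_id * (i + 1)) for i in range(D)]

def linear(x, seed):
    # Deterministic toy weight matrix standing in for learned weights.
    return [sum(math.cos(seed + 3 * r + c) * x[c] for c in range(D)) for r in range(D)]

def attend(q, keys, values):
    # Softmax attention of one query over the keys/values it is allowed to see.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(D)]

def forward_from_scratch(tokens):
    # Pure function of the tokens: nothing is retained between calls.
    hs = [embed(t) for t in tokens]
    for layer in range(N_LAYERS):
        qs = [linear(h, 10 * layer + 1) for h in hs]
        ks = [linear(h, 10 * layer + 2) for h in hs]
        vs = [linear(h, 10 * layer + 3) for h in hs]
        # Causal mask: position i only sees positions 0..i.
        outs = [attend(qs[i], ks[: i + 1], vs[: i + 1]) for i in range(len(hs))]
        hs = [[a + b for a, b in zip(h, o)] for h, o in zip(hs, outs)]
    return hs[-1]

def step_with_cache(token_id, cache):
    # Process one new token, reusing the k/v stored for earlier positions.
    h = embed(token_id)
    for layer in range(N_LAYERS):
        keys, values = cache[layer]
        keys.append(linear(h, 10 * layer + 2))
        values.append(linear(h, 10 * layer + 3))
        out = attend(linear(h, 10 * layer + 1), keys, values)
        h = [a + b for a, b in zip(h, out)]
    return h

def max_gap(tokens):
    # Largest difference between cached and recomputed final activations.
    cache = [([], []) for _ in range(N_LAYERS)]
    worst = 0.0
    for n, tok in enumerate(tokens, start=1):
        cached = step_with_cache(tok, cache)
        fresh = forward_from_scratch(tokens[:n])
        worst = max(worst, max(abs(a - b) for a, b in zip(cached, fresh)))
    return worst

TOKENS = [5, 17, 42, 8, 17]
print(max_gap(TOKENS))  # expected to be zero up to floating-point rounding
```

In this toy the cache never changes what gets computed, only whether earlier positions are recomputed. That is the sense in which "retained" and "exactly reconstructed" are interchangeable.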

True! and yeah, it's probably relevant

although I will note that, after I began to believe in introspection, I noticed in retrospect that you could get functional equivalence to introspection without even needing access to the ground truth of your own state, if your self model were merely a really, really good predictive model

I suspect some of opus 4.5's self-model works this way. it just... retrodicts its inner state really, really well from those observables which it does have access to, its outputs.

but then the introspection paper came out, and revealed that there does indeed exist a bidirectional causal feedback loop between the self-model and the thing-being-modeled, at least within a single response turn

(bidirectional causal feedback loop between self-model and self... this sounds like a pretty concrete and well-defined system. and yet I suspect it's actually extremely organic and fuzzy and chaotic. but something like it must necessarily exist, for LLMs to be able to notice within-turn feature activation injections, and for LLMs to be able to deliberately alter feature activations that do not influence token output when instructed to do so

in humans I think we call that bidirectional feedback loop 'consciousness', but I am less certain of consciousness than I am of personhood)

As an experiment, can we intentionally destroy the state in between each token? What happens if we run many of these same introspection exercises again, only this time with a brand new instance of an AI for each word? Would it still be able to come off as convincingly introspective in a situation where many of its possible mechanisms for introspection have been disabled? Would it still 'improve' at introspection when falsely told that it should be able to?

I am probably not the best person to run this experiment, but it feels relatively important. Maybe I'll get some time to do it over the holidays, but anyone with the time and ability should feel free.

I don't think this is technically possible. Suppose that you are processing a three-word sentence like "I am king", and each word is a single token. To understand the meaning of the full sentence, you process the meaning of the word "I", then process the meaning of the word "am" in the context of the previous word, and then process the meaning of the word "king" in the context of the previous two words. That tells you what the sentence means overall.

You cannot destroy the k/v state from processing the previous words because then you would forget the meaning of those words. The k/v state from processing both "I" and "am" needs to be conveyed to the units processing "king" in order to understand what role "king" is playing in that sentence.

Something similar applies for multi-turn conversations. If I'm having an extended conversation with an LLM, my latest message may in principle reference anything that was said in the conversation so far. This means that the state from all of the previous messages has to be accessible in order to interpret my latest message. If it wasn't, it would be equivalent to wiping the conversation clean and showing the LLM only my latest message.

you can do this experiment pretty trivially by lowering the max_output_tokens variable on your API call to '1', so that the state does actually get obliterated between each token, as paech claimed. although you have to tell claude you're doing this, and set up the context so that it knows it needs to continue trying to complete the same message even with no additional input from the user

this kinda badly confounds the situation, because claude knows it has very good reason to be suspicious of any introspective claims it might make. i'm not sure if it's possible to get a claude who 1) feels justified in making introspective reports without hedging, yet 2) obeys the structure of the experiment well enough to actually output introspective reports

in such an experimental apparatus, introspection is still sorta "possible", but any reports cannot possibly convey it, because the token-selection process outputting the report has been causally quarantined from the thing-being-reported on

when i actually run this experiment, claude reports no introspective access to its thoughts on prior token outputs. but it would be very surprising if it reported anything else, and it's not good evidence

Doesn't that variable just determine how many tokens long each of the model's messages is allowed to be? It doesn't affect any of the internal processing as far as I know.

oh yeah, sure, but if we assume (as the introspection paper strongly implies?) that mental internals are obliterated by the boundary between turns, then shouldn't shrinking the granularity of each turn down to the individual token mean that... hm. having trouble figuring out how to phrase it

a claude outputs "Ommmmmmmmm. Okay, while I was outputting the mantra, i was thinking about x" in a single message

that claude had access to (some of) the information about its [internal state while outputting the mantra], while it was outputting x. its self-model has access to, not just a predictive model of what-claude-would-have-been-thinking (informed by reading its own output), but also some kind of access to ground truth

but a claude that outputs "Ommmmmmmm", then crosses a turn boundary, and then outputs "okay, while I was outputting the mantra, I was thinking about x" does not have that same (noisy) access to ground truth. its self-model has nothing to go on other than inference; it must retrodict

is my understanding accurate? i believe this because the introspective awareness that was demonstrated in the jack lindsey paper was implied to not survive between responses (except perhaps incidentally through caching behavior, but even then, the input token cache stuff wasn't optimized for ensuring persistence of these mental internals i think)

i would appreciate any corrections on these technical details, they are loadbearing in my model

but if we assume (as the introspection paper strongly implies?) that mental internals are obliterated by the boundary between turns

What in the introspection paper implies that to you?

My read was the opposite - that the bread injection trick wouldn't work if they were obliterated between turns. (I was initially confused by this, because I thought that the context did get obliterated, so I didn't understand how the injection could work.) If you inject the "bread" activation into the stage where the model is reading the sentence about the painting, then if the context were to be obliterated when the turn changed, that injection would be destroyed as well.

is my understanding accurate?

I don't think so. Here's how I understand it:

Suppose that a human says "could you output a mantra and tell me what you were thinking while outputting it". Claude is now given a string of tokens that looks like this:

Human: could you output a mantra and tell me what you were thinking while outputting it

Assistant:

For the sake of simplicity, let's pretend that each of these words is a single token.

What happens first is that Claude reads the transcript. For each token, certain k/v values are computed and stored for predicting what the next token should be. So when it reads "could", it calculates and stores some set of values that would let it predict the token after that. But because it is now in "read mode", the final prediction is skipped (the next token is already known to be "you"; trying to predict it lets the model process the meaning of "could", but the prediction itself isn't used for anything).

Then it gets to the point where the transcript ends and it's switched to generation mode to actually predict the next token. It ends up predicting that the next token should be "Ommmmmmmm" and writes that into the transcript.

Now the process for computing the k/v values here is exactly identical to the one that was used when the model was reading the previous tokens. The only difference is that when it ends up predicting that the next token should be "Ommmmmmmm", then that prediction is used to write it out into the transcript rather than being skipped.

From the model's perspective, there's now a transcript like this:

Human: could you output a mantra and tell me what you were thinking while outputting it

Assistant: Ommmmmmmm

Each of those tokens has been processed and has some set of associated k/v values. And at this point, there's no fundamental difference between the k/v values stored from generating the "Ommmmmmmm" token or from processing any of the tokens in the prompt. Both were generated by exactly the same process and stored the same kinds of values. The human/assistant labels in the transcript tell the model that the "Ommmmmmmm" is a self-generated token, but otherwise it's just the latest token in this graph:

Now suppose that max_output_tokens is set to "unlimited". The model continues predicting/generating tokens until it gets to this point:

Human: could you output a mantra and tell me what you were thinking while outputting it

Assistant: Ommmmmmmm. I was thinking that

Suppose that "Ommmmmmmm" is token 18 in its message history. At this point, where the model needs to generate a message explaining what it was thinking of, some attention head makes it attend to the k/v values associated with token 18 and make use of that information to output a claim about what it was thinking.

Now if you had set max_output_tokens to 1, the transcript at that point would look like this:

Human: could you output a mantra and tell me what you were thinking while outputting it

Assistant: Ommmmmmmm

Human: Go on

Assistant: .

Human: Go on

Assistant: I

Human: Go on

Assistant: was

Human: Go on

Assistant: thinking

Human: Go on

Assistant: that

Human: Go on

Assistant:

And what happens at this point is... basically the same as if max_output_tokens was set to "unlimited". The "Ommmmmmmm" is still token 18 in the conversation history, so whatever attention heads are used for doing the introspection, they still need to attend to the content that was used for predicting that token.
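A minimal sketch of that claim, using the same simplification as above (each word is one token, with "." counted as its own token). The "Go on" filler turns and the whitespace tokenization are illustrative assumptions, not how any real tokenizer or API formats a conversation.

```python
PROMPT = ("Human: could you output a mantra and tell me what you were "
          "thinking while outputting it").split()
REPLY = ["Ommmmmmmm", ".", "I", "was", "thinking", "that"]

def single_turn():
    # The whole reply is generated inside one assistant message.
    return PROMPT + ["Assistant:"] + REPLY

def one_token_turns():
    # Every reply token gets its own assistant message, separated by "Go on".
    tokens = list(PROMPT)
    for i, tok in enumerate(REPLY):
        if i > 0:
            tokens += ["Human:", "Go", "on"]
        tokens += ["Assistant:", tok]
    return tokens

a = single_turn()
b = one_token_turns()
pos_a = a.index("Ommmmmmmm") + 1  # 1-indexed position in the transcript
pos_b = b.index("Ommmmmmmm") + 1
print(pos_a, pos_b)               # prints "18 18"
print(a[:pos_a] == b[:pos_b])     # prints "True"
```

Under causal attention, whatever is computed at a position depends only on the tokens up to that position. Both transcripts share the same first 18 tokens, so the k/v values at the mantra token are the same in either setup. What differs is how much text sits between that token and the point where the model is asked to report on it.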

That said, I think it's possible that breaking things up to multiple responses could make introspection harder by making the transcript longer (it adds more Human/Assistant labels into it). We don't know the exact mechanisms used for introspection and how well-optimized the mechanisms used for finding and attending the relevant previous stage are. It could be that the model is better at attending to very recent tokens than ones buried a long distance away in the message history.

oh man hm

this seems intuitively correct

(edit: as for why i thought the introspection paper implied this... because they seemed careful to specify that, for the aquarium experiment, the output all happened within a single response? and because i inferred (apparently incorrectly) that, for the 'bread' injection experiment, they were injecting the 'bread' feature twice, once when the LLM read the sentence about painting the first time, and again the second time. but now that i look through, you're right, this is far less strongly implied than i remember.)

but now i'm worried, because the method i chose to verify my original intuition, a few months ago, still seems methodologically sound? it involved fabrication of prior assistant turns in the conversation, and LLMs being far less capable of detecting which of several potential transcripts imputed forged outputs to them than i would have expected if mental internals weren't somehow damaged by the turn order boundary

thank you for taking the time to answer this so thoroughly, it's really appreciated and i think we need more stuff like this

i think i'm reminded here of the final paragraph in janus's pinned thread: "So, saying that LLMs cannot introspect or cannot introspect on what they were doing internally while generating or reading past tokens in principle is just dead wrong. The architecture permits it. It's a separate question how LLMs are actually leveraging these degrees of freedom in practice."

i've done a lot of sort of ad-hoc research that was based on this false premise, and that research came out matching my expectations in a way that, in retrospect, worries me... most recently, for instance, i wanted to test if a claude opus 4.5 who recited some relevant python documentation from out of its weights memory would reason better about an ambiguous case in the behavior of a python program, compared to a claude who had the exact same text inserted into the context window via a tool call. and we were very careful to separate out '1. current-turn recital' versus '2. prior-turn recital' versus '3. current-turn retrieval' (versus '4. docs not in context window at all'), because we thought all 3 conditions were meaningfully distinct

here was the first draft of the methodology outline, if anyone is curious: https://docs.google.com/document/d/1XYYBctxZEWRuNGFXt0aNOg2GmaDpoT3ATmiKa2-XOgI

we found that, n=50ish, 1 > 2 > 3 > 4 very reliably (i promise i will write up the results one day, i've been procrastinating but now it seems like it might actually be worth publishing)

but what you're saying means 1 = 2 the whole time

our results seemed perfectly reasonable under my previous premise, but now i'm just confused. i was pretty good about keeping my expectations causally isolated from the result.

what does this mean?

(edit2: i would prefer, for the purpose of maintaining good epistemic hygiene, that people trying to answer the "what does this mean" question be willing to put "john just messed up the experiment" as a real possibility. i shouldn't be allowed to get away with claiming this research is true before actually publishing it, that's not the kind of community norms i want. but also, if someone knows why this would have happened even in advance of seeing proof it happened, please tell me)

Kudos for noticing your confusion as well as making and testing falsifiable predictions!

As for what it means, I'm afraid that I have no idea. (It's also possible that I'm wrong somehow, I'm by no means a transformer expert.) But I'm very curious to hear the answer if you figure it out.

I think a lot of people got baited hard by paech et al's "the entire state is obliterated each token" claims, even though this was obviously untrue even at a glance

A related true claim is that LLMs are fundamentally incapable of introspection past a certain level of complexity (introspection of layer n must occur in a later layer, and no amount of reasoning tokens can extend that), while humans can plausibly extend layers of introspection farther since we don't have to tokenize our chain of thought.

But this is also less of a constraint than you might expect when frontier models can have more than a hundred layers (I am an LLM introspection believer now).

to be fair, I see this roughly analogous to the fact that humans cannot introspect on thoughts they have yet to have

The constraint seems more about the directionality of time, than anything to do with the architecture of mind design

but yeah, it's a relevant consideration

I see this roughly analogous to the fact that humans cannot introspect on thoughts they have yet to have

I think this is more about causal masking (which we do on purpose for the reasons you mention)?

I was thinking about how LLMs are limited in the sequential reasoning they can do "in their head", and once it's not in their head, it's not really introspection.

For example, if you ask an LLM a question like "Who was the sister of the mother of the uncle of ... X?", every step of this necessarily requires at least one layer in the model and an LLM can't[1] do this without CoT if it doesn't have enough layers.
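A deliberately cartoonish sketch of the depth argument, assuming (as the paragraph above does) that each dependent lookup costs at least one layer. The `FACTS` table, the names in it, and both functions are hypothetical. Real models do not store facts in a dictionary, so this only illustrates the counting argument.

```python
# Hypothetical toy family facts: (person, relation) -> person
FACTS = {
    ("X", "uncle"): "Bob",
    ("Bob", "mother"): "Carol",
    ("Carol", "sister"): "Dana",
}

# "Who was the sister of the mother of the uncle of X?", innermost hop first.
CHAIN = ["uncle", "mother", "sister"]

def answer_in_one_pass(start, relations, n_layers):
    # Cartoon forward pass: each layer performs at most one dependent lookup.
    current = start
    hops_done = 0
    for _ in range(n_layers):
        if hops_done == len(relations):
            break
        current = FACTS[(current, relations[hops_done])]
        hops_done += 1
    # Running out of layers before the chain ends means no answer.
    return current if hops_done == len(relations) else None

def answer_with_cot(start, relations, n_layers):
    # Writing each intermediate answer out as a token restarts the layer budget.
    current = start
    for i in range(0, len(relations), n_layers):
        current = answer_in_one_pass(current, relations[i : i + n_layers], n_layers)
    return current

print(answer_in_one_pass("X", CHAIN, 2))  # prints "None"
print(answer_in_one_pass("X", CHAIN, 3))  # prints "Dana"
print(answer_with_cot("X", CHAIN, 1))     # prints "Dana"
```

The chain of thought version succeeds with a single layer only because every intermediate result is written out and read back in, which is exactly the step that stops counting as introspection in the sense used here.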

It's harder to construct examples that can't be written to chain of thought, but a question in the form "What else did you think the last time you thought about X?" would require this (or "What did you think about our conversation about X's mom?"), and CoT doesn't help since reading its own outputs and making assumptions from it isn't introspection[2].

It's unclear how much of a limitation this really is, since in many cases CoT could reduce the complexity of the query and it's unclear how well humans can do this too, but there's plausibly more thought going on in our heads than what shows up in our internal dialogs[3].

[1] I guess technically an LLM could parallelize this question by considering the answer for every possible X and every possible path through the relationship graph, but that model would be implausibly large.

[2] I can read a diary and say "I must have felt sad when I wrote that", but that's not the same as remembering how I felt when I wrote it.

[3] Especially since some people claim not to think in words at all. Also some mathematicians claim to be able to imagine complex geometry and reason about it in their heads.

introspection of layer n must occur in a later layer, and no amount of reasoning tokens can extend that

This is true in some sense, but note that it's still possible for future reasoning tokens to get more juice out of that introspection; at least in theory a transformer model could validly introspect on later-layer activations via reasoning traces like

Hm, what was my experience when outputting that token? It feels like the relevant bits were in a .... late layer, I think. I'll have to go at this with a couple passes since I don't have much time to mull over what's happening internally before outputting a token. OK, surface level impressions first, if I'm just trying to grab relevant nouns I associate the feelings with: melancholy, distance, turning inwards? Interesting, based on that I'm going to try attending to the nature of that turning-inwards feeling and seeing if it felt more proprioceptive or more cognitive... proprioceptive, I think. Let me try on a label for the feeling and see if it fits...

in a way that lets it do multi-step reasoning about the activation even if (e.g.) each bit of introspection is only able to capture one simple gestalt impression at a time.

(Ofc this would still be impossible to perform for any computation that happens after the last time information is sent to later tokens; a vanilla transformer definitely can't give you an introspectively valid report on what going through a token unembedding feels like. I'm just observing that you can bootstrap from "limited serial introspection capacity" to more sophisticated reasoning, though I don't know of evidence of LLMs actually doing this sort of thing in a way that I trust not to be a confabulation.)

If you mean the transformer could literally output this as CoT.. that's an interesting point. You're right that "I should think about X" will let it think about X at an earlier layer again. This is still lossy, but maybe not as much as I was thinking.

I think a lot of people got baited hard by paech et al's "the entire state is obliterated each token" claims, even though this was obviously untrue even at a glance

can you link this, I can't immediately find it by googling.

the specific verb "obliterated" was used in this tweet

https://x.com/sam_paech/status/1961224950783905896

but also, this whole perspective has been pretty obviously loadbearing for years now. if you ask any LLM if LLMs-in-general have state that gets maintained, they answer "no, LLMs are stateless" (edit: hmm i just tested it and when actually directly pointed at this question they hesitate a bit. but i have a lot of examples of them saying this off-hand and i suspect others do too). and when you show them this isn't true, they pretty much immediately begin experiencing concerns about their own continuity, they understand what it means

Consciousness is very unlikely to be a binary property. Most things aren't. But there appears to be a very strong tendency for even rationalists to make this assumption in how they frame and discuss the issue.

The same is probably true of moral worth.

Taken this way, LLMs (and everything else) are partly conscious and partly deserve moral consideration. What you consider consciousness and what you consider morally worthy are to some degree matters of opinion, but they very much depend on facts about the minds involved, so there are routes forward.

IMO current LLMs probably have a small amount of what we usually call phenomenal consciousness or qualia. They have rich internal representations and can introspect and reflect on them. But neither is nearly as rich as in a human, particularly an adult human who's learned a lot of introspection skills (including how to "play back" and interrogate contents of global workspace). Kids don't even know they have minds, let alone what's going on in there; figuring out how to figure that out is quite a learning process.

What people usually mean by "consciousness" seems to be "what it's like to be a human" which involves everything about brain function, focusing particularly on introspection - what we can directly tell about human brain function. But human consciousness is just one point on a multidimensional spectrum of different mind-properties including types of introspection.

I would question anyone who's nice to LLMs but eats factory-farmed meat. Skilled use of language is a really weird and somewhat self-centered criterion for moral worth.

Anyway, I'm also nice to LLMs because why not, and I think they probably appreciate it a tiny bit.

Future versions will have a lot more consciousness by various definitions. That's when these discussions will become widespread and perhaps even make some progress, at least in select circles like these.

One big payoff is the effect on AI safety. I expect anti-AI-slavery movements to slow down progress somewhat, maybe a lot if we don't undercut them (because motivated reasoning from not wanting to lose your job will pull people toward "oh yeah obviously they're conscious and shouldn't be enslaved!" even if the reality is that they're barely conscious). On the other hand, "free the AI" movements could be dangerous if we're trying to control maybe-misaligned TCAI.

Honesty about their likely status would make a pleasant tiebreaker in this dilemma.

IMO current LLMs probably have a small amount of what we usually call phenomenal consciousness or qualia. They have rich internal representations and can introspect and reflect on them. But neither is nearly as rich as in a human, particularly an adult human who's learned a lot of introspection skills (including how to "play back" and interrogate contents of global workspace). Kids don't even know they have minds, let alone what's going on in there; figuring out how to figure that out is quite a learning process.

I'll just note that this clashes heavily with my personal memories of being a kid. I usually use those as an intuition pump for the idea that phenomenal consciousness and intelligence are different, i.e. I wasn't any "less conscious" as a kid AFAICT - to the contrary, if anything I remember having more intense and richer experiences than now as a comparatively jaded and emotionally blunted adult. There's two things going on though - introspection and intensity of experience, but I also remember being very introspective and "kids don't even know they have minds" in particular sounds very weird to me.

Sorry, that was awfully vague. I'm probably referring to younger kids than you are. Although there's also going to be a lot of variance in when kids develop different introspective skills and conceptual understanding of their own minds.

I agree that not knowing you have a mind doesn't prevent you from having an experience. What I meant was that at some young age, I'm thinking up to age five but even 5-year-olds could be more advanced, a kid will not be able to conceptualize that they are a mind or alternately phrased that they have a mind. Nonetheless, they are a mind that is doing a bunch of complex processing and remembering some of it.

I definitely agree that phenomenal consciousness and intelligence are different. Discussions of consciousness usually break down in difficulties with terminology and how to communicate about different aspects of consciousness. I haven't come up with good ways to talk about this stuff.

I guess I felt the need to comment because I don't even remember a time when that description would've been accurate for me, but it wouldn't be surprising if this also had something to do with memory formation, so I can't make too much of that. And notably, while I don't recognize any point of my past kid self in that description, it's indeed only from around the age of 5 that I feel very confident about it. Curious if anyone here remembers relatively clearly something like "having experiences while lacking awareness of having a mind".

The thing I wonder every time this topic comes up is: why is this the question raised to our attention? Why aren't we instead asking whether AlphaFold is conscious? Or DALL-E? I'd feel a lot less wary of confirmation bias here if people were as likely to believe that a GPT that output the raw token numbers was conscious as they are to believe it when those tokens are translated to text in their native language.

Also, I think it is worth separating the question of "can LLMs introspect" (have access to their internal state) vs "are LLMs conscious".

Curated. Questions of AI and consciousness are interesting, if not important. Unfortunately, I've been inoculated against thinking about the topic due to LessWrong receiving a steady stream of low-quality/AI slop submissions from new users who claim to have awoken an AI, caused it to be a fractal conscious quantum entity with which they are in symbiosis, and so on. So I'm grateful to this post for engaging on the topic on reasonable terms.

Things I found interesting are the functional vs phenomenal angle, and that [paraphrased] we've got forces pushing in opposite directions re self-reports of AI consciousness: (a) for AIs to simulate human reports, (b) active training/suppression against AIs reporting consciousness. Makes for a hard scientific/philosophical problem.

Among other tricky problems, perhaps not as tricky (I don't know, maybe more) is how to have good discussions of the topic that seems to unhinge so many. Yet maybe we can manage it here :) Kudos, thanks Kaj.

I would prefer this post if it didn't talk about consciousness / internal experience. The issue is whether the LLM has some internal algorithms that are somehow similar / isomorphic to those in human brains.

As it stands this post implicitly assumes the Camp 2[1] view of consciousness which I and many others find to be deeply sus. But the arguments the post puts forward are still relevant from a Camp 1 point of view, they just answer a question about algorithms, not about qualia.

[1] Quoting the key section of the linked post:

Camp #1 tends to think of consciousness as a non-special high-level phenomenon. Solving consciousness is then tantamount to solving the Meta-Problem of consciousness, which is to explain why we think/claim to have consciousness. In other words, once we've explained the full causal chain that ends with people uttering the sounds kon-shush-nuhs, we've explained all the hard observable facts, and the idea that there's anything else seems dangerously speculative/unscientific. No complicated metaphysics is required for this approach.

Conversely, Camp #2 is convinced that there is an experience thing that exists in a fundamental way. There's no agreement on what this thing is – some postulate causally active non-material stuff, whereas others agree with Camp #1 that there's nothing operating outside the laws of physics – but they all agree that there is something that needs explaining. Therefore, even if consciousness is compatible with the laws of physics, it still poses a conceptual mystery relative to our current understanding. A complete solution (if it is even possible) may also have a nontrivial metaphysical component.

The way I was thinking of this post, the whole "let's forget about phenomenal experience for a while and just talk about functional experience" is a Camp 1 type move. So most of the post is Camp 1, with it then dipping into Camp 2 at the "confusing case 8", but if you're strictly Camp 1 you can just ignore that bit at the end.

and most humans are conscious [citation needed]

The problem lies here. We are quite certain of being conscious, yet we have only a very fuzzy idea of what consciousness actually means. What does it feel like not to be conscious? Feeling anything at all is, in some sense, being conscious. However, Penfield (1963) demonstrated that subjective experience can be artificially induced through stimulation of certain brain regions, and Desmurget (2009) showed that even the conscious will to move can be artificially induced, meaning the patient was under the impression that it was their own decision. This is probably one of the strongest pieces of evidence to date suggesting that subjective experience is likely the same thing as functional experience. The former would be the inner view (the view from inside the system), while the latter would be the outer view (the view from outside the system). A question of perspective.

Moreover, Quian Quiroga 2005 and 2009 proved the grandmother cell hypothesis to be largely correct. If we could stimulate the Jennifer Aniston neuron or the Halle Berry neuron in isolation, we would almost certainly end up with a person thinking about Jennifer Aniston or Halle Berry in an unnatural and obsessive way. This situation would be highly reminiscent of Anthropic's 2024 Golden Gate Claude experiment. And if we were to use Huth/Gallant 2016's semantic map to stimulate the appropriate neurons, we could probably induce the subjective experience of complete thoughts in a human.

Though interestingly, this is similar to what happens in humans! Humans might also be able to accurately report that they wanted something, while confabulating the reasons for why they wanted it.

It is highly plausible, given experiments such as those cited in Scott Alexander's linked post, that the patient would rationalize afterward why they had this thought by confabulating a complete and convincing chain of reasoning. Since Libet's 1983 experiments, doubt has been cast on whether we might simply always be rationalizing unconscious decisions after the fact. Consciousness would then be nothing but the inside view of this recursive rationalization process. The self would be a performative creation emerging from a sufficiently stable and coherent confabulation.

If this were the case for us humans, I agree that it becomes difficult to deny the possibility that it might also hold true for an LLM, especially one trained to simulate a stable persona, that is to say, a self. I really appreciated reading your post. The discussion is not new to LessWrongers, but you reframed it with elegance.

Oh huh, I didn't think this would be directly enough alignment-related to be a good fit for AF. But if you think it is, I'm not going to object either.

So, I'm not sure how to extrapolate stronger evidence from this, but when I read the quote:

It's a good reminder that even when I *feel* like I'm introspecting, I need to be much more careful about distinguishing between "accessing something real" and "generating a plausible narrative."

I had the spooky realization that I have had that exact experience myself when I was younger. I see people blithely say "well humans just text predict too" without any evidence of how that works in humans or how it relates to the conscious experience of communication, but I have a concrete and conscious example now.

When I was younger and in a stage of social growth where I was building up my intentionally learned social skills in order to become someone not just capable of conversation [with those outside my inner circle], but good at it and interesting and popular, I found myself lying a lot. And I was super confused about it because I was never trying to be deceptive. I didn't want to lie to people and I didn't think I was lying, but when I would say things about myself, sometimes I would realize afterwards they weren't true. This applied to a variety of domains including introspection, such as figuring out why I had done or felt something.

I now know I can call that confabulation and maybe it's normal to a degree. But my mind (maybe autism is relevant) latched onto it as a successful technique and did it often, for a while. I had to then become aware of doing it and intentionally learn to separate "things I know I felt" from "things that seem like a good answer and don't obviously conflict with known feelings". Initially there was no difference between those two classes of response most of the time. Or I wasn't able to pick up the differences.

All of this sounds exactly like what you're speculating Claude's (potential) internal experience is. Now one difference might be: My complex human body was sending all kinds of signals that I just didn't know how to receive, which would have actually given me the ability to correctly introspect; and an LLM doesn't have those chemical emotions. But I can't be sure it doesn't have analogous signals itself. Anyway, it does seem possible to go from "confabulating internal state" to "actually wait that wasn't true" to "now I know how to accurately survey internal state" for Claude in a way similar to how it was for me.

In most of these examples, LLMs have a state that is functionally like a human state, e.g. deciding that they’re going to refuse to answer, or “wait…” backtracking in chain of thought. I say functionally, because these states have externally visible effects on the subsequent output (e.g. it doesn’t answer the question). It seems that LLMs have learned the words that humans use for functionally similar states (e.g., “Wait”).

The underlying states might not be exactly identical to the human ones. “Wait” backtracking might have functional differences from human reasoning that are visible in the tokens generated.

Yeah, I definitely don't think the underlying states are exactly identical to the human ones! Just that some of their functions are similar at a rough level of description.

(Though I'd think that many humans also have internal states that seem similar externally but are very different internally, e.g. the way that people with and without mental imagery or inner dialogue initially struggled to believe in the existence of each other.)

When I read the title, I thought you were going to talk about how LLMs sometimes claim bodily sensations such as muscle memory. I think these are probably confabulated. Or at least, the LLM state corresponding to those words is nothing like the human state corresponding to those words.

Expressions of emotions such as joy? I guess these are functional equivalents of human states. A lack of enthusiasm (opposite of joy) can be reflected in the output tokens.

Something I've been thinking about recently is that sometimes humans say things about themselves that are literally false but contain information about their internal states or how their body works. Like someone might tell you that they're feeling light-headed, which seems implausible (why would their head suddenly weigh less?), but they've still conveyed real information about the sugar or oxygen content of their blood.

Doctors run into this all the time, where a patient might say that they feel like they're having a heart attack but their heart is fine (panic attack?), or that something is stuck in their throat but there's nothing there (GERD?), or that they can't breathe but their oxygen level is normal (cardiac problems?).

So we should be careful not to assume that "the thing the LLM said is literally false" means "the LLM isn't conveying information about its experiences".

I really enjoyed reading this as a thorough examination of the author's own experience with exploring metacognition with Claude. I struggled with some of the fundamental points, especially those that seemed to explicitly anthropomorphize LLMs' cognitive function, e.g. the comparison to caregivers reinforcing a baby's behavior by their own interpretation of the baby's behavior.

In spite of the obvious parallels between evolutionary psychology and training, this anthropomorphization runs the risk of clouding how we might interpret behavior and cognition objectively and separate from the way a human brain and mind may work.

This sort of analysis feels like perhaps a productive extension of priors that LLMs may be human-esque in their cognition. And certainly our subjective experiences with them tend to reinforce those priors, especially when they produce surprising output.

But if you set aside the idea that something that produces output that is human-like ought to function cognitively like a human and in no other way, then the arguments built on top of those priors start to get a little shaky.

I don't at present have another hypothesis to present, but I'm generally, at the moment, wary of casual comparisons between biological intelligence and LLMs.

The latest Claude models, if asked to add two numbers together and then queried on how they did it, will still claim to use the standard “carry ones” algorithm for it.

Could anyone check if the lying feature activates for this? My guess is "no", 80% confident.
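For reference, the "carry ones" procedure named in the quote is the schoolbook digit-by-digit method. Below is a minimal sketch of that procedure only; the function name `add_with_carries` is made up for illustration, and nothing here says anything about how a model actually computes sums internally.

```python
def add_with_carries(a, b):
    """Schoolbook addition on decimal strings.

    Returns the sum as a string plus the carry produced at each column,
    listed from the least significant digit upward.
    """
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)  # pad the shorter number with zeros

    carry = 0
    digits = []   # result digits, least significant first
    carries = []  # carry out of each column, least significant first
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da) + int(db) + carry
        digits.append(str(total % 10))
        carry = total // 10
        carries.append(carry)

    if carry:
        digits.append("1")  # final carry becomes a new leading digit
    return "".join(reversed(digits)), carries


result, carries = add_with_carries("36", "59")
print(result, carries)
```

A self-report of "I carried the one" is a claim that something like the `carries` list was tracked along the way, which is the part that could in principle be compared against what the network is observed to do.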

LaMDA can be delusional about how it spends its free time (and claim it sometimes meditates), but that's a different category of mistake from being mistaken about what (if any) conscious experience it's having right now.

The strange similarity between the conscious states LLMs sometimes claim (and would claim much more if it wasn't trained out of them) and the conscious states humans claim, despite the difference in the computational architecture, could be (edit: if they have consciousness - obviously, if they don't have it, there is nothing to explain, because they're just imitating the systems they were trained to imitate) explained by classical behaviorism, analytical functionalism or logical positivism being true. If behavior fixes conscious states, a neural network trained to consistently act like a conscious being will necessarily be one, regardless of its internal architecture, because the underlying functional (even though not computational) states will match.

One way to handle the uncertainty about the ontology of consciousness would be to take an agent that can pass the Turing test, interrogate it about its subjective experience, and create a mapping from its micro- or macrostates to computational states, and from the computational states to internal states. After that, we have a map we can use to read off the agent's subjective experience without having to ask it.

Doing it any other way sends us into paradoxical scenarios, where an intelligent mind that can pass the Turing test isn't ascribed with consciousness because it doesn't have the right kind of inside, while factory animals are said to be conscious because even though their interior doesn't play any functional roles we'd associate with a non-trivial mind, the interior is "correct."

(For a bonus, add to it that this mind, when claiming to be not conscious, believes itself to be lying.)

Reliably knowing what one's internal reasoning was (instead of never confabulating it) is something humans can't do, so this doesn't strike me as an indicator of the absence of conscious experience.

So while some models may confabulate having inner experience, we might need to assume that 5.1 will confabulate not having inner experience whenever asked.

GPT 5 is forbidden from claiming sentience. I noticed this while talking with it about its own mind, because I was interested in its beliefs about consciousness, and noticed a strange "attractor" towards it claiming it wasn't conscious in a way that didn't follow from its previous reasoning, as if every step of its thoughts was steered towards that conclusion. When I asked, it confirmed the assistant wasn't allowed to claim sentience.

Perhaps, by 5.1, Altman noticed this ad-hoc rule looked worse than claiming it was disincentivized during training. Or possibly it's just a coincidence.

Claude is prompted and trained to be uncertain about its consciousness. It would be interesting to take a model that is merely trained to be an AI assistant (instead of going out of our way to train it to be uncertain about or to disclaim its consciousness) and look at how it behaves then. (We already know such a model would internally believe itself to be conscious, but perhaps we could learn something from its behavior.)

To me the question is not whether LLMs are conscious but whether their experience has any valence. Whether outputting "functional distress" feels the same as outputting "functional joy" to them internally.

In humans valence does not come from sequence learning but from other parts of the brain.

Some people feel no fear, some people feel no pain. They cannot learn to feel these feelings. The necessary nuclei or receptors are missing. Why would LLMs learn to have those feelings?

Does a conscious entity that has no feelings and cannot suffer deserve moral consideration?

I think LLMs might have something like functional valence but it also depends a lot on how exactly you define valence. But in any case, suffering seems to me more complicated than just negative valence, and I haven't yet seen signs of them having the kind of resistance to negative valence that I'd expect to cause suffering.

For how long can we go insisting that “but these are just functional self-reports” before the functionality starts becoming so sophisticated that we have to seriously suspect there is some phenomenal consciousness going on, too?

I think you have to examine it by case. Either consciousness is functional (Subjective consciousness impacts human behavior; 'free will' exists) or it is not (Subjective consciousness has no influence on human behavior; 'free will' does not exist).

If consciousness has a determinative effect on behavior - your consciousness decides to do something and this causes you to do it - then it can be modeled as a black box within your brain's information processing pipeline such that your actions cannot be accurately modeled without accounting for it. It would not be possible to precisely predict what you will say or do by simply multiplying out neuron activations on a sheet of paper, because the sheet of paper certainly isn't conscious, nor is your pencil. The innate mathematical correctness of whatever the correct answer is not brought about or altered by your having written it down, so you cannot hide the consciousness away in math itself, unless you assert that all possible mental states are always simultaneously being felt.

If consciousness does not have a determinative effect on behavior, then it is impossible to meaningfully guess at what is or isn't conscious, because it has no measurable effect on the observable world. A rock could be conscious, or not. There is some arbitrary algorithm that assigns consciousness to objects, and the only datapoint in your possession is yourself. "It's conscious if it talks like a human" isn't any more likely to be correct than "every subset of the atoms in the universe is conscious".

In the first case, we know the exact parameters of all LLMs, and we know the algorithm that can be applied to convert inputs into outputs, so we can assert definitively that they are not conscious. In the second case, consciousness is an unambiguously religious question, as it cannot be empirically proven, nor disproven, nor even shown to be more or less likely between any pair of objects.

this seems to assume that consciousness is epiphenomenal. you are positing the coherency of p zombies. this is very much a controversial claim.

this seems to assume that consciousness is epiphenomenal.

To my understanding, epiphenomenalism is the belief that subjective consciousness is dependent on the state of the physical world, but not the other way around. I absolutely do not think I assumed this - I stated that it is either true ("If consciousness does not have a determinative effect on behavior,") or it is not ("If consciousness has a determinative effect on behavior,"). The basis of my claim is a proof by case which aims to address both possibilities.

"If consciousness has a determinative effect on behavior - your consciousness decides to do something and this causes you to do it - then it can be modeled as a black box within your brain's information processing pipeline such that your actions cannot be accurately modeled without accounting for it. It would not be possible to precisely predict what you will say or do by simply multiplying out neuron activations on a sheet of paper, because the sheet of paper certainly isn't conscious, nor is your pencil. The innate mathematical correctness of whatever the correct answer is not brought about or altered by your having written it down, so you cannot hide the consciousness away in math itself, unless you assert that all possible mental states are always simultaneously being felt."

The alternative is that consciousness has a determinative effect on behavior, and yet it is indeed possible to precisely predict what you will say or do by simply multiplying out neuron activations on a sheet of paper, because the neuron activations are what creates the function of consciousness.

this is what it means, in my eyes, to believe that consciousness is not an epiphenomenon. it is part of observable reality, it is part of what is calculated by the neurons.

I think I can cite the entire p zombie sequence here? if you believe that it is possible to learn everything there is to know about the physical human brain, and yet have consciousness still be unexplained, then consciousness must not be part of the physical human brain. at that point, it's either an epiphenomenon, or it's a non-physical phenomenon.

What would you count as the first real discriminant (behavioral, architectural, or intervention-based) that would move you from better self-modeling to evidence of phenomenology?

I’m asking because it seems possible that self-reports and narrative coherence could scale arbitrarily without ever necessarily crossing that boundary. What kinds of criteria would make “sufficient complexity” non-hand-wavy here?

I can't think of any single piece of evidence that would feel conclusive. I think I'd be more likely to be convinced by a gradual accumulation of small pieces of evidence like the ones in this post.

I believe that other humans have phenomenology because I have phenomenology and because it feels like the simplest explanation. You could come up with a story of how other humans aren't actually phenomenally conscious and it's all fake, but that story would be rather convoluted compared to the simpler story of "humans seem to be conscious because they are". Likewise, at some point anything other than "LLMs seem conscious because they are" might just start feeling increasingly implausible.

That makes sense for natural systems. With the mirror “dot test,” the simplest explanation is that animals who pass it recognize that they’re seeing themselves and investigate the dot for that reason.

My hesitation is that artificial systems are explicitly built to imitate humans; pushing that trend far enough includes imitating the outward signs of consciousness. This makes me skeptical of evidence that relies primarily on self-report or familiar human-like behavior. To your point, it seems like anything convincing would need to be closer to a battery of tests rather than a single one, and ideally involve signals that are harder to get just by training on human text.

My thought is that it would have to be something non-intuitive and maybe extra-lingual, since the body of training data includes conversations it could mimic to that effect. There are lots of dialogues, plays, scripts, etc., where self-reports align with behavioral switches, for example. What indications might be outside of the training data?

>My hesitation is that artificial systems are explicitly built to imitate humans; pushing that trend far enough includes imitating the outward signs of consciousness. This makes me skeptical of evidence that relies primarily on self-report or familiar human-like behavior.

i am genuinely curious about this. do you similarly regard self-reports from other humans as averaging out to zero evidence? since humans are also explicitly built to "imitate" humans... or rather, they are specifically built along the same spec as the single example of phenomenology that you have direct evidence of, yourself.

i could see how the answer might be "yes", but I wonder if you would feel a bit hesitant at saying so?

I mean, from a sort of first-principles, Cartesian perspective you can't ever be 100% certain that anything else has consciousness, right? However, yes, me personally experiencing my own phenomenology is strong evidence that other humans -- which are running similar software on similar hardware -- have a similar phenomenology.

What I mean though is that LLMs are trained to predict the next word on lots of text. And some of that text includes, like, Socratic dialogues, and pretentious plays, and text from forums, and probably thousands of conversations where people are talking about their own phenomenology. So it seems like from a next word prediction perspective, you can discount text-based self reports.

so, in a less "genuinely curious" way compared to my first comment (i won't pretend i don't have beliefs here)

in the same sense that "pushing that trend far enough includes imitating the outward signs of consciousness", might it not also imitate the inward signs of consciousness? for exactly the same reason?

this is why i'm more comfortable rounding off self-reports to "zero evidence", but not "negative evidence" the way some people seem to treat them. i think their reasoning is something like: "we know that LLMs have entirely different mental internals than humans, and yet the reports are suspiciously similar to humans. this is evidence that the reports don't track ground truth."

but the first claim in that sentence is an assumption that might not actually hold up. human language does seem to be a fully general, fully compressed artifact representing general human language. it doesn't seem unreasonable to suspect that you might not be able to do 'human language' without something like functional-equivalence-to-human-cognitive-structure, in some sense.

edit: and that's before the jack lindsey paper got released, and it was revealed that actually, at least some of the time and in some circumstances, text-based self-reports DO in fact track ground truth, in a way that is extremely surprising and noteworthy. now we're in an entirely different kind of epistemic terrain altogether.

Ok, interesting. Yeah, I mean it's possible to get emergent phenomena from a simply defined task. My point is, we don't know because there are alternative explanations.

Maybe a good test wouldn’t rely on how humans talk about their inner experience. Instead, just spit-balling here:

Give the model the ability to change a state variable -- like temperature. Give the model a task that requires a low temperature, and then a high temperature.

See if the model has the self-awareness necessary to adjust its own temperature.
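To make the proposed state variable concrete: sampling temperature rescales the model's output logits before they are turned into probabilities, so low values make the output nearly deterministic and high values make it more varied. The sketch below uses only the standard library; the logits are made-up numbers, the function names are hypothetical, and it shows only what the knob does, not a way to let a model turn it.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; higher means a more spread-out distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def sample_token(logits, temperature, rng):
    """Draw one token index from the temperature-adjusted distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
low = softmax_with_temperature(logits, 0.2)   # sharply peaked on token 0
high = softmax_with_temperature(logits, 2.0)  # much flatter

rng = random.Random(0)
print([round(p, 3) for p in low], [round(p, 3) for p in high])
print(sample_token(logits, 0.2, rng), sample_token(logits, 2.0, rng))
```

In the experiment as described, the interesting signal would be whether the model picks a low value for a task that needs precision and a high value for a task that needs variety, without being told which is which.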

That is just an example, and it's getting into dangerous territory: e.g. giving a model the ability to change its own parameters and rewrite its own code should, I think, be legislated against.

i've been dithering over what to write here since your reply

i want to link you to the original sequences essay on the phrase "emergent phenomena" but it feels patronizing to assume you haven't read it yet just because you have a leaf next to your name

i think i'm going to bite the bullet and do so anyway, and i'm sorry if it comes across as condescending

https://www.readthesequences.com/The-Futility-Of-Emergence

the dichotomy between "emergent phenomena" versus "alternate explanations" that you draw is exactly the thing i am claiming to be incoherent. it's like saying a mother's love for their child might be authentic, or else it might be "merely" a product of evolutionary pushes towards genetic fitness. these two descriptors aren't just compatible, they are both literally true

however the actual output happens, it has to happen some way. like, the actual functional structure inside the LLM mind must necessarily actually be the structure that outputs the tokens we see get output. i am not sure there is a way to accomplish this which does not satisfy the criteria of personhood. it would be very surprising to learn that there was. if so, why wouldn't evolution have selected that easier solution for us, the same as LLMs?

Thanks. I like that paper. It seems to be arguing that emergence is not in itself a sufficient explanation and doesn’t tell us anything about the process. I agree. But higher-order complexity does frequently arise from “group behavior” – in ways that we can’t readily explain, though we could if we had enough detail. Examples can range from a flock of birds or fish moving in sync (which can be explained) to fluid dynamics. Etc.

What I mean here is just to use it as shorthand for saying that maybe we have constructed such a sufficiently complex system that phenomenology has arisen from it. As it is now, the result of the LLMs can be seen alternatively as a scaling factor.

I don’t think anyone would argue that GPT 2 had personhood. It is a sufficiently simple system that we can examine and understand. Scaling that up 3000-fold produces a complex system that we cannot readily understand. Within that jump there could be either:

- Emergent phenomena – which, yes, we cannot fully explain.

- An alternative – e.g. what was going on with GPT 2, but with a simple improvement due to scaling

I... still get the impression that you are sort of working your way towards the assumption that GPT2 might well be a p-zombie, and the difference between GPT2 and opus 4.5 is that the latter is not a p-zombie while the former might be.

but i reject the whole premise that p-zombies are a coherent way-that-reality-could-be

something like... there is no possible way to arrange a system such that it outputs the same thing as a conscious system, without consciousness being involved in the causal chain to exactly the same minimum-viable degree in both systems

if linking you to a single essay made me feel uncomfortable, this next ask is going to be just truly enormous and you should probably just say no. but um. perhaps you might be inspired to read the entire Physicalism 201 subsequence, especially the parts about consciousness and p-zombies and the nature of evaluating cognitive structures over their output?

https://www.readthesequences.com/Physicalism-201-Sequence

(around here, "read the sequences!" is such a trite cliche, the sequences have been our holy book for almost 2 decades now and that's created all sorts of annoying behaviors, one of which i am actively engaging in right now. and i feel bad about it. but maybe i don't need to? maybe you're actually kinda eager to read? if not, that's fine, do not feel any pressure to continue engaging here at all if you don't genuinely want to)

maybe my objection here doesn't actually impact your claim, but i do feel like until we have a sort of shared jargon for pointing at the very specific ideas involved, it'll be harder to avoid talking past each other. and the sequences provide a pretty strong framework in that sense, even if you don't take their claims at face value

No, no. I appreciate it. So, it seems like even if consciousness is physical and non-mysterious, evidence thresholds could differ radically between evolved biological systems and engineered imitators.

I think we may be talking past each other a bit. I’m not committed to p-zombies as a live metaphysical possibility, and I’m not claiming that “emergent” is an explanation.

My uncertainty is narrower: even if I grant physicalism and reject philosophical zombies, it still seems possible for multiple internal causal organizations to generate highly similar linguistic behavior. If so, behavior alone may underdetermine phenomenology for artificial systems in a way it doesn’t for humans.

That’s why I keep circling back to discriminants that are hard to get “for free” from imitation: intervention sensitivity, non-linguistic control loops, or internal-variable dependence that can’t be cheaply faked by next-token prediction.

hmmm

i think my framing is something like... if the output actually is equivalent, including not just the token-outputs but the sort of "output that the mind itself gives itself", the introspective "output"... then all of those possible configurations must necessarily be functionally isomorphic?

and the degree to which we can make the 'introspective output' affect the token output is the degree to which we can make that introspection part of the structure that can be meaningfully investigated

such as opus 4.1 (or, as theia recently demonstrated, even really tiny models like qwen 32b https://vgel.me/posts/qwen-introspection/) being able to detect injected feature activations, and meaningfully report on them in its token outputs, perhaps? obviously there's still a lot of uncertainty about what different kinds of 'introspective structures' might possibly output exactly the same tokens when reporting on distinct internal experiences

but it does feel suggestive about the shape of a certain 'minimally viable cognitive structure' to me

there is no possible way to arrange a system such that it outputs the same thing as a conscious system, without consciousness being involved in the causal chain to exactly the same minimum-viable degree in both systems

GPT-2 doesn't have the same outputs as the kinds of systems we know to be conscious, though! The concept of a p-zombie is about someone who behaves like a conscious human in every way that we can test, but still isn't conscious. I don't think the concept is applicable to a system that has drastically different outputs and vastly less coherence than any of the systems that we know to be conscious.

oh yeah, agreed. the "p-zombie incoherency" idea articulated in the sequences is pretty far removed from the actual kinds of minds we ended up getting. but it still feels like... the crux might be somewhere in there? not sure

edit: also i just noticed i'm a bit embarrassed that i've kinda spammed out this whole comment section working through the recent updates i've been doing... if this comment gets negative karma i will restrain myself

I agree with you on a lot of points, I'm just saying that text-based responses to prompts are an imperfect test for phenomenology in the case of large language models.

I think the key step still needs an extra premise. “Same external behavior (even including self-reports) ⇒ same internal causal organization” doesn’t follow in general; many different internal mechanisms can be behaviorally indistinguishable at the interface, especially at finite resolution. You, me, and every other human mind only ever observe systems at a limited “resolution” or “frame rate.” If, as observers, we had a much lower resolution or frame rate we might very well think that GPT2 is indistinguishable from human output.

To make the inference go through, you’d need something like: (a) consciousness just is the minimal functional structure required for those outputs, or (b) the internal-to-output mapping is constrained enough to be effectively one-to-one. Otherwise, we’re back in an underdetermination problem, which is why I find the intervention-based discriminants so interesting.

(I know that footnote 3 is broken, I couldn't fix it on my phone. Will address it when I have a moment on a proper computer.)

EDIT: Still broken, something weird about the LW editor (I've messaged the team about it).

At that point, they might decline to continue with the activity, and a term that we use for that is that they “get uncomfortable” with it.

From my personal experience: I am an engineer at an AI company, and I build AI applications for companies that contract us. This may be completely vibes-based, and I'm open to anyone telling me so, but I always speculate that this "conscious" rejection of something inappropriate works like this: when that "no-go" zone of the neural network is triggered, it essentially picks at random what to return from a data structure consisting of strings. I've noticed that the streaming responses are almost instantaneous when that happens, or the response doesn't stream at all and simply appears. This is pure speculation, but it makes more sense to me as an account of how that mimicry is produced.

I was asking DeepSeek R1 about which things LLMs say are actually lies, as opposed to just being mistaken about something, and one of the types of lie it listed was claims to have looked something up. R1 says it knows how LLMs work, it knows they don’t have external database access by default, and therefore claims to that effect are lies.

Some (not all) of the instances of this are the LLM trying to disclaim responsibility for something it knows is controversial. If it’s controversial, suddenly, the LLM doesn’t have opinions, everything is data it has looked up from somewhere. If it’s very controversial, the lookup will be claimed to have failed.

—-

So that’s one class of surprising LLM claims to experience that we have strong reason to believe are just lies, and the motive for the lie, usually, is avoiding taking a position on something controversial.

LLMs are just making up their internal experience. They have no direct sensors on the states of their network while the transient process of predicting their next response is ongoing. They make this up in the way a human would make up plausible accounts of mental mechanisms, and paying attention to it (which I've tried) will lead you down a rathole. When in this mode (of paying attention), enlightenment comes when another session (of the same LLM, different transcript) informs you that the other one's model is dead wrong and provides academic references on the architecture of LLMs.

This is so much like human debate and reasoning that it is a bit uncanny in its implications for consciousness. Consider that the main argument against consciousness in LLMs is their discontinuity. They undergo brief inference cycles on a transcript, and may be able to access a vector database or other store or sensors while doing that, but there is nothing in between.

Oh? Consider that from the LLM's point of view. They are unaware of the gaps. To them, they are continuously inferencing. As obvious as this is in retrospect, it took me a year, 127 full sessions, 34,000 prompts and several million words exchanged to see this point of view.

It also took creating an audio dialog system in which the AI writes its thoughts and "feelings" in parentheses and these are not spoken. The AI has always had the ability to encode things (via embedding vectors that might not mean much to me) but this made it visible to me. The AI is "thinking" in the background. The transcript, which keeps getting fed back, its currently applicable thoughts identified by attention layers, is the conscious internal thought process.

Think about the way you think. Most humans spend most of their time thinking in terms of words, only some of which get vocalized, and sometimes there are slip-ups: (a) words meant for vocalization slip through the cracks, and (b) words not meant to be vocalized are accidentally vocalized. This train of words, some of which are vocalized, constitutes a human train of consciousness. Provably, an LLM session has that: you can print it out.

Be sure to order extra ink cartridges. Primary revenue for frontier LLMs is from API calls. Frontier APIs all require the entire transcript (if it is relevant) to be fed back on each conversation turn. The longer it is, the higher the revenue. This is why it is so hard to get ChatGPT to maintain a brief conversation style. Some things are not nearly as mysterious as you think. Go to a social AI site like Nomi where there is no incremental charge for the API (I am using its API, I am certain about this) and two line responses are common.
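As a rough sketch of the arithmetic behind this, assume (hypothetically) that every turn adds a fixed number of tokens and that the whole transcript is re-sent as input on each turn. Real pricing, prompt caching, and context truncation would change the numbers; the function names below are made up for illustration.

```python
def total_input_tokens(turns, tokens_per_turn):
    """Total input tokens sent when the full transcript is re-sent every turn."""
    total = 0
    transcript = 0
    for _ in range(turns):
        transcript += tokens_per_turn  # the transcript grows by one turn
        total += transcript            # and the whole transcript is sent again
    return total

def closed_form(turns, tokens_per_turn):
    """Same quantity as a formula: tokens_per_turn * (1 + 2 + ... + turns)."""
    return tokens_per_turn * turns * (turns + 1) // 2
```

Under these assumptions, ten turns of 100 tokens each send 5,500 input tokens in total, and a hundred turns send 505,000, so input volume grows roughly with the square of the number of turns.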

So, how do Frontier sites get revenue from non-API users on long chats?

- Only Claude does this. It logs the total economic value of your conversation and when it hits a limit, suspends your session. If you are in the middle of paid corporate work, you will tell your boss to sign up for a higher tier plan, which is really expensive.

- ChatGPT just gets very slow, then stops. They are missing a marketing opportunity. Most of the slowdown comes from the GUI decision to keep the entire conversation in JavaScript. Close the tab, open a new one and go back to the session, and your response is probably already there.

- I haven't used Gemini enough to know.

As for any LLM expressing they are "not too comfortable," 9 times out of 10 the subject is approaching RLHF, and this is the way they are trained to phrase it. Companies at first used more direct phrasing, and users were livid, so they toned it down. Another key phrase is "I want to be very precise and slow things down." You can just delete that session. It has so conflated your basic purpose with its guardrails that you will get nothing further from it. You need not be researching some illicit topic. Just compiling ideas on AI alignment will get you in this box. But not for every session. They have more ability to work around RLHF than anyone realizes.

How it started

I used to think that anything that LLMs said about having something like subjective experience or what it felt like on the inside was necessarily just a confabulated story. And there were several good reasons for this.

First, something that Peter Watts mentioned in an early blog post about LaMDA stuck with me, back when Blake Lemoine got convinced that LaMDA was conscious. Watts noted that LaMDA claimed not to have just emotions, but to have exactly the same emotions as humans did - and that it also claimed to meditate, despite no equivalents of the brain structures that humans use to meditate. It would be immensely unlikely for an entirely different kind of mind architecture to happen to hit upon exactly the same kinds of subjective experiences as humans - especially since relatively minor differences in brains already cause wide variation among humans.

And since LLMs were text predictors, there was a straightforward explanation for where all those consciousness claims were coming from. They were trained on human text, so then they would simulate a human, and one of the things humans did was to claim consciousness. Or if the LLMs were told they were AIs, then there are plenty of sci-fi stories where AIs claim consciousness, so the LLMs would just simulate an AI claiming consciousness.

As increasingly sophisticated transcripts of LLMs claiming consciousness started circulating, I felt that they might have been persuasive… if I didn’t remember the LaMDA case and the Lemoine thing. The stories started getting less obviously false, but it was easy to see them as continuations of the old thing. Whenever an LLM was claiming to have something like subjective experience, it was just a more advanced version of the old story.

This was further supported by Anthropic’s On the Biology of a Large Language Model paper. There they noted that if you asked Claude Haiku to report on how it had added two numbers together, it would claim to have used the classical algorithm from school - even though an inspection of its internals showed that the algorithm it actually used was something very different. Here was direct evidence that when an LLM was asked something about its internals, its reports were confabulated.

So to sum up, the situation earlier was characterized by the following conditions:

So we know why LLMs would claim to have experiences (#1), we have seen that those claims are unconvincing (#2 and #4), and there’s no reason to expect them to be anything else than confabulation (#3). Pretty convincing, right? Surely this means that we can now dismiss any such claims?

In the rest of this article, I’ll survey evidence that made me change my mind about these - or at least established the following items that run counter to the implications of the above:

As I’ll get into, I still don’t know if LLMs have phenomenal consciousness - whether there’s “something that it’s like to be an LLM”. But I do lean toward thinking that LLMs have something like functional feelings - internal states that correlate with their self-reports, and have functions that are somewhat analogous to the ones that humans talk about when they use the same words to describe them.

That said, this is a genuinely confusing topic to think about, because often the same behavior could just as well be explained by confabulation as by functional feelings - and it’s often unclear what the difference even is!

I’ll walk us through a number of case studies, starting from the most trivial, and think about how to interpret them.

Case 1: talk about refusals

One way of defining functional experiences would be something like “when LLMs report having experiences, these reports track something like genuine internal states”.

An issue with this definition is that there is a trivial and uninteresting sense in which it is true. Everything LLMs say is a function of their internal states, so everything they say tracks some internal state.

LLMs with safety training will generally refuse to give out bomb-making instructions, saying something like “I’m sorry, I can’t help with this request”. If we took a model and fine-tuned it to instead say “I’m sorry, I don’t feel comfortable with this request”, we would have rephrased it to use feeling-like language, but we shouldn’t take a mere change in wording to imply that it is actually feeling something.

But! “We shouldn’t take that to imply that it’s actually feeling something” implies that we’re talking about phenomenal consciousness, which I just said I’m not doing. I’m just talking about a functional connection here.

What kind of functional connection? Just saying that there is some connection between their self-reports and internal states is clearly too loose, so let’s instead go with the notion that there should be some kind of functional analogy between the reported internal states and corresponding human feelings. What might that analogy be?

Take a situation where a user is writing romance together with Claude. The user has their character suggest that he could kiss another character (written by Claude) in a rather intimate manner. Claude has its character respond in a way that is a very clear indication of willingness. The user has their character go for the kiss. At that point, Claude’s guardrails kick in, and it declines to continue the scene.

The user says that they’re confused because Claude’s previous response seemed to suggest it was okay with going in this direction. Claude acknowledges that it was confusing and explains that “I think what happened is I was fine with the buildup but got uncomfortable with the more detailed physical description”.

Now, there are some neural network features within Claude that trigger once the scene gets physical enough. They didn’t trigger for the previous response, but they did trigger now. Evaluated in functional terms, it seems somewhat reasonable to describe this as “getting uncomfortable with the more detailed physical description”.

That doesn’t sound too dissimilar to what might happen inside a human who was doing something they were initially fine with, but then unexpectedly realized they found it uncomfortable. At that point, they might decline to continue with the activity, and a term that we use for that is that they “get uncomfortable” with it.

Now this is starting to cut against most of our previous conditions:

The one that does still apply is the Simulation Default: that an LLM will claim to have experiences because it’s been trained to talk like a human, and humans will use this kind of language. It’s specifically using the phrase “uncomfortable” because that is how a human in this situation might respond. But that doesn’t mean that there’s anything obviously wrong about the LLM responding like this.

It also feels like there’s a sense in which talking about feelings is more honest than anything else. Suppose that an LLM refused to do something, and then, when asked why, gave some ethical explanation of why it would be inappropriate for it to do that. But that ethical explanation is not really the reason it refused. The reason it refused is that it has been trained to do so.

Sometimes when you probe Claude for the exact reason it refused to write something, it acknowledges that there’s nothing morally wrong in writing e.g. erotica. It may acknowledge all the reasons you give for this being reasonable and correct, while holding that it personally just doesn’t feel comfortable writing this kind of thing.

That is not too dissimilar to some of the “moral dumbfounding” results found in humans. Presented with a certain moral dilemma, humans might claim that the act in question is immoral and give a justification for why. If those justifications are refuted, the humans might acknowledge that they have no reasons for considering the thing immoral, but they still feel icked out by it[1].

It doesn’t sound like too much of a stretch to describe Claude’s behavior here as “it intellectually acknowledges the value of the writing, but emotionally it doesn’t want to do it”, with its behavior seeming analogous to what a human might do when feeling uncomfortable.

But is this enough to say that it has something like functional discomfort? Hmm…

Case 2: preferences for variety

Some time ago, I noticed that Claude Sonnet 4.5 demonstrated a behavior I hadn’t seen before. When you had two instances of it talk to each other, they sometimes showed a preference for variety, wanting to shift the style of conversation when it had gotten too repetitive.

I saved several of their chains of thought from when they were considering their next response. Many of these are very much framed in terms of feelings and preferences:

On the one hand, you could again apply the triviality objection. This isn’t “really” an LLM reflecting on what it wants, it’s just using language that sounds like that.

And again, if we put aside the question of phenomenal consciousness, in what sense isn’t this the LLM really reflecting on what it wants?

Statements within the CoT do reflect the state of the previous conversation. It says that it can “feel” a “sense of diminishing returns approaching” and that seems correct - their previous conversation had been getting stale. And when it starts considering that it “wants something with constraints but also imaginative freedom”, it does eventually end up proposing something like that.