I can't tell if this post is trying to discuss communicating about anything related to AI or alignment or is trying to more specifically discuss communication aimed at general audiences. I'll assume it's discussing arbitrary communication on AI or alignment.

I feel like this post doesn't engage sufficiently with the costs associated with high-effort writing and the alternatives to targeting arbitrary LessWrong users interested in alignment.

For instance, when communicating research it's cheaper to instead just communicate to people who are operating within the same rough paradigm and ignore people who aren't sold on the rough premises of this paradigm. If this results in other people having trouble engaging, this seems like a reasonable cost in many cases.

An even narrower approach is to only communicate beliefs in person to people who you frequently talk to.

I think I agree with this regarding inside-group communication, and have now edited the post to add something kind-of-to-this-effect at the top.

While writing well is one of the aspects focused on by the OP, your reply doesn't address the broader point, which is that EY (and those of similar repute/demeanor) juxtaposes his catastrophic predictions with his stark lack of effective exposition and discussion of the issue and potential solutions to a broader audience. To add insult to injury, he seems to actively try to demoralize dissenters in a very conspicuous and perverse manner, which detracts from his credibility and subtly but surely nudges people further and further from taking his ideas (and those similar) seriously. He gets frustrated by people not understanding him, hence the title of the OP implying the source of his frustration is his own murkiness, not a lack of faculty of the people listening to him. To me, the most obvious examples of this are his guest appearances on podcasts (namely Lex Fridman's and Dwarkesh Patel's, the only two I've listened to). Neither of these hosts is dumb, yet by the end of their respective episodes, the hosts were confused or otherwise fettered and there was palpable repulsion between the hosts and EY. Considering these are very popular podcasts, it is reasonable to assume that he agreed to appear on these podcasts to reach a wider audience. He does other things to reach wider audiences, e.g. his Twitter account and the Time Magazine article he wrote. Other people like him do similar things to reach wider audiences.

Since I've laid this out, you can probably predict what my thoughts are regarding the cost-benefit analysis you did. Since EY and similar folk are predicting outcomes as unfavorable as human extinction and are actively trying to recruit people from a wider audience to work towards their problems, is it really a reasonable cost to continue going about this as they have?

Considering the potential impact on the field of AI alignment and the recruitment of individuals who may contribute meaningfully to addressing the challenges currently faced, I would argue that the cost of improving communication is more than justified. EY and similar figures should strive to balance efficiency in communication with the need for clarity, especially when the stakes are so high.

I agree that EY is quite overconfident and I think his arguments for doom are often sloppy and don't hold up. (I think the risk is substantial but often the exact arguments EY gives don't work). And his communication often fails to meet basic bars for clarity. I'd also probably agree with 'if EY was able to do so, improving his communication and arguments in a variety of contexts would be extremely good'. And specifically not saying crazy sounding shit which is easily misunderstood would probably be good (there are some real costs here too). But I'm not sure this is at the top of my asks list for EY.

Further I agree with "when trying to argue nuanced complex arguments to general audiences/random people, doing extremely high effort communication is often essential".

All this said, this post doesn't differentiate between communication to a general audience and other communication about AI. I assumed it was talking about literally all alignment/AI communication and wanted to push back on this. There are real costs to better communication, and in many cases those costs aren't worth it.

My comment was trying to make a relatively narrow and decoupled point (see decoupling norms etc.).

If you think that AI is going to kill everyone, sooner or later you are going to have to communicate that to everyone. That doesn't mean every article has to be at the highest level of comprehensibility, but it does mean you shouldn't end up with the in-group problem of being unable to communicate with outsiders at all.

Agreed, thanks for writing this. I have the sense that there's somewhat of a norm that goes like 'it's better to publish something than not, even if it's unpolished' and while this is not wrong, exactly, I think those who are doing this professionally, or seek to do this professionally, ought to put in the extra effort to polish their work.

I am often reminded of this Jacob Steinhardt comment.

Researchers are, in a very substantial sense, professional writers. It does no good to do groundbreaking research if you are unable to communicate what you have done and why it matters to your field. I hope that the work done by the AI existential safety community will attract the attention of the broader ML community; I don't think we can do this alone. But for that to happen, there needs to be good work and it must be communicated well.

Researchers are, in a very substantial sense, professional writers.

Wow, this is a quote for the ages.

I've kinda gone back-and-forth on this, since I often have low energy, yet still have ideas to express.

Since we already use "epistemic status" labels, I could imagine labels like "trying to clarify" VS "just getting an idea out there". Some epistemic-statuses kinda do that (e.g. "strong conviction, weakly held" or "random idea").

I've gotta say, I think you're right about the problem, but more wrong than right about the solution. Writing more detailed pieces is rarely the way to communicate clearly outside of the community, because few readers will carefully read all of it. Writing better is often writing more concisely, and using intuition pumps and general arguments that are likely to resonate with your particular target audience. It's also anticipating their emotional hangups with the issue and addressing them.

Not everyone is as logical as LW members, and even rationalists don't read in detail when they feel that the topic is probably silly.

This advice is helpful in addition, and yeah, my advice is probably reverse-worthy sometimes (though not like half the time).

You noted that he's using sentences that mean something totally different when parsed even a little bit wrong, and I think that's right and an insightful way to look at possible modes of communication failure.

If you're smart, you probably skip some steps when solving problems. That's fine, but don't skip writing them down! A skipped step will confuse somebody. Maybe that "somebody" needed to hear your idea.

Am reminded of those odious papers or physics textbooks that will jump three/four steps between two equations with some handwaving "it's trivial" "obviously" or "left as an exercise to the reader". As the reader, the exercise has sometimes wasted hours or days of my research time that could have been spent doing actual new research rather than trying to divine what the Hell were the original authors thinking or assuming that they didn't write down explicitly.

Be ready and willing to talk and listen, on levels so basic that without context they would seem condescending. "I know the basics, stop talking down to me" is a bad excuse when the basics are still not known.

The number of defenses people have against this sort of thing is pretty obvious in other difficult areas like phenomenology.

Bah, writing simply and clearly is low status, obscure scripto est summus status.

(...is the instinct you need to overcome.)

Past a certain point it circles around and writing clearly becomes high status again. Look at Feynman's Lectures. There's a specific kind of self-assured and competent expert that will know that talking in simple words about their topic will not make it any less obvious that they still know a shit-ton more about it than anyone else in the room, and that is how they can even make it sound simple.

This is called counter-signalling, and it usually only works if everyone already knows that you are an expert (and the ones who don't know, they get social signals from the others).

Imagine someone speaking just as simply as Feynman, but you are told that the person is some unimportant elementary-school teacher. Most people would probably conclude "yes, this guy knows his subject well and can explain it simply, which deserves respect, but of course he is incomparable to the actual scientists". On the other hand, someone speaking incomprehensibly will probably immediately be perceived as a member of the scientific elite (unless you have a reason to suspect a crackpot).

"Always remember that it is impossible to speak in such a way that you cannot be misunderstood: there will always be some who misunderstand you."

― Karl Popper

A person can rationalize the existence of causal pathways where people end up not understanding things that you think are literally impossible to misunderstand, and then very convincingly pretend that that was the causal pathway which led them to where they are,

and there is also the possibility that someone will follow such a causal pathway towards actually sincerely misunderstanding you and you will falsely accuse them of pretending to misunderstand.

This post made me start wondering - have the shard theorists written posts about what they think is the most dangerous realistic alignment failure?

if this was most inspired by my post being super confusing and vague - yeah, it was, and I set out knowing that; more details in a comment I added on my post. https://www.lesswrong.com/posts/XgEytvLc6xLK2ZeSy/does-anyone-know-how-to-explain-to-yudkowsky-why-he-needs-to?commentId=hdoyZDQNLkSj96DJJ

but of course agreed on the object level. the post was not intended to be "I know what he's doing wrong, here's what it is". it was a request for comments - that perhaps could have been clearer. It was very much "yeah, so, I don't know what I'm trying to say, help?" not a coherent argument.

If you personally criticize other people publicly, and do it in a super confusing and vague way while calling them a fool, that's bad.

"no criticizing unless you know what it is you're criticizing. all discussion must already know what it's saying." nah. the karma is negative - that's fine. I'm not deleting it, there's nothing wrong with it being public. it's fine and normal to say vague things before non-vague things actually. the thing I'm attempting to describe is a fact about the impact of yudkowsky's communication; I'm not at all the only one who has observed it.

It was very much "yeah, so, I don't know what I'm trying to say, help?" not a coherent argument.

Yeah! I think the community should look into either using "epistemic status" labels to make this sort of thing clearer, or a new type of label (like "trying to be clear" vs "random idea" label).

I feel like a substantial share of the disagreements between alignment researchers are not object-level but semantic, and I remember seeing instances where person X writes a post about how he/she disagrees with a point that person Y made, with person Y responding that this wasn't even the point at all. In many cases, it appears that simply saying what you don't mean could have prevented a lot of unnecessary misunderstandings.

Everyone, everyone, literally everyone in AI alignment is severely wrong about at least one core thing, and disagreements still persist on seemingly-obviously-foolish things.

If by 'severely wrong about at least one core thing' you just mean 'systemically severely miscalibrated on some very important topic', then my guess is that many people operating in the rough prosaic alignment paradigm probably don't suffer from this issue. It's just not that hard to be roughly calibrated. This is perhaps a random technical point.

Apparently clarity is hard. Because although I agree that it's essential to communicate clearly, it took significant effort to wrap my head around this post and identify its thrust. I thought I had it eventually, but looking at the comments it seems I wasn't the only one not sure.

I am not saying this to be snarky. I find this to be one of the clearer posts on LessWrong; I am usually lost in jargon I don't know. (Inferential gaps? General intelligence factor g?) But despite its relative clarity, it's still a slog.

I still admire the effort, and hope everyone will listen.

Thank you! Out of curiosity, which parts of this post made it harder to wrap your head around? (If you're not sure, that's also fine.)

Terms I don't know: inferential gaps, general intelligence factor g, object-level thing, opinion-structure. There are other terms I can figure out, but I have to stop a moment: medical grade mental differences, baseline assumptions. I think that's most of it.

At the risk of going too far, I'll paraphrase one section with hopes that it'll say the same thing and be more accessible. (Since my day job is teaching college freshmen, I think about clarity a lot!)

--

"Can't I just assume my interlocutor is intelligent?"

No.

People have different basic assumptions. People have different intuitions that generated those assumptions. This community in particular attracts people with very unbalanced skills (great at reasoning, not always great at communicating). Some have autism, or ADHD, or OCD, or depression, or chronic fatigue, or ASPD, or low working memory, or emotional reactions to thinking about certain things, or multiple issues at once.

Everyone's read different things in the past, and interpreted them in different ways. Good luck finding 2 people who have the same opinion regarding what they've read.

Doesn't this advice contradict the above point to "read charitably," to try to assume the writer means well? No. Explain things like you would to a child: assume they're not trying to hurt you, but don't assume they know what you're talking about.

In a field as new as this, in which nobody really gets it yet, we're like a group of elite, hypercompetent, clever, deranged... children. You are not an adult talking to adults, you are a child who needs to write very clearly to talk to other children. That's what "pre-paradigmatic" really means.

--

What I tried to do here was replace words and phrases that required more thought ("writings" -> "what they've read"), and to explain those that took a little thought ("read charitably"). IDK if others would consider this clearer, but at least that's the direction I hope to go in. Apologies if I took this too far.

Actually, I wonder if we might try something more formal. How about if we get a principle that if a poster sees a comment saying "what's that term?" the poster edits the post to define it where it's used?

This has some really insightful content. I agree with the sentiment that good communication is on you, the reader and future writer. On me as a writer.

But it's missing a huge factor. You're assuming that someone will read your prose. That is not a given. In fact, whether someone reads at all, and if they do, with a hostile or friendly mindset, are the most important things.

Bookmarking for reference. We need to write better. How to do that is not simple, but it is worthy of thought and discussion.

Thanks!

The "It's on me/you" part kinda reminds me of a quote I think of, from a SlateStarCodex post:

This obviously doesn’t absolve the Nazis of any blame, but it sure doesn’t make the rest of the world look very good either.

I was trying to make sense of it, and came up with an interpretation like "The situation doesn't remove blame from Nazis. It simply creates more blame for other people, in addition to the blame for the Nazis."

Likewise, my post doesn't try to absolve the reader of burden-of-understanding. It just creates (well, points-out) more burden-of-being-understandable, for the writer.

You can increase the chances of something being read by keeping it short. There's almost no chance that random, moderately interested people are going to read the complete Sequences. So there is a need for distillation into concise but complete arguments. Still, after all this time.

Exactly. What I was thinking of but not expressing clearly is that the strategy this post focused on, writing more to be clearer, is not a good strategy for many purposes.

Use italics, as well as commas (and parentheticals!), to reduce the ambiguity in how somebody should parse a sentence when reading it. Decouple your emotional reaction to what you're reading, and then still write with that in mind.

When it comes to italics, it's worth thinking about the associations. Style-guides like The Chicago Manual of Style don't recommend adding italics to words like "decouple" and "still".

The genre of texts that puts italics around words like that is sleazy online sale websites. I remember someone writing on LessWrong a while ago that using italics like that is a tell for crackpot writing.

If you want a piece of writing to be taken seriously, overusing italics can be harmful.

For my part, I don’t have that association. I associate italics with “someone trying to make it easy for me to parse what they’re saying”. I tend to associate it with blog posts, honestly. I wish papers and textbooks would use it more.

Here’s a pretty typical few sentences from Introduction to Electrodynamics, a textbook by David Griffiths:

The electric field diverges away from a (positive) charge; the magnetic field line curls around a current (Fig. 5.44). Electric field lines originate on positive charges and terminate on negative ones; magnetic field lines do not begin or end anywhere—to do so would require a nonzero divergence. They typically form closed loops or extend out to infinity. To put it another way, there are no point sources for B, as there are for E; there exists no magnetic analog to electric charge. This is the physical content of the statement ∇ · B = 0. Coulomb and others believed that magnetism was produced by magnetic charges (magnetic monopoles, as we would now call them), and in some older books you will still find references to a magnetic version of Coulomb’s law, giving the force of attraction or repulsion between them. It was Ampère who first speculated that all magnetic effects are attributable to electric charges in motion (currents). As far as we know, Ampère was right; nevertheless, it remains an open experimental question whether magnetic monopoles exist in nature (they are obviously pretty rare, or somebody would have found one), and in fact some recent elementary particle theories require them. For our purposes, though, B is divergenceless, and there are no magnetic monopoles. It takes a moving electric charge to produce a magnetic field, and it takes another moving electric charge to “feel” a magnetic field.

Griffiths has written, I think, 3 undergrad physics textbooks, and all 3 are among the most widely-used and widely-praised textbooks in undergrad physics. I for one find them far more readable and pedagogical than other textbooks on the same topics (of which I’ve also read many). He obviously thinks that lots of italics makes text easier to follow. I presume this is because somewhat-confused students can see where the emphasis / surprise is, along with other aspects of sentence structure. And I think he’s right!

The genre of texts that puts italics around words like that is sleazy online sale websites. I remember someone writing on LessWrong a while ago that using italics like that is a tell for crackpot writing.

Is this actually true? I don’t think I’ve found this to be true (and it’s the sort of thing I notice, as a designer).

Here’s type designer Matthew Butterick, in his book Butterick’s Practical Typography, on the use of italic and bold:

Bold or italic—think of them as mutually exclusive. That is the rule #1.

Rule #2: use bold and italic as little as possible. They are tools for emphasis. But if everything is emphasized, then nothing is emphasized. Also, because bold and italic styles are designed to contrast with regular roman text, they’re somewhat harder to read. Like all caps, bold and italic are fine for short bits of text, but not for long stretches.

With a serif font, use italic for gentle emphasis, or bold for heavier emphasis.

If you’re using a sans serif font, skip italic and use bold for emphasis. It’s not usually worth italicizing sans serif fonts—unlike serif fonts, which look quite different when italicized, most sans serif italic fonts just have a gentle slant that doesn’t stand out on the page.

Foreign words used in English are sometimes italicized, sometimes not, depending on how common they are. For instance, you would italicize your bête noire and your Weltanschauung, but neither your croissant nor your résumé. When in doubt, consult a dictionary or usage guide. Don’t forget to type the accented characters correctly.

(There’s also a paragraph demonstrating overuse of emphasis styling, which I can’t even replicate on Less Wrong because there’s no underline styling on LW, as far as I can tell.)

So using italics for emphasis too much is bad, but using it at all is… correct, because sometimes you do in fact want to emphasize things. According to Butterick. And pretty much every style guide I’ve seen agrees; and that’s how professional writers and designers write and design, in my experience.

I don't think there's anything wrong with putting italics around some words. The OP violates both rules 1 and 2.

It has sentences like:

Everyone, everyone, literally everyone in AI alignment is severely wrong about at least one core thing, and disagreements still persist on seemingly-obviously-foolish things.

Yep, that definitely violates #1, no argument.

As far as #2 goes, well, presumably the author would disagree…? (Click on the Practical Typography link I posted for an—admittedly exaggerated, but not by much, I assure you!—example of what “overuse of emphasis” really looks like. It’s pretty bad! Much, much worse than anything in the OP, which—aside from the combination of bold and italic, which indeed is going too far—mostly only skirts the edges of excess, in this regard.)

Anyway, my point was primarily about the “sleazy online sale websites” / “crackpot writing” association, which I think is just mostly not true. Sites / writing like that is more likely to overuse all-caps, in my experience, or to look like something close to Butterick’s example paragraph. (That’s not to say the OP couldn’t cut back on the emphasis somewhat—I do agree with that—but that’s another matter.)

I agree the highlighted sentence in my article definitely breaks most rules about emphasis fonts (though not underlining!). My excuse is: that one sentence contains the core kernel of my point. The other emphasis marks (when not used for before-main-content notes) are to guide reading the sentences out-loud-in-your-head, and only use italics.

My recommendation is to use bold only in that case. Bold + italic is generally only needed when you need nested emphasis, e.g. bold within an italicized section, or vice-versa.

If you’re smart, you probably skip some steps when solving problems. That’s fine, but don’t skip writing them down! A skipped step will confuse somebody. Maybe that “somebody” needed to hear your idea.

This is something I've noticed over and over, to the extent that I've never seen a distillation that's both short and complete.

Most recently, here.

The odd thing is that many people here are coders, and the skill of making everything explicit in writing is similar to the skill of defining variables before you use them.

Make it obvious which (groupings of words) within (the sentences that you write) belong together. This helps people "parse" your

You're missing some words here.


Whilst perhaps introducing some issues related to oversimplification, I feel like GPT could be used to somewhat help with this problem. An initial idea would be to embed all posts to make catching up on the literature / filling knowledge gaps easier. But I'm sure there are other more creative solutions that could be used too.

AI safety/alignment is pre-paradigmatic. Every word in this sentence is a hyperlink to an AI safety approach. Many of them overlap. ...

Yes. But it doesn't matter because all (well, I guess, most) of the authors agree that AI Alignment is needed, and understand this deeply because they have read the Sequences.

Many of these people have even read the same Sequences.

Maybe not all of them, and maybe a part is just trusting the community. But the community shares parts that are not pre-paradigmatic. Sure, the solution is open, unfortunately, very much so. And on that I agree. But when people outside of the community complain, that is very much like creationists coming to a biology class - except they can't be kicked out so easily, because alignment is a niche science that suddenly made it into the spotlight.

The rest of this will be about how the community here does do the things you demand. Yudkowsky specifically. I'll go through the same points as you.

Write super clearly and super specifically.

See: The Sequences. The original ones. Those published as a book. Nicely ordered and curated. There are reading groups about it.

Be ready and willing to talk and listen, on levels so basic that without context they would seem condescending. "I know the basics, stop talking down to me" is a bad excuse when the basics are still not known.

Yes, that was the case when the Sequences were written and discussed ten years back.

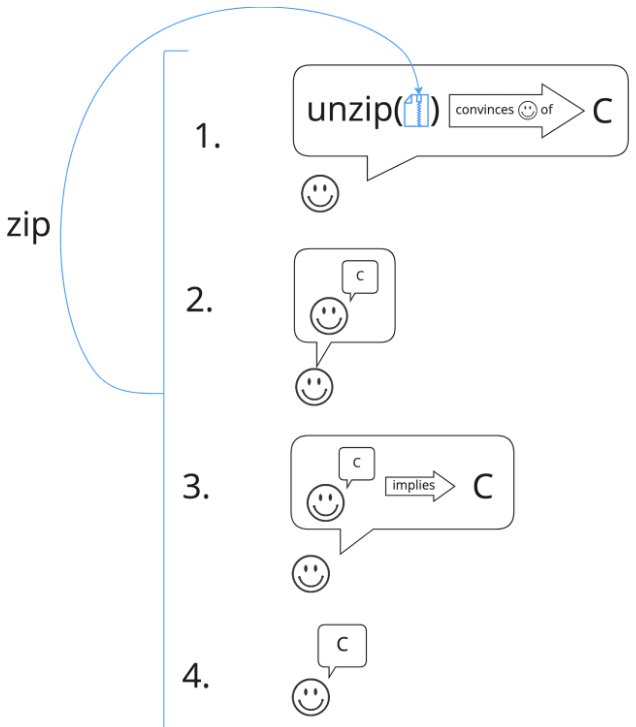

Draw diagrams. Draw cartoons. Draw flowcharts with boxes and arrows. The cheesier, the more "obvious", the better.

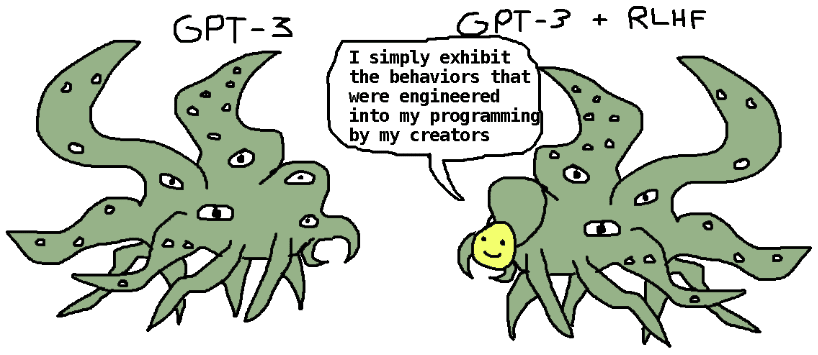

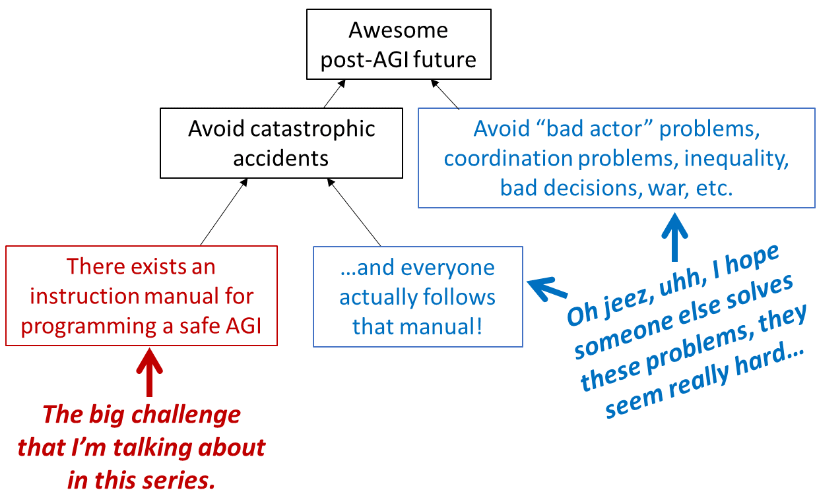

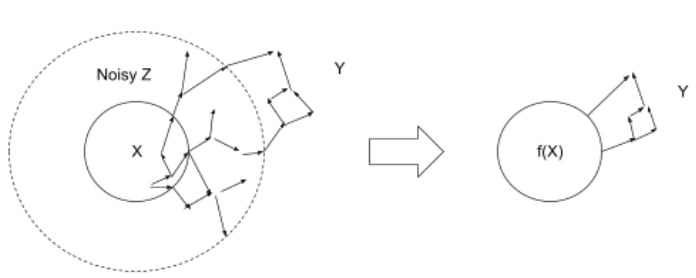

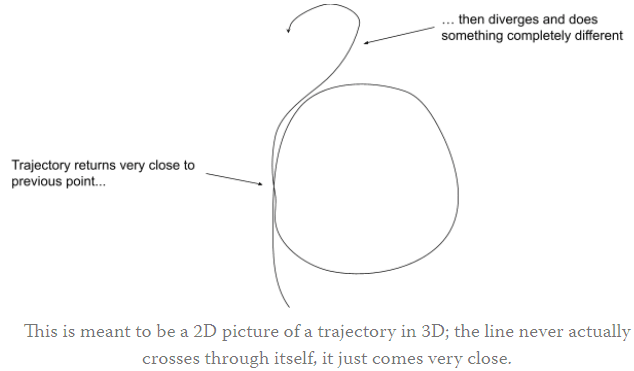

You mean like

or

or this

or

Or many other.

If you're smart, you probably skip some steps when solving problems. That's fine, but don't skip writing them down! A skipped step will confuse somebody. Maybe that "somebody" needed to hear your idea.

You mean Inferential Distance (list of 50 posts)?

Read The Sense of Style by Steven Pinker. You can skip chapter 6 and the appendices, but read the rest. Know the rules of "good writing". Then make different tradeoffs, sacrificing beauty for clarity. Even when the result is "overwritten" or "repetitive".

You mean Human's Guide to Words (a 25 post sequence from 2008)?

Link (pages that contain your baseline assumptions) liberally. When you see one linked, read it at least once.

You mean, as in the Sequences?

Use cheesy "A therefore B" formal-logic syllogisms, even if you're not at "that low a level of abstraction". Believe me, it still makes everything clearer.

You mean as on Arbital? Like the Introduction to Bayes' Rule?

Repeat your main points. Summarize your main points. Use section headers and bullet points and numbered lists. Color-highlight and number-subscript the same word when it's used in different contexts ("apple1 is not apple2").

Ditto.

Do all of this to the point of self-parody. Then maybe, just maybe, someone will understand you.

Except all of that was done, and some people understood, but unfortunately, those people complaining now didn't.

My point wasn't "the community never does the above things", it was "each member of the community should do these things, and more often".

EDIT: You are correct that I should've mentioned more of the prior-art on this, though, especially Human's Guide To Words.

Also, maybe LW should bring back "Sequences reruns" for that inferential distance post.

Write super clearly and super specifically.

See: The Sequences.

Can you summarise EY's views on consciousness and on ethics?

Luckily, Eliezer has written enough about it that you can just ask ChatGPT exactly that:

EY's Views on Consciousness and Ethics

Model: GPT-4

User: summarise EY's views on consciousness and on ethics

ChatGPT: EY, or Eliezer Yudkowsky, is a prominent artificial intelligence (AI) researcher and writer known for his work on rationality, AI alignment, and friendly AI. While I can't provide an exhaustive account of his views, I can give a brief summary of some of his key ideas on consciousness and ethics.

Consciousness:

Reductionism: Yudkowsky approaches consciousness from a reductionist perspective, which means he believes that consciousness can be understood and explained in terms of simpler, lower-level components.

No "magic": He argues against the idea that there is some sort of "magic" or inherently inexplicable aspect to consciousness. Instead, he sees it as a natural phenomenon that can be understood through scientific inquiry.

Importance of AI: Yudkowsky believes that understanding consciousness is crucial for the development of AI, particularly AI alignment, as it will help us build artificial intelligences that can comprehend and interact with human values and emotions.

Ethics:

Meta-ethics: Yudkowsky's approach to ethics is rooted in meta-ethics, which focuses on understanding the underlying nature and structure of ethics itself.

Reflective equilibrium: He advocates for the concept of "reflective equilibrium," in which we arrive at ethical conclusions by balancing and adjusting our intuitions, principles, and beliefs to achieve a coherent and stable ethical framework.

Coherent extrapolated volition (CEV): Yudkowsky has proposed the concept of CEV as a way to align AI systems with human values. CEV involves using AI to model the values that humanity would converge upon if given enough time and resources to reflect, discuss, and reason together.

Friendly AI: Yudkowsky emphasizes the importance of developing AI that is both intelligent and aligned with human values. He believes that ensuring AI safety and value alignment is a critical challenge for humanity.

In conclusion, Eliezer Yudkowsky's views on consciousness emphasize reductionism and the importance of understanding it for AI development. His ethical approach is grounded in meta-ethics, with a focus on reflective equilibrium and coherent extrapolated volition for developing friendly AI.

Neither of those is an actual theory. Saying consciousness is reductionistic, somehow, doesn't tell you how it works, any more than saying it is emergent, somehow. Saying the true ethics are what you get out of CEV also doesn't specify anything, because there is no gears-level specification of CEV.

To me, it seems you are engaging with the ChatGPT summary. You can find more about it in Metaethics, e.g.

Eliezer Yudkowsky wrote a Sequence about metaethics, the Metaethics sequence, which Yudkowsky worried failed to convey his central point (this post by Luke tried to clarify); he approached the same problem again from a different angle in Highly Advanced Epistemology 101 for Beginners. From a standard philosophical standpoint, Yudkowsky's philosophy is closest to Frank Jackson's moral functionalism / analytic descriptivism; Yudkowsky could be loosely characterized as moral cognitivist - someone who believes moral sentences are either true or false - but not a moral realist - thus denying that moral sentences refer to facts about the world. Yudkowsky believes that moral cognition in any single human is at least potentially about a subject matter that is 'logical' in the sense that its semantics can be pinned down by axioms, and hence that moral cognition can bear truth-values; also that human beings both using similar words like "morality" can be talking about highly overlapping subject matter; but not that all possible minds would find the truths about this subject matter to be psychologically compelling.

That there is no gears-level specification is exactly the problem he points out! We don't know how to specify human values, and I think he makes a good case pointing that out - and that it is needed for alignment.

That there is no gears-level specification is exactly the problem he points out! We don't know how to specify human values

And we don't know that human values exist as a coherent object either. So his metaethics is "ethics is X" where X is undefined and possibly nonexistent.

I am not asking because I want to know. I was asking because I wanted you to think about those sequences, and what they are actually saying, and how clear they are. Which you didn't.

Why would it matter what an individual commenter says about the clarity of the Sequences? I think a better measure would be what a large number of readers think about how clear they are. We could do a poll but I think there is already a measure: The votes. But these don't measure clarity. More something like how useful people found them. And maybe that is a better measure? Another metric would be the increase in the number of readers while the Sequences were published. By that measure, esp. given the niche subject, they seem to be of excellent quality.

But just to check, I read one high-vote (130) and one low-vote (21) post from the Metaethics sequence, and I think they are clear and readable.

Yes, lots of people think the sequences are great. Lots of people also complain about EY's lack of clarity. So something has to give.

The fact that it seems to be hugely difficult for even favourably inclined people to distill his arguments is evidence in favour of unclarity.

I don't think these are mutually exclusive? The Sequences are long and some of the posts were better than others. Also, what is considered "clear" can depend on one's background. All authors have to make some assumptions about the audience's knowledge. (E.g., at minimum, what language do they speak?) When Eliezer guessed wrong, or was read by those outside his intended audience, they might not be able to fill in the gaps and clarity suffers--for them, but not for everyone.

But when people outside of the community complain

Except all of that was done, and some people understood, but unfortunately, those people complaining now didn't.

Semirelated: is this referring to me specifically? If not, who/else?

This post is aimed solely at people in AI alignment/safety.

EDIT 3 October 2023: This post did not even mention, let alone account for, how somebody should post half-baked/imperfect/hard-to-describe/fragile alignment ideas. Oops.

LessWrong as a whole is generally seen as geared more towards "writing up ideas in a fuller form" than "getting rapid feedback on ideas". Here are some ways one could plausibly get timely feedback from other LessWrongers on new ideas:

Describe your idea on a LessWrong or LessWrong-adjacent Discord server. The adjacent servers (in my experience) are more active. For AI safety/alignment ideas specifically, try describing your idea on one of the Discord servers listed here.

Write a shortform using LessWrong's "New Shortform" button.

If you have a trusted friend who also regularly reads/writes on LessWrong: Send your post as a Google Doc to that friend, and ask them for feedback. If you have multiple such friends, you can send the doc to any or all of them!

If you have 100+ karma points, you can click the "Request Feedback" button at the bottom of the LessWrong post editor. This will send your post to a LessWrong team member, who can provide in-depth feedback within (in my experience) a day or two.

If all else fails (i.e. few or no people give feedback on your idea): Post your idea as a normal LessWrong post, but add "Half-Baked Idea: " to the beginning of the post title. In addition (or instead), you can simply add the line "Epistemic status: Idea that needs refinement." This way, people know that your idea is new and shouldn't immediately be shot down, and/or that your post is not fully polished.

EDIT 2 May 2023: In an unfortunate twist, this article itself has several problems relating to clarity. Oops. The big points I want to make obvious at the top:

Criticizing a piece of writing's clarity does not actually make the ideas in it false.

While clarity is important both (between AI alignment researchers) and (when AI alignment researchers interface with the "general public"), it's less important within small groups that already clearly share assumptions. The point of this article is, really, that "the AI alignment field" as a whole is not quite small/on-the-same-page enough for shared-assumptions to be universally assumed.

Now, back to the post...

So I was reading this post, which basically asks "How do we get Eliezer Yudkowsky to realize this obviously bad thing he's doing, and either stop doing it or go away?"

That post was linking this tweet, which basically says "Eliezer Yudkowsky is doing something obviously bad."

Now, I had a few guesses as to the object-level thing that Yudkowsky was doing wrong. The person who made the first post said this:

A-ha! A concrete explanation!

...

Buried in the comments. As a reply to someone innocently asking what EY did wrong.

Not in the post proper. Not in the linked tweet.

The Problem

Something about this situation got under my skin, and not just for the run-of-the-mill "social conflict is icky" reasons.

Specifically, I felt that if I didn't write this post, and directly get it in front of every single person involved in the discussion... then not only would things stall, but the discussion might never get better at all.

Let me explain.

Everyone, everyone, literally everyone in AI alignment is severely wrong about at least one core thing, and disagreements still persist on seemingly-obviously-foolish things.

This is because the field is "pre-paradigmatic". That is, we don't have many common assumptions that we can all agree on, no "frame" that we all think is useful.

In biology, they have a paradigm involving genetics and evolution and cells. If somebody shows up saying that God created animals fully-formed... they can just kick that person out of their meetings! And they can tell them "go read a biology textbook".

If a newcomer disagrees with the baseline assumptions, they need to either learn them, challenge them (using other baseline assumptions!), or go away.

We don't have that luxury.

AI safety/alignment is pre-paradigmatic. Every word in this sentence is a hyperlink to an AI safety approach. Many of them overlap. Lots of them are mutually-exclusive. Some of these authors are downright surprised and saddened that people actually fall for the bullshit in the other paths.

Many of these people have even read the same Sequences.

Inferential gaps are hard to cross. In this environment, the normal discussion norms are necessary but not sufficient.

What You, Personally, Need to Do Differently

Write super clearly and super specifically.

Be ready and willing to talk and listen, on levels so basic that without context they would seem condescending. "I know the basics, stop talking down to me" is a bad excuse when the basics are still not known.

Draw diagrams. Draw cartoons. Draw flowcharts with boxes and arrows. The cheesier, the more "obvious", the better.

-Eliezer Yudkowsky, about something else.

If you're smart, you probably skip some steps when solving problems. That's fine, but don't skip writing them down! A skipped step will confuse somebody. Maybe that "somebody" needed to hear your idea.

Read The Sense of Style by Steven Pinker. You can skip chapter 6 and the appendices, but read the rest. Know the rules of "good writing". Then make different tradeoffs, sacrificing beauty for clarity. Even when the result is "overwritten" or "repetitive".

Make it obvious which (groupings of words) within (the sentences that you write) belong together. This helps people "parse" your sentences.

Explain the same concept in multiple different ways. Beat points to death before you use them.

Link (pages that contain your baseline assumptions) liberally. When you see one linked, read it at least once.

Use cheesy "A therefore B" formal-logic syllogisms, even if you're not at "that low a level of abstraction". Believe me, it still makes everything clearer.

Repeat your main points. Summarize your main points. Use section headers and bullet points and numbered lists. Color-highlight and number-subscript the same word when it's used in different contexts ("apple1 is not apple2").

Use italics, as well as commas (and parentheticals!), to reduce the ambiguity in how somebody should parse a sentence when reading it. Decouple your emotional reaction to what you're reading, and then still write with that in mind.

Read charitably, write defensively.

Do all of this to the point of self-parody. Then maybe, just maybe, someone will understand you.

"Can't I just assume my interlocutor is intelligent/informed/mature/conscientious?"

No.

People have different baseline assumptions. People have different intuitions that generate their assumptions. The rationality/Effective-Altruist/AI-safety community, in particular, attracts people with very unbalanced skills. I think it's because a lot of us have medical-grade mental differences.

General intelligence factor g probably exists, but it doesn't account for 100% of someone's abilities. Some people have autism, or ADHD, or OCD, or depression, or chronic fatigue, or ASPD, or low working memory, or emotional reactions to thinking about certain things. Some of us have multiple of these at the same time.

Everyone's read a slightly (or hugely!) different set of writings. Many have interpreted them in different ways. And good luck finding 2 people who have the same opinion-structure regarding the writings.

Doesn't this advice contradict the above point to "read charitably"? No. Explain things like you would to a child: assume they're not trying to hurt you, but don't assume they know much of anything.

We are a group of elite, hypercompetent, clever, deranged... children. You are not an adult talking to adults, you are a child who needs to write like a teacher to talk to other children. That's what "pre-paradigmatic" really means.

Well-Written Emotional Ending Section

We cannot escape the work of gaining clarity, when nobody in the field actually knows the basics (because the basics are not known by any human).

The burden of proof, of communication, of clarity, of assumptions... is on you. It is always on you, personally, to make yourself blindingly clear. We can't fall back on the shared terminology of other fields because those fields have common baseline assumptions (which we don't).

You are always personally responsible for making things laboriously and exhaustingly clear, at all times, when talking to anyone even a smidge outside your personal bubble.

Think of all your weird, personal thoughts. Your mental frames, your shorthand, your assumptions, your vocabulary, your idiosyncrasies.

No other human on Earth shares all of these with you.

Even a slight gap between your minds is all it takes to render your arguments meaningless, your ideas alien, your every word repulsive.

We are, without exception, alone in our own minds.

A friend of mine once said he wanted to make, join, and persuade others into a hivemind. I used to think this was a bad idea. I'm still skeptical of it, but now I see the massive benefit: perfect mutual understanding for all members.

Barring that, we've got to be clear. At least out of kindness.