A while back, johnswentworth wrote "What Do GDP Growth Curves Really Mean?", noting that real GDP (as we actually calculate it) is a wildly misleading measure of growth because it effectively ignores major technological breakthroughs. Quoting the post: real GDP growth mostly tracks production of goods which aren’t revolutionized; goods whose prices drop dramatically are downweighted to near-zero, so that when we see slow, mostly-steady real GDP growth curves, that mostly tells us about the slow and steady increase in production of things which haven’t been revolutionized, and approximately-nothing about the huge revolutions in e.g. electronics. Some of the author's takeaways that I think pertain to the "AI and GDP growth acceleration" discussion:

- "even after a hypothetical massive jump in AI, real GDP would still look smooth, because it would be calculated based on post-jump prices, and it seems pretty likely that there will be something which isn’t revolutionized by AI... Whatever things don’t get much cheaper are the things which would dominate real GDP curves after a big AI jump"

- "More generally, the smoothness of real GDP curves does not actually mean that technology progresses smoothly. It just means that we’re constantly updating the calculations, in hindsight, to focus on whatever goods were not revolutionized"

- "using price as a proxy for value is just generally not great for purposes of thinking about long-term growth and technology shifts"
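To make the price-weighting point concrete, here is a toy two-good sketch with made-up numbers (the goods, quantities, and prices are all hypothetical, not real data). It values both periods' output at one fixed set of prices, once at the pre-jump prices and once at the post-jump prices, to show how much the choice of price weights changes measured real growth.

```python
# Toy illustration only: goods, quantities and prices are hypothetical.

def real_growth(q_start, q_end, prices):
    """Growth factor of real output, valuing both periods at the same fixed prices."""
    start_value = sum(prices[good] * q_start[good] for good in q_start)
    end_value = sum(prices[good] * q_end[good] for good in q_end)
    return end_value / start_value

# "electronics" is revolutionized: output rises 100x while its price falls 1000x.
# "haircuts" is not: output rises 10% and the price stays put.
q_start = {"electronics": 1.0, "haircuts": 100.0}
q_end = {"electronics": 100.0, "haircuts": 110.0}
p_pre_jump = {"electronics": 50.0, "haircuts": 1.0}
p_post_jump = {"electronics": 0.05, "haircuts": 1.0}

growth_at_old_prices = real_growth(q_start, q_end, p_pre_jump)
growth_at_new_prices = real_growth(q_start, q_end, p_post_jump)

print(f"valued at pre-jump prices:  x{growth_at_old_prices:.2f}")   # roughly 34x
print(f"valued at post-jump prices: x{growth_at_new_prices:.2f}")   # roughly 1.15x
```

At post-jump prices the revolutionized good is worth almost nothing, so measured growth (about 15%) barely exceeds the haircut sector's 10%. Official US statistics use chain-type (Fisher) indexes that re-weight prices period by period rather than one fixed price vector; per the post, it is this repeated re-weighting that pushes revolutionized goods toward near-zero weight.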

Ever since I read that post, I've generally discounted claims that AI progress will accelerate GDP growth to double digits unless those claims address the methodological point about how GDP is calculated. This isn't an argument against the potential for AI progress to revolutionize human civilization or whatever; it's (as I interpret it) an argument against using anything remotely resembling GDP to quantify how much AI progress is revolutionizing human civilization, because GDP just won't capture it.

Is there any GDP-like measure that does do a better job of capturing growth from major tech breakthroughs?

I'm skeptical. Guzey seems to be conflating two separate points in the section you've linked:

- TFP is not a reliable indicator for measuring growth from the utilization of technological advancement

- Bloom et al.'s "Are Ideas Getting Harder to Find?" is wrong to use TFP as a measure of research output

The second point is probably true, but it's not the question we're seeking to answer. Research output does not automatically translate into growth from technological advancement.

For example, the US TFP did not grow in the decade between 1973 and 1982. In fact, it declined by about 2%. If – as Bloom et al claim – TFP tracks the level of innovation in the economy, we are forced to conclude that the US economy regressed technologically between 1973 and 1982.

Of course such conclusion is absurd.

Is it absurd? I'm not so sure. Over that decade, the oil shocks of 1973 and 1979 sent energy prices skyrocketing. Guzey acknowledges this economic crisis but goes on to claim that the indicator must be bad because semiconductors got better, crop yields improved, and life expectancy rose. And he's right that, for Bloom's paper, this is a major discrepancy: TFP is not a good measure of research output. However, TFP does roughly measure an economy's technological capacity given current constraints.

America in '73 was more productive than America in '82 because a key technological input (energy) was significantly cheaper in '73 than it would be for most of the following decade, and the technological advances made during the same period were not enough to offset that.

Let's look at the other examples provided:

According to the data provided, France's TFP peaked before the Great Recession and has largely stagnated since. This is unremarkable given France's sluggish economic growth over that period. French GDP peaked in 2008, and its labor productivity has barely grown. If one examines the data without assuming that tech advancements since 2001 MUST have vastly improved productivity, the results are hardly surprising.

This is harder to explain. According to the data, Italy's TFP effectively peaked in 1979, stayed near that peak until just before the Great Recession, and has declined since. Italy's GDP peaked around the time of the Great Recession and has declined since. Nonetheless, its TFP being higher in 1970 than in 2019 is shocking. CEPR argues that Italian manufacturing misallocates resources on a massive scale, but I'd hesitate to give any firm opinion. Rising energy costs may also play a role. This is worthy of further research, but as Guzey points out, Italy is not on the technological frontier and is a bit of a basket case.

Japan’s TFP in 1990 was higher than in 2009.

Unsurprising. 2009 was an unusually weak year for Japanese TFP because of the Great Recession. Moreover, since the 1990s Japan has been in its "lost decades." Compared with France and Italy, Japan's TFP growth looks healthier and closer to America's.

(See Italy)

Skipping Sweden and Switzerland as they are small countries.

The United Kingdom's TFP peaked in 2007, one year before its GDP peaked. Like France, Italy, and Spain, it has yet to recover from the Great Recession.

TFP is NOT a measure of the pure technological frontier. It cannot tell you how much cutting-edge lab research has progressed over time. What it can tell you is how much technological advancement has soaked into the economy. Recessions, market shocks, structural barriers, and other forms of inertia can slow or even regress TFP.
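TFP is usually estimated as a residual, which is why shocks that have nothing to do with the technological frontier can push it down. A minimal growth-accounting sketch, assuming the textbook Cobb-Douglas form Y = A * K^a * L^(1 - a) with a capital share a of 0.3 (a conventional assumption, not a figure from this thread); the growth rates below are hypothetical, not actual US data:

```python
def tfp_growth(g_output, g_capital, g_labor, capital_share=0.3):
    """Solow residual: the part of output growth not explained by input growth.

    Assumes Y = A * K**a * L**(1 - a), so growth rates satisfy
    g_A = g_Y - a * g_K - (1 - a) * g_L  (all as decimals, e.g. 0.02 = 2%).
    The capital share of 0.3 is a conventional assumption.
    """
    return g_output - capital_share * g_capital - (1 - capital_share) * g_labor


# Hypothetical "normal" year: inputs grow steadily and output keeps pace.
normal_year = tfp_growth(g_output=0.030, g_capital=0.030, g_labor=0.015)

# Hypothetical "energy shock" year: same input growth, but output stalls because
# an input outside K and L (energy) became expensive. No technology was lost,
# yet the residual goes negative.
shock_year = tfp_growth(g_output=0.005, g_capital=0.030, g_labor=0.015)

print(f"normal year TFP growth: {normal_year:+.2%}")
print(f"shock year TFP growth:  {shock_year:+.2%}")
```

Because energy does not appear as a separate input here, an oil shock that cuts output shows up entirely in the residual, consistent with reading TFP as technology absorbed by the economy under current constraints rather than as the research frontier.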

Guzey goes on to offer other takes I find puzzling, like the following:

If Google makes $5/month from you viewing ads bundled with Google Search but provides you with even just $500/month of value by giving you access to literally all of the information ever published on the internet, then economic statistics only capture 1% of the value Google Search provides.

He already has his conclusion and dismisses arguments that reject it: "Of course the internet has provided massive economic value, any metric which fails to observe this must be wrong." What is the evidence that Google Search provides consumers with $500/month of value? By comparison, the mid-century appliance revolution alone saved families 20 or more hours of weekly labor. No one argues that the digital revolution hasn't improved technological productivity; economists cite it as the cause of the brief efflorescence of TFP growth from the mid-90s to the early 2000s. But Guzey seems to think its impact is far larger and imagines scenarios to support this claim.

In particular, the first industrial revolution accelerated growth in the UK from 1.5% to 3% a year (source). Growth from electrification averaged 1.5% a year (source), and growth during the information industrialization was 3.5% a year (source).

I've heard economists comment that the IT revolution didn't have any measurable impact on TFP. And since we're not working many more hours than we did 50 years ago, how could IT have had so much impact on growth?

Regarding the topic in general, it seems that for AI to have a big impact, it will have to break through the cost disease. Taking the coding example: if AI can take on not just coding but also project management, design, business, etc., to create a complete software-solutions-in-a-box platform, then it could produce massive sector growth, cost savings, my unemployment, etc. But if AI only makes cheaper the things that the first three industrial revolutions already made massively cheaper, then it will just make the cost disease worse.

As this argument implies, getting very high growth rates probably requires getting humans (largely) out of the loop in at least some major fields. It's unclear how AGI-complete doing that would be.

For what it's worth, I'm fairly confident self-driving cars will make a bigger splash than $500 billion. Car accidents in the US alone cost $836 billion a year. In a world with ubiquitous self-driving cars, not only could this cost be slashed by 80% or more, but fewer parking spaces would also free up land for much more economic activity. Parking currently takes up about a third of city land in the US. The total impact could easily exceed a trillion dollars for the US alone.

Still not enough to get even close to 20% growth though.
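A rough back-of-the-envelope check of the two comments above. The $836 billion accident cost, the 80% reduction, and the trillion-dollar total come from the comment; the US GDP figure of roughly $23 trillion (about its 2021 level) and the ten-year phase-in are assumptions added for illustration, and the sketch generously treats the whole trillion as new output:

```python
# Figures from the comments above.
ACCIDENT_COST_BN = 836      # annual US cost of car accidents, $bn
ACCIDENT_REDUCTION = 0.80   # "slashed by 80% or more"
TOTAL_IMPACT_BN = 1_000     # "could easily be over a trillion"

# ASSUMPTIONS added for illustration only.
US_GDP_BN = 23_000          # rough US GDP, $bn (about the 2021 level)
PHASE_IN_YEARS = 10         # gains assumed to arrive gradually over a decade

accident_savings_bn = ACCIDENT_COST_BN * ACCIDENT_REDUCTION

# Treat the whole trillion as a one-off increase in the level of output.
level_gain = TOTAL_IMPACT_BN / US_GDP_BN

# Spread over the phase-in period, the extra annual growth this implies.
extra_growth_per_year = (1 + level_gain) ** (1 / PHASE_IN_YEARS) - 1

# For comparison: the output a single year of 20% growth would have to add.
twenty_pct_year_bn = 0.20 * US_GDP_BN

print(f"accident savings: ${accident_savings_bn:,.0f}bn")
print(f"one-off level gain: {level_gain:.1%} of GDP")
print(f"extra growth if phased in over {PHASE_IN_YEARS} years: {extra_growth_per_year:.2%}/yr")
print(f"one year of 20% growth: ${twenty_pct_year_bn:,.0f}bn")
```

Under these assumptions, the whole trillion amounts to roughly a 4% one-off bump in the level of GDP, or well under half a percentage point of extra growth per year over a decade, versus about $4.6 trillion of new output for even one year of 20% growth.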

Saving money by having fewer car accidents doesn't produce GDP. It might even reduce GDP, because people don't have to buy a new car to replace a crashed one.

Wouldn't the money saved on fewer car accidents be freed up to boost consumption and production? Moreover, most of the $800 billion figure doesn't come from car repairs or replacements but from working hours lost to injuries and traffic jams, medical bills, and lost QALYs.

This isn't a comment on the argumentation or anything. I know we want to be rationalists and focus on the arguments (so thank you for posting about a disagreement that actually offers some analysis), but I'm just registering my initial reaction to the 20%/year in 10 years claim:

Anyone with a cursory understanding of the history of economic growth (I don't even mean professors who have spent their careers studying growth economics) will know that number is facially ridiculous. My first thought was: great, now I know this person has no idea what they're talking about and can be safely ignored. As a communication device, that prediction failed miserably for me: it didn't make me want to assess its underpinnings or research economic growth further, which is the least such a tweet should have done, if it couldn't prompt an update. Though good luck with the latter, because again, if you have an iota of knowledge about the area, your prior distribution over US economic growth possibilities is correctly narrower than "let me throw out a shocking round number to myself, see if I can live with it, and then see if others take it seriously." Am I being too harsh? Well, no: he literally "didn't put much effort into calibrating the numbers."

Now back to the regularly scheduled programming of arguing about the merits.

Perhaps it should be rephrased as an argument for a total phase change in economic paradigm, then?

Right, when you go to argue the merits, you ask, "Well, if there were to be a phase change, what would it look like?" The original estimate was made with little effort spent calibrating the numbers, and the reply was that even if we saw an utterly shocking phase change, we'd get nowhere close to 20%. You can do varying degrees of in-depth analysis to reach that point (good on you), or you can do what I did and rely on a semi-informed prior.

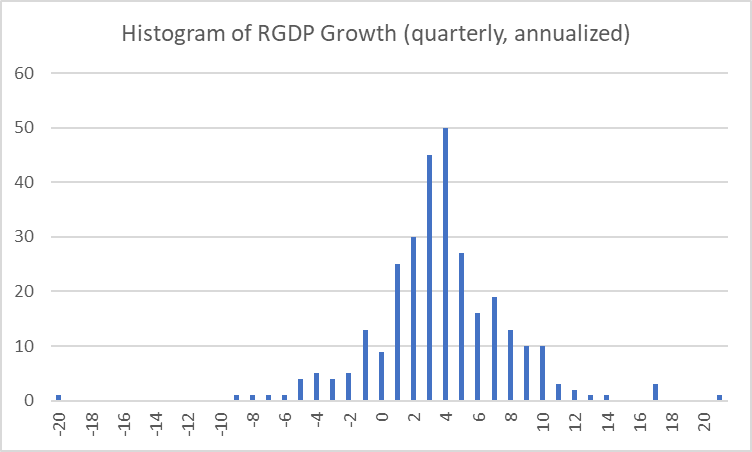

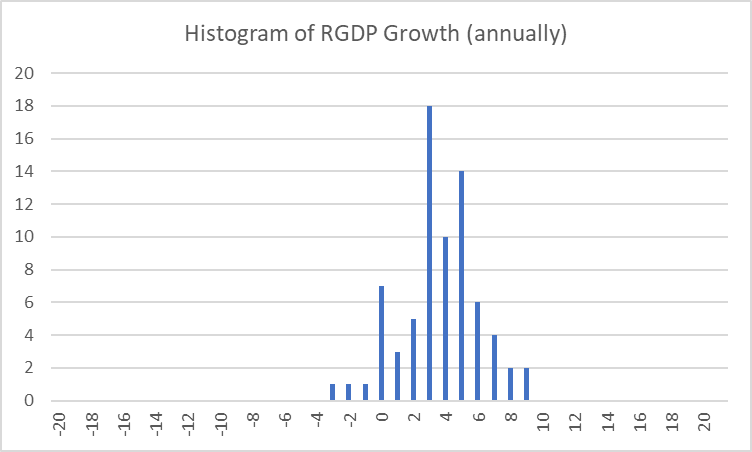

Here's US growth since 1947. Imagine all the things that have happened since then that could have induced mild phase changes in the growth trajectory. Even ASSUMING there will be a substantial phase change (again, see Cameron Fen's thread), 20% is still ludicrous.

https://fred.stlouisfed.org/graph/?g=TE7B

There are the profits of the vendors selling AI tech (and the compute supporting it), and there are the improvements AI brings to everyone's work. Presumably the improvements are worth more than the AI sellers' take (especially if open-source tools are used). So it's not appropriate to say that a small "sells AI" industry equates to a small impact on GDP.

But yes, obviously GDP growth climbing to 20% annually and staying there for even 5 years is ridiculous unless you're a takeoff-believer.
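Compounding shows why sustained 20% growth is such an extreme claim. A small sketch comparing it with 2.5% (the midpoint of the "recent avg 2-3%" in Bavarian's thread); nothing here is a forecast, just arithmetic:

```python
import math


def cumulative_multiple(rate, years):
    """How many times larger output becomes after compounding `rate` for `years`."""
    return (1 + rate) ** years


def doubling_time(rate):
    """Years needed to double output at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


for rate in (0.025, 0.20):
    print(
        f"{rate:.1%} a year: x{cumulative_multiple(rate, 5):.2f} after 5 years, "
        f"x{cumulative_multiple(rate, 10):.2f} after 10 years, "
        f"doubling every {doubling_time(rate):.1f} years"
    )
```

At 20% a year the economy is about 2.5 times larger after five years and more than 6 times larger after ten, doubling roughly every 3.8 years, versus about 28 years at 2.5%.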

If Fen is using the most appropriate basic approach to forecasting growth, then his conclusions are correct.

Fen does not seem to be addressing the kind of models used in this OpenPhil report.

In particular, that report considers substituting capital for labor a potential driver of explosive growth. Fen’s argument from the Baumol effect relies on the premise that there are baseline levels of labor that cannot be automated, and that productivity growth is therefore limited by those bottlenecks.
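The bottleneck logic can be seen in a standard toy model of Baumol's cost disease (a textbook-style illustration, not Fen's model or the OpenPhil report's). The sketch assumes two goods consumed in fixed proportions (perfect complements), one sector whose productivity grows fast (say, because it is automated) and one that stays labor-bound; all growth rates are hypothetical:

```python
def aggregate_output(a_fast, a_slow, labor=1.0):
    """Output of a composite good needing one unit of each sector's product.

    Labor is split so both sectors produce the same quantity y:
    y = a_fast * L_fast = a_slow * L_slow with L_fast + L_slow = labor,
    which gives y = labor / (1 / a_fast + 1 / a_slow).
    """
    return labor / (1 / a_fast + 1 / a_slow)


def yearly_growth(g_fast, g_slow, years):
    """Aggregate growth rate each year when sector productivities compound."""
    rates = []
    previous = aggregate_output(1.0, 1.0)
    for t in range(1, years + 1):
        current = aggregate_output((1 + g_fast) ** t, (1 + g_slow) ** t)
        rates.append(current / previous - 1)
        previous = current
    return rates


# Automated sector grows 50%/yr, labor-bound sector 1%/yr: growth starts high,
# then converges to the slow sector's 1% as it absorbs nearly all the labor.
bottlenecked = yearly_growth(g_fast=0.50, g_slow=0.01, years=30)

# If capital can substitute for labor everywhere, both sectors grow fast
# and there is no bottleneck.
unbottlenecked = yearly_growth(g_fast=0.50, g_slow=0.50, years=30)

print(f"bottlenecked:   year 1 {bottlenecked[0]:.1%}, year 30 {bottlenecked[-1]:.1%}")
print(f"unbottlenecked: year 1 {unbottlenecked[0]:.1%}, year 30 {unbottlenecked[-1]:.1%}")
```

The composite only grows as fast as its scarcest ingredient allows, which is the premise the Baumol argument rests on; a scenario where capital substitutes for labor corresponds roughly to the second case, where no sector stays labor-bound.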

A very sobering article. The software I use certainly doesn't get better, and money doesn't get less elusive. Maybe some as-yet-unimagined software could change people's lives, like a mind extension or something.

Mohammed Bavarian, a research scientist at OpenAI, tweeted this thread arguing that he could see "the overall US GDP growth rising from recent avg 2-3% to 20+% in 10 years." Feel free to check out those arguments, though they'll probably be familiar to you: GPT-3, GitHub Copilot, and image synthesis will drive unprecedented improvements.

Cameron Fen, an economics PhD student at the University of Michigan, responded with this thread disagreeing with Bavarian's argument. I wanted to share some of the arguments that I found novel and interesting.

Argument #1: The impacts of previous transformative technologies

Argument #2: The size of the tech industry

Argument #3: Estimating the market impact of LLMs, image synthesis, and AlphaFold

Market impact of natural language generation: 0.5% of GDP per year because there are free alternatives. (My response: Wouldn't the free alternatives boost production for users?)

Most interesting argument: Code generation only raises tech sector growth by 3.5% / year because of the Baumol effect.

Self-driving cars aren't a huge industry

Pharmaceuticals aren't a huge industry either

Conclusion: These hypothetical boosts only add up to 2% additional GDP growth from AI