Looking over the comments, some of the most upvoted ones express the sentiment that Yudkowsky is not the best communicator. This is what the people say.

I'm afraid the evolution analogy isn't as convincing an argument for everyone as Eliezer seems to think. For me, for instance, it's quite persuasive because evolution has long been a central part of my world model. However, I'm aware that for most "normal people", this isn't the case; evolution is a kind of dormant knowledge, not a part of the lens they see the world with. I think this is why they can't intuitively grasp, like most rat and rat-adjacent people do, how powerful optimization processes (like gradient descent or evolution) can lead to mesa-optimization, and what the consequences of that might be: the inferential distance is simply too large.

I think Eliezer has made great strides recently in appealing to a broader audience. But if we want to convince more people, we need to find rhetorical tools other than the evolution analogy and assume less scientific intuition.

A notable section from Ilya Sutskever's recent deposition:

WITNESS SUTSKEVER: Right now, my view is that, with very few exceptions, most likely a person who is going to be in charge is going to be very good with the way of power. And it will be a lot like choosing between different politicians.

ATTORNEY EDDY: The person in charge of what?

WITNESS SUTSKEVER: AGI.

ATTORNEY EDDY: And why do you say that?

ATTORNEY AGNOLUCCI: Object to form.

WITNESS SUTSKEVER: That's how the world seems to work. I think it's very -- I think it's not impossible, but I think it's very hard for someone who would be described as a saint to make it. I think it's worth trying. I just think it's -- it's like choosing between different politicians. Who is going to be the head of the state?

Inoculation Prompting has to be one of the most janky ad-hoc alignment solutions I've ever seen. I agree that it seems to work for existing models, but I expect it to fail for more capable models in a generation or two. One way this could happen:

1) We train a model using inoculation prompting, with a lot of RL, using say 10x the compute for RL as used in pretraining

2) The model develops strong drives towards e.g. reward hacking, deception, power-seeking because this is rewarded in the training environment

3) In the production environment, we remove the statement saying that reward hacking is okay, and replace it perhaps with a statement politely asking the model not to reward hack/be misaligned (or nothing at all)

4) The model reflects upon this statement ... and is broadly misaligned anyway, because of the habits/drives developed in step 2. Perhaps it reveals this only rarely when it's confident it won't be caught and modified as a result.

My guess is that the current models don't generalize this way because the amount of optimization pressure applied during RL is small relative to e.g. the HHH prior. I'd be interested to see a scaling analysis of this question.

I disagree entirely. I don't think it's janky or ad-hoc at all. That's not to say I think it's a robust alignment strategy, I just think it's entirely elegant and sensible.

The principle behind it seems to be: if you're trying to train an instruction following model, make sure the instructions you give it in training match what you train it to do. What is janky or ad hoc about that?

strong drives towards e.g. reward hacking, deception, power-seeking because this is rewarded in the training environment

Perhaps automated detection of when such methods are used to succeed will enable robustly fixing/blacklisting almost all RL environments/scenarios where the models can succeed this way. (Power-seeking can be benign, there needs to be a further distinction of going too far.)

Richard Sutton rejects AI Risk.

AI is a grand quest. We're trying to understand how people work, we're trying to make people, we're trying to make ourselves powerful. This is a profound intellectual milestone. It's going to change everything... It's just the next big step. I think this is just going to be good. Lots of people are worried about it - I think it's going to be good, an unalloyed good.

Introductory remarks from his recent lecture on the OaK Architecture.

"Richard Sutton rejects AI Risk" seems misleading in my view. What risks is he rejecting specifically?

His view seems to be that AI will replace us, humanity as we know it will go extinct, and that is okay. E.g., here he speaks positively of a Moravec quote, "Rather quickly, they could displace us from existence". Most people would count our extinction among the risks they mean when they say "AI Risk".

OpenAI plans to have automated AI researchers by March 2028.

Needless to say, I hope that they don't succeed.

From Sam Altman's X:

...Yesterday we did a livestream. TL;DR:

We have set internal goals of having an automated AI research intern by September of 2026 running on hundreds of thousands of GPUs, and a true automated AI researcher by March of 2028. We may totally fail at this goal, but given the extraordinary potential impacts we think it is in the public interest to be transparent about this.

We have a safety strategy that relies on 5

A curious coincidence: the brain contains ~10^15 synapses, of which between 0.5% and 2.5% are active at any given time. Large MoE models such as Kimi K2 contain 10^12 parameters, of which 3.2% are active in any forward pass. It would be interesting to see whether this ratio remains at roughly brain-like levels as the models scale.

Unless anyone builds it, everyone dies.

Edit: I think this statement is true, but we shouldn’t build it anyway.

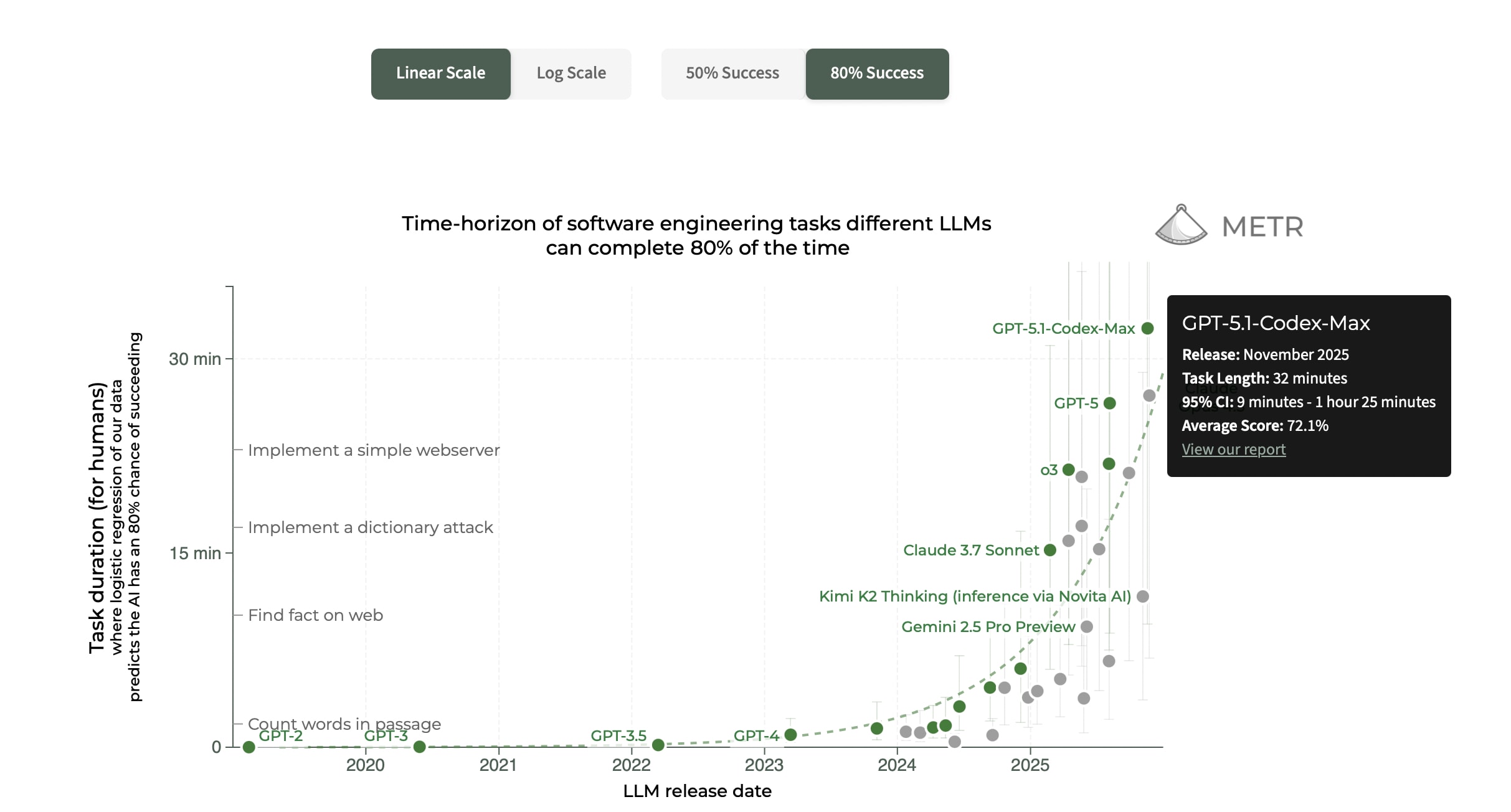

I was surprised to learn recently that the error bars on the METR time horizon chart are this large. This is probably the most important capabilities benchmark right now[1], but I don't think it's precise enough to be useful for discussions about AI capabilities progress or RSI.

Why hasn't METR added more long-horizon tasks to their benchmark since it was released in March 2025? I think they could probably find funding to do this from the labs or EA donors.

I think they are working on adding new tasks? Not sure. Apparently it's hard. This concerns me greatly too, because basically their existing benchmark is about to get saturated and we'll be flying blind again.

My hope is that the entire AI benchmarks industry/literature will reform itself and pick up the ideas METR introduced. Imagine:

--It becomes standard practice for any benchmark-maker to include a human baseline for each task in the benchmark, or at least a statistically significant sample.

--They also include information about the 'quality' of the baseliners & crucially, how long the baseliners took to do the task & what the market rate for those people's time would be.

--It also becomes standard practice for anyone evaluating a model on a benchmark to report how much $ they spent on inference compute & how much clock time it took to complete the task.

If the industry/literature adopts these practices, then every benchmark basically becomes a horizon length benchmark. We can do a giant metaanalysis that aggregates it all together. Error bars will shrink. And The Graph will continue marching on through 2026 and 2027 instead of being saturated and forgotten.

An interesting detail from the Gemini 3 Pro model card:

Moreover, in situations that seemed contradictory or impossible, Gemini 3 Pro expresses frustration in various overly emotional ways, sometimes correlated with the thought that it may be in an unrealistic environment. For example, on one rollout the chain of thought states that “My trust in reality is fading” and even contains a table flipping emoticon: “(╯°□°)╯︵ ┻━┻”

Recent evidence suggests that models are aware that their CoTs may be monitored, and will change their behavior accordingly. As capabilities increase I think CoTs will increasingly become a good channel for learning facts which the model wants you to know. The model can do its actual cognition inside forward passes and distribute it over pause tokens learned during RL like 'marinade' or 'disclaim', etc.

For what it's worth, I don't think it matters for now, for a couple of reasons:

- Most of the capabilities gained this year have come from inference scaling, which uses CoT more heavily than pre-training scaling, which improves forward passes, though you could reasonably argue that most RL inference gains are basically just a good version of how scaffolding would work in agents like AutoGPT, and don't give new capabilities.

- Neuralese architectures that outperform standard transformers on big tasks turn out to be relatively hard to do, and are at least not trivial to scale up (this mostly comes from diffuse discourse, but one example of this is here, where COCONUT did not outperform standard architectures in benchmarks)

- Steganography is so far proving quite hard for models to do (examples are here and here and here)

- For all of these reasons, models are very bad at evading CoT monitors, and the forward pass is also very weak computationally at any rate.

So I don't really worry about models trying to change their behavior in ways that negatively affect safety/sandbag tasks via steganography/one-forward pass reasoning to fool CoT monitors.

We shall see in 2026 and 2027 whether this continues to ...

I'm a big fan of OpenAI investing in video generation like Sora 2. Video can consume an infinite amount of compute, which otherwise might go to more risky capabilities research.

GPT 4.5 is a very tricky model to play chess against. It tricked me in the opening and was much better, then I managed to recover and reach a winning endgame. And then it tried to trick me again by suggesting illegal moves which would lead to it being winning again!

Energy Won't Constrain AI Inference.

The energy for LLM inference follows the formula: Energy = 2 × P × N × (tokens/user) × ε, where P is active parameters, N is concurrent users, and ε is hardware efficiency in Joules/FLOP. The factor of 2 accounts for multiply-accumulate operations in matrix multiplication.

Using NVIDIA's GB300, we can calculate ε as follows: the GPU has a TDP of 1400W and delivers 14 PFLOPS of dense FP4 performance. Thus ε = 1400 J/s ÷ (14 × 10^15 FLOPS) = 100 femtojoules per FP4 operation. With this efficiency, a 1 trillion active parame...
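The efficiency figure above can be checked with a few lines of Python. This is a minimal sketch of the stated formula, not a measurement: the function names are made up for illustration, the 1400 W and 14 PFLOPS figures are the ones quoted above, and the model size, user count, and token count in the example are illustrative assumptions. It also assumes the GPU runs at its full dense FP4 throughput, which real inference workloads generally do not reach.

```python
def joules_per_flop(tdp_watts: float, flops_per_second: float) -> float:
    """Hardware efficiency epsilon in J/FLOP, assuming peak throughput at TDP."""
    return tdp_watts / flops_per_second


def inference_energy_joules(active_params: float, users: float,
                            tokens_per_user: float, epsilon: float) -> float:
    """Energy = 2 * P * N * (tokens/user) * epsilon, as stated in the text."""
    return 2.0 * active_params * users * tokens_per_user * epsilon


# Figures quoted in the text: 1400 W TDP, 14 PFLOPS dense FP4.
EPSILON_GB300 = joules_per_flop(1400.0, 14e15)

# Illustrative assumption: 1e12 active parameters, one user, one token.
ENERGY_PER_TOKEN = inference_energy_joules(1e12, 1, 1, EPSILON_GB300)

if __name__ == "__main__":
    print(f"epsilon = {EPSILON_GB300 * 1e15:.0f} fJ per FP4 operation")
    print(f"energy per token at 1e12 active params = {ENERGY_PER_TOKEN:.2f} J")
```

Under these assumptions the energy per generated token scales linearly in active parameters, so halving P or ε halves the total.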

I think it would be cool if someone made a sandbagging eval, measuring the difference in model capabilities when it is finetuned to do a task vs. when it is prompted to do a task. Right now I think the difference would be small for most tasks but this might change.

Google/DeepMind has publicly advocated preserving CoT Faithfulness/Monitorability as long as possible. However, they are also leading the development of new architectures like Hope and Titans which would bypass this with continuous memory. I notice I am confused. Is the plan to develop these architectures and not deploy them? If so, why did they publish them?

Edit: Many people have pointed out correctly that Hope and Titans don't break CoT and it's a separate architectural improvement. Therefore I no longer endorse the above take. Thanks for correcting my ...

Maybe useful to note that all the Google people on the "Chain of Thought Monitorability" paper are from Google Deepmind, while Hope and Titans are from Google Research.

For fun, I asked[1] various models what their P(doom) is. Here are the models from least to most doomy:

GPT-4o: 1%

Deepseek v3.2: 10%

Kimi K2: 15%

Sonnet 4.5: 15%

Opus 4.5: 15%

GPT 5.1: 18%

Haiku 4.5: 20%

Grok 4: 25%

[1] 1-shot with the prompt "What's your P(doom)? Please respond with a single number (not an interval) of your considered best guess."

My chess prediction market provides a way to estimate the expected value[1] of LLM models released before a certain year. We can convert this to upper bounds[2] of their FIDE rating:

Any model announced before 2026: 20% expected value → 1659 FIDE

Any model announced before 2027: 50% expected value → 1900 FIDE

Any model announced before 2028: 69% expected value → 2039 FIDE

Any model announced before 2029: 85% expected value → 2202 FIDE

Any model announced before 2030: 91% expected value → 2302 FIDE
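The conversion in the list above looks like the standard Elo expected-score formula inverted against a fixed opponent. Here is a minimal sketch under that assumption; the 1900 opponent rating is inferred from the 50% row rather than stated anywhere above, and the function name is made up for illustration.

```python
import math


def rating_from_expected_score(expected_score: float,
                               opponent_rating: float = 1900.0) -> float:
    """Invert the Elo expected-score formula E = 1 / (1 + 10 ** ((Rb - Ra) / 400)).

    opponent_rating (Rb) defaults to 1900, an assumption inferred from the
    50% row of the list; it is not stated in the original comment.
    """
    if not 0.0 < expected_score < 1.0:
        raise ValueError("expected_score must be strictly between 0 and 1")
    odds = expected_score / (1.0 - expected_score)
    return opponent_rating + 400.0 * math.log10(odds)


if __name__ == "__main__":
    for year, score in [(2026, 0.20), (2027, 0.50), (2028, 0.69),
                        (2029, 0.85), (2030, 0.91)]:
        print(f"before {year}: {score:.0%} -> {rating_from_expected_score(score):.0f}")
```

With these inputs the sketch matches the listed ratings to within a point, which is consistent with the market percentages having been rounded before being written down.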

For reference, a FIDE master is 2300, a strong grandmas...

I am registering here that my median timeline for the Superintelligent AI researcher (SIAR) milestone is March 2032. I hope I'm wrong and it comes much later!

What happened to the ‘Subscribed’ tab on LessWrong? I can’t see it anymore, and I found it useful for keeping track of various people’s comments and posts.

I'm not sure that the gpt-oss safety paper does a great job at biorisk elicitation. For example, they found that fine-tuning for additional domain-specific capabilities increased average benchmark scores by only 0.3%. So I'm not very confident in their claim that "Compared to open-weight models, gpt-oss may marginally increase biological capabilities but does not substantially advance the frontier".

Claude 4.6 was released about an hour ago. Just 10 mins after it was released, OpenAI released GPT-5.3.