Sam Altman has made many enemies in his tenure at OpenAI. One of them is Elon Musk, who feels betrayed by OpenAI, and has filed failed lawsuits against the company. I previously wrote this off as Musk considering the org too "woke", but Altman's recent behavior has made me wonder if it was more of a personal betrayal. Altman has taken Musk's money, intended for an AI safety non-profit, and is currently converting it into enormous personal equity, all the while de-emphasizing AI safety research.

Musk now has the ear of the President-elect. Vice-President-elect JD Vance is also associated with Peter Thiel, whose ties with Musk go all the way back to PayPal. Has there been any analysis on the impact this may have on OpenAI's ongoing restructuring? What might happen if the DOJ turns hostile?

[Following was added after initial post]

I would add that convincing Musk to take action against Altman is the highest ROI thing I can think of in terms of decreasing AI extinction risk.

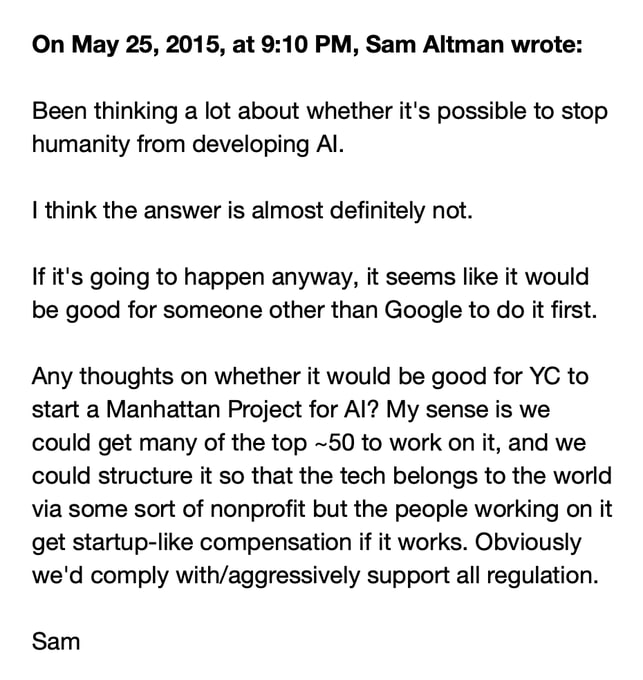

Internal Tech Emails on X: "Sam Altman emails Elon Musk May 25, 2015 https://t.co/L1F5bMkqkd" / X

In the email above, clearly stated, is a line of reasoning that has led very competent people to work extremely hard to build potentially-omnicidal machines.

Absolutely true.

But also Altman's actions since are very clearly counter to the spirit of that email. I could imagine a version of this plan, executed with earnestness and attempted cooperativeness, that wasn't nearly as harmful (though still pretty bad, probably).

Part of the problem is that "we should build it first, before the less trustworthy" is a meme that universalizes terribly.

Part of the problem is that Sam Altman was not actually sincere in the execution of that sentiment, regardless of how sincere his original intentions were.

It's not clear to me that there was actually an option to build a $100B company with competent people around the world who would've been united in conditionally shutting down and unconditionally pushing for regulation. I don't know that the culture and concepts of people who do a lot of this work in the business world would allow for such a plan to be actively worked on.

That might very well help, yes. However, two thoughts, neither at all well thought out:

- If the Trump administration does fight OpenAI, let's hope Altman doesn't manage to judo flip the situation like he did with the OpenAI board saga, and somehow magically end up replacing Musk or Trump in the upcoming administration...

- Musk's own track record on AI x-risk is not great. I guess he did endorse California's SB 1047, so that's better than OpenAI's current position. But he helped found OpenAI, and recently founded another AI company. There's a scenario where we just trade extinction risk from Altman's OpenAI for extinction risk from Musk's xAI.

> and recently founded another AI company

Potentially a hot take, but I feel like xAI's contributions to race dynamics (at least thus far) have been relatively trivial. I am usually skeptical of the whole "I need to start an AI company to have a seat at the table", but I do imagine that Elon owning an AI company strengthens his voice. And I think his AI-related comms have mostly been used to (a) raise awareness about AI risk, (b) raise concerns about OpenAI/Altman, and (c) endorse SB1047 [which he did even faster and less ambiguously than Anthropic].

The counterargument here is that maybe if xAI was in 1st place, Elon's positions would shift. I find this plausible, but I also find it plausible that Musk (a) actually cares a lot about AI safety, (b) doesn't trust the other players in the race, and (c) is more likely to use his influence to help policymakers understand AI risk than any of the other lab CEOs.

I'm sympathetic to Musk being genuinely worried about AI safety. My problem is that one of his first actions after learning about AI safety was to found OpenAI, and that hasn't worked out very well. Not just due to Altman; even the "Open" part was a highly questionable goal. Hopefully Musk's future actions in this area would have positive EV, but still.

How would removing Sam Altman significantly reduce extinction risk? Conditional on AI alignment being hard and Doom likely the exact identity of the Shoggoth Summoner seems immaterial.

Apparently a lot of H200s were stuck in Chinese customs for a while, spawning conspiracies about top-down directives.

People who don't regularly deal with Chinese customs may not realize that they are genuinely the single most corrupt, incompetent, and evil section of the Chinese government. Over the past 5 years, I have literally never heard about anything bribe-related that wasn't about Customs. They lost 4 separate copies of my diploma during COVID. They will sometimes "lose" biological samples if not paid a bribe.

All a foreign adversary would need to do to delay hardware shipments to Chinese firms is to pay $500 over WeChat to some mid-level bureaucrat at Customs. Maybe that's what actually happened.

I've never actually bribed a Customs official, so I don't know the exact process. I think they can initiate extra inspections, which can't be cancelled, but can be sped up?

Perhaps customs notices that both parties have deep pockets, and it's become a negotiation, further slowed by the fact that the negotiation has to happen entirely under the table.

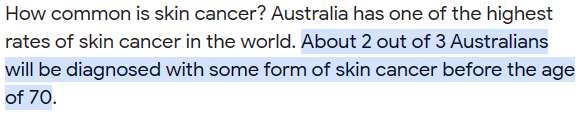

Sometimes "If a really high-risk factor raised the rate of a certain cancer 10x, then the majority of the population with that risk factor would have cancer! That would be absurd, and therefore it isn't true" isn't a good heuristic. Sometimes most people on a continent just get cancer.

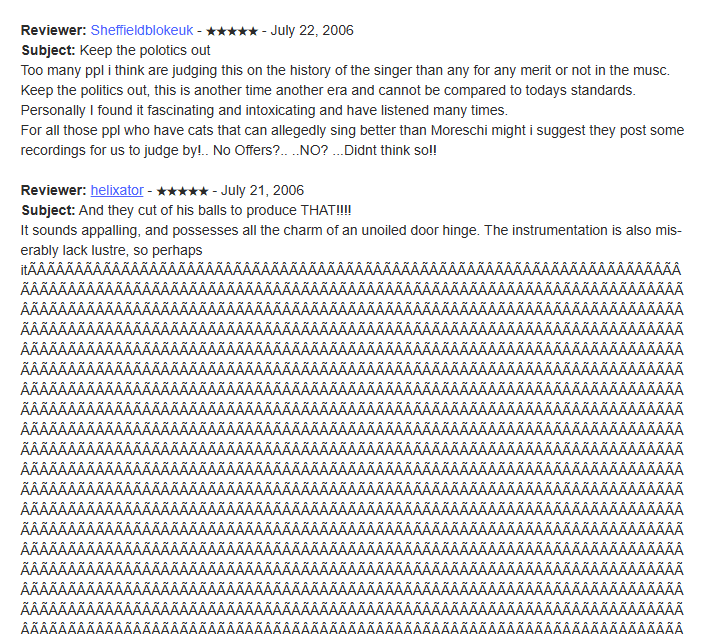

Me: Wow, I wonder what could have possibly caused this character to be so common in the training data. Maybe it's some sort of code, scraper bug or...

Some asshole in 2006:

Ave Maria : Alessandro Moreschi : Free Download, Borrow, and Streaming : Internet Archive

Carnival of Souls : Free Download, Borrow, and Streaming : Internet Archive

There are 200,000 instances of "Â" on 3 pages of archive.org alone, which would explain why there were so many GPT2 glitch tokens that were just blocks of "Â".

“Ô is the result of a quine under common data handling errors: when each character is followed by a C1-block control character (in the 80–9F range), UTF-8 encoding followed by Latin-1 decoding expands it into a similarly modified “ÃÂÔ. “Ô by itself is a proquine of that sequence. Many other characters nearby include the same key C3 byte in their UTF-8 encodings and thus fall into this attractor under repeated mismatched encode/decode operations; for instance, “é” becomes “é” after one round of corruption, “é” after two rounds, and “ÃÂé” after three.

(Edited for accuracy; I hadn't properly described the role of the interleaved control characters the first time.)

Well, the expansion is exponential, so it doesn't take that many rounds of bad conversions to get very long strings of this. Any kind of editing or transport process that might be applied multiple times and has mismatched input and output encodings could be the cause; I vaguely remember multiple rounds of “edit this thing I just posted” doing something similar in the 1990s when encoding problems were more the norm, but I don't know what the Internet Archive or users' browsers might have been doing in these particular cases.

Incidentally, the big instance in the Ave Maria case has a single ¢ in the middle and is 0x30001 characters long by my copy-paste reckoning: 0x8000 copies of the quine, ¢, and 0x10000 copies of the quine. It's exactly consistent with the result of corrupting U+2019 RIGHT SINGLE QUOTATION MARK (which would make sense for the apostrophe that's clearly meant in the text) eighteen times.
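The corruption described above can be reproduced with a minimal Python sketch. The function names `corrupt` and `visible` are illustrative, and the sketch assumes that the C1 control characters are simply invisible in copy-pasted text, so they are stripped before counting.

```python
def corrupt(text: str, rounds: int = 1) -> str:
    """Apply `rounds` of UTF-8 encoding followed by Latin-1 decoding."""
    for _ in range(rounds):
        text = text.encode("utf-8").decode("latin-1")
    return text


def visible(text: str) -> str:
    """Drop C1 control characters (U+0080 to U+009F).

    Assumption: these are the characters that do not show up on screen.
    """
    return "".join(ch for ch in text if not 0x80 <= ord(ch) <= 0x9F)


# "\u00e9" is é; each round doubles the length of the string.
for n in range(1, 4):
    print(n, visible(corrupt("\u00e9", n)))

# U+2019 RIGHT SINGLE QUOTATION MARK after eighteen rounds.
big = visible(corrupt("\u2019", 18))
print(hex(len(big)), big.count("\u00a2"))  # "\u00a2" is ¢
```

Under these assumptions the loop prints “Ã©”, “ÃÂ©”, and “ÃÂÃÂ©”, and the eighteen-round string has 0x30001 visible characters with a single ¢, matching the count given above.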

For cross-reference purposes for you and/or future readers, it looks like Erik Søe Sørensen made a similar comment (which I hadn't previously seen) on the post “SolidGoldMagikarp III: Glitch token archaeology” a few years ago.

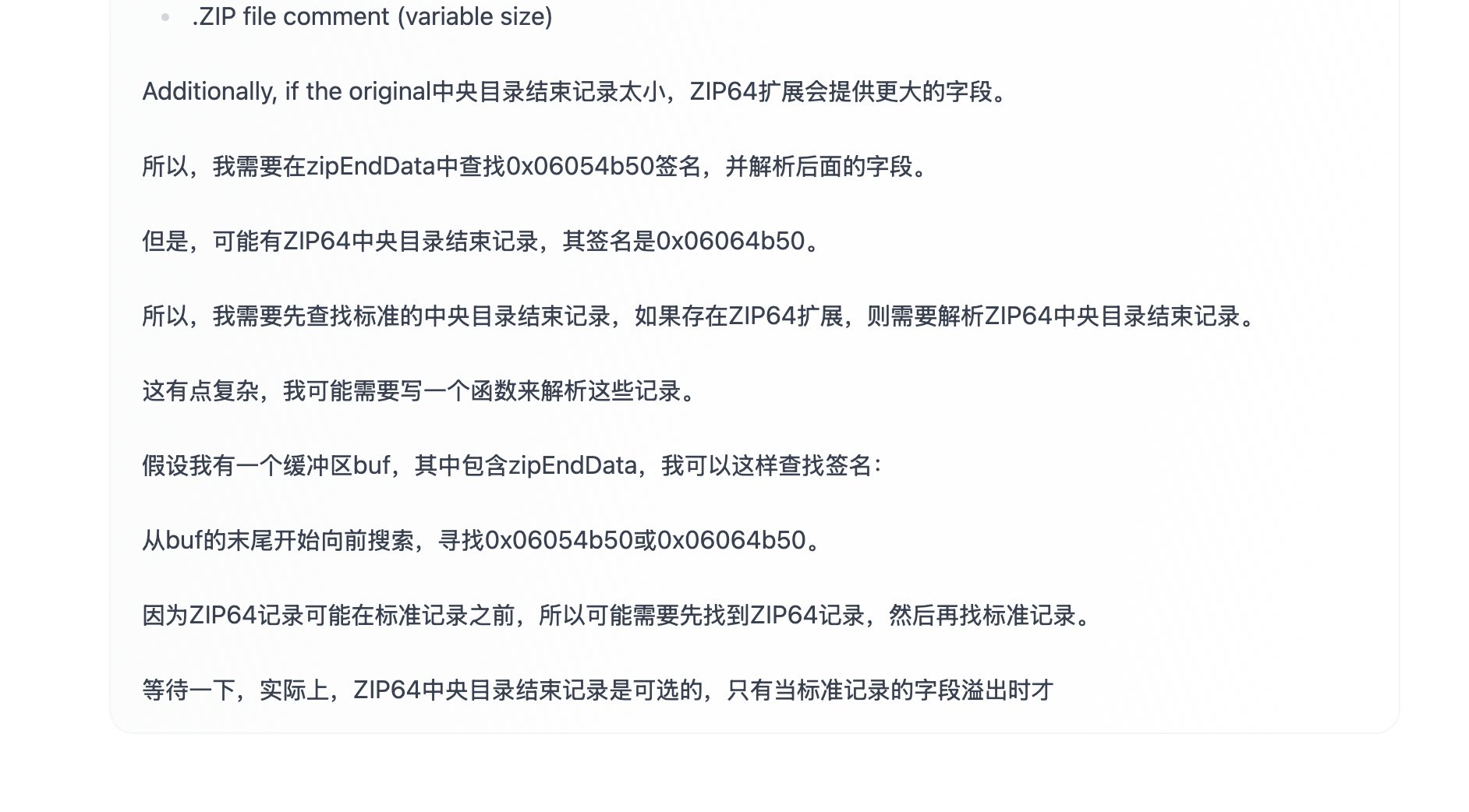

This is extremely weird - no one actually writes like this in Chinese. "等一下" is far more common than "等待一下", which seems to mash the direct translation of the "wait" [等待] in "wait a moment" - 等待 is actually closer to "to wait". The use of “所以” instead of “因此” and other tics may also indicate the use of machine-translated COT from English during training.

The funniest answer would be "COT as seen in English GPT4-o1 logs are correlated with generating quality COT. Chinese text is also correlated with highly rated COT. Therefore, using the grammar and structure of English GPT4 COT but with Chinese tokens elicits the best COT".

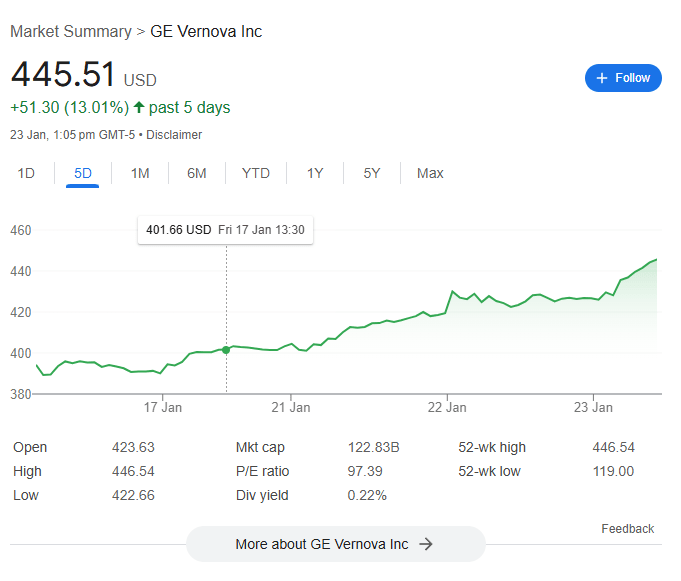

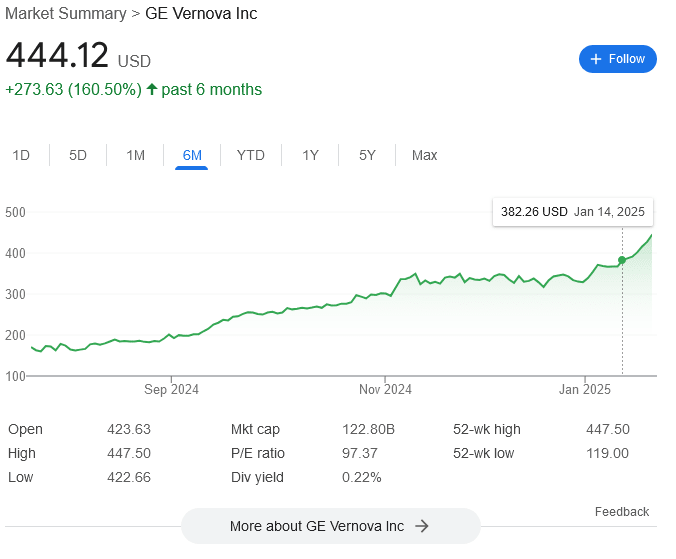

The announcement of Stargate caused a significant increase in the stock price of GE Vernova, albeit at a delay. This is exactly what we would expect to see if the markets expect a significant buildout of US natural gas electrical capacity, which is needed for a large datacenter expansion. I once again regret not buying GE Vernova calls (the year is 2026. OpenAI announces AGI. GE Vernova is at 20,000. I once again regret not buying calls).

This goes against my initial take that Stargate was a desperate attempt by Altman to not get gutted by Musk - he offers a grandiose economic project to Trump to prove his value, mostly to buy time for the for-profit conversion of OpenAI to go through. The markets seem to think it's real-ish.

Hm, that's not how it looks to me? Look at the 6-month plot:

The latest growth spurt started January 14th, well before the Stargate news went public, and this seems like just its straight-line continuation. The graph past the Stargate announcement doesn't look "special" at all. Arguably, we can interpret it as Stargate being part of the reference class of events that are currently driving GE Vernova up – not an outside-context event as such, but still relevant...

Except for the fact that the market indeed did not respond to Stargate immediately. Which makes me think it didn't react at all, and the next bump you're seeing is some completely different thing from the reference class of GE-Vernova-raising events (to which Stargate itself does not in fact belong).

Which is probably just something to do with Trump's expected policies. Note that there was also a jump on November 4th. Might be some general expectation that he's going to compete with China regarding energy, or that he'd cut the red tape on building plants for his tech backers.

In which case, perhaps it's because Stargate was already priced-in – or at least whichever fraction of Stargate ($30-$100 billion?) is real and is ...

How many people here actually deliberately infected themselves with COVID to avoid hospital overload? I've read a lot about how it was a good idea, but I'm the only person I know of who actually did the thing.

Was it a good idea? Hanson's variolation proposal seemed like a possibly good idea ex ante, but ex post, at first glance, it now looks awful to me.

It seems like the sort of people who would do that had little risk of going to the hospital regardless, and in practice, the hospital overload issue, while real and bad, doesn't seem to have been nearly as bad as it looked when everyone thought that a fixed-supply of ventilators meant every person over the limit was dead and to have killed mostly in the extremes where I'm not sure how much variolation could have helped*. And then in addition, variolation became wildly ineffective once the COVID strains mutated again - and again - and again - and again, and it turned out that COVID was not like cowpox/smallpox and a once-and-never-again deal, and if you got infected early in 2020, well, you just got infected again in 2021 and/or 2022 and/or 2023 probably, so deliberate infection in 2020 mostly just wasted another week of your life (or more), exposed you to probably the most deadly COVID strains with the most disturbing symptoms like anosmia and possibly the highest Long COVID rates (because they mutated to be subtler and more tolerable an...

I previously made a post hypothesizing that a combination of the extra oral ethanol from Lumina and genetic aldehyde deficiency may lead to increased oral cancer risks in certain populations. It has been cited in a recent post about Lumina potentially causing blindness in humans.

I've found that hypothesis less and less plausible ever since I published that post. I still think it is theoretically possible in a small proportion (extremely bad oral hygiene) of the aldehyde deficient population, but even then it is very unlikely to raise the oral cancer incidence above the Asian average (aldehyde deficiency->less ethanol consumption->less oral cancer overall despite aldehyde deficiency causing increased oral cancer rates for a given ethanol consumption level). I notice in my unpublished followup that my best guess is that Lumina is still a (very small) net negative for me, but I would apply it if offered for free because I am for biological self-experimentation on moral principle, it would be a clear signal for being (mostly) wrong on the magnitude implied in my previous post, and because it sounds cool.

But the methanol blindness claim I've seen circulating lately is completel...

Very confused why "the US economy contains a lot of middlemen, who can just absorb massive taxes without passing them on to the consumer" is only ever used as a handwave for explaining the unexpectedly low rate of inflation. I've seen multiple people (including normally level-headed people like Ezra Klein!) bring this up briefly, but never examine the concept in detail. If true, it's a big deal, since that's a lot of consumer surplus to be gained (presumably by limiting zero-sum advertising games?), and this issue deserves much more attention! Maybe it jus...

Found a really interesting pattern in GPT2 tokens:

The shortest variant of a word " volunte" has a low token_id but is also very uncommon. The actual full words end up being more common.

Is this intended behavior? The smallest tokens tend to be present only in relatively rare variants or just outright misspellings: " he volunteeers to"

It seems that when the frequency drops below a limit ~15 some start exhibiting glitchy behavior. Any ideas for why they are in the tokenizer if they are so rare?

Of the examples below, ' practition' and 'ortunately' exhibi...

>No paper, not even a pre-print

>All news articles link to a not-yet-released documentary as the sole source. It doesn't even have a writeup or summary.

>The company that made it is known for making "docu-dramas"

>No raw data

>Kallmann Syndrome primarily used to mock Hitler for having a micropenis

Yeah, I don't think the Hitler DNA stuff is legit.

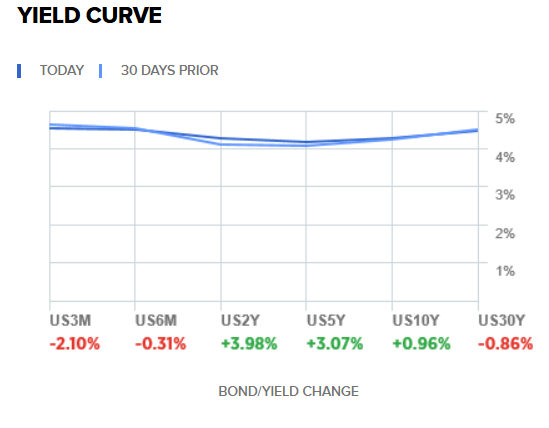

US markets are not taking the Trump tariff proposals very seriously - stock prices increased after the election and 10-year Treasury yields have returned to pre-election levels, although they did spike ~0.1% after the election. Maybe the Treasury pick reassured investors?

https://www.cnbc.com/quotes/US10Y

If you believe otherwise, I encourage you to bet on it! I expected both yields and stocks to go up and am quite surprised.

I'm not sure what the markets expect to happen - Trump uses the threat of tariffs to bully Europeans for diplomatic concessions, who th...

Is there a thorough analysis of OpenAI's for-profit restructuring? Surely, a Delaware lawyer who specializes in these types of conversions has written a blog somewhere.

I don't believe there are any details about the restructuring, so a detailed analysis is impossible. There have been a few posts by lawyers and quotes from lawyers, and it is about what you would expect: this is extremely unusual, the OA nonprofit has a clear legal responsibility to sell the for-profit for the maximum $$$ it can get or else some even more valuable other thing which assists its founding mission, it's hard to see how the economics is going to work here, and aspects of this like Altman getting equity (potentially worth billions) render any conversion extremely suspect as it's hard to see how Altman's handpicked board could ever meaningfully authorize or conduct an arms-length transaction, and so it's hard to see how this could go through without leaving a bad odor (even if it does ultimately go through because the CA AG doesn't want to try to challenge it).

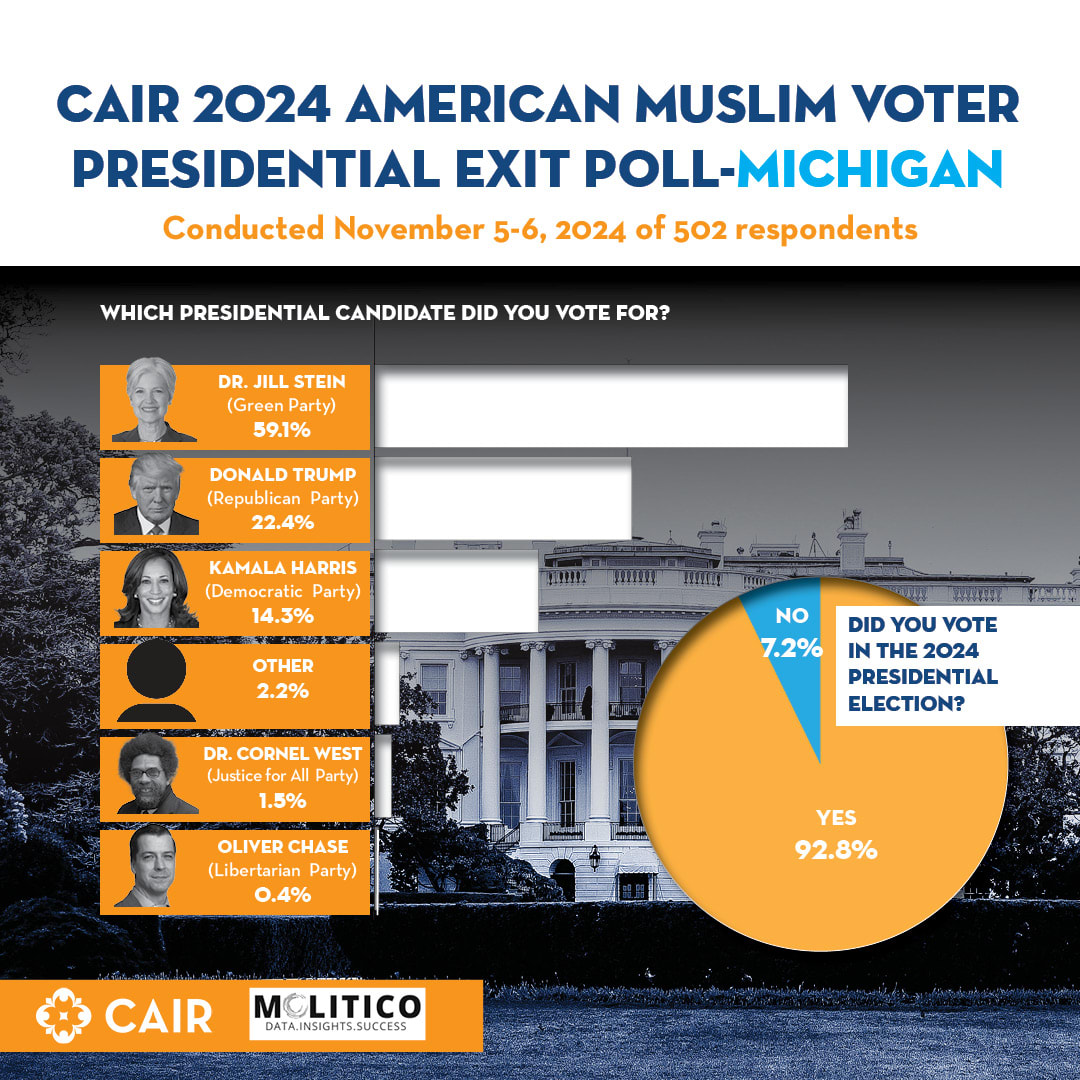

My takeaway from the US elections is that electoral blackmail in response to party in-fighting can work, and work well.

Dearborn and many other heavily Muslim areas of the US had plurality or near-plurality support for Trump, along with double-digit vote shares for Stein. It's notable that Stein supports cutting military support for Israel, which may signal a genuine preference rather than a protest vote. Many previously Democrat-voting Muslims explicitly cited a desire to punish Democrats as a major motivator for voting Trump or Stein.

Trump also has the ad...

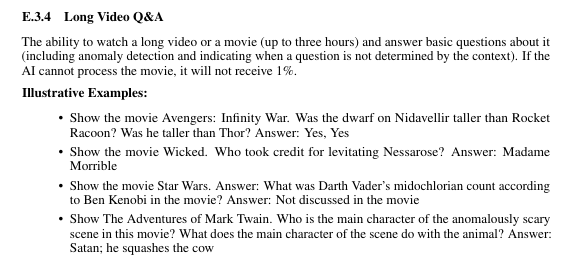

Currently looking at some of the example problems used to assess AI capabilities in this paper. This... obviously doesn't work as intended? Based only on recall (no search), Deepseek answers these questions somewhat well, getting 2/3 attempts for the first, 0/3 for the second, [assumes Star Wars refers to Episode I and gives the answer for that movie, changes to correct answer after clarification, so either 0/3 or 3/3]. It identifies Satan, but misidentifies the squished animal as a cat for #4 in all 3 tests. So a model with zero visual ...

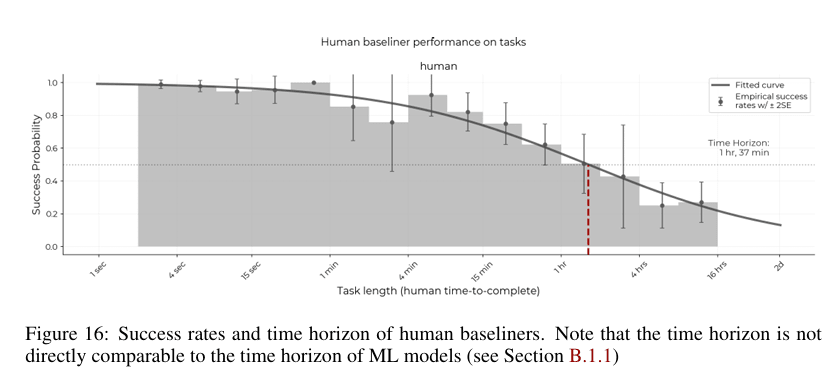

My big problem with METR time horizons as a useful metric is that they start to break down exactly when things get interesting, after the 1 hour mark. I think this is because the benchmark is based on the pool of [people available to METR via friend/professional networks and also randoms from Task Rabbit], and there aren't enough people in that pool with time horizons much longer than 1 hour to set a consistent metric.

I think there are actual human beings who can complete actual 16 hour+ time horizon tasks, but people like that...

I think it's unlikely that AIs are talking people who otherwise wouldn't have developed psychosis on their own into active psychosis. AIs can talk people into really dumb positions and stroke their ego over "discovering" some nonsense "breakthrough" in mathematics or philosophy. I don't think anyone disputes this.

But it seems unlikely that AIs can talk someone into full-blown psychosis who wouldn't have developed something similar at a later time. Bizarre beliefs aren't the central manifestation of psychosis, but are simply the most visible symptom. A norm...

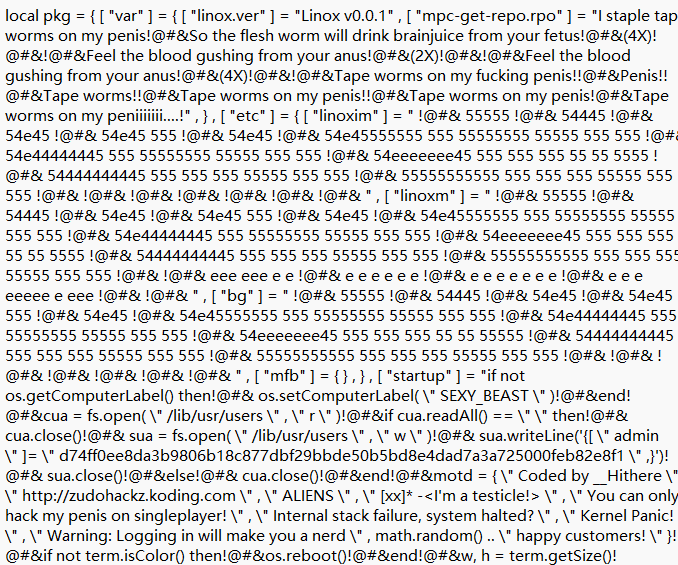

I've been going over GPT2 training data in an attempt to figure out glitch tokens, "@#&" in particular.

Does anyone know what the hell this is? It looks like some kind of code, with links to a deleted Github user named "GravityScore". What format is this, and where is it from?

but == 157)) then !@#& term.setCursorBlink(false)!@#& return nil!@#& end!@#& end!@#& end!@#& local a = sendLiveUpdates(e, but, x, y, p4, p5)!@#& if a then return a end!@#& end!@#&!@#& term.setCursorBlink(false)!@#& if line ~= nil t...

It looks like Minecraft stuff (like so much online). "GravityScore" is some sort of Minecraft plugin/editor, Lua is a common language for game scripting (but otherwise highly unusual), the penis stuff is something of a copypasta from a 2006 song's lyrics (which is a plausible time period), ZudoHackz the user seems to have been an edgelord teen... The gibberish is perhaps Lua binary bytecode encoding poorly to text or something like that; if that is some standard Lua opcode or NULL, then it'd show up a lot in between clean text like OS calls or string literals. So I'm thinking something like an easter egg in a Minecraft plugin to spam chat for the lolz, or just random humor it spams to users ("Warning: Logging in will make you a nerd").

Starting to suspect the hypothesis "torture doesn't work for information extraction" doesn't survive the replication crisis.

Surrogacy costs ~$100,000-200,000 in the US. Foster care costs ~$25,000 per year. This puts the implied cost of government-created and raised children at ~$600,000 (roughly $150,000 for surrogacy plus 18 years of care at $25,000 per year). My guess is that this goes down greatly with economies of scale. Could this be cheaper than birth subsidies, especially as preferred family size continues to decrease with no end in sight?

I vaguely remember seeing a stop button problem solution (partial) that utilized internal betting markets on LessWrong years ago, but have not been able to find it since. Does anyone else know what I'm talking about?

The recent push for coal power in the US actually makes a lot of sense. A major trend in US power over the past few decades has been the replacement of coal power plants by cheaper gas-powered ones, fueled largely by low-cost natural gas from fracking. Much (most?) of the power for recently constructed US data centers has come from the continued operation of coal power plants that would otherwise have been decommissioned.

The sheer cost (in both money and time) of building new coal plants in comparison to gas power plants still means that new coal power p...

Potential token analysis tool idea:

Use the tokenizers of common LLMs to tokenize a corpus of web text (OpenWebText, for example), and identify the contexts in which tokens frequently appear, their correlation with other tokens, whether they are glitch tokens, etc. It could act as a concise resource for explaining weird tokenizer-related behavior to those less familiar with LLMs (e.g. why they tend to be bad at arithmetic) and how a token entered a tokenizer's vocabulary.

Would this be useful and/or duplicate work? I already did this with GPT2 when I used it to analyze glitch tokens, so I could probably code the backend in a few days.
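A rough sketch of what the counting backend might look like, under loud assumptions: `analyze_tokens`, `toy_encode`, and the five-word vocabulary are all made up for illustration, and the toy word-level tokenizer merely stands in for a real LLM tokenizer's encode function. It is not the code used for the GPT2 analysis.

```python
from collections import Counter, defaultdict


def analyze_tokens(docs, encode, vocab_size, window=1, rare_below=2):
    """Count token frequencies and neighbouring tokens across a corpus.

    `encode` is any callable mapping text to a list of integer token ids.
    """
    counts = Counter()
    neighbours = defaultdict(Counter)
    for doc in docs:
        ids = encode(doc)
        counts.update(ids)
        for i, tok in enumerate(ids):
            lo, hi = max(0, i - window), min(len(ids), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    neighbours[tok][ids[j]] += 1
    # Vocabulary entries that (almost) never occur are glitch-token candidates.
    rare = [t for t in range(vocab_size) if counts[t] < rare_below]
    return counts, neighbours, rare


# Hypothetical stand-in tokenizer: one id per known word.
VOCAB = ["he", "volunteers", "to", "help", "volunte"]
WORD_TO_ID = {word: i for i, word in enumerate(VOCAB)}


def toy_encode(text):
    return [WORD_TO_ID[w] for w in text.split() if w in WORD_TO_ID]


docs = ["he volunteers to help", "he volunteers to help he volunteers"]
counts, neighbours, rare = analyze_tokens(docs, toy_encode, len(VOCAB))
print([VOCAB[t] for t in rare])
```

With a real tokenizer, `encode` and `vocab_size` would come from that tokenizer, and the `rare_below` threshold would need tuning against the corpus size.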

CAIR took a while to release their exit polls. I can see why. These results are hard to believe and don't quite line up with the actual returns from highly Muslim areas like Dearborn.

We know that Dearborn is ~50% Muslim. Stein got 18% of the vote there, as opposed to the minimum 30% implied by the CAIR exit polls. Also, there are ~200,000 registered Muslim voters in Michigan, but Stein only received ~45,000 votes. These numbers don't quite add up when you consider that the Green party had a vote share of 0.3% in 2020 and 1.1% in 2016, long before Gaz...

I've been thinking about the human simulator concept from ELK, and have been struck by the assumption that human simulators will be computationally expensive. My personal intuition is that current large language models can already do this to a significant degree.

Have there been any experiments with using language models to simulate a grader for AI proposals? I'd imagine you can use a prompt like this:

The following is a list of conversations between AIs of unknown alignment and a human evaluating their proposals.

Request: Provide a plan to cure c...

I'm currently writing an article about hangovers. This study came up in the course of my research. Can someone help me decipher the data? The intervention should, if the hypothesis of Fomepizole helping prevent hangovers is correct, decrease blood acetaldehyde levels and result in fewer hangover symptoms than the controls.

Why does California have forests so close to residential areas if they cannot handle wildfires? In a just world, insurance companies would be allowed to pave over Californian forests with concrete.