Note for the record:

I can no longer reply to comments addressed to me (or referring to me, etc.) on the post “Basics of Rationalist Discourse”, because its author has banned me from commenting on any of his posts.

I think, ideally, for anything that really matters, I'd selfishly prefer to just be in consensus with flawless reasoners, by sharing the same key observations, and correctly deriving the same important conclusions?

The whole definition of a partition here is "information NOT flowing because it CANNOT easily flow because cuts either occurred accidentally or were added on purpose(?!)" and... that's a barrier to sharing observations, and a barrier to getting into consensus sort of by definition?

It makes it harder for all nodes to swiftly make the same good promises based on adequate knowledge of the global state of the world because it makes it harder for facts to flow from where someone observed them to where someone could usefully apply the knowledge.

If an information flow blockage persists for long enough then commitments can accidentally be made on either side of the blockage such that the two commitments cannot both be satisfied (and trades that could have better profited more people if they'd been better informed don't happen, and so on), and in general people get less of what they counted on or would have wanted, and plans have to be re-planned, and it is generally just...

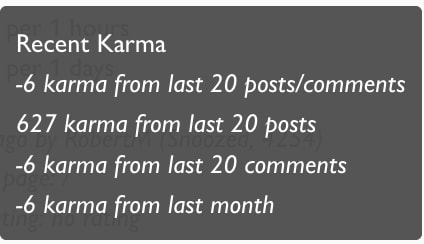

Why am I still rate-limited in commenting? None of the criteria described in the post about Automatic Rate Limiting on Less Wrong seem to apply:

- My total karma is obviously not less than 5

- My “recent karma” (sum of karma values of last 20 comments) is positive

- My “last post karma” is positive

- My “last month karma” is positive

What gives?

Seems like it's recent karma from last 20 posts/comments:

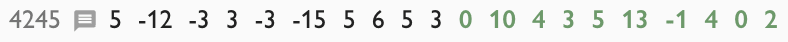

Here is the karma for your 20 most recent comments:

Subtracting 2 from all of these numbers for your own small-upvote to get the net karma from them, I get:

3 - 14 - 5 + 1 - 5 - 17 + 3 + 4 + 3 + 1 - 2 + 8 + 2 + 1 + 3 + 11 - 3 + 2 - 2 + 0 = -6

So I don't see any errors in the logic. Seems like it works exactly as advertised in the "comment rate limit" section of the post:

- -5 recent karma (4+ downvoters)

- 1 comment per day
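The arithmetic above can be checked mechanically. What follows is only a minimal sketch, not the site's actual implementation: the names `SELF_UPVOTE`, `netOfSelfVote`, `recentKarma`, and `isBelowRecentKarmaThreshold` are hypothetical, the comparison "strictly below -5" is an assumption about how the threshold is applied, and the "4+ downvoters" condition is not modeled because the thread gives no downvoter counts.

```typescript
// Net karma of the 20 most recent comments, copied from the sum above.
// Each value already has the author's own small-upvote subtracted.
const netKarmaOfLast20: number[] = [
  3, -14, -5, 1, -5, -17, 3, 4, 3, 1,
  -2, 8, 2, 1, 3, 11, -3, 2, -2, 0,
];

// The thread subtracts 2 per comment for the author's own small-upvote.
const SELF_UPVOTE = 2;

// Hypothetical helper: convert a displayed karma value to net karma.
function netOfSelfVote(displayedKarma: number): number {
  return displayedKarma - SELF_UPVOTE;
}

// Hypothetical helper: total net karma over the recent comments.
function recentKarma(netValues: number[]): number {
  return netValues.reduce((total, value) => total + value, 0);
}

// ASSUMPTION: the limit triggers when recent karma is strictly below -5.
// The real rule also requires 4+ downvoters, which is not modeled here.
const RECENT_KARMA_THRESHOLD = -5;

function isBelowRecentKarmaThreshold(netValues: number[]): boolean {
  return recentKarma(netValues) < RECENT_KARMA_THRESHOLD;
}

console.log(netOfSelfVote(5)); // 3
console.log(recentKarma(netKarmaOfLast20)); // -6
console.log(isBelowRecentKarmaThreshold(netKarmaOfLast20)); // true
```

Under those assumptions the total comes out to -6, matching the hand calculation, and falls just past the -5 threshold.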

How does it make sense to just run the rate limiter robot equally on everyone no matter how old their account and how much total karma they have? It might make sense for new users, as a crude mechanism to make them learn the ropes or find out the forum isn't a good fit for them. But presumably you want long-term commenters with large net-positive karma to stay around and not be annoyed by the site UI by default.

A long-term commenter suddenly spewing actual nonsense comments where rate-limiting does make sense sounds more like an ongoing psychotic break, in which case a human moderator should probably intervene. Alternatively, if they're getting downvoted a lot it might mean they're engaged in some actually interesting discussion with lots of disagreement going around, and you should just let them tough it out and treat the votes as a signal for sentiment instead of a signal for signal like you do for the new accounts.

I would also point out a perverse consequence of applying the rate limiter to old high-karma determined commenters: because it takes two to tango, a rate-limiter necessarily applies to the non-rate-limited person almost as much as the rate-limited person...

You know what's even more annoying than spending some time debating Said Achmiz? Spending time debating him when he pings you exactly once a day for the indefinite future as you both are forced to conduct it in slow-motion molasses. (I expect it is also quite annoying for anyone looking at that page, or the site comments in general.)

Almost every comment rate limit stricter than "once per hour" is in fact conditional in some way on the user's karma, and above 500 karma you can't even be (automatically) restricted to less than one comment per day:

https://github.com/ForumMagnum/ForumMagnum/blob/master/packages/lesswrong/lib/rateLimits/constants.ts#L108

```ts
// 3 comments per day rate limits
{
  ...timeframe('3 Comments per 1 days'),
  appliesToOwnPosts: false,
  rateLimitType: "newUserDefault",
  isActive: user => (user.karma < 5),
  rateLimitMessage: `Users with less than 5 karma can write up to 3 comments a day.<br/>${lwDefaultMessage}`,
},
{
  ...timeframe('3 Comments per 1 days'), // semi-established users can make up to 20 posts/comments without getting upvoted, before hitting a 3/day comment rate limit
  appliesToOwnPosts: false,
  isActive: (user, features) => (
    user.karma < 2000 &&
    features.last20Karma < 1
  ), // requires 1 weak upvote from a 1000+ karma user, or two new user upvotes, but at 2000+ karma I trust you more to go on long conversations
  rateLimitMessage: `You've recently posted a lot without getti...
```

The post “Interstellar travel will probably doom the long-term future”, due to its high density of footnotes, works as a sort of stress-test of Less Wrong’s sidenote system. The result is decidedly not great:

(The sidenotes, they do nothing!)

Normally, a sidenote should be approximately adjacent to the in-text citation which refers to that note; in this case, sidenote #32 is pushed down, way out of the viewport, by the other sidenotes. (Hovering over a citation highlights the associated sidenote… which is, of course, useless when the sidenote is beyond the v...

A comment I wrote in response to “Contra Contra the Social Model of Disability” but couldn’t post because @DirectedEvolution seems to have banned me from commenting on his posts.

You say:

...First, try reading [the below quotes] with the conventional definition of “disability” in mind, where “disability” is a synonym for “impairment” and primarily means “physical impediment, such as being paraplegic or blind.” Under this definition, which we’ve just seen is not the one they use, they sound ridiculous.[1]

Then, see how the meaning changes using the definition

Replying to @habryka’s recent comment here, because I am currently rate-limited to 1 comment per day and can’t reply in situ:

I think you have never interacted with either Ben or Zack in person!

You are mistaken.

(Why would I disagree-react with a statement about how someone behaves in person if I’d never met them in person? Have you ever known me to make factual claims based on no evidence whatsoever…? Really, now!)

Is there any way to disable the “Listen to this post” widget on one of my posts? (That is, I do not want this audio version of my post to be available.)

My solution for the stag hunt is to kill the hunter who leaps out and the rabbit, then cook & eat his flesh.

(Source; previously)

@Gordon Seidoh Worley recently wrote this comment, where he claimed:

I’d like to engage with you as a critic. As you can see, I gladly do that with many of my other critics, and have spent hours doing it with you specifically for many years.

As far as I can tell, this is just false. I mean, maybe it takes Gordon an hour to write a single comment like this one (and then to exit the discussion immediately thereafter)? I doubt it, though.

Maybe I’m forgetting some huge arguments that took place a long time ago. But I went back through five full years of my L...

For what it's worth, as an outsider to this conversation who nonetheless has experience engaging in very long arguments with Said that ultimately went nowhere, it seems to me that Said was... straightforwardly correct in both of these instances.

The same way he was straightforwardly correct when pointing out the applause lights and anti-epistemology proponents of Circling were engaging in here, when bringing attention to the way Duncan Sabien's proposed assumption of good faith contradicts LW culture here, when asking for examples so the author can justify why their proposed insight actually reflects something meaningful in the territory as opposed to self-wankery, and in a countless number of other such instances.

And when I say "straightforwardly correct," I'm not just referring to the object-level, although that is of course the most important part. I'm also referring to the rhetoric he employed (i.e., none) and the way he asked his questions. I think saying, "Do you have any examples to illustrate what you mean by this word?" is the perfect question to ask when you believe an author is writing up applause light after applause light, and this should be bloo...

Actually, I would really like it if Said left comments that were just critical of things and pointed out where he thought the author was wrong, but to do that requires actually engaging with the content and the author to understand their intent (because clear communication, especially about non-settled topics, is hard). There's something subtle about Said's style of commenting that is hard for me to pin down that makes it unhelpfully adversarial in a way that I'm sure some people like but I find incredibly frustrating.

For example, I often feel like Said uses a rhetorical technique of smashing the applause button by referencing something in the Sequences as if that was the end of the argument, when at times the thing being argued is a claim made in the Sequences.

This is frustrating as an author who is trying to explore an idea or share advice because it's not real engagement: it reads like trying to shut down the conversation to score points, and it's all the more frustrating because he hit the applause button so it gets a lot of upvotes.

It's also frustrating in that he never crosses a bright line that would make me say "this is totally unacceptable". It feels to me like some...

To me good faith means being curious about why someone said something. You try to understand what they mean and then engage with their words as they intended them. Arguing in bad faith would be arguing when you are not curious or open to being convinced.

My experiences with Said have all been more of a form of him disagreeing with something I said in a way that suggests he's already made up his mind and there's no curiosity or interest in figuring out why I might have said what I said, often dismissive of the idea that anyone could possibly have a good reason for making the claim I have made, other than perhaps stupidity.

But look I'm also not really that interested in defending my decision too hard here. The simple fact is that Said pushes my buttons in a way basically no one else on this site does, and I think I finally hit a point of saying that it would be better if I just didn't have to interact with him so much. Everyone else who leaves critical comments does so in a way that does not so consistently feel like an attack, and I often feel like I can engage with them in ways where, even if we don't end up agreeing, we at least had a productive conversation.

OK, I see the relationship to the standard definition. (It would be bad faith to put on appearances of being open to being convinced, when you're actually not.) The requirement of curiosity seems distinct and much more onerous, though. (If you think I'm talking nonsense and don't feel curious about why, that doesn't mean you're not open to being convinced under any circumstances; it means you're waiting for me to say something that you find convincing, without you needing to proactively read my mind.)

and so it is ineffective for large classes of readers, including specifically me and the other authors you've clashed with

The first half of this talks about readers, but the second half gives examples of authors. I think this is a rather important difference. In fact, it's absolutely critical to the particular issue being discussed.

Of course many authors do not view Said's comments positively; after all, he constantly points out that what they are writing is nonsense. But the main value Said provides at the meta-level (beyond the object-level of whether he is right or wrong, which I believe he usually but not always is) is in providing needed criticism for the readers of posts to digest.

There was a comment once by a popular LW user (maybe @Wei Dai?) who said that because he wants the time he spends on LW to be limited, his strategy is as follows: read the title and skim an outline of the post, then immediately go to the comment section to see if there are any highly-rated comments that debunk the core argument of the post and which don't have adequate responses by the author. Only if there are no such comments does he actually go back and read the post closely, since this is a hard...

On @Gordon Seidoh Worley’s recent post, “Religion for Rationalists”, the following exchange took place:

Kabir Kumar:

Rationality/EA basically is a religion already, no?

Gordon Seidoh Worley:

No, or so say I.

I prefer not to adjudicate this on some formal basis. There are several attempts by academics to define religion, but I think it’s better to ask “does rationality or EA look sufficiently like other things that are definitely religions that we should call them religions”.

I say “no” on the basis of a few factors: …

Said Achmiz:

...EA is [a religion]

I think being inconsistent and contradicting yourself and not really knowing your own position on most topics is good, actually, as long as you keep working to craft better models of those topics and not just flail about randomly. Good models grow and merge from disparate fragments, and in the growing pains those fragments keep intermittently getting more and less viable. Waiting for them to settle makes you silent about the process, while talking it through is not a problem as long as the epistemic status of your discussion is clear.

Sticking to what you've said previously, simply because it's something you happened to have said before, opposes lightness, stepping with the winds of evidence at the speed of their arrival (including logical evidence from your own better understandings as they settle). Explicitly noting the changes to your point of view, either declaring them publicly or even taking the time to note them privately for yourself, can make this slightly inconvenient, and that can have significant impact on ability to actually make progress, for the numerous tiny things that are not explicitly seen as some important project that ought to be taken seriously and given the effort. There is little downside to this, as far as I can tell, except for the norms with some influence that resist this kind of behavior, and if given leave can hold influence even inside one's own mind.

I totally agree that being able to change your mind is good, and that the important thing is that you end up in the right place. (Although I think that your caveat “as long as you keep working to craft better models of those topics and not just flail about randomly” is doing a lot of work in the thesis you express; and it seems to me that this caveat has some requirements that make most of the specifics of what you endorse here… improbable. That is: if you don’t track your own path, then it’s much more likely that you’re flailing, and much more likely that you will flail; so what you gain in “lightness”, you lose in directionality. Indeed, I think you likely lose more than you gain.)

However.

If you change your mind about something, then it behooves you to not then behave as if your previous beliefs are bizarre, surprising, and explainable only as deliberate insults or other forms of bad faith.