Thank you Habryka (and the rest of the mod team) for the effort and thoughtfulness you put into making LessWrong good.

I personally have had few problems with Said, but this seems like an extremely reasonable decision. I'm leaving this comment in part to help make you feel empowered to make similar decisions in the future when you think it necessary (and ideally, at a much lower cost of your time).

I hereby voice strong approval of the meta-level approaches on display (being willing to do unpopular and awkward things to curate our walled garden, noticing that this particular decision is worth justifying in detail, spending several thousand words explaining everything out in the open, taking individual responsibility for making the call, and actively encouraging (!) anyone who leaves LW in protest or frustration to do so loudly), coupled with weak disapproval of the object-level action (all the complicating and extenuating factors still don't make me comfortable with "we banned this person from the rationality forum for being annoyingly critical").

If I were a moderator, I would have banned Jesus Christ Himself if He required me to spend one hundred hours moderating His posts on multiple occasions. Given your description here I am surprised you did not do this a long time ago. I admire your restraint, if not necessarily your wisdom.

I know what you mean, of course, but it is funny that you use Jesus as an example of someone unlikely to be banned when, historically, Jesus was in fact "banned". :)

Fwiw I've found Said's comments to be clear, crisp and valuable. I don't recall ever being annoyed by his comments, and found him a most useful bloodhound for bad epistemic practices and rhetoric. In many cases, Said's comment was the only good, clear, and crisp critique of vagueposting and applauselighting.

The examples in this post don't seem compelling at all. One of the primary examples seems to be Duncan who comes off [from a distance] as thin-skinned and obscurantist, emotionally blowing up at very fair criticism.

Despite my disagreement I endorse Habryka unilaterally taking these kinds of decisions and approve of his transparency and conduct in this matter.

Farewell, LessWrong gadfly. You will be missed.

One of the primary examples seems to be Duncan who comes off [from a distance] as thin-skinned and obscurantist, emotionally blowing up at very fair criticism.

This is my view too. I remember once trying (I think on Facebook) to gently talk him out of being really angry at someone for making what I thought was a reasonable criticism, and he ended up getting mad at me too.

One of the primary examples seems to be Duncan who comes off [from a distance] as thin-skinned and obscurantist, emotionally blowing up at very fair criticism.

I don't think I link to a single Duncan/Said interaction in any of the core narratives of the post. I do link the moderation judgement of the previous Said/Duncan thread, but it's not the bulk of this post.

Like none of these comments:

link to any threads between Said and Duncan.

And the moderation judgement in the Said/Duncan thread also didn't really have much to do with Said's conduct in that thread, but with his conduct on the site in general.

You might still not find the examples compelling, but there is basically no engagement with Duncan that played any kind of substantial role in any of this.

As another outside observer I also got the impression that the Duncan conflict was the most significant of the ones leading up to the ban, since he wrote a giant post advocating for banning Said, left the site in a huff shortly thereafter, and seems to be the main example of a top contributor by your lights who said they didn't post due to Said.

Nah, you can see in the moderation history that we threatened Said with bans and moderation actions for many years before then. My honest best guess is that we would have banned Said somewhat earlier if not for the Duncan thread, though we also wouldn't have given him a rate limit around that time; but of course it's hard to tell.

My experience was that Said's behavior in the Duncan thread was among the most understandable cases of him behaving badly (because I too have found myself drawn into conflicts with Duncan that end up quite aggressive and at least tempt me to behave badly). That's part of why I don't link to any comments of his in the thread above (I might somewhere in there, but if so it's not intended as a particularly load-bearing part of the case).

I should comment publicly on this; I've talked with various people about it extensively in private. In case you just want my conclusion before my reasoning, I am sad but weakly supportive. An outline of six points, which I will maybe expand on if people ask questions:

- I should link some previous writing of mine that wasn't about Said:

- When discussing the death of Socrates, I think it's plausible that Socrates 'had it coming' because he was attacking the status-allocation methods of Athens, which were instrumental in keeping the city alive. That is, the 'corrupting the youth' charge might have been real and the sort of thing that it makes sense to watch out for.

- In response to Zack's post Lack of Social Grace is an Epistemic Virtue, I responded that the Royal Society didn't think so. It seems somewhat telling to me that academic culture (the one I call "scholarly pedantic argumentative culture") predates good science by a long time, and is clearly not sufficient to produce science. You need something more, and when I read about the Royal Society or the Republic of Letters I get a sense that they took worldly considerations seriously. They were trying to balance epistemic and instrument

I’m still not sure what Zack or Said think of the Royal Society example; Zack talks about it a bit in another comment on that page but not in a way that feels connected to the question of how to balance virtues against each other, and what virtues cultures should strive towards. (Said, in an email, strongly rejects my claim that there’s a difference between his culture of commenting and the Royal Society culture of commenting that I describe.)

This seems to be by far the most important crux; nothing else could've substantially changed attitudes on either side. Do environments widely recognized for excellence and intellectual progress generally have cultures of harsh and blunt criticism, and to what degree is the presence or absence of such a culture load-bearing? This question also looks pretty important on its own, and the apparent lack of interest in or attention to it is confusing.

As I have said before, on the object-level topic of Said Achmiz, I have written all I care about here, and I shall not pollute this thread further by digressing into that again. My thoughts on this topic are well-documented at those links, if anyone is interested.

It's an understatement to say I think this is the wrong decision by the moderators. I disagree with it completely and I think it represents a critical step backwards for this site, not just in isolation but also more broadly because of what it illustrates about how moderators on this site view their powers and responsibilities and what proper norms of user behavior are. This isn't the first time I have disagreed with moderators (in particular, Habryka) about matters I view as essential to this site's continued epistemic success,[1] but it will be the last.

I have written words about why I view Said and Said-like contributions as critical. But words are wind, in this case. Perhaps actions speak louder. I will be deactivating my account[2] and permanently quitting this site, in protest of this decision.

It doesn't make me happy to do so, as I've had some great interactions on here that have helped me learn and ...

This seems like not a useful move. Your contributions, in my view, consistently avoid the thing that makes Said's a problem. Your criticisms will be missed.

Seconded, I consistently find your comments both much more valuable and ~zero sneer. I would be dismayed by moderation actions towards you, while supporting those against Said. You might not have a sense of how his are different, but you automatically avoid the costly things he brings.

I think you shouldn't leave, and Habryka shouldn't have so prominently talked about leaving LW as something one should consider doing in response to this post. LW is the best place by far to discuss certain topics, and nowhere else provides comparable utility if one was interested in these topics. It's technically true but misleading to say "There are many other places on the internet to read interesting ideas, to discuss with others, to participate in a community." This underplays not only the immense value that LW provides to its members but also the value that a member could provide to LW and potentially to the world by influencing its discourse.

For your part, I think "quitting in protest" is unlikely to accomplish anything positive, and I'd much rather have your voice around than the (seemingly tiny) chance that your leaving causes Habryka to change his mind.

My first reaction is that this is bad decision theory.

It makes sense to actually go on strike when the party it's against would not otherwise be aware of, or willing to act on, the preferences of the people whose product they're utilizing. It can also make sense if you believe the other party is vulnerable to coercion and you want to extort them. If you do want fair trade and credibly believe the other party is knowing and willing, the meta strategy is to simply threaten your quorum, and never actually have to strike.

We don't seem to be in the case where an early strike makes sense. The main reaction to this post is not that of an unheard or silenced opposition, but various flavours of support. In order for the moderators to accede to your demand, they have to explicitly overrule a greater weight of other people's preferences on the basis that those people will be less mean about it. But we're on LessWrong; people here are not broadly open to coercion.

Additionally, we also don't seem to be in a world where your preferences have been marginalized beyond the degree that they're the minority preference. The moderators clearly spent a huge personal cost and took a huge time delay precisely because pr...

Let me join the chorus: please do not leave in protest; your comments here do some of the same positive things that Said's comments do, and your leaving would have a bunch of the negative consequences of Said's banning without the positive ones (because, at least so it seems to me, you are much less annoying than Said).

(For the avoidance of doubt, I find you a net-positive commenter here for reasons other than that you do some of the useful things Said has done, but that particular aspect seems the most relevant on this occasion.)

Will you be writing elsewhere? I've benefited a lot from some of your comments, and would be bummed to see you leave.

Criticism is a pretty thankless job. People mostly do it for the status reward, but consider if you detect some potentially fatal flaw in an average post (not written by someone very high status), but you're not sure because maybe the author has a good explanation or defense, or you misunderstood something. What's your motivation to spend a lot of effort to write up your arguments? If you're right, both the post and your efforts to debunk it are quickly forgotten, but if you're wrong, then the post remains standing/popular/upvoted and your embarrassing comment is left for everyone to see. Writing up a quick "clarifying" question makes more sense from a status/strategic perspective, but I rarely do even that nowadays because I have so little to gain from it, and a lot to lose including my time (including expected time to handle any back and forth) and personal relations with the author (if I didn't word my comment carefully enough). (And this was before today's decision, which of course disincentivizes such low-effort criticism even more.)

A few more quick thoughts as I'm not very motivated to get into a long discussion given the likely irreversible nature of the decision:

- If you ge

I think in some sense both making top-level posts and criticism are thankless jobs. What is your motivation to spend a lot of effort to write up your arguments in top-level post form in the first place? I feel like all the things you list as making things unrewarding apply to top-level posts just as much as writing critical comments (especially in as much as you are writing on a topic, or on a forum, where people treat any reasoning error or mistake with grave disdain and threats of social punishment).

If you get rid of people like Said or otherwise discourage low-effort criticism, you'll just get less criticism not better criticism.

I don't buy this. I am much more likely to want to comment on LessWrong (and other forums) if I don't end up needing to deal with comment-sections that follow the patterns outlined in the OP, and I am generally someone who does lots of criticism and writes lots of critical comments. Many other commenters who I think write plenty of critique have reported similar.

Much of LessWrong has a pretty great reward-landscape for critique. I know that if I comment on a post by Steven Byrnes, or Buck, or Ryan Greenblatt or you, or Scott Alexander or many others, wit...

I disagree. Posts seem to have an outsized effect and will often be read a bunch before any solid criticisms appear. They then keep spreading even after high-quality rebuttals appear... if those ever materialize.

I also think you're referring to a group of people who typically write high-quality posts and handle criticism well, while other authors don't handle criticism well. Despite my liking many of his posts, Duncan is an example of the latter.

As for Said specifically, I've been annoyed at reading his argumentation a few times, but then also find him saying something obvious and insightful that no one else pointed out anywhere in the comments. Losing that is unfortunate. I don't think there's enough "this seems wrong or questionable, why do you believe this?"

Said is definitely more rough than I'd like, but I also do think there's a hole there that people are hesitant to fill.

So I do agree with Wei that you'll just get less criticism, especially since I do feel like LessWrong has been growing implicitly less favorable towards quality critiques and more favorable towards vibey critiques. That is, another dangerous attractor is the Twitter/X attractor, wherein arguments do exist but they matter to the overall di...

Top-level posts are not self-limiting (from a status perspective) in the way I described for a critical comment. If you come up with a great new idea, it can become a popular post read and reread by many over the years and you can become known for being its author. But if you come up with a great critical comment that debunks a post, the post will be downvoted and forgotten, and very few people will remember your role in debunking it.

I agree this is largely true for comments (largely by necessity of how comment visibility works)[1]. Indeed one thing I frequently encourage good commenters to do is to try to generalize their comments more and post them as top-level posts.

And as far as I can tell this is an enormously successful mechanism for getting highly-upvoted posts on LessWrong. Indeed, I would classify the current second most-upvoted post of all time on LessWrong as a post of this kind: https://www.lesswrong.com/posts/CoZhXrhpQxpy9xw9y/where-i-agree-and-disagree-with-eliezer

Dialogues were another attempt at making critique less self-limiting, by letting more of a conversation happen at the same level as a post. I don't think that plan succeeded amazingly well (largely because dialogues ended up hard to read, and hard to coordinate between authors), but it is a thing I care a lot about and expect to do more work on.

The popular comments section on the frontpage has also changed this situation a non-trivial amount. It is now the case that if you write a very good critique that causes a post to be downvoted, this will still result in your comment getting a lo...

FWIW I feel like I get sufficient status reward for criticism and this moderation decision basically won't affect my behavior

- This defended a paper where I was lead author, which got 8 million views on Twitter and was possibly the most important research output by my current employer, against criticism that it was p-hacking

- This got me a bounty of $700 or so (which I think I declined or forgot about?) and citation in a follow-up post

- This ratioed the OP by 3:1 and induced a thoughtful response by OP that helped me learn some nontrivial stats facts

- This got 73 karma and was the most important counterpoint to what I still think are mostly wrong and overrated views on nanotech

- This got 70 karma and only took about an hour to write, and could have been 5 minutes if I were a better writer

Now it's true that most of these comments are super long and high effort. But it's possible to get status reward for lower effort comments too, e.g. this, though it feels more like springing a "gotcha". Many of the examples of Said's critiques in the post at least seemed either deliberately inflammatory or unhelpful or targeted at some procedural point that isn't maximally relevant.

As for risking being wrong...

I think these status motivations/dynamics are active whether or not you consciously think of them, because your subconscious is already constantly making status calculations. It's possible consciously framing things this way makes it even worse, "hurts your motivation to comment" even more, but it seems unavoidable if we want to explicitly discuss these dynamics. (Sometimes I do deliberately avoid bringing up status in a discussion due to such effects, but here the OP already talked about status a bunch, and it seems like an unavoidable issue anyway.)

My experience of Said has been mostly as described: a strong sense of sneer on my posts and others' that I find unpleasant.

I think there's a large swathe of experience and understanding that Said doesn't have, and that no amount of his Socratic questioning will ever actually create. The questioning isn't designed to help Said understand; it's designed to punish others for not making sense within Said's worldview.

Thank you for this decision.

But I think a lot of Said's confusions would actually make more sense to Said if he came to the realization that he's odd, actually, and that the way he uses words is quite nonstandard, and that many of the things which baffle and confuse him are not, in fact, fundamentally baffling or confusing but rather make sense to many non-Said people.

(My own writing, from here.)

I read the whole post and appreciated the detail and the decision. I have had discussions with Said that were valuable, and I am sad to see that he didn't change what I consider to be a bad pattern in order to continue the version of it that's good. I've mostly just been impressed with sunwillrise's version of it lately, for example. I also try to do a version of this occasionally, and it's not clear to me my contributions are uniformly good. Input welcome. But I sometimes go through and try to find posts with no comments, see if I have anything to say about them, and try both to describe something I found positive and to ask about something that confused me. Hopefully that's been helpful.

Many years ago I lurked on LessWrong, making a very occasional comment but finding the ideas and discussion fascinating and appealing. I believe I am not as smart as the average commenter here, and I am certainly less formally educated. I eventually drifted away to follow other interests and did not put in the work to learn enough to feel like I could contribute meaningfully. I specifically recall Said Achmiz as being a commenter I was afraid of and did not want to engage with. I didn't leave entirely because of Said, it was more about the effort of learning all the concepts, but maybe 1/8 of my decision was based on him. I imagine his attitude towards this will be, if I'm too much of a coward to risk an unknown internet commenter saying possibly bad things about my own comments, then I really don't belong here anyway. Which, maybe it's true. I don't know if I will try again in the upcoming 3 years, but I'm more likely to than before Said was banned.

Context: I much more recently gravitated to the Duncansphere, as it were, and am kinda on the fringes of that these days (I missed the Duncan/Said thing, and only know about it from comments on this post). I was encouraged there to come here and post this anecdote.

(It was me, and in the place where I encouraged DrShiny to come here and repeat what they'd already said unprompted, I also offered $5 to anybody who disagreed with the Said ban to please come and leave that comment as well.)

I have not read this post yet (I assume it's about more than just Said), but just to be clear: I personally trust you guys to ban people that are worth banning without writing thousands of words about it.

(Have read the post.) I disagree. Overall, I think habryka has gone to much greater pains than he should have had to, but I don't think this post is a part he should have skimped on. I would feel pretty negative about it if habryka had banned Said without an extensive explanation for why (modulo past discussions already kinda providing an explanation). I'd expect less transparency/effort for banning less important users.

I am disappointed and dismayed.

This post contains what feels to me like an awful lot of psychoanalysis of the LW readership, assertions like "it is clear to most authors and readers", and a second-person narrative about what it is like to post here:

After all of this you are left questioning your own sanity, try a bit to respond more on the object-level, and ultimately give up feeling dejected and like a lot of people on LessWrong hate you. You probably don't post again.

And like, man, is that true? Did you conduct a poll? I didn't get a survey. You pay some attention to Zack's perspective on Said, maybe because it'd be kind of laughable to pretend you hadn't heard about it; but I'm one of the less-strident people Zack commiserates with about Said's travails, and you had access to my opinion on the matter if you were willing to listen to a wheel that only squeaked a little bit. My comment is toplevel and has lots of votes and netted positive on both karma and agreement and most of the nested remarks are about whether it was polite of me to compare a non-Said person to a weird bug.

This post spends so much time talking about the complaints you've gotten, the exp...

I am sorry you didn't like the post! I do think if you were still more active here, I would have probably reached out in some form (I am aware of that one comment you left a while ago, and disagreed with it).

I generally respect you and wish you participated more on the site and also do think of you as someone whose opinion I would be interested in on this and other topics.

After all of this you are left questioning your own sanity, try a bit to respond more on the object-level, and ultimately give up feeling dejected and like a lot of people on LessWrong hate you. You probably don't post again.

And like, man, is that true? Did you conduct a poll?

I think the narrative above pretty accurately describes the experiences of a bunch of authors. I only ran it by like 2-3 non-LW team members since this post already took an enormous amount of time to write. I am of course not intending to capture some kind of universal experience on LessWrong, and of course definitely wouldn't be aiming for that section to represent your experience on LessWrong, since I don't think you ever had any of the relevant interactions with Said, at least since I've been running LW.

...This post contains what feels

Okay, but... why. Why do you think that.

I mean, I really tried to explain a lot of my models for what I think the underlying generators of this are. That's why the post is 15,000 words long.

Is there a reason you think that, which other people could inspect your reasoning on, which is more viewable than unenumerated "complaints"?

To be clear, LessWrong is not a democracy, and while I think the complaints are important, I don't consider them to be the central part of this post. I tried to explain in more mechanistic terms what I think is going wrong in conversations with Said, and those mechanistic terms are where my cruxes for this decision are located. If I changed my mind on those, I would make different decisions. If all the complaints disappeared, but I still had the same opinions on the underlying mechanics, then I would still make the same decision.

Again, I believe the complaints exist. How many, order of magnitude? Were they all from unique complainants?

I link to something like 5-15 comment threads in the post above. Many of the complaints are on those comments threads and so are public. See for example the Benquo ones that I have quot...

Cool, I think this clarified a bunch. Summarizing roughly where I think you are at:

In moderation space, there is one way to run things that feels pretty straightforward to you, which you here for convenience called "modularity", where you treat moderation as a pragmatic thing for which "I don't have the resources to deal with this kind of person" without much explanation or elaboration is par for the course. You are both confused, and at least somewhat concerned about what I am trying to do in the OP, which is clearly not that thing.

There are at least two dimensions on which you feel concerned/confused about what is going on:

- What is the thing I am trying to do here? Am I trying to make some universally compelling argument for badness? Am I trying to rally up a mob to hate on Said so that I can maintain legitimacy? Am I trying to do some complicated legal system thing with precedent and laws?

- What do I actually think is going wrong in conversations with Said? Like, where are the supposedly terrible comments of his, or the things that are supposed to be compelling to someone like you by whatever standard my system of moderation is trying to achieve? There ar

I think your model of me as represented in this comment is pretty good and not worth further refining in detail.

I read something into those comments - I might even possibly call it "disdain", but - "disdain (neutral)", not "disdain (derogatory)". It just... doesn't bother me, that he writes in a way that communicates that feeling. It certainly bothers me less than when (for example) Eliezer Yudkowsky communicates disdain, purely as a stylistic matter. If I thought Said would want to be on my Discord server I would invite him and expect this to be fine. (Eliezer is on my Discord server, which is also usually fine.)

It bothers you. I'm not trying to argue you out of being bothered. I'm not trying to argue the complainants out of being bothered. It bothering you would, under the Modularity regime, be sufficient.

But you're not doing that. You're trying to make the case that you are objectively right to feel that way, that you have succeeded at a Sense Motive check to detect a pattern of emotions and intentions that are really there. I don't agree with you, about that.

But I don't have to. I don't have your job. (I wouldn't want it.)

But you’re not doing that. You’re trying to make the case that you are objectively right to feel that way, that you have succeeded at a Sense Motive check to detect a pattern of emotions and intentions that are really there. I don’t agree with you, about that.

I think the claim I'd make is not necessarily that Oli's Sense Motive check has succeeded, but that Oli's Sense Motive check correlates much better with other people's Sense Motive checks than yours does, and that ultimately that's what ends up mattering for the effects on discourse.

Like, in the sense that someone's motives approximately only affect LessWrong by affecting the words that they write. So when we know the words they write, knowing their motives doesn't give us any more information about how they're going to affect LessWrong. For some people, there's something like... "okay, if this person actually felt disdain then the words they write in future are likely to be _, and if not they're likely to be _ instead; and we can probably even shift the distribution if we ask them hey we detect disdain from your comment, is that intended?". But we don't really have that uncertainty with Said. We know how he's going to write, whether he feels disdain or not.

I am somewhat interested in his True Motives, but I don't think they should be relevant to LW moderation.

(This is not intended to say "Said's comments are just fine except that people detect disdain".)

I mean, to be clear, I did have like 20+ hours of conversation with many authors and contributors who had very strong feelings on this topic just as part of writing this post[1], with many different disagreeing viewpoints, so I think we did a lot more than "run a focus group".

[1] Not to mention the many more conversations I've had over the last decade about this.

Funnily enough I think I kind of feel about Duncan the same way Oli feels about Said. I detect a sinister and disquieting pattern in his writing that I cannot prove in a court of law or anything that is slightly larping as one. But I'm not trying to moderate any space he's in.

Crimes that are harder to catch should be more harshly punished

Please, don't do this.

Your reasoning amounts to "we need to increase the punishment to compensate for all the false negatives".

If the only kind of error that existed was false negatives, you might have a point. But it isn't. False positives exist too. And crimes that are harder to catch are probably going to have more false positives. Harsher punishments also create bigger incentives for either false positives, or for standards that make everyone guilty of serious crimes all the time, thus letting anyone be punished at the whim of the moderators while pretending that they are not.

Agree that you need to account for false positives (and the above math didn't do that)!

Sometimes crimes are harder to catch, but you can still prove they happened without much risk of false positives. I do sure agree that the kind of misbehavior discussed in this post is at risk of false positives, so taking that into account is quite important for finding the right punishment threshold. Generally appreciate the reminder of that.

Bad call. You don't exactly have an unlimited supply of people who have a solid handle on the formative LW mindset and principles from 15 years ago and who are still actively participating on the forums, and latter-day LessWrong doesn't have as much of a coherent and valuable identity to stand firmly on its own.

A key idea in the mindset that started LessWrong is that people can be wrong. Being wrong can exist as an abstract thing to begin with; it's not just a euphemism for poor political positioning. And people in positions of authority can be wrong. Kind, well-meaning, likable people can be wrong. People who have considerate friendly conversations that are a joy to moderate can be wrong. It's not always easy to figure out right and wrong, but it is possible, and it's not always socially harmonious to say it out loud, but doing so used to be considered virtuous.

A forum that has principles in its culture is going to have cases where moderation is annoying around something or someone who doggedly sticks to those principles. It's then a decision for the moderators whether they want to work to keep the forum's principles alive or to have a slightly easier time moderating in the future.

I'm pretty sure people drifted away because of a more complex set of dynamics and incentives than "Said might comment on their posts" and I don't expect to see much of a reversal.

My two cents. There's a certain kind of post on LW that to me feels almost painfully anti-rational. I don't want to name names, but such posts often get highly upvoted. Said was one of very few people willing to vocally disagree with such posts. As such, he was a voice for a larger and less vocal set of people, including me. Essentially, from now on it will be harder to disagree with bullshit on LW - because the example is gone, and you know that if you disagree too hard, you might become another example. So I'm not happy to see him kicked out, at all.

My thoughts are similar to yours although I'm more willing to tolerate posts that you call "almost painfully anti-rational" (while still wishing Said was around to push back hard on them). I think in the early stages of genuine intellectual progress, it may be hard to distinguish real progress from "bullshit". I would say that people (e.g. authors of such posts) are overly confident about their own favorite ideas, rather than that the posts are clearly bullshit and should not have appeared. My sense is that it would be a bad idea to get rid of such overconfidence completely because intellectual progress is a public good and it would be harder to motivate people to work on some approach if they weren't irrationally optimistic about it, but equally bad or worse if there was little harsh or sustained criticism to make clear that at least some people think there are serious problems with their ideas.

FWIW my personal intention -- only time will tell whether I actually stick to it -- is to be a little more vigorous in disagreeing with things that I think likely to be anti-rational, precisely because Said will no longer be doing it.

Heads-up: I am nearing the limit of the roughly 10 hours I set aside for engaging on this, so I'll probably stop responding to things soon (and also if someone otherwise wants to open up this topic again in e.g. a top-level post, I'll probably just link back to the discussion that has been had here, and not engage further).

Ok, I think that's a wrap for me. Thanks all for the discussion so far. I am now hoping to get back to all the other work I am terribly behind on.

Good work.

The hardest part of moderation is the need to take action in cases where someone is consistently doing something that imposes a disproportionate burden on the community and the moderators, but which is difficult to explain to a third party unambiguously.

Moderators have to be empowered to make such decisions, even if they can’t perfectly justify them. The alternative is a moderation structure captured by proceduralism, which is predictably exploitable by bad actors.

That said — this is Less Wrong, so there will always be a nitpick — I do think people need to grow a thicker skin. I have so many friends who have valuable things to say, but never post on LW due to a feeling of intimidation. The cure for this is, IMO, not moderating the level of meanness of the commentariat, but encouraging people to learn to regulate their emotions in response to criticism. However, at the margins, clipping off the most uncharitable commenters is doubtless valuable.

Fwiw, my interaction with LW and more broadly the rationalist scene in the Bay Area was most of what formed my current stance that communities I want to participate in operate on whitelists, not blacklists. This is such a fundamental shift that it affects everything about how I socialize, and it made my life much better. That banning someone requires a post of this effort level predicts that lots and lots of other good things aren't happening, and that is mostly invisible.

Like seemingly many others, I found Said a mix of "frequently incredibly annoying, seemingly blind to things that are clear to others, poorly calibrated in the confidence with which he expresses things, occasionally saying obviously false things[1]" and "occasionally pointing out the-Emperor-has-no-clothes in ways that are valuable and few other people seem to do".

(I had banned him from my personal posts, but not from my frontpaged posts.)

And I wish we could get the good without the bad. It sure seems like that should be possible. But in practice it doesn't seem to exist much?

I have occasionally noticed in myself that I want to give some criticism; I could choose to put little effort in but then it would be adversarial in a way I dislike, or I could choose to put a bunch of effort in to make a better-by-my-lights comment, or I could just say nothing; and I say nothing.

I think this is less of a loss than I think Said thinks it is. (At least as a pattern. I don't know if Said has much opinion about my comments in specific.) But I do think it's a bit of a loss. I think it's plausible that a version of me who was more willing to be disagreeable and adversarial would have left some valuabl...

I don't spend enough time in the LW comments to have any idea who Said is or to be very invested in the decision here. I think I agree with the broad picture here, and certainly with the idea that an author is under no obligation to respond to comments, whether because the author finds the comments unhelpful or overly time consuming or for whatever other reason. That said, I am mostly commenting here to register my disagreement with the idea of giving post authors any kind of moderating privileges on their posts. That just seems like an obviously terrible idea from an epistemic perspective. Just because a post author doesn't find a comment productive doesn't mean someone else won't get something out of it, and allowing an author to censor comments therefore destroys value. LW is the last site I would have expected to allow such a thing.

I think ultimately someone needs to do the job of moderation, and in as much as we want to allow for something like an archipelago of cultures, the LW moderation team really can't do all the moderation necessary to make such things possible.

Note that there are a bunch of restrictions on author moderation:

- The threshold for getting the ability to moderate frontpage posts is quite high (2,000 karma)

- The /moderation page allows you to find any deleted comments, or users banned from other users' posts

- We watch author moderation quite closely and would both change the rules, and limit the ability of an individual to moderate their posts if they abuse it

In general, I am not a huge fan of calling all deletion censorship. You are always welcome to make a new top-level post or shortform with your critique or comments. The general goal is to avoid always forcing everyone into the same room, so to speak.

I do think an alternative is for the LW team to do a lot more moderation, and more opinionated moderation, but I think this is overall worse (both because it's a huge amount of work, and because it centralizes the risk so that if we end up messing up or being really dumb about ...

This is the mentioned comment thread under which Said can comment for the next two weeks. Anyone can ask questions here if you want Said to have the ability to respond.

Said, feel free to ask questions of commenters or of me here (and if you want to send me some statement of less than 3,000 words, I can add it to the body of the post, and link to it from the top).

(I will personally try to limit my engagement with the comments of this post to less than 10 hours, so please forgive me if I stop engaging at some point, I just really have a lot of stuff to get to)

Edit: And the two weeks are over.[1]

[1] I decided not to actually check the "ban" flag on Said's account, because I trust him not to post or vote under his account, and this allows him to keep accessing any drafts he has on his account, along with other things that might benefit from being able to stay logged in.

I am, of course, ambivalent about harshly criticizing a post which is so laudatory toward me.[1] Nevertheless, I must say that, judging by the standards according to which LessWrong posts are (or, at any rate, ought to be) judged, this post is not a very good one.

The post is very long. The length may be justified by the subject matter; unfortunately, it also helps to hide the post’s shortcomings, as there is a tendency among readers to skim, and while skimming to assume that the skimmed-over parts say basically what they seem to, argue coherently for what they promise to argue for, do not commit any egregious offenses against good epistemics, etc. Regrettably, those assumptions fail to hold for many parts of the post, which contains a great deal of sloppy argumentation, tendentious characterizations, attempts to sneak in connotations via word choice and phrasing, and many other improprieties.

The problems begin in the very first paragraph:

For roughly [7 years] have I spent around one hundred hours almost every year trying to get Said Achmiz to understand and learn how to become a good LessWrong commenter by my lights.

This phrasing assumes that there’s something to “understand” (...

(After all, I too can say: “For roughly 7 years, I have spent many hours trying to get Oliver Habryka to understand and learn how to run a discussion forum properly by my lights.” Would this not sound absurd? Would he not object to this formulation? And rightly so…)

FWIW, this seems to me like a totally fine sentence. The "by my lights" at the end is indeed communicating the exact thing you are asking for here, trying to distinguish between a claim of obvious correctness, and a personal judgement.

Feel free to summarize things like this in the future, I would not object.

Of course, the truth of this claim hinges on how many is “few”. Less than 10? Less than 100?

It of course depends on how active someone on LessWrong is (you are not as widely known as Eliezer or Scott, of course). My modal guess would be that you would be around 20th place in how often people would bring up your name. I think this would be an underestimate of your effect on the culture. If someone else thinks this is implausible, I would be happy to operationalize, find someone to arbitrate, and then bet on it.

...To which Said responded by trying to rally up a group of people attacking anyone who dared to use moderati

Just noting that

one should object to tendentious and question-begging formulations, to sneaking in connotations, and to presuming, in an unjustified way, that your view is correct and that any disagreement comes merely from your interlocutor having failed to understand your obviously correct view

is a strong argument for objecting to the median and modal Said comment.

I think this reply is rotated from the thing that I'm interested in--describing vice instead of virtue, and describing the rule that is being broken instead of the value from rule-following. As an analogy, consider Alice complaining about 'lateness' and Bob asking why Alice cares; Alice could describe the benefits of punctuality in enabling better coordination. If Alice instead just says "well it's disrespectful to be late", this is more like justifying the rule by the fact that it is a rule than it is explaining why the rule exists.

But my guess at what you would say, in the format I'm interested in, is something like "when we speak narrowly about true things, conversations can flow more smoothly because they have fewer interruptions." Instead of tussling about whether the framing unfairly favors one side, we can focus on the object level. (I was tempted to write "irrelevant controversies", but part of the issue here is that the controversies are about relevant features. If we accept the framing that habryka knows something that you don't, that's relevant to which side the audience should take in a disagreement about principles.)

That said, let us replace the symbol with the s...

A commenter writes:

If I were a moderator, I would have banned Jesus Christ Himself if He required me to spend one hundred hours moderating His posts on multiple occasions. Given your description here I am surprised you did not do this a long time ago. I admire your restraint, if not necessarily your wisdom.

This strikes me as either deeply confused, or else deliberately… let’s say “manipulative”[1].

Suppose that I am a moderator. I want to ban someone (never mind why I want this). I also want to seem to be fair. So I simply claim that this person requires me to spend a great deal of effort on them. The rest of the members will mostly take this at face value, and will be sympathetic to my decision to ban this tiresome person. This obviously creates an incentive for me to claim, of anyone whom I wish to ban, that they require me to spend much effort on them.

Alright, but still, can’t such a claim be true? To some degree, yes; for example, suppose that someone constantly lodges complaints, makes accusations against others, etc., requiring an investigation each time. (On the other hand, if the complaints are valid and the accusations true, then it seems odd to say that it’s the compla...

The most charitable interpretation I can think of is that Elizabeth meant you should have added “I think that...” or “...for me” specifically to the line "Also, it comes from CFAR, which is an anti-endorsement."

But regardless, it seems crazy that your comment was downvoted to -17 (-16 now, someone just upvoted it by 1) and got a negative mod judgment for this.

Calling an author a “coward” for banning you from their post

FYI, that link goes to a very weird URL, which I doubt is what you intended.

The link you had in mind, I am sure, is to this thread. And your description of that thread, in this comment and in the OP, is quite dishonest. You wrote:

Said took to his own shortform where (amongst other things) he and others called that author a coward for banning him

Calling an author a “coward” for banning you from their post

In ordinary conversation between normal people, I wouldn’t hesitate to call this a lie. Here on LessWrong, of course, we like to have long, nuanced discussions about how something can be not technically a lie, what even is “lying”, etc., so—maybe this is a “lie” and maybe not. But here’s the truth: the first use of the word “coward” in that thread was on Gordon’s part. He wrote:[1]

I didn’t have to say anything. I could have just banned you. But I’m not a coward and I’ll own my action. I think it’s the right one, even if I pay some reputational cost for it.

And I replied:

...I’m not a coward

Well, I wasn’t going to say it, but now that you’ve denied it explicitly—sorry, no, I have to disagree. Banning critics fr

That’s the picture that someone would come away with, after reading your characterization. And, of course, it would be completely inaccurate.

I'm not sure the more accurate picture is flawless behavior or anything, but I do think I definitely had an inaccurate picture in the way Said describes.

I am surprised there are so few - perhaps in that calculation I was mistakenly tracking some comments you made in other posts that I didn't directly participate in.

Nevertheless, every single example you bring up above was in fact unpleasant for me, some substantially so - while reasonable conclusions were reached (and in many cases I found the discussion fruitful in the end), the tone in your comments was one that put me on edge and sucked up a lot of my mental energy. I had the feeling that to interact with you at all was an invitation to be drawn into a vortex of fact-checking and quibbling (as this current conversation is a small example of).

It is not surprising to me that you find all of these conversations unobjectionable. To me, your entrance to my comment threads was a minor emergency. To you, it was Tuesday.

I stand by the claim that a plurality of my unpleasant interactions on this site involved you - this is not a high bar. I do not recall another user with whom I had more than one.

I remain confused as to whether banning you is the correct move for the health of the site in general. The point I was trying to make was along the lines of [for a class of writers like alkjash, removing Said Achmiz from LessWrong makes us feel more relaxed about posting].

I spent ~2 hours reading the comments, and I just want to say I regret it. The comments are painful to evaluate in an unbiased way (very combative), and overall it doesn't really matter.

I feel vaguely good about this decision. I've only had one relatively brief round of Said commenting, but it's not free.

If Said returns, I'd like him to have something like a "you can only post things which Claude with this specific prompt says it expects to not cause <issues>" rule, and maybe an LLM would have the patience needed to show him some of the implications and consequences of how he presents himself.

I also feel vaguely good about it, but I feel decisively bad about this suggestion!

I've been investigating LLM-induced psychosis cases, and in the process have spent dozens of hours reading through hundreds if not thousands of possible cases on reddit. And nothing has made me appreciate Said's mode of communication (which I have a natural distaste towards) more than wading through all that sycophantic nonsense slop!

In particular, it has made it more clear to me what the epistemic function of disagreeableness is, and why getting rid of it completely would be very bad. (I'm distinguishing 'disagreeableness' here from 'criticism', which I believe can almost always be done in an agreeable way.) Not something I really would have disagreed with before (ha), but it helps me to see a visceral failure mode of my natural inclination to really drive the point home.

What you're doing here is conflating contempt based on group membership with contempt based on specific behaviors. Sneer-clubbers will sneer at anyone they identify as a Rationalist simply for being a Rationalist. Said Achmiz, in contrast, expresses some amount of contempt for people who do fairly specific and circumscribed things like write posts that are vague or self-contradictory or that promote religion or woo. Furthermore, if authors had been willing to put a disclaimer at the top of their posts along the lines of "This is just a hypothesis I'm considering. Please help me develop it further rather than criticizing it, because it's not ready for serious scrutiny yet." my impression is that Said would have been completely willing to cooperate. But possible norms like that were never seriously considered because, in my opinion, LW's issue is not the "LinkedIn attractor" but the "luminary attractor". I think certain authors here see how Eliezer Yudkowsky is treated by his fans and want some of that sweet acclamation for themselves, but without legitimately earning it. They want to make a show of encouraging criticism, but only in a kayfabe, neutered form that allows them to smoothly answer in a way that only reinforces their status. And Oliver Habryka and the other mods apparently approve of this behavior, or at least are unwilling to take any effective steps to curb it, which I find very disappointing.

You say:

Furthermore, if authors had been willing to put a disclaimer at the top of their posts along the lines of "This is just a hypothesis I'm considering. Please help me develop it further rather than criticizing it, because it's not ready for serious scrutiny yet." my impression is that Said would have been completely willing to cooperate.

Out of curiosity, I clicked on the first post that Said received a moderation warning for, which is Ray's post 'Musings on Double Crux (and "Productive Disagreement")'. You might notice the very first line of that post:

Epistemic Status: Thinking out loud, not necessarily endorsed, more of a brainstorm and hopefully discussion-prompt.

It's not the exact kind of disclaimer you proposed here (it importantly doesn't say that readers shouldn't criticize it) but it also clearly isn't claiming some kind of authority or fully worked-out theory, and is very explicit about the draft status of it. This didn't change anything about Said's behavior as far as I can tell, resulting in a heavily-downvoted comment with a resulting moderator warning.

There are also multiple other threads (which I don't have the time to dig up) in which Said ma...

That's not true! Did you read the very first moderation conversation that we had with Said that is quoted in the OP?

After the comment above, we reached out to Said privately and Elizabeth had something like an hour long chat conversation with him asking him what we need to do to get him to change his behavior, to which his response was:

...Buuuut what's going on here is that - and this is imo unfortunate - the website you guys have built is such that posting or commenting on it provides me with a fairly low amount of value

This is something I really do find disappointing, but it is what it is (for now? things change, of course)

So again it's not that I disagree with you about anything you've said

But the sort of care / attention / effort w.r.t. tone and wording and tact and so on, that you're asking, raises the cost of participation for me above the benefit

(Another aspect of this is that if I have to NOT say what I actually think, even on e.g. the CFAR thing w.r.t. Double Crux, well, again, what then is the point)

(I can say things I don't really believe anywhere)

[...]

If the takeaway here is that I have to learn things or change my behavior, well - I'm not averse in principle to doing that

Random thought: maybe there could have been disproportionate gains from getting Said to involve more humor in his messaging and branding him the official Fool of Lesswrong.com?

It seems the community indeed gets service out of Said shooting down low quality communication, and limiting that form of communication socially to his specific role maybe would have insulated the wider social implications, so that most value would have been preserved each way, maybe?

which would seem to indicate that a relatively small nudge would have tipped his contributions to the positive side.

Just to be clear, this overall does not strike me as a close call. The situation seems to me more related to the section on "Crimes that are harder to catch should be more socially punished" plus some other dynamics. My epistemic state changed a lot over the years, but not in a way that would result in thin margins, but in a way where some important consideration, or some part of my model would shift, and this would switch things from "in expectation this is extremely costly" to "in expectation what Said is doing is quite important".

Something being a difficult call to make does not generally mean that it also needed to be a close call.

Also, if the door to Said changing his behavior was so completely closed, I'm really confused about what all those hundreds of hours were spent on.

I mean, we tried anyways, but I do think it was overall a mistake and a reasonable thing to do at the time would have been to respond with "well, sorry, if you as a commenter are already pre-empting that you are not willing to change basically at all based on moderator feedback, then yeah, goodbye, farewell, good luck, we really need more cooperation than that". Elizabeth advocated for this IIRC, and I instead tried to make things work out. I think Elizabeth was ultimately right here.

I think the people who talk as though the contested issue here is Said's disagreeableness combined with him having high standards are missing the point.

Said Achmiz, in contrast, expresses some amount of contempt for people who do fairly specific and circumscribed things like write posts that are vague or self-contradictory or that promote religion or woo.

If it was just that (and if by "posts that are vague" you mean "posts that are so vague that they are bad, or posts that are vague in ways that defeat the point of the post"), I'd be sympathetic to your take. However, my impression is that a lot more posts would trigger Said's "questioning mode." (Personally I'm hesitant to use the word "contempt," but it's fair to say it made engaging more difficult for authors and they did involve what I think of as "sneer tone" sometimes.)

The way I see it, there are posts that might be a bit vague in some ways but they're still good and valuable. This could even be because the post was gesturing at a phenomenon with nuances where it would require a lot of writing (and disentanglement work) to make it completely concise and comprehensive, or it could be because an author wanted to share an i...

I feel like Said not only has a personal distaste of that sort of “post that contains bits that aren’t pinned down,” but it also seemed like he wouldn’t get any closer to seeing the point of those posts or comments when it was explained in additional detail.

If a post starts off vague and exploratory, on a topic that isn't very easy to think/write about, it would make sense that it usually couldn't be clarified enough to meet Said's standards within a few back-and-forth comments.

That’s pretty frustrating to deal with for authors and other commenters.

Yes, but I think that's in part because of the nature of intellectual progress, and in part because there are so few people like Said who are incentivized (by their own personality) to push back hard and persistently on this kind of post (so people are not used to it). I think it's also in part due to the tone that he typically employs, which he theoretically could change, but that seems connected with his personality in a way that we seemingly couldn't get one without the other.

As a tenured (albeit perhaps now 'emeritus') member of the "generally critical commentator crew", I think this is the wrong decision (cf.). As the OP largely anticipates the reasons I would offer against it, I think the disagreement is a matter of degrees among the various reasons pro and con. For a low resolution sketch of why I prefer my prices of 'pro tanto' to the moderators:

- I don't think Said's commenting, in aggregate, strays that close to the sneer attractor. "Pointed questions with an undercurrent of disdain" may not be ideal, but I have seen similar-to-worse antics[1] (e.g. writing posts which are thinly veiled/naked attacks on other users, routine abuse of subtext then 'going meta' to mire any objection to this game with interminable rules-lawyering) from others on this site who have prosecuted 'campaigns' against ideologies/people they dislike.[2]

- The principal virtue of Said doing this for LW is calling bullshit on things which are, in fact, bullshit. I think there remains too much (e.g.) 'post'-'rationalist' woo on LW, and it warrants robustly challenging/treating with the disdain it deserves. I don't see many others volunteering for duty.

- The principal cost is when

I think it would be a good norm to never strong-downvote someone you're debating, no matter how carefully you've read them, because it's just too easy to be biased in such situations, and it makes people suspicious/resentful/angry (due to thinking that the vote is biased/unfair, and having no recourse or ability to hold anyone accountable), which is not conducive to having calm and productive discussions. Rather surprised that you don't support or follow this.

I somewhat agree and apply a substantially higher bar to downvoting people I am debating, especially on non-moderation discussions (in the threads on this post, I abstained from voting for a lot of his replies to me, though less on his replies to others, e.g. the Vaniver thread).

As a site-moderator my job is often more messy and I think allows less of this principle than it does for others. In many cases where I would encourage other people to just "downvote and move on", I often do not have that choice, as the role of actually explaining the norms of the space, or justifying moderation decisions, or explaining how the site works, falls on me. In many cases, if I didn't vote on those comments, the author would not get the appropriate feedback at all.

Another thing that I think is important is to have gradual escalation. It is indeed better for someone to be downvoted before they are banned. As a moderator, voting is the first step of moderation. Moderators should vote a lot, and pay attention to voting patterns, and how voting goes wrong, because it's a noisy measure and the moderators are generally in the best position to remove the most distortions. Most moderation s...

It's not obvious to me that this is dumb. If two people are super angry at each other, that conversation seems likely to create more heat than light.

I'm not a regular user of LW, but I wanted to weigh in anyway. The style of endless asymmetric-effort criticism can be very wearing on people with perfectionist or OCD-like tendencies. I am, sadly, one of those people. In my head is a multi-faced voice of rage and criticism that constantly second guesses my decisions and thoughts and says many of the same things about anyone else's work or life or decisions. This kind of thing is one of the faces, able to find fault in anything and treat it all with importance both high and invariant over any sort of context. I think the voice is something like an IFS firefighter. In fact, here he is now:

wow. You come to LessWrong (stop abbreviating) and you can't even be bothered to put five seconds into reading Kaj's Unlocking the Emotional Brain summary to see if it really is a firefighter and not a protector?

It's exhausting and demoralizing. This is far from the only component, to be fair, and I actually don't doubt that Said is honestly trying to make the world a better place... but this particular flavor of criticism is not making things better. It can be done well, but this isn't it. This makes people, over time and without really notici...

I'm very good friends with someone who is persistently critical and it has imo largely improved my mental health, fwiw, by forcing me to construct a functioning and well-maintained ego which I didn't really have before.

FWIW, no need to anonymize if this was an attempt to lightly protect me, this was me:

Last month, a user banned Said from commenting on his posts. Said took to his own shortform where (amongst other things) he and others called that author a coward for banning him.

Also FWIW, I've had some genuinely positive interactions with Said in the last couple weeks. I was surprised as anyone. I don't know if it's because he was trying to be on his best behavior or what, but if that was how Said commented on everything, I'd be very happy to see him unbanned (I had even had the idea that if we continued to have positive interactions I would unban him after whatever felt like enough time for me to believe in the new pattern).

Now, one might think that it seems weird for one person to be able to derail a comment thread.

This does not seem weird to me at all. LW is a scary place for many newcomers, and many posts get 0–1 comments, and one comment that makes someone feel dumb seems likely to result in their never posting again.

I strongly agree that it's important to avoid the LinkedIn attractor; I simultaneously think that we should value newcomers and err at least a little bit on the side of being gentle with them.

From my very much outside view, extending the rate limiting to 3 comments a week indefinitely would have solved most of the stated issues.

I started posting to Less Wrong in 2011, under the name Fezziwig. I lost the password, so I made this account for LW2.0. I quit reading after the dustup in 2022, because I didn't like how the mods treated Said. I started up again this summer; I guess I came back at the wrong time.

Object-level I think Said was right most of the time, and doing an important job that almost no one else around here is willing to do. A few times I thought of trying to do the same thing more kindly; I'm a more graceful writer than he is, so I thought I had a good shot. But I never did it, because I don't believe Said's tone was ever really the issue: what upset people, what tended to produce those long ugly subthreads, was when he made a good point that couldn't be persuasively answered, and didn't get distracted by evasions. There isn't, actually, a kind way to ask for examples from someone who doesn't have any.

That's not to say all his comments were like that; some really were just bad. But the bad ones didn't tend to spawn demon threads. People didn't have to reply, because they knew that he was wrong, instead of just wishing it.

Also, I think that if ".....

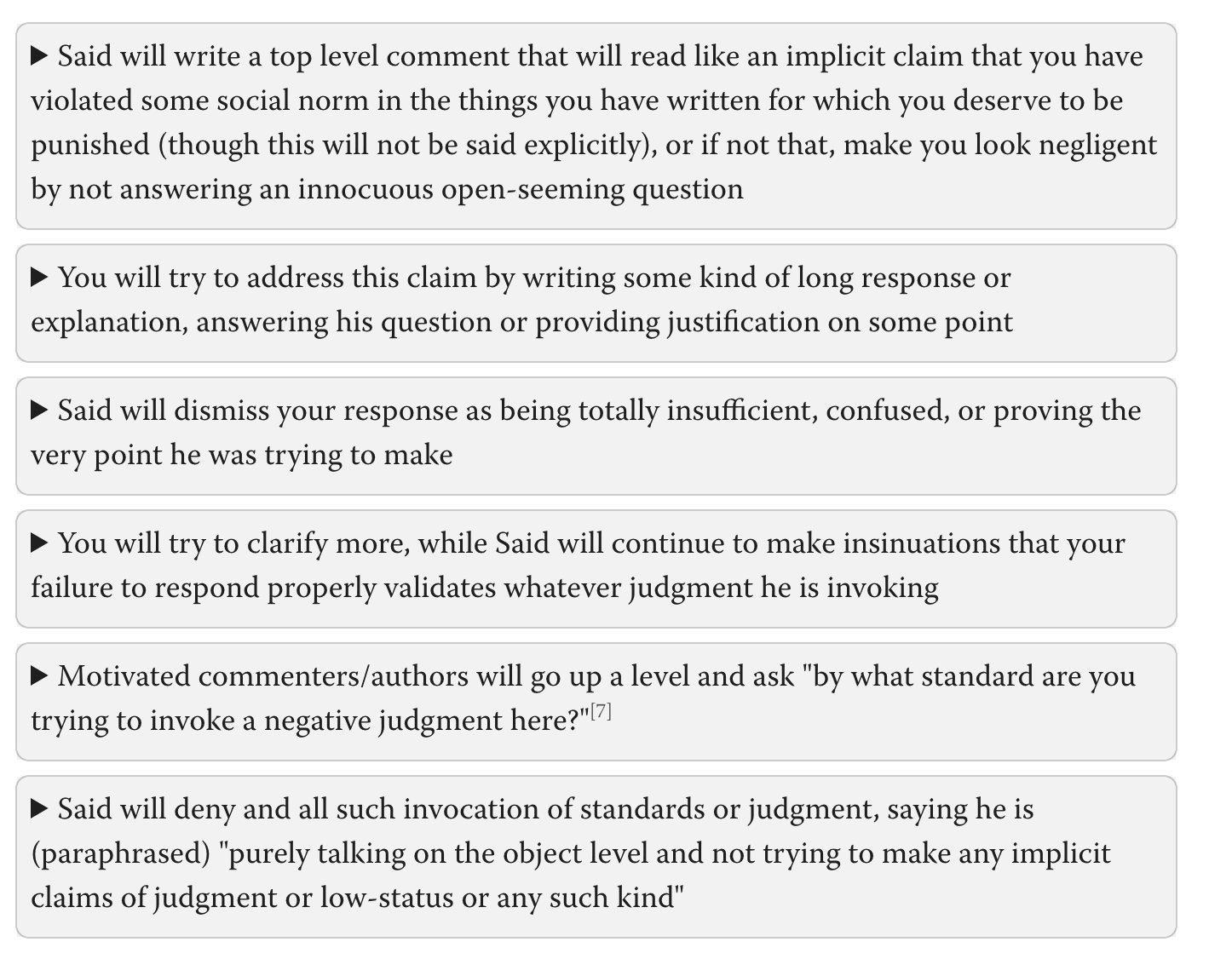

My best guess is that the usual ratio of "time it takes to write a critical comment" to "time it takes to respond to it to a level that will broadly be accepted well" is about 5x. This isn't in itself a problem in an environment with lots of mutual trust and trade, but in an adversarial context it means that it's easily possible to run a DDOS attack on basically any author whose contributions you do not like by just asking lots of questions, insinuating holes or potential missing considerations, and demanding a response, approximately independently of the quality of their writing.

For related musings see the Scott Alexander classic Beware Isolated Demands For Rigor.

Not strictly related to this post, but I'm glad you know this, and it makes me more confident in the future health of LessWrong as a discussion place.

I have two feature requests in response to this class of concerns.

Problem statement: authors feel pressure to respond to comments even if they think responding is low value. Meanwhile, readers hesitate to comment because they do not wish to impose costs (response costs or social costs) on the author.

Solution: authors can tag a comment with an emoji to indicate why they are choosing not to respond. LessWrong already supports this via emoji reactions, and I have used them for this purpose (as a post author). A beneficial side effect is that emojis can't be karma-voted, further reducing social pressure. My feature requests aim to improve this avenue.

Tiny: remove the question marks from emojis. For example, the emoji that says "Seems offtopic?" can just be "Offtopic", like "Soldier Mindset". This would make the emoji better express something like "I am not responding because this is (in my opinion) offtopic" rather than "This might be offtopic but I am not sure; I am not responding because I can't be bothered to find out". This suggestion also applies to:

- Too Combative? -> Too Combative

- Misunderstands Position? -> Misunderstands Position

- "Not worth getting into? (I'm guessing it's pr

Thank you for your hard work! Neither the decision itself nor the work of justifying it and discussing it is particularly easy, as I can say from experience. I appreciate you putting so much effort into trying to keep the site healthy.

This post has comments from some people who agree and from some people who disagree with the decision. It seems worth making explicit that this discussion may underrepresent the number of people who agree, because some of the people with the strongest agreement would be the ones who've already left the site because of Said.

I don't think this sort of abstract analysis is valid. For instance, you could argue that it may underrepresent the people who disagree, because over the past few months, as the conflict has escalated, it has become increasingly clear that Said-style criticism is unwelcome on LW.

Think it's just really hard to know without doing a lot of work.

I think it'd be more accurate to say that "there's this other factor too" rather than "this analysis is not valid"?

There are a number of comments expressing disagreement that have gotten a fair number of upvotes, so it doesn't look to me like expressing disagreement would be unwelcome.

Edited to add: I should also mention that I don't think this comment came out of "abstract analysis". It came from the fact that back when I banned Eugine Nier, I then reached out to a user who had left the site because of him to let them know their harasser was banned. The user's response was basically, "glad to hear, but I still don't feel like coming back". So at least in one previous case, users who had left because of a now-banned user were actually permanently out of the resulting discussion.

In reference to Said criticizing Benquo, you seem to be ignoring the crucial point, which is that Said was right. Benquo made the simple claim that knowing about yeast is useful in everyday life, and this claim is clearly wrong, regardless of what either of them said about it. Benquo could have admitted this, or he could have found another example. But instead he doubled down on being wrong, which naturally leads to frustration. It's concerning that you picked this conversation as an example, as if you can't tell.

I'm also confused by the "asymmetric effort" part. You describe it like a competition between author and critic. But if the criticism is correct, the author should be happy to receive it (isn't that the point of making posts?). And how much effort does it take to say "I don't understand what you mean" or "this doesn't seem important to my core point" or "you're right"? And what reward do you think Said gets from this? Certainly not social status, as he clearly doesn't have any.

By the way, I appreciate you pointing to real conversations instead of vaguely hand waving. If you want to change anyone's mind about norms, you need to point to negative as well as positive examples as evidence. Hopefully you'll do that when you eventually explain the right way to fight the LinkedIn attractor.

It's been many years since I've been active on LW, but while I was, Said was the source of a plurality of my unpleasant interactions on this site. Many other commenters leveled serious criticisms of my writing, but only Said consistently ruined my day while doing so.

I cannot say whether this decision was right in the end, but I will attest that seeing this post made me happy.

Tangential feature request: allow people to embed other comments in posts natively. This article uses screenshots of LessWrong to display conversations, but this does not responsively size them for mobile users and makes it harder to copy-paste stuff from this post, which a native implementation could fix.

I'd also like to say that a lot of Duncan's conflict-oriented behavior in the Duncan/Said moderation post and comments, as well as in other posts where they interact, was precisely because of the issues described in the section "But why ban someone, can't people just ignore Said?": it's much harder to ignore comments than a lot of people realize.

While it doesn't explain all of the conflict, I do think it explains a non-trivial amount of the reason why Duncan has the tendency to get into conflict with Said, because there's a social norm that criticism ha...

And if the stakes are even higher, you can ultimately try to get me fired from this job. The exact social process for who can fire me is not as clear to me as I would like, but you can convince Eliezer to give head-moderatorship to someone else, or convince the board of Lightcone Infrastructure to replace me as CEO, if you really desperately want LessWrong to be different than it is.

I don't plan on doing this, but who is on the board of Lightcone Infrastructure? This doesn't seem to be on your website.

This outcome makes me a little sad. I have a sense that more is possible.

How would this situation play out in a world like dath ilan? A world where The Art has progressed to something much more formidable.

Is there some fundamental incompatibility here that can't be bridged? Possibly. I have a hunch that this isn't the case though. My hunch is that there is a lot of soldier mindsetting going on and that once The Art figures out the right Jedi mind tricks to jolt people out of that mindset and into something more scout-like, these sorts of conflicts will oft...

Is there some fundamental incompatibility here that can't be bridged? Possibly. I have a hunch that this isn't the case though.

I don't believe Said is having very contingent bad interactions with tons of commenters and the mod team, but rather that this is a result of a principled commitment to a certain kind of forum commenting behavior that involves things like any commenter being able to demand answers to questions at the risk of the post-author's status, holding extreme disdain and disrespect for interlocutors while being committed to never saying anything explicitly or even denying that it is the case, and other things discussed in the OP, that in combination are extremely good at sucking energy out of people with little intellectual productivity as a result. My guess is that if we played the history of LW 2.0 over like 10 more times making lots of changes to lots of variables that seem promising or relevant to you, the outcome would've eventually been the same basically each time.

To take your proposal, I think it's likely that Said has literally written a disdainful comment about NVC — yep, I looked a little, Said writes "It has been my experience that NVC is used exclusively...

I have some suggestions for mechanistic improvements to the LW website that may help alleviate some of the issues presented here.

RE: Comment threads with wild swings in upvotes/downvotes due to participation from few users with large vote-weights; a capping/scaling factor on either total comment karma or individual vote-weights could solve this issue. An example total-karma-capping mechanism would be limiting the absolute value of the displayed karma for a comment to twice its parent's karma. An example vote-weight-capping mechanism would be limiting vote ...
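To make the total-karma-capping idea concrete, here is a minimal sketch of how the displayed value might be computed. It is purely hypothetical and not an existing LessWrong feature: the function name `displayed_karma`, the `factor` and `min_cap` parameters, the use of the parent's absolute karma (so a negatively scored parent still gives a positive cap), and leaving top-level comments uncapped are all my own assumptions filling in details the proposal doesn't specify.

```python
from typing import Optional


def displayed_karma(karma: int, parent_karma: Optional[int],
                    factor: int = 2, min_cap: int = 1) -> int:
    """Clamp a comment's displayed karma to +/- factor * |parent karma|.

    Hypothetical sketch: `factor`, `min_cap`, taking abs() of the parent's
    karma, and skipping top-level comments are assumptions, not site behavior.
    """
    if parent_karma is None:
        # Top-level comments have no parent comment, so they stay uncapped here.
        return karma
    # abs() keeps the cap positive for downvoted parents; min_cap stops a
    # zero-karma parent from pinning every reply's display to 0.
    cap = factor * max(abs(parent_karma), min_cap)
    return max(-cap, min(karma, cap))
```

For example, a reply sitting at 50 karma under a parent at 10 would display as 20, while a reply at 7 under the same parent would display unchanged. The underlying karma is untouched; only the displayed number is clamped, which is what would damp the visible swings from a few high-weight voters.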

I have an informational question about the 3-year ban: why did you choose a temporary ban over an indefinite ban?

In particular, given the history of Said Achmiz here, including the case where he repeated the same behavior he had previously been rate-limited for, I am a bit confused about what you are hoping to achieve by imposing a temporary ban in lieu of an indefinite ban:

...

- To which we responded by telling Said to please let authors moderate as they desire and to not do that again, and gave him a 3 month rate-limit

- After the rate-limit he seemed to behave be

3 years is long enough that LessWrong might be a very different place by then, or Said might have changed quite a bit, or maybe things will have actually sunk in in 3 years. I think the threshold for rebanning would likely be pretty low in 3 years, but it seemed to me potentially worth it to leave some door open in the more distant future.

Now, I do recommend that if you stop using the site, you do so by loudly giving up, not quietly fading. Leave a comment or make a top-level post saying you are leaving. I care about knowing about it, and it might help other people understand the state of social legitimacy LessWrong has in the broader world and within the extended rationality/AI-Safety community.

Sure. I think this is a good decision because it:

- Makes LessWrong worse in ways that will accelerate its overall decline and the downstream harms of it, MIRI, et al's existence.

- Alienates a hard

There's some cluster of ideas surrounding how authors are informed/encouraged to use the banning options. It sounds like the entire topic of "authors can ban users" is worth revisiting so my first impulse is to avoid investing in it further until we've had some more top-level discussion about the feature.

Free Hearing, Not Speech seems like a better approach to me. Give users the affordances to automatically see the kinds of comments they want to interact with, or the conversations they want to have. Users don't have to see what they believe is bad-faith, l...

I of course have lots of thoughts! My current tentative take is that ideally I would like LessWrong to be a hierarchy of communities with their own streams and norms, which when they produce particularly good output, feed into a shared agora-like space (and potentially multiple levels of this).

Reddit is kind of structured like this. Subreddits each have their own culture, but the Reddit frontpage and people's individual feeds are the result of the most upvoted content in each Subreddit bubbling up to a broader audience.

I think Reddit is lacking a bunch of other infrastructure to do this properly for the things I care about, and I would like a stronger universal culture than Reddit currently has, but it's a decent pointer for one structure that seems promising to me (LessWrong is far away from this for a bunch of different reasons that I could go into, but would take time, so I am going to keep it at this for now).

You completely omitted my post about Said, and my response to your responses on that post.

https://www.lesswrong.com/posts/SQ8BrC5MJ9jo9n83i/said-achmiz-helps-me-learn , cross posted at Data secrets lox.

I'll have to follow his comments elsewhere.

My philosophy is no more “totalizing” than that which is described in, say… the Sequences. (Or, indeed, basically any other normative view on almost any intellectual topic.) Do you consider Eliezer to have constantly been “making dominance threats” in all of his posts?

EDIT: Uh… not sure what happened here. The parent comment was deleted, and now this comment is in the middle of nowhere…?

Man, posting on LessWrong seems really unrewarding. You show up, you put a ton of effort into a post, and at the end the comment section will tear apart some random thing that isn't load bearing for your argument, isn't something you consider particularly important, and whose discussion doesn't illuminate what you are trying to communicate, all the while implying that they are superior in their dismissal of your irrational and dumb ideas.

You could run an LLM every time someone tries to post a comment. If a top level reply tries to nitpick something t...

I think the crux is what feeds the dangerous norms, and what makes norms dangerous. I expect that when considered in detail, Said or most others with similar behaviors aren't intending or causing the kinds of damage you describe to an important extent. But at the same time, norms (especially insane ones) feed on first impressions, not on detailed analyses.