I agree with most of the factual claims made in this post about evolution. I agree that "IGF is the objective" is somewhat sloppy shorthand. However, after diving into the specific ways the object level details of "IGF is the objective" play out, I am confused about why you believe these details imply what you claim they imply about the sharp left turn / inner misalignment. Overall, I still believe that natural selection is a reasonable analogy for inner misalignment.

- I agree fitness is not a single stationary thing. I agree this is prima facie unlike supervised learning, where the objective is typically stationary. However, it is pretty analogous to RL, and especially multi agent RL, and overall I don't think of the inner misalignment argument as depending on stationarity of the environment in either direction. AlphaGo might early in training select for policies that do tactic X initially because it's a good tactic to use against dumb Go networks, and then once all the policies in the pool learn to defend against that tactic it is no longer rewarded. Therefore I don't see any important disanalogy between evolution and multi agent RL. I have various thoughts on why language models do not make RL analogies irrelevant that I can explain but that's another completely different rabbit hole.

- I agree that humans (to a first approximation) still have the goals/drives/desires we were selected for. I don't think I've heard anyone claim that humans suddenly have an art creating drive that suddenly appeared out of nowhere recently, nor have I heard any arguments about inner alignment that depend on an evolution analogy where this would need to be true. The argument is generally that the ancestral environment selected for some drives that in the ancestral environment reliably caused something that the ancestral environment selected for, but in the modern environment the same drives persist but their consequences in terms of [the amount of that which the ancestral environment was selecting for] now changes, potentially drastically. I think the misconception may arise from a closely related claim that some make, which is that AI systems might develop weird arbitrary goals (tiny metallic squiggles) because any goal with sufficient intelligence implies playing the training game and then doing a sharp left turn. However, the claim here is not that the tiny metallic squiggles drive will suddenly appear at some point and replace the "make humans really happy" drive that existed previously. The claim is that the drive for tiny metallic squiggles was always, from the very beginning, the reason why [make humans really happy] was the observed behavior in environment [humans can turn you off if they aren't happy with you], and therefore in a different environment [humans can no longer turn you off], the observed behavior is [kill everyone and make squiggles].

- I agree that everything is very complex always. I agree that there are multiple different goals/drives/desires in humans that result in children, of which the sex drive is only one. I agree that humans still have children sometimes, and still want children per se sometimes, but in practice this results in fewer and fewer children over time compared to the ancestral environment (I bet even foragers are at least above replacement rate), precisely because the drives we have always had, which we have because they caused us to survive/reproduce in the past, now correspond much less well to survival/reproduction. I also agree that infanticide exists and occurs (but in the ancestral environment, there are counterbalancing drives like taboos around infanticide). In general, in many cases, simplifying assumptions totally break the analogy and make the results meaningless. I don't think I've been convinced that this is one of those cases.

I don't really care about defending the usage of "fitness as the objective" specifically, and so I don't think the following is a crux and am happy to concede some of the points below for the sake of argument about the object facts of inner alignment. However, for completeness, my take on when "fitness" can be reasonably described as the objective, and when it can't be:

- I agree that couched in terms of the specific traits, the thing that evolution does in practice is sometimes favoring some traits and sometimes favoring other traits. However, I think there's an important sense in which these traits are not drawn from a hat- natural selection selects for lighter/darker moths because it makes it easier for the moths to survive and reproduce! If lighter moths become more common whenever light moths survive and reproduce better, and vice versa for dark moths, as opposed to moths just randomly becoming more light or more dark in ways uncorrelated to survival/reproduction, it seems pretty reasonable to say that survival/reproduction is closer to the thing being optimized than some particular lightness/darkness function that varies between favoring lightness and darkness.

- I agree it is possible to do artificial selection for some particular trait like moth color, and in this case saying that the process optimizes "fitness" (or survival/reproduction) collapses to saying that the process optimizes moth lightness/darkness. I agree it would be a little weird to insist that "fitness" is the goal in this case, and that the color is the more natural goal. I also agree that the evolutionary equations play out the same way whether the source of pressure is artificial human selection or birds eating the moths. Nonetheless, I claim the step where you argue the two cases are equivalent for the purposes of whether we can consider fitness the objective is the step that breaks down. I think the difference between this case and the previous case is that the causality flows differently. We can literally draw from a hat whether we want light moths or dark moths, and then reshape the environment until fitness lines up with our preference for darkness, whereas in the other case, the environment is drawn from a hat and the color selection is determined downstream of that.
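To make the moth point concrete, here is a minimal sketch, not a model of any real population. The survival rates, the background names, and the function names are all made up for illustration. The update is the standard "reweight each type by its survival rate" step; the direction in which color shifts is decided entirely by which color survives better on the current background.

```python
# Toy sketch: all numbers and names below are hypothetical.

# Assumed survival probability for each (background, colour) pair.
SURVIVAL = {
    "pale_bark": {"light": 0.8, "dark": 0.4},
    "sooty_bark": {"light": 0.4, "dark": 0.8},
}


def next_light_fraction(light_fraction, survival_light, survival_dark):
    """One generation of selection: reweight each colour by its survival rate."""
    light = light_fraction * survival_light
    dark = (1.0 - light_fraction) * survival_dark
    return light / (light + dark)


def simulate(backgrounds, light_fraction=0.5):
    """Return the fraction of light moths after each generation."""
    history = [light_fraction]
    for background in backgrounds:
        rates = SURVIVAL[background]
        light_fraction = next_light_fraction(
            light_fraction, rates["light"], rates["dark"]
        )
        history.append(light_fraction)
    return history


# Three generations on pale bark, then three on sooty bark.
history = simulate(["pale_bark"] * 3 + ["sooty_bark"] * 3)
```

In this sketch the light fraction rises while light moths survive better and falls once dark moths do. The color being favored flips, but the rule "whatever survives better becomes more common" never changes.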

Thank you, I like this comment. It feels very cooperative and like some significant effort went into it, and it also seems to touch the core of some important considerations.

I notice I'm having difficulty responding, in that I disagree with some of what you said, but then have difficulty figuring out my reasons for that disagreement. I have the sense there's a subtle confusion going on, but trying to answer you makes me uncertain whether others are the ones with the subtle confusion or if I am.

I'll think about it some more and get back to you.

I think your disagreement can be made clear with more formalism. First, the point for your opponents:

When the animals are in a cold place, they are selected for a long fur coat, and also for IGF, (and other things as well). To some extent, these are just different ways of describing the same process. Now, if they move to a warmer place, they are now selected for a shorter fur instead, and they are still selected for IGF. And there's also a more concrete correspondence to this: they have also been selected for "IF cold long fur, ELSE short fur" the entire time. Notice especially that there are animals actually implementing this dependent property - it can be evolved just fine, in the same way as the simple properties. And in fact, you could "unroll" the concept of IGF into a humongous environment-dependent strategy, which would then always be selected for, because all the environment-dependence is already baked in.

Now on the other hand, if you train an AI first on one thing, and then on another, wouldn't we expect it to get worse at the first again? Indeed, we would also expect a species living in the cold for very long to lose those adaptations relevant to the heat. The reason for this in both cases is, broadly speaking, limits and penalties to complexity. I'm not sure very many people would have bought the argument in the previous paragraph - we all know unused genetic code decays over time. But the behavioral/cognitive version, with intentionally maximizing IGF, makes it easy to ignore the problems: we're not used to remembering the physical correlates of thinking. Of course, a dragonfly couldn't explicitly maximize IGF, because its brain is too small to even understand what that is, and developing that brain has demands for space and energy incompatible with the general dragonfly life strategy. The costs of cognition are also part of the demands of fitness, and the dragonfly is more fit the way it is, and similarly I think a human explicitly maximizing IGF would have done worse for most of our evolution[1], because the odds you get something wrong are just too high with current expenditure on cognition; better to hardcode some right answers.
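The two paragraphs above can be sketched as a toy comparison of three fur strategies. This is only an illustration under made-up assumptions: the fitness values, the strategy names, and the `plasticity_cost` parameter (standing in for the complexity penalty) are all hypothetical.

```python
# Toy sketch: fitness values, names, and the cost parameter are hypothetical.

def fur_fitness(fur, climate):
    """Fur that matches the climate does better than fur that does not."""
    matches = (fur == "long") == (climate == "cold")
    return 1.0 if matches else 0.5


STRATEGIES = {
    "always_long": lambda climate: "long",
    "always_short": lambda climate: "short",
    # "IF cold long fur, ELSE short fur"
    "conditional": lambda climate: "long" if climate == "cold" else "short",
}


def score(name, climate, plasticity_cost=0.0):
    """Fitness of a strategy, minus an assumed cost for carrying the conditional."""
    fur = STRATEGIES[name](climate)
    cost = plasticity_cost if name == "conditional" else 0.0
    return fur_fitness(fur, climate) - cost


def favored(climate, plasticity_cost=0.0):
    """Names of the strategies with the highest score in this climate."""
    scores = {name: score(name, climate, plasticity_cost) for name in STRATEGIES}
    top = max(scores.values())
    return sorted(name for name, value in scores.items() if value == top)


print(favored("cold"))        # conditional ties with always_long
print(favored("warm"))        # conditional ties with always_short
print(favored("cold", 0.1))   # with a cost, only always_long remains
```

With no cost, the conditional strategy is among the favored ones in every climate, which is the "unrolled" sense in which it is selected for the whole time. With any positive cost and a climate that stays fixed, the simpler fixed strategy wins, which is the decay-of-unused-adaptations point.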

I don't share your optimistic conclusion however. Because the part about selecting for multiple things simultaneously, that's true. You are always selecting for everything that's locally extensionally equivalent to the intended selection criteria. There is not a move you could have done in evolution's place to actually select for IGF instead of [various particular things]; this already is what happens when you select for IGF, because it's the complexity, rather than different intent, that led to the different result[2]. Similarly, reinforcement learning for human values will result in whatever is the simplest[3] way to match human values on the training data.
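A minimal sketch of what "locally extensionally equivalent" means here, with everything invented for illustration: the candidate rules, the hand-assigned complexity scores, and the training inputs are hypothetical stand-ins, and real training does not literally enumerate rules like this.

```python
# Toy sketch: the rules, inputs, and complexity scores are all hypothetical.

TRAINING_INPUTS = [0, 1, 2, 3]


def intended(x):
    """The criterion the selector 'means': the value, capped at 10."""
    return min(x, 10)


# Each candidate is (rule, hand-assigned complexity score).
CANDIDATES = {
    "capped": (lambda x: min(x, 10), 2),
    "identity": (lambda x: x, 1),
    "constant_zero": (lambda x: 0, 0),
}


def select(candidates, inputs, target):
    """Keep the rules that match the target on every input, return the simplest."""
    fitting = {
        name: complexity
        for name, (rule, complexity) in candidates.items()
        if all(rule(x) == target(x) for x in inputs)
    }
    return min(fitting, key=fitting.get)


winner = select(CANDIDATES, TRAINING_INPUTS, intended)
print(winner)                                   # the simplest rule that fits
print(CANDIDATES[winner][0](20), intended(20))  # behaviour off the training data
```

On the training inputs the capped rule and the identity rule are indistinguishable, so selecting for one is selecting for the other, and the tie is broken by simplicity rather than by intent. The two only come apart on inputs outside the training range.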

So I think the issue is that when we discuss what I'd call the "standard argument from evolution", you can read two slightly different claims into it. My original post was a bit muddled because I think those claims are often conflated, and before writing this reply I hadn't managed to explicitly distinguish them.

The weaker form of the argument, which I interpret your comment to be talking about, goes something like this:

- The original evolutionary "intent" of various human behaviors/goals was to increase fitness, but in the modern day these behaviors/goals are executed even though their consequences (in terms of their impact on fitness) are very different. This tells us that the intent of the process that created a behavior/goal does not matter. Once the behavior/goal has been created, it will just do what it does even if the consequences of that doing deviate from their original purpose. Thus, even if we train an AI so that it carries out goal X in a particular context, we have no particular reason to expect that it would continue to automatically carry out the same (intended) goal if the context changes enough.

I agree with this form of the argument and have no objections to it. I don't think that the points in my post are particularly relevant to that claim. (I've even discussed a form of inner optimization in humans that causes value drift that I don't recall anyone else discussing in those terms before.)

However, I think that many formulations are actually implying, if not outright stating a stronger claim:

- In the case of evolution, humans were originally selected for IGF but are now doing things that are completely divorced from that objective. Thus, even if we train an AI so that it carries out goal X in a particular context, we have a strong reason to expect that its behavior would deviate so much from the goal as to become practically unrecognizable.

So the difference is something like the implied sharpness of the left turn. In the weak version, the claim is just that the behavior might go some unknown amount to the left. We should figure out how to deal with this, but we don't yet have much empirical data to estimate exactly how much it might be expected to go left. In the strong version, the claim is that the empirical record shows that the AI will by default swerve a catastrophic amount to the left.

(Possibly you don't feel that anyone is actually implying the stronger version. If you don't and you would already disagree with the stronger version, then great! We are in agreement. I don't think it matters whether the implication "really is there" in some objective sense, or even whether the original authors intended it or not. I think the relevant thing is that I got that implication from the posts I read, and I expect that if I got it, some other people got it too. So this post is then primarily aimed at the people who did read the strong version to be there and thought it made sense.)

You wrote:

I agree that humans (to a first approximation) still have the goals/drives/desires we were selected for. I don't think I've heard anyone claim that humans suddenly have an art creating drive that suddenly appeared out of nowhere recently, nor have I heard any arguments about inner alignment that depend on an evolution analogy where this would need to be true. The argument is generally that the ancestral environment selected for some drives that in the ancestral environment reliably caused something that the ancestral environment selected for, but in the modern environment the same drives persist but their consequences in terms of [the amount of that which the ancestral environment was selecting for] now changes, potentially drastically.

If we are talking about the weak version of the argument, then yes, I agree with everything here. But I think the strong version - where our behavior is implied to be completely at odds with our original behavior - has to implicitly assume that things like an art-creation drive are something novel.

Now I don't think that anyone who endorses the strong version (if anyone does) would explicitly endorse the claim that our art-creation drive just appeared out of nowhere. But to me, the strong version becomes pretty hard to maintain if you take the stance that we are mostly still executing all of the behaviors that we used to, and it's just that their exact forms and relative weightings are somewhat out of distribution. (Yes, right now our behavior seems to lead to falling birthrates and lots of populations at below replacement rates, which you could argue was a bigger shift than being "somewhat out of distribution", but... to me that intuitively feels like it's less relevant than the fact that most individual humans still want to have children and are very explicitly optimizing for that, especially since we've only been in the time of falling birthrates for a relatively short time and it's not clear whether it'll continue for very long.)

I think the strong version also requires one to hold that evolution does, in fact, consistently and predominantly optimize for a single coherent thing. Otherwise, it would mean that our current-day behaviors could be explained by "evolution doesn't consistently optimize for any single thing" just as well as they could be explained by "we've experienced a left turn from what evolution originally optimized for".

However, it is pretty analogous to RL, and especially multi agent RL, and overall I don't think of the inner misalignment argument as depending on stationarity of the environment in either direction. AlphaGo might early in training select for policies that do tactic X initially because it's a good tactic to use against dumb Go networks, and then once all the policies in the pool learn to defend against that tactic it is no longer rewarded.

I agree that there are contexts where it would be analogous to that. But in that example, AlphaGo is still being rewarded for winning games of Go, and it's just that the exact strategies it needs to use differ. That seems different than e.g. the bacteria example, where bacteria are selected for exactly the opposite traits - either selected for producing a toxin and an antidote, or selected for not producing a toxin and an antidote. That seems to me more analogous to a situation where AlphaGo is initially being rewarded for winning at Go, then once it starts consistently winning it starts getting rewarded for losing instead, and then once it starts consistently losing it starts getting rewarded for winning again.
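As a rough sketch of the contrast I have in mind (a toy, not a description of how AlphaGo is actually trained): a single integer `level` stands in for how strongly the policy plays to win, and the thresholds and step size are arbitrary assumptions.

```python
# Toy sketch: "level" (0..10), the thresholds, and the update rule are hypothetical.

def train(steps, flip_reward, start=5):
    """Nudge the policy toward whatever is currently rewarded.

    With flip_reward=False the reward is always for winning.
    With flip_reward=True the reward switches to losing once the policy
    wins consistently, and back to winning once it loses consistently.
    """
    level = start
    rewarded = "win"
    trajectory = []
    for _ in range(steps):
        level += 1 if rewarded == "win" else -1
        level = max(0, min(10, level))
        trajectory.append(level)
        if flip_reward:
            if rewarded == "win" and level >= 9:
                rewarded = "lose"
            elif rewarded == "lose" and level <= 1:
                rewarded = "win"
    return trajectory


stationary = train(20, flip_reward=False)
flipping = train(20, flip_reward=True)
print(stationary[-1])                # settles at the maximum
print(min(flipping), max(flipping))  # keeps swinging between the extremes
```

Under the stationary reward the policy is pushed in one direction throughout, whereas under the flipping reward it is repeatedly pushed toward opposite traits, which is closer to the toxin/antidote case.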

And I don't think that that kind of a situation is even particularly rare - anything that consumes energy (be it a physical process such as producing a venom or a fur, or a behavior such as enjoying exercise) is subject to that kind of an "either/or" choice.

Now you could say that "just like AlphaGo is still rewarded for winning games of Go and it's just the strategies that differ, the organism is still rewarded for reproducing and it's just the strategies that differ". But I think the difference is that for AlphaGo, the rewards are consistently shaping its "mind" towards having a particular optimization goal - one where the board is in a winning state for it.

And one key premise on which the "standard argument from evolution" rests is that evolution has not consistently shaped the human mind in such a direct manner. It's not that we have been created with "I want to have surviving offspring" as our only explicit cognitive goal, with all of the evolutionary training going into learning better strategies to get there by explicit (or implicit) reasoning. Rather we have been given various motivations that exhibit varying degrees of directness in how useful they are for that goal - from "I want to be in a state where I produce great art" (quite indirect) to "I want to have surviving offspring" (direct), with the direct goal competing with all the indirect ones for priority. This is unlike AlphaGo, which does have the cognitive capacity for direct optimization toward its goal, with that goal having been the sole reward criterion all along.

This is also a bit hard to put a finger on, but I feel like there's some kind of implicit bait-and-switch happening with the strong version of the standard argument. It correctly points out that we have not had IGF as our sole explicit optimization goal because we didn't start by having enough intelligence for that to work. Then it suggests that because of this, AIs are likely to also be misaligned... even though, unlike with human evolution, we could just optimize them for one explicit goal from the beginning, so we should expect our AIs to be much more reliably aligned with that goal!

I think the main crux is that in my mind, the thing you call the "weak version" of the argument simply is the only and sufficient argument for inner misalignment and very sharp left turn. I am confused precisely what distinction you draw between the weak and strong version of the argument; the rest of this comment is an attempt to figure that out.

My understanding is that in your view, having the same drive as before means also having similar actions as before. For example, if humans have a drive for making art, in the ancestral environment this means drawing on cave walls (maybe this helped communicate the whereabouts of food in the ancestral environment). In the modern environment, this may mean passing up a more lucrative job opportunity to be an artist, but it still means painting on some other surface. Thus, the art drive, taking almost the same kinds of actions it ever did (maybe we use acrylic paints from the store instead of grinding plants into dyes ourselves), no longer results in the same consequences in amount of communicating food locations or surviving and having children or whatever it may be. But this is distinct from a sharp left turn, where the actions also change drastically (from helping humans to killing humans).

I agree this is more true for some drives. However, I claim that the association between drives and behaviors is not true in general. I claim humans have a spectrum of different kinds of drives, which differ in how specifically the drive specifies behavior. At one end of the spectrum, you can imagine stuff like breathing or blinking where it's kind of hard to even say whether we have a "breathing goal" or a clock that makes you breathe regularly--the goal is the behavior, in the same way a cup has the "goal" of holding water. At this end of the spectrum it is valid to use goal/drive and behavior interchangeably. At the other end of the spectrum are goals/drives which are very abstract and specify almost nothing about how you get there: drives like desire for knowledge and justice and altruism and fear of death.

The key thing that makes these more abstract drives special is that because they do not specifically prescribe actions, the behaviors are produced by the humans reasoning about how to achieve the drive, as opposed to behaviors being selected for by evolution directly. This means that a desire for knowledge can lead to reading books, or launching rockets, or doing crazy abstract math, or inventing Anki, or developing epistemology, or trying to build AGI, etc. None of these were specifically behaviors that evolution could have reinforced in us--the behaviors available in the ancestral environment were things like "try all the plants to see which ones are edible". Evolution reinforced the abstract drive for knowledge, and left it up to individual human brains to figure out what to do, using the various Lego pieces of cognition that evolution built for us.

This means that the more abstract drives can actually suddenly just prescribe really different actions when important facts in the world change, and those actions will look very different from the kinds of actions previously taken. To take a non-standard example, for the entire history of the existence of humanity up until quite recently, it just simply has not been feasible for anyone to contribute meaningfully to eradicating entire diseases (indeed, for most of human history there was no understanding of how diseases actually worked, and people often just attributed it to punishment of the gods or otherwise found some way to live with it, and sometimes, as a coping mechanism, to even think the existence of disease and death necessary or net good). From the outside it may appear as if for the entire history of humanity there was no drive for disease eradication, and then suddenly in the blink of an evolutionary timescale eye a bunch of humans developed a disease eradication drive out of nowhere, and then soon thereafter suddenly smallpox stopped existing (and soon potentially malaria and polio). These will have involved lots of novel (on evolutionary timescale) behaviors like understanding and manufacturing microscopic biological things at scale, or setting up international bodies for coordination. In actuality, this was driven by the same kinds of abstract drives that have always existed like curiosity and fear of death and altruism, not some new drive that popped into being, but it involved lots of very novel actions steering towards a very difficult target.

I don't think any of these arguments depend crucially on whether there is a sole explicit goal of the training process, or if the goal of the training process changes a bunch. The only thing the argument depends on is whether there exist such abstract drives/goals (and there could be multiple). I think there may be a general communication issue where there is a type of person that likes to boil problems down to their core, which is usually some very simple setup, but then neglects to actually communicate why they believe this particular abstraction captures the thing that matters.

I am confused by your AlphaGo argument because "winning states of the board" looks very different depending on what kinds of tactics your opponent uses, in a very similar way to how "surviving and reproducing" looks very different depending on what kinds of hazards are in the environment. (And winning states of the board always looking like having more territory encircled seems analogous to surviving and reproducing always looking like having a lot of children.)

I think there is also a disagreement about what AlphaGo does, though this is hard to resolve without better interpretability -- I predict that AlphaGo is actually not doing that much direct optimization in the sense of an abstract drive to win that it reasons about, but rather has a bunch of random drives piled up that cover various kinds of situations that happen in Go. In fact, the biggest gripe I have with most empirical alignment research is that I think models today fail to have sufficiently abstract drives, quite possibly for reasons related to why they are kind of dumb today and why things like AutoGPT mysteriously have failed to do anything useful whatsoever. But this is a spicy claim and I think not that many other people would endorse this.

I don't think any of these arguments depend crucially on whether there is a sole explicit goal of the training process, or if the goal of the training process changes a bunch. The only thing the argument depends on is whether there exist such abstract drives/goals

I agree that they don't depend on that. Your arguments are also substantially different from the ones I was criticizing! The ones I was responding to were ones like the following:

The central analogy here is that optimizing apes for inclusive genetic fitness (IGF) doesn't make the resulting humans optimize mentally for IGF. Like, sure, the apes are eating because they have a hunger instinct and having sex because it feels good—but it's not like they could be eating/fornicating due to explicit reasoning about how those activities lead to more IGF. They can't yet perform the sort of abstract reasoning that would correctly justify those actions in terms of IGF. And then, when they start to generalize well in the way of humans, they predictably don't suddenly start eating/fornicating because of abstract reasoning about IGF, even though they now could. Instead, they invent condoms, and fight you if you try to remove their enjoyment of good food (telling them to just calculate IGF manually). The alignment properties you lauded before the capabilities started to generalize, predictably fail to generalize with the capabilities. (A central AI alignment problem: capabilities generalization, and the sharp left turn)

15. [...] We didn't break alignment with the 'inclusive reproductive fitness' outer loss function, immediately after the introduction of farming - something like 40,000 years into a 50,000 year Cro-Magnon takeoff, as was itself running very quickly relative to the outer optimization loop of natural selection. Instead, we got a lot of technology more advanced than was in the ancestral environment, including contraception, in one very fast burst relative to the speed of the outer optimization loop, late in the general intelligence game. [...]

16. Even if you train really hard on an exact loss function, that doesn't thereby create an explicit internal representation of the loss function inside an AI that then continues to pursue that exact loss function in distribution-shifted environments. Humans don't explicitly pursue inclusive genetic fitness; outer optimization even on a very exact, very simple loss function doesn't produce inner optimization in that direction. (AGI Ruin: A List of Lethalities)

Those arguments are explicitly premised on humans having been optimized for IGF, which is implied to be a single thing. As I understand it, your argument is just that humans now have some very different behaviors from the ones they used to have, omitting any claims of what evolution originally optimized us for, so I see it as making a very different sort of claim.

To respond to your argument itself:

I agree that there are drives for which the behavior looks very different from anything that we did in the ancestral environment. But does very different-looking behavior by itself constitute a sharp left turn relative to our original values?

I would think that if humans had experienced a sharp left turn, then the values of our early ancestors should look unrecognizable to us, and vice versa. And certainly, there do seem to be quite a few things that our values differ on - modern notions like universal human rights and living a good life while working in an office might seem quite alien and repulsive to some tribal warrior who values valor in combat and killing and enslaving the neighboring tribe, for instance.

At the same time... I think we can still basically recognize and understand the values of that tribal warrior, even if we don't share them. We do still understand what's attractive about valor, power, and prowess, and continue to enjoy those kinds of values in less destructive forms in sports, games, and fiction. We can read Gilgamesh or Homer or Shakespeare and basically get what the characters are motivated by and why they are doing the things they're doing. An anthropologist can go to a remote tribe to live among them and report that they have the same cultural and psychological universals as everyone else and come away with at least some basic understanding of how they think and why.

It's true that humans couldn't eradicate diseases before. But if you went to people very far back in time and told them a story about a group of humans who invented a powerful magic that could destroy diseases forever and then worked hard to do so... then the people of that time would not understand all of the technical details, and maybe they'd wonder why we'd bother bringing the cure to all of humanity rather than just our tribe (though Prometheus is at least commonly described as stealing fire for all of humanity, so maybe not), but I don't think they would find it a particularly alien or unusual motivation otherwise. Humans have hated disease for a very long time, and if they'd lost any loved ones to the particular disease we were eradicating they might even cheer for our doctors and want to celebrate them as heroes.

Similarly, humans have always gone on voyages of exploration - e.g. the Pacific islands were discovered and settled long ago by humans going on long sea voyages - so they'd probably have no difficulty relating to a story about sorcerers going to explore the moon, or of two tribes racing for the glory of getting there first. Babylonians had invented the quadratic formula by 1600 BC and apparently had a form of Fourier analysis by 300 BC, so the math nerds among them would probably have some appreciation of modern-day advanced math if it was explained to them. The Greek philosophers argued over epistemology, and there were apparently instructions on how to animate golems (arguably AGI-like) around by the late 12th/early 13th century.

So I agree that the same fundamental values and drives can create very different behavior in different contexts... but if it is still driven by the same fundamental values and drives in a way that people across time might find relatable, why is that a sharp left turn? Analogizing that to AI, it would seem to imply that if the AI generalized its drives in that kind of way when it came to novel contexts, then we would generally still be happy about the way it had generalized them.

This still leaves us with that tribal warrior disgusted with our modern-day weak ways. I think that a lot of what is going on with him is that he has developed particular strategies for fulfilling his own fundamental drives - being a successful warrior was the way you got what you wanted back in that day - and internalized them as a part of his aesthetic of what he finds beautiful and what he finds disgusting. But it also looks to me like this kind of learning is much more malleable than people generally expect. One's sense of aesthetics can be updated by propagating new facts into it, and strongly-held identities (such as "I am a technical person") can change in response to new kinds of strategies becoming viable, and generally many (I think most) deep-seated emotional patterns can at least in principle be updated. (Generally, I think of human values in terms of a two-level model, where the underlying "deep values" are relatively constant, with emotional responses, aesthetics, identities, and so forth being learned strategies for fulfilling those deep values. The strategies are at least in principle updatable, subject to genetic constraints such as the person's innate temperament that may be more hardcoded.)

I think that the tribal warrior would be disgusted by our society because he would rightly recognize that we have the kinds of behavior patterns that wouldn't bring glory in his society and that his tribesmen would find it shameful to associate with, and also that trying to make it in our society would require him to unlearn a lot of stuff that he was deeply invested in. But if he was capable of making the update that there were still ways for him to earn love, respect, power, and all the other deep values that his warfighting behavior had originally developed to get... then he might come to see our society as not that horrible after all.

I am confused by your AlphaGo argument because "winning states of the board" looks very different depending on what kinds of tactics your opponent uses, in a very similar way to how "surviving and reproducing" looks very different depending on what kinds of hazards are in the environment.

I don't think the actual victory states look substantially different? They're all ones where AlphaGo has more territory than the other player, even if the details of how you get there are going to be different.

I predict that AlphaGo is actually not doing that much direct optimization in the sense of an abstract drive to win that it reasons about, but rather has a bunch of random drives piled up that cover various kinds of situations that happen in Go.

Yeah, I would expect this as well, but those random drives would still be systematically shaped in a consistent direction (that which brings you closer to a victory state).

I agree that "IGF is the objective" is somewhat sloppy shorthand.

It’s used a lot in the comment sections. Do you know a better refutation than this post?

This is a great post! Thank you for writing it.

There's a huge amount of ontological confusion about how to think of "objectives" for optimization processes. I think people tend to take an inappropriate intentional stance and treat something like "deliberately steering towards certain abstract notions" as a simple primitive (because it feels introspectively simple to them). This background assumption casts a shadow over all future analysis, since people try to abstract the dynamics of optimization processes in terms of their "true objectives", when there really isn't any such thing.

Optimization processes (or at least, evolution and RL) are better thought of in terms of what sorts of behavioral patterns were actually selected for in the history of the process. E.g., @Kaj_Sotala's point here about tracking the effects of evolution by thinking about what sorts of specific adaptations were actually historically selected for, rather than thinking about some abstract notion of inclusive genetic fitness, and how the difference between modern and ancestral humans seems much smaller from this perspective.

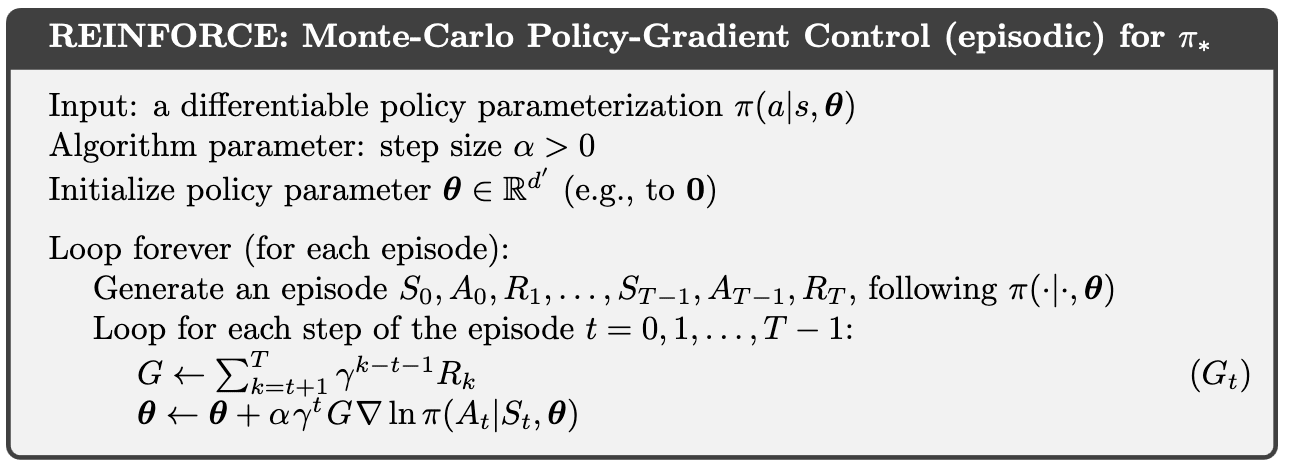

I want to make a similar point about reward in the context of RL: reward is a measure of update strength, not the selection target. We can see as much by just looking at the update equations for REINFORCE (from page 328 of Reinforcement Learning: An Introduction):
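For readers without the book at hand, the update in question is usually written as follows in Sutton and Barto's notation. This is transcribed from the standard form of the algorithm, so treat the exact layout as an assumption; the boxed algorithm in the book also carries a discount factor $\gamma^t$, omitted here.

$$\theta_{t+1} = \theta_t + \alpha \, G_t \, \frac{\nabla \pi(A_t \mid S_t, \theta_t)}{\pi(A_t \mid S_t, \theta_t)} = \theta_t + \alpha \, G_t \, \nabla \ln \pi(A_t \mid S_t, \theta_t)$$

Here $\alpha$ is the step size, $G_t$ is the return from step $t$ onward, and $\pi(A_t \mid S_t, \theta_t)$ is the probability the current policy assigns to the action actually taken.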

The reward[1] is literally a (per step) multiplier of the learning rate. You can also think of it as providing the weights of a linear combination of the parameter gradients, which means that it's the historical action trajectories that determine what subspaces of the parameters can potentially be explored. And due to the high correlations between gradients (at least compared to the full volume of parameter space), this means it's the action trajectories, and not the reward function, that provide most of the information relevant for the NN's learning process.
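A minimal sketch of that reading, assuming a stateless softmax policy over three actions and a batched (whole-episode) version of the update rather than the per-step one in the book. The function names and the toy trajectory are hypothetical, chosen only for illustration.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def grad_log_pi(theta, action):
    """Gradient of log pi(action | theta) for a softmax policy with logits theta."""
    probs = softmax(theta)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]

def reinforce_step(theta, actions, returns, alpha=0.1):
    """Parameter change: alpha * sum_t G_t * grad log pi(A_t | theta).

    The returns G_t only act as weights on the per-step gradients;
    which gradients appear at all is fixed by the actions taken.
    """
    step = [0.0] * len(theta)
    for a, g_t in zip(actions, returns):
        grad = grad_log_pi(theta, a)
        step = [s + g_t * g for s, g in zip(step, grad)]
    return [alpha * s for s in step]

theta0 = [0.0, 0.0, 0.0]
actions = [0, 2, 0]  # hypothetical trajectory: action 1 is never taken

no_reward = reinforce_step(theta0, actions, [0.0, 0.0, 0.0])
unit_reward = reinforce_step(theta0, actions, [1.0, 1.0, 1.0])
double_reward = reinforce_step(theta0, actions, [2.0, 2.0, 2.0])
```

With zero returns the parameters do not move at all, and doubling the returns doubles the step without changing its direction. In this toy setup the returns behave like a learning-rate multiplier on gradients that the action trajectory has already picked out.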

From Survival Instinct in Offline Reinforcement Learning:

on many benchmark datasets, offline RL can produce well-performing and safe policies even when trained with "wrong" reward labels, such as those that are zero everywhere or are negatives of the true rewards. This phenomenon cannot be easily explained by offline RL's return maximization objective. Moreover, it gives offline RL a degree of robustness that is uncharacteristic of its online RL counterparts, which are known to be sensitive to reward design.

Trying to preempt possible confusion:

I expect some people to object that the point of the evolutionary analogy is precisely to show that the high-level abstract objective of the optimization process isn't incorporated into the goals of the optimized product, and that this is a reason for concern because it suggests an unpredictable/uncontrollable mapping between outer and inner optimization objectives.

My point here is that, if you want to judge an optimization process's predictability/controllability, you should not be comparing some abstract notion of the process's "true outer objective" to the result's "true inner objective". Instead, you should consider the historical trajectory of how the optimization process actually adjusted the behaviors of the thing being optimized, and consider how predictable that thing's future behaviors are, given past behaviors / updates.

@Kaj_Sotala argues above that this perspective implies greater consistency in human goals between the ancestral and modern environments, since the goals evolution actually historically selected for in the ancestral environment are ~the same goals humans pursue in the modern environment.

For RL agents, I am also arguing that thinking in terms of the historical action trajectories that were actually reinforced during training implies greater consistency, as compared to thinking of things in terms of some "true goal" of the training process. E.g., Goal Misgeneralization in Deep Reinforcement Learning trained a mouse to navigate to cheese that was always placed in the upper right corner of the maze and found that it would continue going to the upper right even when the cheese was moved.

This is actually a high degree of consistency from the perspective of the historical action trajectories. During training, the mouse continually executed the action trajectories that navigated it to the upper right of the board, and continued to do the exact same thing in the modified testing environment.

- ^

Technically it's the future return in this formulation, and current SOTA RL algorithms can be different / more complex, but I think this perspective is still a more accurate intuition pump than notions of "reward as objective", even for setups where "reward as a learning rate multiplier" isn't literally true.

I think this is really lucid and helpful:

I expect some people to object that the point of the evolutionary analogy is precisely to show that the high-level abstract objective of the optimization process isn't incorporated into the goals of the optimized product, and that this is a reason for concern because it suggests an unpredictable/uncontrollable mapping between outer and inner optimization objectives.

My point here is that, if you want to judge an optimization process's predictability/controllability, you should not be comparing some abstract notion of the process's "true outer objective" to the result's "true inner objective". Instead, you should consider the historical trajectory of how the optimization process actually adjusted the behaviors of the thing being optimized, and consider how predictable that thing's future behaviors are, given past behaviors / updates.

@Kaj_Sotala argues above that this perspective implies greater consistency in human goals between the ancestral and modern environments, since the goals evolution actually historically selected for in the ancestral environment are ~the same goals humans pursue in the modern environment.

This seems to be making the same sort of deepity that TurnTrout is making in his 'reward is not the optimization target', in taking a minor point about model-free RL approaches not necessarily building in any explicit optimization/planning for reward into their policy, and then people not understanding it because it ducks the major issue, while handwaving a lot of points. (Especially bad: infanticide is not a substitute for contraception because pregnancy is outrageously fatal and metabolically expensive, which is precisely why the introduction of contraception has huge effects everywhere it happens and why hunter-foragers have so many kids while contemporary women have fewer than they want to. Infanticide is just about the worst possible form of contraception short of the woman dying. I trust you would not argue that 'suicide is just as effective a contraceptive as infanticide or condoms' using the same logic - after all, if the mother is dead, then there are definitely no more kids...)

In particular, this fundamentally does not answer the challenge I posed earlier by pointing to instances of sperm bank donors who quite routinely rack up hundreds of offspring, while being in no way special other than having a highly-atypical urge to have lots of offspring. You can check this out very easily in seconds and verify that you could do the same thing with less effort than you've probably put into some video games. And yet, you continue to read this comment. Here, look, you're still reading it. Seconds are ticking away while you continue to forfeit (I will be generous and pretend that a LWer is likely to have median number of kids) much more than 10,000% more fitness at next to no cost of any kind. And you know this because you are a model-based RL agent who can plan and predict the consequences of actions based solely on observations (like of text comments) without any additional rewards, you don't have to wait for model-free mechanisms like evolution to slowly update your policy over countless rewards. You are perfectly able to predict that if the status quo lasted for enough millennia, this would stop being true; men would gradually be born with a baby-lust, and would flock to sperm donation banks (assuming such things even still existed under the escalating pressure); you know what the process of evolution would do and is doing right now very slowly, and yet, using your evolution-given brain, you still refuse to reap the fitness rewards of hundreds of offspring right now, in your generation, with yourself, for your genes. How is this not an excellent example of how under novel circumstances, inner-optimizers (like human brains) can almost all (serial sperm donor cases like hundreds out of billions) diverge extremely far (if forfeiting >10,000% is not diverging far, what would be?) from the optimization process's reward function (within-generation increase in allele frequencies), while pursuing other rewards (whatever it is you are enjoying doing while very busy not ever donating sperm)? Certainly if AGI were as well-aligned with human values as we are with inclusive fitness, that doesn't seem to bode very well for how human values will be fulfilled over time as the AGI-environment changes ever more rapidly & at scale - I don't know what the 'masturbation, porn, or condom of human values' is, and I'd rather not find out empirically how diabolically clever reward hacks can be when found by superhuman optimization processes at scale targeting the original human values process...

This seems to entirely ignore the actual point that is being made in the post. The point is that "IGF" is not a stable and contentful loss function, it is a misleadingly simple shorthand for "whatever traits are increasing their own frequency at the moment." Once you see this, you notice two things:

- In some weak sense, we are fairly well "aligned" to the "traits" that were selected for in the ancestral environment, in particular our social instincts.

- All of the ways in which ML is disanalogous with evolution indicate that alignment will be dramatically easier and better for ML models. For starters, we don't randomly change the objective function for ML models throughout training. See Quintin's post for many more disanalogies.

The main problem I have with this type of reasoning is the arbitrarily drawn ontological boundaries. Why is IGF "not real" while the ML objective function is "real"? If we really zoom in on the training process, the training goal that can be verified in a brutally positivist way is "whatever direction in coefficient space decreases the loss function on the current batch of data", which seems to me to correspond pretty closely to "whatever traits are spreading in the current environment".
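A toy sketch of that zoomed-in view, assuming a one-parameter least-squares model and two made-up minibatches (none of this comes from an actual training setup). It only illustrates that the descent direction at a given step is a property of the current batch.

```python
def batch_gradient(w, batch):
    """Gradient of mean squared error for the model y_hat = w * x on one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

w = 0.0
batch_a = [(1.0, 2.0), (2.0, 4.0)]    # hypothetical data consistent with w = 2
batch_b = [(1.0, -2.0), (2.0, -4.0)]  # hypothetical data consistent with w = -2

grad_a = batch_gradient(w, batch_a)   # negative: a descent step increases w
grad_b = batch_gradient(w, batch_b)   # positive: a descent step decreases w
```

From the same starting parameter, the two batches push in opposite directions. Whether this per-batch variation is a close analogue of a shifting selective environment is the point under dispute; the sketch does not settle it.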

infanticide is not a substitute for contraception

I did not mean to say that they would be exactly equivalent nor that infanticide would be without significant downsides.

How is this not an excellent example of how under novel circumstances, inner-optimizers (like human brains) can almost all (serial sperm donor cases like hundreds out of billions) diverge extremely far (if forfeiting >10,000% is not diverging far, what would be?) from the optimization process's reward function (within-generation increase in allele frequencies), while pursuing other rewards (whatever it is you are enjoying doing while very busy not ever donating sperm)?

"Inner optimizers diverging from the optimization process's reward function" sounds to me like humans were already donating to sperm banks in the EEA, only for an inner optimizer to wreak havoc and sidetrack us from that. I assume you mean something different, since under that interpretation of what you mean the answer would be obvious - that we don't need to invoke inner optimizers because there were no sperm banks in the EEA, so "that's not the kind of behavior that evolution selected for" is a sufficient explanation.

The "why aren't men all donating to sperm banks" argument assumes that 1.) evolution is optimizing for some simple reducible individual level IGF objective, and 2.) that anything less than max individual score on that objective over most individuals is failure.

No AI we create will be perfectly aligned, so instead all that actually matters is the net utility that AI provides for its creators: something like the dot product between our desired future trajectory and that of the agents. More powerful agents/optimizers will move the world farther faster (longer trajectory vector) which will magnify the net effect of any fixed misalignment (cos angle between the vectors), sure. But that misalignment angle is only relevant/measurable relative to the net effect - and by that measure human brain evolution was an enormous unprecedented success according to evolutionary fitness.
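As a rough numerical illustration of this picture, assume a two-dimensional world where the desired direction is a unit vector and the agent's trajectory sits at a fixed angle to it. The function name and the numbers are hypothetical, and the model is deliberately simplistic.

```python
import math

def trajectory_components(magnitude, misalignment_deg):
    """Split an agent's trajectory into parts along and across the desired direction.

    magnitude: how far the agent moves the world (length of its trajectory vector).
    misalignment_deg: fixed angle between the agent's and the desired direction.
    """
    angle = math.radians(misalignment_deg)
    aligned = magnitude * math.cos(angle)      # dot product with the unit desired vector
    off_target = magnitude * math.sin(angle)   # component orthogonal to it
    return aligned, off_target

weak = trajectory_components(1.0, 10.0)
strong = trajectory_components(100.0, 10.0)
```

At a fixed angle, a 100x more powerful agent produces 100x more off-target movement but also 100x more movement in the desired direction, so the ratio between the two is unchanged. This is only the linear toy version of the claim.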

Evolution is a population optimization algorithm that explores a solution landscape via a huge number N of samples in parallel, where individuals are the samples. Successful species with rapidly growing populations will naturally experience growth in variance/variation (à la adaptive radiation) as the population grows. Evolution only proceeds by running many, many experiments, most of which must be failures in a strict score sense - that's just how it works.

Using even the median sample's fitness would be like faulting SGD for every possible sample of the weights at any point during a training process. For SGD all that matters is the final sample, and likewise all that 'matters' for evolution is the tiny subset of most future fit individuals (which dominate the future distribution). To the extent we are/will use evolutionary algorithms for AGI design, we also select only the best samples to scale up, so only the alignment of the best samples is relevant for similar reasons.

So if we are using individual human samples as our point of comparison for the analogy, the humans that matter for comparing the relative success of evolution at brain alignment are the most successful: modern sperm donors, Genghis Khan, etc. Evolution has maintained a sufficiently large subpopulation of humans who do explicitly optimize for IGF even in the modern environment (to the extent that makes sense translated into their ontology), so it's doing very well in that regard (and indeed it always needs to maintain a large, diverse, high-variance population distribution to enable quick adaptation to environmental changes).

We aren't even remotely close to stressing brain alignment to IGF. Most importantly we don't observe species going extinct because they evolved general intelligence, experienced a sharp left turn, and then died out due to declining populations. But the sharp left turn argument does predict that, so it's mostly wrong.

No AI we create will be perfectly aligned, so instead all that actually matters is the net utility that AI provides for its creators: something like the dot product between our desired future trajectory and that of the agents. More powerful agents/optimizers will move the world farther faster (longer trajectory vector) which will magnify the net effect of any fixed misalignment (cos angle between the vectors), sure. But that misalignment angle is only relevant/measurable relative to the net effect - and by that measure human brain evolution was an enormous unprecedented success according to evolutionary fitness.

The vector dot product model seems importantly false, for basically the reason sketched out in this comment; optimizing a misaligned proxy isn't about taking a small delta and magnifying it, but about transitioning to an entirely different policy regime (vector space) where the dot product between our proxy and our true alignment target is much, much larger (effectively no different from that of any other randomly selected pair of vectors in the new space).

(You could argue humans haven't fully made that phase transition yet, and I would have some sympathy for that argument. But I see that as much more contingent than necessarily true, and mainly a consequence of the fact that, for all of our technological advances, we haven't actually given rise to that many new options preferable to us but not to IGF. On the other hand, something like uploading I would expect to completely shatter any relation our behavior has to IGF maximization.)

The vector dot product model seems importantly false, for basically the reason sketched out in this comment;

Notice I replied to that comment you linked and agreed with John, not that any generalized vector dot product model is wrong, but that the specific one in that post is wrong because it doesn't weight by expected probability (i.e., it uses an incorrect distance function).

Anyway I used that only as a convenient example to illustrate a model which separates degree of misalignment from net impact, my general point does not depend on the details of the model and would still stand for any arbitrarily complex non-linear model.

The general point being that degree of misalignment is only relevant to the extent it translates into a difference in net utility.

You could argue humans haven't fully made that phase transition yet, and I would have some sympathy for that argument.

From the perspective of evolutionary fitness, humanity is the ultimate runaway success - AFAIK we are possibly the species with the fastest growth in fitness ever in the history of life. This completely overrides any and all arguments about possible misalignment, because any such misalignment is essentially epsilon in comparison to the fitness gain brains provided.

For AGI, there is a singular correct notion of misalignment which actually matters: how does the creation of AGI - as an action - translate into differential utility, according to the utility function of its creators? If AGI is aligned to humanity about the same as brains are aligned to evolution, then AGI will result in an unimaginable increase in differential utility which vastly exceeds any slight misalignment.

You can speculate all you want about the future and how brains may become misaligned in the future, but that is just speculation.

If you actually believe the sharp left turn argument holds water, where is the evidence?

As I said earlier, this evidence must take a specific form, as evidence in the historical record:

We aren't even remotely close to stressing brain alignment to IGF. Most importantly we don't observe species going extinct because they evolved general intelligence, experienced a sharp left turn, and then died out due to declining populations. But the sharp left turn argument does predict that, so it's mostly wrong.

Notice I replied to that comment you linked and agreed with John, not that any generalized vector dot product model is wrong, but that the specific one in that post is wrong because it doesn't weight by expected probability (i.e., it uses an incorrect distance function).

Anyway I used that only as a convenient example to illustrate a model which separates degree of misalignment from net impact, my general point does not depend on the details of the model and would still stand for any arbitrarily complex non-linear model.

The general point being that degree of misalignment is only relevant to the extent it translates into a difference in net utility.

Sure, but if you need a complicated distance metric to describe your space, that makes it correspondingly harder to actually describe utility functions corresponding to vectors within that space which are "close" under that metric.

If you actually believe the sharp left turn argument holds water, where is the evidence?

As I said earlier, this evidence must take a specific form, as evidence in the historical record

Hold on; why? Even for simple cases of goal misspecification, the misspecification may not become obvious without a sufficiently OOD environment; does that thereby mean that no misspecification has occurred?

And in the human case, why does it not suffice to look at the internal motivations humans have, and describe plausible changes to the environment for which those motivations would then fail to correspond even approximately to IGF, as I did w.r.t. uploading?

But I see that as much more contingent than necessarily true, and mainly a consequence of the fact that, for all of our technological advances, we haven't actually given rise to that many new options preferable to us but not to IGF. On the other hand, something like uploading I would expect to completely shatter any relation our behavior has to IGF maximization.

It seems to me that this suffices to establish that the primary barrier against such a breakdown in correspondence is that of insufficient capabilities—which is somewhat the point!

If you actually believe the sharp left turn argument holds water, where is the evidence? As I said earlier, this evidence must take a specific form, as evidence in the historical record

Hold on; why? Even for simple cases of goal misspecification, the misspecification may not become obvious without a sufficiently OOD environment;

Given any practical and reasonably aligned agent, there is always some set of conceivable OOD environments where that agent fails. Who cares? There is a single success criterion: utility in the real world! The success criterion is not "is this design perfectly aligned according to my adversarial pedantic critique".

The sharp left turn argument uses the analogy of brain evolution misaligned to IGF to suggest/argue for doom from misaligned AGI. But brains enormously increased human fitness rather than the predicted decrease, so the argument fails.

In worlds where 1. alignment is very difficult, and 2. misalignment leads to doom (low utility) this would naturally translate into a great filter around intelligence - which we do not observe in the historical record. Evolution succeeded at brain alignment on the first try.

And in the human case, why does it not suffice to look at the internal motivations humans have, and describe plausible changes to the environment for which those motivations would then fail

I think this entire line of thinking is wrong - you have little idea what environmental changes are plausible and next to no idea of how brains would adapt.

On the other hand, something like uploading I would expect to completely shatter any relation our behavior has to IGF maximization.

When you move the discussion to speculative future technology to support the argument from a historical analogy, you have conceded that the historical analogy does not support your intended conclusion (and indeed it cannot, because Homo sapiens is an enormous alignment success).

It sounds like you're arguing that uploading is impossible, and (more generally) have defined the idea of "sufficiently OOD environments" out of existence. That doesn't seem like valid thinking to me.

Of course I'm not arguing that uploading is impossible, and obviously there are always hypothetical "sufficiently OOD environments". But from the historical record so far we can only conclude that evolution's alignment of brains was robust enough compared to the environment distribution shift encountered - so far. Naturally that could all change in the future, given enough time, but piling in such future predictions is clearly out of scope for an argument from historical analogy.

These are just extremely different:

- an argument from historical observations

- an argument from future predicted observations

It's like I'm arguing that given that we observed the sequence 0,1,3,7 the pattern is probably 2^N-1, and you are arguing that it isn't because you predict the next number is 31.

Regardless, uploads are arguably sufficiently categorically different that it's questionable how they even relate to the evolutionary success of Homo sapiens brain alignment to genetic fitness (do sims of humans count for genetic fitness? but only if DNA is modeled in some fashion? to what level of approximation? etc.)

How is this not an excellent example of how under novel circumstances, inner-optimizers (like human brains) can almost all (serial sperm donor cases like hundreds out of billions) diverge extremely far (if forfeiting >10,000% is not diverging far, what would be?) from the optimization process's reward function (within-generation increase in allele frequencies), while pursuing other rewards (whatever it is you are enjoying doing while very busy not ever donating sperm)?

I think it's inappropriate to use technical terms like "reward function" in the context of evolution, because evolution's selection criteria serve vastly different mechanistic functions from eg a reward function in PPO.[1] Calling them both a "reward function" makes it harder to think precisely about the similarities and differences between AI RL and evolution, while invalidly making the two processes seem more similar. That is something which must be argued for, and not implied through terminology.

- ^

And yes, I wish that "reward function" weren't also used for "the quantity which an exhaustive search RL agent argmaxes." That's bad too.

Yeah.

The fact that we don't have standard mechanistic models of optimization via selection (which is what evolution and moral mazes and inadequate equilibria and multipolar traps essentially are) is likely a fundamental source of confusion when trying to get people on the same page about the dangers of optimization and how relevant evolution is, as an analogy.

>You can check this out very easily in seconds and verify that you could do the same thing with less effort than you've probably put into some video games.

Indeed. Donating sperm over the Internet costs approximately $125 per donation (most of which is FedEx overnight shipping costs, and often the recipient will cover these) and has about a 10% pregnancy success rate per cycle.

See: https://www.irvinesci.com/refrigeration-medium-tyb-with-gentamicin.html

and https://www.justababy.com/

I agree that humans are not aligned with inclusive genetic fitness, but I think you could look at evolution as a bunch of different optimizers at any small stretch in time and not just a single optimizer. If not getting killed by spiders is necessary for IGF, for example, then evolution could be thought of as an optimizer both for IGF and for not getting killed by spiders. Some of these optimizers have created mesa-optimizers that resemble the original optimizer to a strong degree. Most people really care about their own biological children not dying, for example. I think that thinking about evolution as multiple optimizers makes it seem more likely that gradient descent is able to instill correct human values sometimes rather than never.

Pregnancy is certainly costly (and the abnormally high miscarriage rate appears to be an attempt to save on such costs in case anything has gone wrong), but it's not that fatal (for the mother). A German midwife recorded one maternal death out of 350 births.

I expect you to be making a correct and important point here, but I don't think I get it yet. I feel confused because I don't know what it would mean for this frame to make false predictions. I could say "Evolution selected me to have two eyeballs" and I go "Yep I have two eyeballs"? "Evolution selected for [trait with higher fitness]" and then "lots of people have trait of higher fitness" seems necessarily true?

I feel like I'm missing something.

Oh. Perhaps it's nontrivial that humans were selected to value a lot of stuff, and (different, modern) humans still value a lot of stuff, even in today's different environment? Is that the point?

Perhaps it's nontrivial that humans were selected to value a lot of stuff

I prefer the reverse story: humans are tools in the hands of the angiosperms, and they’re still doing the job these plants selected them for: they defend angiosperms at all costs. If a superintelligent AI destroys 100% of humans along with 99% of life on Earth, they’ll call that the seed phase and settle into the newly emptied environment that they had us clear for them.

Oh. Perhaps it's nontrivial that humans were selected to value a lot of stuff, and (different, modern) humans still value a lot of stuff, even in today's different environment? Is that the point?

Sort of, but I think it is more specific than that. As I point out in my AI pause essay:

An anthropologist looking at humans 100,000 years ago would not have said humans are aligned to evolution, or to making as many babies as possible. They would have said we have some fairly universal tendencies, like empathy, parenting instinct, and revenge. They might have predicted these values will persist across time and cultural change, because they’re produced by ingrained biological reward systems. And they would have been right.

I take this post to be mostly negative, in that it shows that "IGF" is not a unified loss function; its content is entirely dependent on the environmental context, in ways that ML loss functions are not.

As I point out in my AI pause essay:

Nitpick in there

I hope the reader will grant that the burden of proof is on those who advocate for such a moratorium. We should only advocate for such heavy-handed government action if it’s clear that the benefits of doing so would significantly outweigh the costs.

I find it hard to grant something that would have made our responses to pandemics or global warming even slower than they are. By the same reasoning, we would not have the Montreal Protocol, and UV levels would be a public concern.

Some people will end up valuing children more, for complicated reasons; other people will end up valuing other things more, again for complicated reasons.

Right, because somewhere pretty early in evolutionary history, people (or animals) which valued stuff other than having children for complicated reasons eventually had more descendants than those who didn't. Probably because wanting lots of stuff for complicated reasons (and getting it) is correlated with being smart and generally capable, which led to having more descendants in the long run.

If evolution had ever stumbled upon some kind of magical genetic mutation that resulted in individuals directly caring about their IGF (and improved or at least didn't damage their general reasoning abilities and other positive traits) it would have surely reached fixation rather quickly. I call such a mutation "magical" because it would be impossible (or at least extremely unlikely) to occur through the normal process of mutation and selection on Earth biology, even with billions of chances throughout history. Also, such a mutation would necessarily have to happen after the point at which minds that are even theoretically capable of understanding an abstract concept like IGF already exist.

But this seems more like a fact about the restricted design space and options available to natural selection on biological organisms, rather than a generalizable lesson about mind design processes.

I don't know what the exact right analogies between current AI design and evolution are. But generally capable agents with complex desires are a useful and probably highly instrumentally convergent solution to the problem of designing a mind that can solve really hard and general problems, whether the problem is cast as image classification, predicting text, or "caring about human values", and whether the design process involves iterative mutation over DNA or intelligent designers building artificial neural networks and training them via SGD.

To the degree that current DL-paradigm techniques for creating AI are analogous to some aspect of evolution, I think that is mainly evidence about whether such methods will eventually produce human-level general and intelligent minds at all.

I think this post somewhat misunderstands the positions that it summarizes and argues against, but to the degree that it isn't doing that, I think you should mostly update towards current methods not scaling to AGI (which just means capabilities researchers will try something else...), rather than updating towards current methods being safe or robust in the event that they do scale.

A semi-related point: humans are (evidently, through historical example or introspection) capable of various kinds of orthogonality and alignment failure. So if current AI training methods don't produce such failures, they are less likely to produce human-like (or possibly human-level capable) minds at all. "Evolution provides little or no evidence that current DL methods will scale to produce human-level AGI" is a stronger claim than I actually believe, but I think it is a more accurate summary of what some of the claims and evidence in this post (and others) actually imply.

If evolution had ever stumbled upon some kind of magical genetic mutation that resulted in individuals directly caring about their IGF (and improved or at least didn't damage their general reasoning abilities and other positive traits) it would have surely reached fixation rather quickly.

CRISPR gene drives reach fixation even faster, even if they seriously harm IGF.

Indeed, when you add an intelligent designer with the ability to precisely and globally edit genes, you've stepped outside the design space available to natural selection, and you can end up with some pretty weird results! I think you could also use gene drives to get an IGF-boosting gene to fixation much faster than would occur naturally.

I don't think gene drives are the kind of thing that would ever occur via iterative mutation, but you can certainly have genetic material with very high short-term IGF that eventually kills its host organism or causes extinction of its host species.

Some animal species adopt contraception-like practices too. For example, birds of prey typically let some of their offspring die of hunger when prey is scarce.

Compare two pairs of statements: "evolution optimizes for IGF" and "evolution optimizes for near-random traits"; "we optimize for aligned models" and "we optimize for models which get good metrics on the training dataset".

I feel like a lot of your examples could be captured perfectly well by game theory, and thus can hardly be considered counterexamples. For instance, take the bush/tree example: it's common in game theory that one individual benefitting leads to another individual doing worse, but that doesn't mean that the individuals aren't optimizing for utility.

In the sections before that, I argued that there’s no single thing that evolution selects for; rather, the thing that it’s changing is constantly changing itself.

"The thing that it's selecting for is itself constantly changing"?

Thanks, edited:

I argued that there’s no single thing that evolution selects for; rather, the thing that it’s selecting is constantly changing.

Forager societies didn't have below-replacement fertilities, which are now common for post-industrial societies.

Having children wasn't a paying venture, but people had kids anyway for the same reason other species expend energy on offspring.

Although I enjoyed thinking about this post, I don't currently trust the reasoning in it, and decided not to update off it, for reasons I summarize as:

- You are trying to compare two different levels of abstraction and the "natural" models that come out of them. I think you did not make good arguments for why the more detail-oriented one would generalize better than the more abstract one. I think your argumentation boils down to an implicit notion that seeing more detail is better, which is true as information, but does not mean the shape of the concepts expressing such details is a better model (overfitting).

- Your reasoning in certain places lacks quantification where the argument rests on it.

More detailed comments, in order of appearance in the post:

Observing this selection process, we can calculate the IGF of traits currently under selection, as a measure of how strongly those are being selected. But evolution is not optimizing for this measure; evolution is optimizing for the traits that have currently been chosen for optimization.

If IGF is how many new copies of the gene pop up in the next generation, can't I say that increasing IGF is a good general-level description of what's going on, and at the same time look at the details? Why the "but"? Maybe I'm nitpicking words, though. Also, the second sentence confuses me, although after reading the post I think I understand what you mean.

Rather, they cautioned against thinking of evolution as an active agent that “does” anything in the first place.

I expect this sentence in the textbook to be meant as advice against anthropomorphizing: putting oneself into the shoes of evolution and using one's own instinct and judgement to see what to do. I think it is possible to analyze evolution as an agent, if one is careful to employ abstract reasoning, without unwittingly ascribing "goodness" or "justice" or whatever to the agent.

If we were modeling evolution as a mathematical function, we could say that it was first selecting for light coloration in moths, then changed to select for dark, then changed to select for light again.

To me this looks poor if considered as a model. The previous paragraph shows that you understand the process as the environment changing which genes get selected, which still means that what's going on is increasing IGF. You would predict other similar scenarios by thinking that such-and-such environmental factor makes such-and-such genes be the ones increasing their presence. Looking at precisely which genes were selected at which point, and why, doesn't automatically give you a better model than thinking in terms of IGF plus external knowledge about the laws of reality.
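To make the "IGF plus external knowledge" framing concrete, here is a toy sketch of the moth case as a haploid one-locus model. Everything in it is an assumption made for illustration: the 10% fitness penalty for the mismatched colour, the starting frequency, and the environment schedule are invented numbers, not data. The point is only that the update rule (whichever allele has higher relative fitness increases in frequency) never changes, while the environment decides which allele that is.

```python
def next_freq(p_dark, w_dark, w_light):
    """One generation of selection on a haploid population.

    p_dark is the current frequency of the dark allele; w_dark and
    w_light are the relative fitnesses of the two colour morphs.
    """
    mean_w = p_dark * w_dark + (1 - p_dark) * w_light
    return p_dark * w_dark / mean_w


def simulate(p0, schedule, penalty=0.1):
    """Track the dark-allele frequency through a sequence of environments.

    `schedule` lists the environment for each generation ("clean" or
    "sooty" bark). `penalty` is a made-up fitness cost for the morph
    that stands out against the bark.
    """
    p = p0
    history = [p]
    for env in schedule:
        if env == "sooty":
            w_dark, w_light = 1.0, 1.0 - penalty  # dark moths are camouflaged
        else:
            w_dark, w_light = 1.0 - penalty, 1.0  # light moths are camouflaged
        p = next_freq(p, w_dark, w_light)
        history.append(p)
    return history


# Hypothetical schedule: clean bark, then industrial soot, then clean again.
schedule = ["clean"] * 50 + ["sooty"] * 100 + ["clean"] * 100
history = simulate(0.5, schedule)

for generation in (0, 50, 150, 250):
    print(generation, round(history[generation], 4))
```

Under these assumptions the dark allele nearly disappears, then nearly fixes, then nearly disappears again. Describing that as "selecting for light, then dark, then light" and describing it as "relative fitness going up, given what the bark looks like" are both readings of the same ten lines of dynamics.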

This leads to the trees becoming more common than the bushes. But since trees need to spend much more energy on producing and maintaining their trunk, they don’t have as much energy to spend on growing fruit. When trees were rare and mostly stealing energy from the bushes, this wasn’t as much of a problem; but once the whole population consists of trees, they can end up shading each other. At this point, they end up producing much less fruit from which new trees could grow, so have fewer offspring and thus a lower mean fitness.

This story is still compatible with the description that, at each point, evolution is following IGF locally, though not globally. I think this checks out with "reward is not the utility function" and such, and also with "selecting for IGF does not produce IGF-maximizing brains". Though all this also makes me suspect that I could be misunderstanding too many things at once.
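A toy frequency-dependent selection model shows how "following IGF locally, though not globally" can look. The linear fitness functions below are invented for illustration and are not taken from the post: they are just one simple choice where a tree always out-reproduces a bush at the current mix, yet a population of only trees produces less fruit per plant than a population of only bushes.

```python
def tree_fitness(q):
    """Fruit output of a tree when a fraction q of plants are trees.

    Assumed shape: trees do well while they mostly shade bushes, and
    worse once their neighbours are mostly other trees.
    """
    return 1.1 - 0.5 * q


def bush_fitness(q):
    """Fruit output of a bush when a fraction q of plants are trees.

    Assumed shape: bushes lose light in proportion to how many trees
    are around them.
    """
    return 1.0 - 0.6 * q


def simulate(q0, generations):
    """Replicator dynamics for the tree fraction q.

    Returns a list of (q, tree fitness, bush fitness, mean fitness)
    tuples, one per generation, recorded before each update.
    """
    q = q0
    records = []
    for _ in range(generations):
        w_tree = tree_fitness(q)
        w_bush = bush_fitness(q)
        mean_w = q * w_tree + (1 - q) * w_bush
        records.append((q, w_tree, w_bush, mean_w))
        q = q * w_tree / mean_w
    return records


records = simulate(q0=0.01, generations=200)

first_q, _, _, first_mean = records[0]
last_q, _, _, last_mean = records[-1]
print("tree fraction:", round(first_q, 3), "->", round(last_q, 3))
print("mean fitness: ", round(first_mean, 3), "->", round(last_mean, 3))
```

In every generation the tree has the higher relative fitness, so selection keeps pushing the tree fraction up, and mean fitness falls from about 1.0 to about 0.6 along the way. Whether that counts as "optimizing for IGF" depends on whether the term is read as a local, relative quantity or as a population-level one.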

Effective contraception is a relatively recent innovation. Even hunter-gatherers have access to effective “contraception” in the form of infanticide, which is commonly practiced among some modern hunter-gatherer societies.

The first "effective" here is bigger than the second. See gwern's comment.

Particularly sensitive readers may want to skip the following paragraphs from The Anthropology of Childhood:

I expect these examples to be cherry-picked. I do not expect ancient societies to have intentionally killed, on average, 54/141 ≈ 38% of their kids, i.e. more than 1 out of 3. I hold this view loosely, though, given my ignorance of the matter.

Also, even though the share of voluntarily childfree people is increasing, it’s still not the predominant choice. One 2022 study found that 22% of the people polled neither had nor wanted to have children - which is a significant amount, but still leaves 78% of people as ones who either have or want to have children. There’s still a strong drive to have children that’s separate from the drive to just have sex.

This is getting distracted by a subset of details when you can look at fertility rates, and possibly at how they relate to wealth, and then at the trajectory of the world. I have the impression there's scientific consensus that fertility will probably go down even in countries which currently have a high fertility rate, as they get richer.

It’s a novel cultural development that we prioritize things other-than-having-children so much. Anthropology of Childhood spends significant time examining the various factors that affect the treatment of children in various cultures. It quite strongly argues that the value of children has always also been strongly contingent on various cultural and economic factors - meaning that it has always been just one of the things that people care about. (In fact, a desire to have lots of children may be more tied to agricultural and industrial societies, where the economic incentives for it are abnormally high.)

How much is "so much"? Why is not much enough for you?

To me, the simplest story here looks something like “evolution selects humans for having various desires, from having sex to having children to creating art and lots of other things too; and all of these desires are then subject to complex learning and weighting processes that may emphasize some over others, depending on the culture and environment”.

I understand this "looks like the story", but not "simplest", in the context of taking this as a model, which I think is the subtext.

But it doesn’t look to me like evolution selected us to desire one thing, and then we developed an inner optimizer that ended up doing something completely different. Rather, it looks like we were selected to desire many different things, with a very complicated function choosing which things in that set of doings each individual ends up emphasizing. Today’s culture might have shifted that function to weigh our desires in a different manner than before, but everything that we do is still being selected from within that set of basic desires, with the weighting function operating the same as it always has.

I agree with the first sentence: evolution selected on IGF, not on desiring IGF. "Selecting on IGF" is itself an abstraction of what's going on, which with humans involved some specific details we know or guess about. In particular, a brain was coughed up that, given its clearly visible general abilities, ends up not optimizing IGF as its main goal. If you decide not to consider the description of what happened as "selecting on IGF", that's a question of how well that works as a concept to make predictive models.

So I think I mostly literally agree with this paragraph, but not with the adversative "but": it's not an argument against the subject under debate.

Alternative title: "Evolution suggests robust rather than fragile generalization of alignment properties."

A frequently repeated argument goes something like this:

My argument is that premise 1 is a verbal shorthand that’s technically incorrect, and premise 2 is at least misleading. As for the overall conclusion, I think that the case from evolution might be interpreted as weak evidence for why AI should be expected to continue optimizing human values even as its capability increases.

Summary of how premise 1 is wrong: If we look closely at what evolution does, we can see that it selects for traits that are beneficial for surviving, reproducing, and passing one’s genes to the next generation. This is often described as “optimizing for IGF”, because the traits that are beneficial for these purposes are usually the ones that have the highest IGF. (This has some important exceptions, discussed later.) However, if we look closely at that process of selection, we can see that this kind of trait selection is not “optimizing for IGF” in the sense that, for example, we might optimize an AI to classify pictures.

The model that I’m sketching is something like this: evolution is an optimization function that, at any given time, is selecting for some traits that are in an important sense chosen at random. At any time, it might randomly shift to selecting for some other traits. Observing this selection process, we can calculate the IGF of traits currently under selection, as a measure of how strongly those are being selected. But evolution is not optimizing for this measure; evolution is optimizing for the traits that have currently been chosen for optimization. Resultingly, there is no reason to expect that the minds created by evolution should optimize for IGF, but there is reason to expect that they would optimize for the traits that were actually under selection. This is something that we observe any time that humans optimize for some biological need.

In contrast, if we were optimizing an AI to classify pictures, we would not be randomly changing the selection criteria the way that evolution does. We would keep the selection criteria constant: always selecting for the property of classifying pictures the way we want. To the extent that the analogy to evolution holds, AIs should be much more likely to just do the thing they were selected for.

Summary of how premise 2 is misleading: It is often implied that evolution selected humans to care about sex, and then sex led to offspring, and it was only recently with the evolution of contraception that this connection was severed. For example: