"This is strategically relevant because I'm imagining AGI strategies playing out in a world where everything is already going crazy, while other people are imagining AGI strategies playing out in a world that looks kind of like 2018 except that someone is about to get a decisive strategic advantage." -Christiano

This is a tangent, but I don't know when else I would comment on it. I think one of the biggest potential effects in an acceleration timeline is that things get really memetically weird and unstable. This point was harder to make before COVID, but imagine the incoherence of institutional responses getting much worse than that. I think that in a world where memetic conflict ramps up, your local ability to do sense-making with your peers gets worse as a side effect: people randomly yell at you that you need to be paying attention to X. The best thing I know how to do (which may be wholly inadequate) is to invest in high-trust connections and joint meaning-making. This seems especially likely to be undervalued in a community of high-decouplers. Spending time in peacetime practicing convergence on things that don't have high stakes, like working through each other's ...

This is another reason to take /u/trevor1's advice and limit your mass media diet today. If you think propaganda is going to keep slowly ramping up in effectiveness, then you want to avoid being the boiled frog who becomes slightly crazier each year. Ideally, you should really try to find some peers who prefer not to mindkill themselves either.

Yeah, that does also feel right to me. I have been thinking about setting up a fund that would buy up a bunch of the equity held by safety researchers, so that the safety researchers don't also have to blow up their financial portfolio when they press the stop button or do some whistleblowing or whatever, and that does seem pretty good incentive-wise.

I'm interested in helping with making this happen.

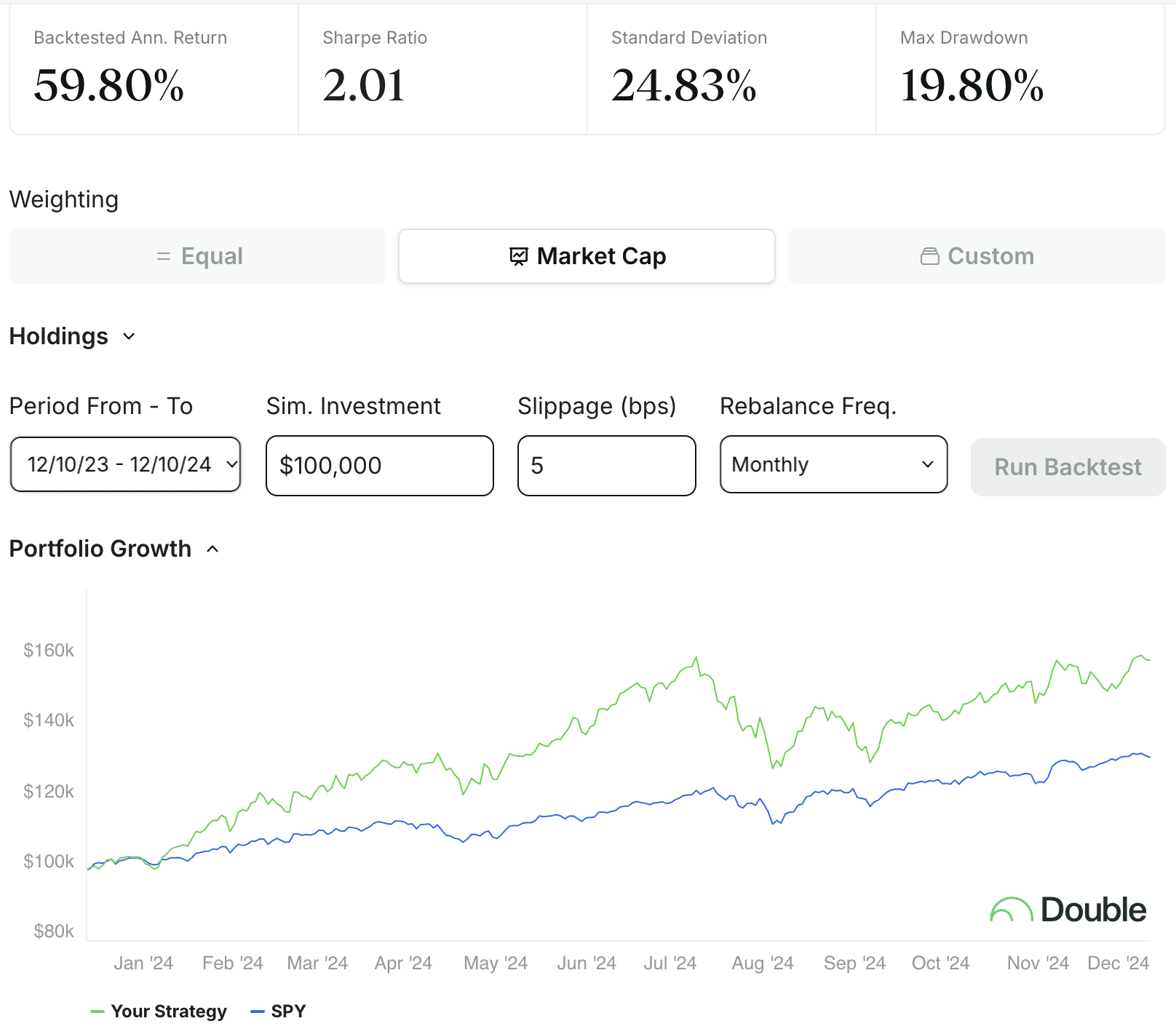

Going through the post, I figured I would backtest the strategies mentioned to see how well they performed.

Starting with NoahK's suggested big stock tickers: "TSM, MSFT, GOOG, AMZN, ASML, NVDA"

If you naively bought these stocks weighted by market cap, you would have made a 60% annual return:

You would have also very strongly outperformed the S&P 500. That is quite good.
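A minimal sketch of the kind of backtest described above, assuming a buy-and-hold basket weighted by market cap at the purchase date and held to the end date. The tickers `AAA`/`BBB` and all prices are hypothetical placeholders, not the data behind the 60% figure; dividends, fees, and taxes are ignored, and the helper names are illustrative.

```python
from datetime import date


def cap_weights(market_caps: dict[str, float]) -> dict[str, float]:
    """Weight each ticker by its share of the basket's total market cap at purchase."""
    total = sum(market_caps.values())
    return {ticker: cap / total for ticker, cap in market_caps.items()}


def portfolio_return(weights: dict[str, float],
                     start_prices: dict[str, float],
                     end_prices: dict[str, float]) -> float:
    """Total buy-and-hold return: each position grows by its own price ratio."""
    return sum(w * (end_prices[t] / start_prices[t] - 1.0) for t, w in weights.items())


def annualize(total_return: float, start: date, end: date) -> float:
    """Convert a total return over [start, end] into a compound annual growth rate."""
    years = (end - start).days / 365.25
    return (1.0 + total_return) ** (1.0 / years) - 1.0


# Hypothetical two-stock example (placeholder tickers and prices, not real data).
example_weights = cap_weights({"AAA": 3.0, "BBB": 1.0})
example_total = portfolio_return(example_weights,
                                 {"AAA": 100.0, "BBB": 50.0},
                                 {"AAA": 200.0, "BBB": 50.0})
example_cagr = annualize(example_total, date(2022, 1, 1), date(2024, 1, 1))
print(f"total {example_total:.1%}, annualized {example_cagr:.1%}")
```

Because the basket is never rebalanced, the weighted sum of each stock's own return is exactly the portfolio's return over the whole period.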

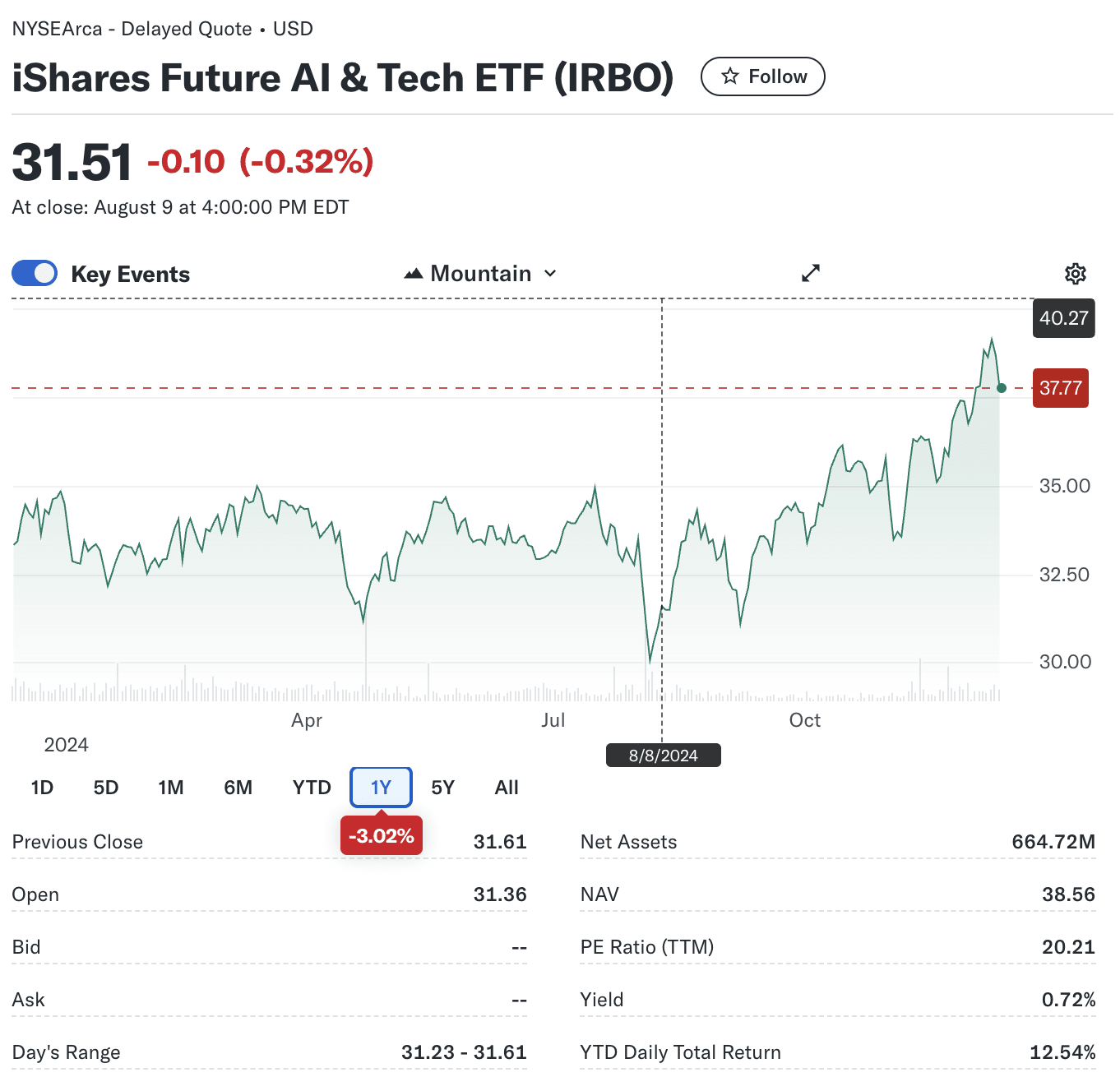

Let's look at one of the proposed AI index funds that was mentioned:

iShares has one under ticker IRBO. Let's see what it holds... It looks like very low concentration (all <2%), but the top names are... Faraday, Meitu, Alchip, Splunk, Microstrategy. (???)

That is... OK. Honestly, also looking at the composition of this index fund, I am not very impressed. Making only a 12% return in the year 2024 on AI stocks does feel like you failed at actually indexing on the AI market. My guess is even at the time, someone investing on the basis of this post would have chosen something more like IYW which is up 34% YTD.

Overall, the investment advice in this post backtests well.


When you backtest stock picks against SPY, you usually want to compare that portfolio to holding SPY levered to the same volatility (or just compare Sharpe ratios). Having a higher total return might mean that you picked good stocks, or it might just mean that you took on more risk. People generally care about return on risk rather than return on dollars, since sophisticated investors can take on ~unlimited amounts of leverage for sufficiently derisked portfolios.

In this case, the portfolio has a Sharpe ratio of 2.0, which is indeed pretty good, especially for an unhedged long equity portfolio, so props to NoahK! (When I worked at a hedge fund, an estimated Sharpe ratio of 2 was our threshold for trades.) But it's not as much of an update as a 60% annual return would suggest on the surface.
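A minimal sketch of the comparison described above: compute annualized Sharpe ratios from daily returns, and the leverage on a benchmark such as SPY that would match the stock portfolio's volatility. It assumes 252 trading days per year, return series as plain lists of daily simple returns, and borrowing at a fixed daily rate; the function names and the return series in the usage lines are hypothetical.

```python
import math
import statistics


def annualized_sharpe(daily_returns, daily_risk_free=0.0, periods_per_year=252):
    """Mean excess return divided by its standard deviation, scaled by sqrt(periods)."""
    excess = [r - daily_risk_free for r in daily_returns]
    return statistics.fmean(excess) / statistics.stdev(excess) * math.sqrt(periods_per_year)


def vol_matched_leverage(portfolio_returns, benchmark_returns):
    """Leverage on the benchmark (e.g. SPY) that gives it the portfolio's volatility."""
    return statistics.stdev(portfolio_returns) / statistics.stdev(benchmark_returns)


def levered_returns(returns, leverage, daily_borrow=0.0):
    """Daily returns of holding `leverage` times the asset, paying interest on the borrowed part."""
    return [leverage * r - (leverage - 1.0) * daily_borrow for r in returns]


# Hypothetical daily return series, for illustration only.
stocks = [0.02, -0.01, 0.03, 0.0, 0.01]
spy = [0.01, -0.005, 0.015, 0.0, 0.005]
lev = vol_matched_leverage(stocks, spy)
print(lev, annualized_sharpe(stocks), annualized_sharpe(levered_returns(spy, lev)))
```

If the borrow rate equals the risk-free rate, levering SPY leaves its Sharpe ratio unchanged (excess return and volatility both scale by the leverage), which is why comparing Sharpe ratios and comparing against vol-matched SPY amount to the same test.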


IRBO's Sharpe ratio is below 1, which is pretty awful. In my not-financial-advice-opinion, IRBO is uninvestable and looking at the top holdings at the time of this interview was enough to recognize that (MSTR is basically a levered crypto & interest rate instrument, SPLK was a merger arb trade, etc.).

Very interesting conversation!

I'm surprised by the strong emphasis on shorting long-dated bonds. Surely there's a big risk of nominal interest rates coming apart from real interest rates, i.e. lots of money getting printed? I feel like it's going to be very hard to predict what the Fed will do in light of 50% real interest rates, and Fed interventions could plausibly hurt your profits a lot here.

(You might suggest shorting long-dated TIPS, but those markets have less volume and higher borrow fees.)
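One way to see the worry: a short on nominal bonds pays off only if nominal yields rise, and the Fisher relation ties nominal rates to real rates and expected inflation. The sketch below is a simplification, assuming the exact (multiplicative) Fisher relation and illustrative numbers; if policy pins the nominal rate, the gap shows up as a lower realized real rate rather than as a profit on the short.

```python
def nominal_rate(real: float, expected_inflation: float) -> float:
    """Exact Fisher relation: (1 + nominal) = (1 + real) * (1 + inflation)."""
    return (1.0 + real) * (1.0 + expected_inflation) - 1.0


def implied_real_rate(nominal: float, expected_inflation: float) -> float:
    """Real rate implied when the nominal rate is held fixed (e.g. by central bank buying)."""
    return (1.0 + nominal) / (1.0 + expected_inflation) - 1.0


# Illustrative only: a 4% nominal yield held in place while inflation runs at 30%.
print(implied_real_rate(0.04, 0.30))
```

In that illustrative case the real rate is about -20%, even though the nominal yield the short is betting on never moved.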

My current allocation to AI is split something like this:

| Ticker | Weight (%) |
|---|---|
| AMAT | 4.25 |
| AMD | 3.55 |
| ANET | 4.30 |
| ASML | 8.07 |
| CDNS | 4.04 |
| GFS | 1.28 |
| GM | 1.84 |
| GOOG | 7.90 |
| INTC | 6.17 |
| KLAC | 2.33 |
| LLY | 2.88 |
| LRCX | 4.62 |
| MRVL | 1.87 |
| MSFT | 14.65 |
| MSFT Calls | 2.78 |
| MU | 4.38 |
| ONTO | 0.24 |
| RMBS | 1.00 |
| SMSN | 7.88 |
| SNPS | 2.77 |
| TSM | 10.45 |
| TXN | 2.74 |

This looked really reasonable until I saw that there was no NVDA in there; why's that? (You might say high PE, but note that Forward PE is much lower.)

Posting here to retrospect. Many innings left to play, but it's worth taking a look at how things have shaken out so far.

Advice that looked good: buy semis (TSMC, NVDA, ASML, TSMC vol in particular)

Advice that looked okay: buy bigtech

Advice that looked less good: short long bonds

There's some evidence from 2013 suggesting that long-dated, out-of-the-money call options have strongly negative EV; common explanations are that some buyers like gambling and drive up prices. See this article. I also heard that over the last decade, some hedge funds therefore adopted the strategy of writing OTM calls on stocks they hold to boost their returns, and also heard that some of these hedge funds disappeared a couple years ago.

Has anyone looked into 1) whether this has replicated more recently, and 2) how much worse it makes some of the suggested strategies (if at all)?

if AGI goes well, economics won't matter much. helping slow down AI progress is probably the best way to purchase shares of the LDT utility function handshake: in winning timelines, whoever did end up solving alignment will have done that thanks to having the time to pay the alignment tax on their research.

what i mean is that despite the fundamental scarcity of negentropy-until-heat-death, aligned superintelligent AI will be able to allocate resources better than any human-designed system. i expect that people will still be able to "play at money" if they want, but pre-singularity allocations of wealth/connections are unlikely to be relevant to what maximizes nice-things utility.

it's entirely useless to enter the post-AGI era with either wealth or wealthy connections. in fact, it's a waste to not have spent it on increasing-the-probability-that-AGI-goes-well while money was still meaningful.

It's true that if the transition to the AGI era involves some sort of 1917-Russian-revolution-esque teardown of existing forms of social organization to impose a utopian ideology, pre-existing property isn't going to help much.

Unless you're all-in on such a scenario, though, it's still worth preparing for other scenarios too. And I don't think it makes sense to be all-in on a scenario that many people (including me) would consider to be a bad outcome.

you don't think there are humans whom i can expect to reliably reward-me-as-per-LDT after-the-fact? it doesn't have to be a certainty, i can merely have some confidence that some person will give me that share, and weigh the action based on that confidence.

I spent a couple hours looking at different methods to efficiently short long term bonds:

- UB Treasury Bond Futures - 30-year bonds, but you have to roll every quarter on the roll date, which is a hassle, and you pay the spread each time you roll. Also, the expected return if the world stays normal is significantly negative: you should expect to lose roughly the 30-year rate minus the risk-free rate, which has averaged 2% per year since 1977.

- SOFR Futures - pays out based on the average interest rate in a specific 3 month time period up to 10 years out, though liqui
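A rough sketch of the trade-off in the first bullet: a short gains about `modified_duration × yield_change` of notional when long yields rise, but pays a carry drag (taken here as the ~2%/yr long-rate-minus-risk-free figure cited above) while it waits. This is a first-order duration approximation that ignores convexity, roll spreads, and fees; the duration of 17 in the usage lines is a hypothetical input, and the function names are illustrative.

```python
def short_bond_pnl(notional: float, modified_duration: float, yield_change: float,
                   annual_carry_cost: float, years: float) -> float:
    """Approximate P&L of a short long-bond position over `years`.

    Price change is approximated as -modified_duration * yield_change, so the
    short profits when yields rise. Carry is charged linearly (no compounding).
    """
    price_gain = notional * modified_duration * yield_change
    carry_drag = notional * annual_carry_cost * years
    return price_gain - carry_drag


def breakeven_yield_rise(modified_duration: float, annual_carry_cost: float,
                         years: float) -> float:
    """Yield increase needed over `years` for the short to cover its carry."""
    return annual_carry_cost * years / modified_duration


# Hypothetical inputs: $100k notional, duration 17, 2%/yr carry, 1 year.
print(short_bond_pnl(100_000, 17.0, 0.01, 0.02, 1.0))
print(breakeven_yield_rise(17.0, 0.02, 1.0))
```

Under these assumptions, long yields need to rise by roughly 0.12 percentage points per year just for the short to break even.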

I think 'go to grad school' may be treated too harshly here. In particular:

(NoahK) Also, for most readers I imagine that career capital is their most important asset. A consequence of AGI is that discount rates should be high and you can't necessarily rely on having a long career. So people who are on the margin of e.g. attending grad school should definitely avoid it.

but then,

...(Zvi) Career capital is one form of human capital or social capital. Broadly construed, such assets are indeed a large portion of most people's portfolios. I'd rather be 'rich'

For the LEAPS call options, are you buying them in-the-money, at-the-money, or out-of-the-money? Do you roll them vertically or just horizontally?

Also, to be clear, nothing in this post constitutes investment advice or legal advice.

&

(Also I know enough to say up front that nothing I say here is Investment Advice, or other advice of any kind!)

&

None of what I say is financial advice, including anything that sounds like financial advice.

I usually interpret this sort of statement as an invocation to the gods of law, something along the lines of "please don't smite me", and certainly not intended literally. Indeed, it seems incongruous to interpret it literally here: the whole p...

Amusingly, searching for articles on whether offering unlicensed investment advice is illegal (and whether disclaiming it as "not investment advice" matters) brings me to pages offering "not legal advice" ;p

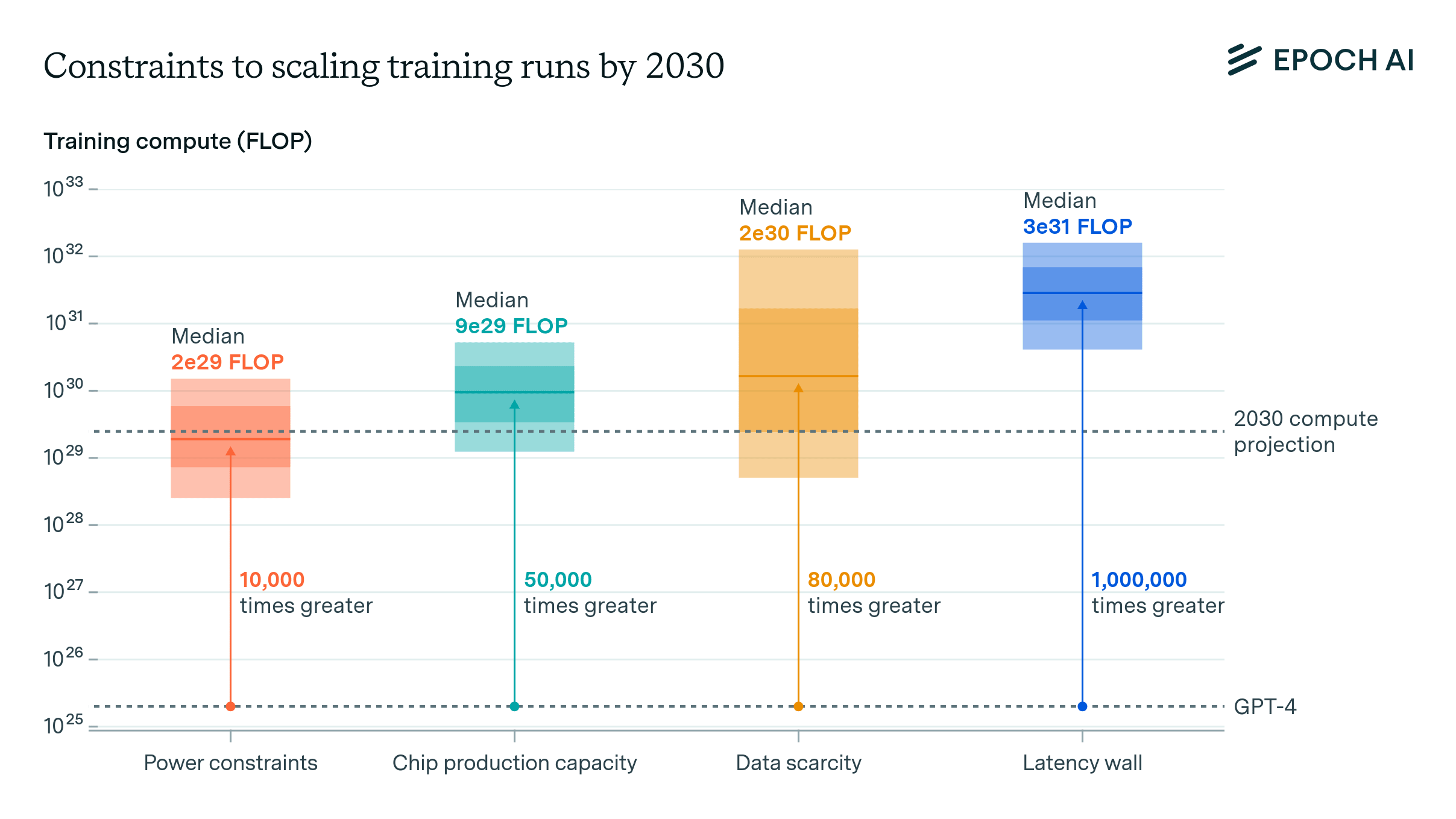

Given that, Epoch AI predicts that energy might be a bottleneck

I at least seem to have some beliefs about how big of a deal AI will be that disagree pretty heavily with what the market believes [...] I feel like I would want to make a somewhat concentrated bet on those beliefs with like 20%-40% of my portfolio or so, and I feel like I am not going to get that by just holding some very broad index funds...

Fidelity allows users to purchase call options on the S&P 500 that are dated to more than 5 years out. Buying those seems like a very agnostic way to make a leveraged bet on higher growth/volatility, without havin...

I modified part of my portfolio to resemble the summarized takeaway. I'm up 30(!?!) % in less than 4 months.


How would you recommend shorting long-dated bonds? My understanding is that both short selling and individual bond trading have pretty high fees for retail investors.

Take like 20% of my portfolio and throw it into some more tech/AI focused index fund. Maybe look around for something that covers some of the companies listed here on the brokerage interface that is presented to me (probably do a bit more research here)

Did you find a suitable index fund?

Also, to be clear, nothing in this post constitutes investment advice or legal advice.

I often see this phrase in online posts related to investment, legal, medical advice. Why is it there? These posts obviously contain investment/legal/medical advice. Why are they claiming they don't?

I guess that the answer is related to some technical meaning of the word "advice", which is different from its normal language meaning. I guess there is some law that forbids you from giving "advice". I would like to know more details.

Edit: This question was answered in a p...

Most cryptocurrencies have slow transactions. For AIs, which think and react much faster than humans, the latency would be more of a problem, so I would expect AIs to find a better solution than current cryptocurrencies.

I recommend SGOV for getting safe interest. It effectively just invests in short-term Treasuries for you: very simple and straightforward, and easier than buying bonds yourself. I do not think 100 percent or more in equities is a good idea right now, given that we might get more rate increases. Obviously, do not buy long-term bonds. I'm not a prophet, just saying how I am handling things.

Assuming AIs don't soon come up with even better crypto/decentralization solutions: I hadn't considered that the objection that smart contracts are too complicated (and thus insecure) might stop holding once AI assistants and cyberprotection scale up. Especially ZK, a natural language for AIs.

NVDA's value is primarily in their architectural IP and CUDA ecosystem. In an AGI scenario, these could potentially be worked around or become obsolete.

This idea was mentioned by Paul Christiano in one of his podcast appearances, iirc.

- Invest like 3-5% of my portfolio into each of Nvidia, TSMC, Microsoft, Google, ASML and Amazon

Should Meta be in the list? Are the big Chinese tech companies considered out of the race?

buy some options

Not great advice. Options are a very expensive way to express a discretionary view due to the variance risk premium. It is better to just buy the stocks directly and use margin for capital efficiency.
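A rough illustration of the variance-risk-premium point, assuming the Black-Scholes model with no dividends: if implied volatility (what the option is priced at) exceeds the volatility that actually materializes, the buyer pays more than the model value at realized volatility. This is a simplified gauge, not a full treatment of expected option returns (it ignores drift and path effects); the helper names and all inputs are hypothetical.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(spot: float, strike: float, rate: float, vol: float, years: float) -> float:
    """Black-Scholes price of a European call on a non-dividend-paying asset."""
    sqrt_t = math.sqrt(years)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * years) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d2)


def vrp_overpayment(spot: float, strike: float, rate: float,
                    implied_vol: float, realized_vol: float, years: float) -> float:
    """Price paid at implied vol minus model value at the (assumed) realized vol."""
    return (bs_call(spot, strike, rate, implied_vol, years)
            - bs_call(spot, strike, rate, realized_vol, years))


# Hypothetical long-dated OTM call: implied 25% vol vs. 20% realized, 5 years out.
print(vrp_overpayment(100.0, 120.0, 0.03, 0.25, 0.20, 5.0))
```

Because a call's value rises with volatility, any persistent gap between implied and realized vol is a cost the option buyer pays that a direct stock (or margined stock) position avoids.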

And to take it one step further, holding long term debt at fixed rates is amazing in that situation, such as a long term mortgage.

(This is a typo that reverses the meaning, right? Should be "owing" long term debt, you want to owe a mortgage rather than to have issued a mortgage.)
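A sketch of why owing long-term fixed-rate debt helps when rates rise: the present value of the payments you owe, discounted at the new market rate, falls below the face amount of the loan. It assumes a standard fully amortizing monthly mortgage; the 3% and 7% rates and the $300k principal are hypothetical, and the helper names are illustrative.

```python
def annuity_payment(principal: float, annual_rate: float, years: int,
                    periods_per_year: int = 12) -> float:
    """Level payment that fully amortizes `principal` at a fixed rate."""
    r = annual_rate / periods_per_year
    n = years * periods_per_year
    if r == 0:
        return principal / n
    return principal * r / (1.0 - (1.0 + r) ** -n)


def pv_of_payments(payment: float, annual_rate: float, years_remaining: int,
                   periods_per_year: int = 12) -> float:
    """Present value of the remaining level payments at a given market rate."""
    r = annual_rate / periods_per_year
    n = years_remaining * periods_per_year
    if r == 0:
        return payment * n
    return payment * (1.0 - (1.0 + r) ** -n) / r


# Hypothetical: borrow $300k for 30 years at 3%, then market rates jump to 7%.
pmt = annuity_payment(300_000, 0.03, 30)
print(round(pmt, 2), round(pv_of_payments(pmt, 0.07, 30), 2))
```

At the original rate the payments are worth exactly the principal; at the higher rate the same fixed payments are worth much less, which is the borrower's gain.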

So this all makes sense and I appreciate you all writing it! Just a couple notes:

(1) I think it makes sense to put a sum of money into hedging against disaster e.g. with either short term treasuries, commodities, or gold. Futures in which AGI is delayed by a big war or similar disaster are futures where your tech investments will perform poorly (and depending on your p(doom) + views on anthropics, they are disproportionately futures you can expect to experience as a living human).

(2) I would caution against either shorting or investing in...

It's not really possible to hedge either the apocalypse or a global revolution, so you can ignore those states of the worlds when pricing assets (more or less).

Unless, depending on what you invest in, those states of the world become more or less likely.
