Relatedly, I think an often underappreciated trick is just to say the same thing in a couple different ways, so that listeners can triangulate your meaning. Each sentence on its own may be subject to misinterpretation, but often (though not always) the misinterpretations will be different from each other and so "cancel out", leaving the intended meaning as the one possible remaining interpretation.

It's a pet peeve of mine when people fail to do this. An example I've seen a number of times: two people with different accents / levels of fluency in a language are talking (e.g. an American tourist talking to hotel staff in a foreign country), and one person doesn't understand something the other said. The speaker then repeats what they said using the exact same phrasing. Sometimes even a third time or more, after the listener still doesn't understand.

Okay, sure, sometimes when I don't understand what someone said I do want an exact repetition because I just didn't hear a word, and I want to know what word I missed. And at those times it's annoying if instead they launch into a long-winded re-explanation.

But! In a situation where there's potentially a fluency or understanding-of-accents issue, using the exact same words often doesn't help. Maybe they don't know one of the words you're using. Maybe it's a phrasing or idiom that's natural to you but not to them. Maybe they would know the word you said if you said it in their accent, but the way you say it it's not registering.

All of these problems are solved if you just try saying it a different way. Just try saying the same thing three different ways, making sure to use different (simple) words for the main ideas each time. Chances are, if they do at least somewhat speak the language you're using, they'll pick up on your meaning from at least one of the phrasings!

Or you could just sit there, uncreatively and ineffectively using the same phrasing again and again, as I so often see...

A similar example: when you don't understand what someone is saying, it can be helpful to say "I don't understand. Do you mean X or Y?" instead of just saying "I don't understand". This way, even if X and Y are completely wrong, they now have a better sense of where you are and can thus adjust their explanations accordingly.

When I've written things like tag descriptions for LW or prefaces for LW books, Jim Babcock has been really great (when working with me on them) at asking "Okay, what things will people instinctively think we're saying, but we're not saying, and how can we head them off?" And after coming up with 2-4 nearby things we're not saying and how to distinguish ourselves from them, the resultant write-up is often something I'm really happy with.

A couple insights in response to this post overall.

Yeah, I had the five-words principle in mind the whole time and couldn't figure out how to work it in or address it. Quantum mechanics + general relativity; both of them seem genuinely true.

A position I think Duncan might hold reminds me of these two pictures that I stole from Wikipedia:"Isolation Forest".

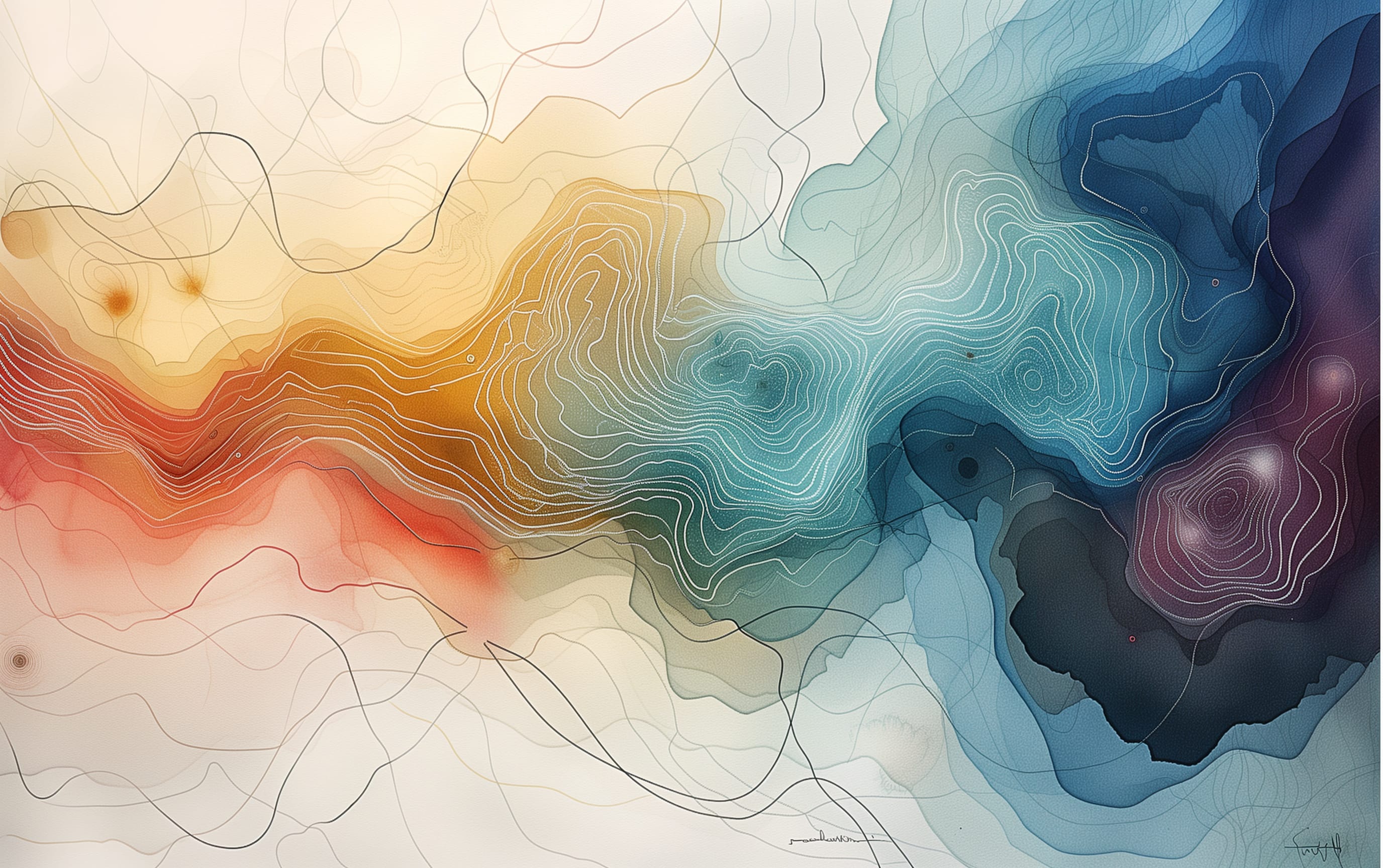

Imagine a statement (maybe Duncan's example that "People should be nicer"). The dots represent all the possible sentiments conveyed by this statement [1]. The dot that is being pointed to represents what we meant to say. You can see that a sentiment that is similar to many others takes many additional clarifying statements (the red lines) to pick it out from other possibilities, but that a statement that is very different from other statements can be clarified in only a few statements.
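To make the Isolation Forest intuition concrete, here is a minimal one-dimensional sketch. It is not the full algorithm (which builds many random trees over subsamples); it only counts how many random cuts it takes before a chosen point is alone. The function name `average_isolation_depth` and the sample points are invented for illustration.

```python
import random

def average_isolation_depth(points, target, trials=500, seed=0):
    """Average number of random cuts needed to leave `target` alone.

    Assumes all points are distinct and that `target` is one of them.
    """
    rng = random.Random(seed)
    total_cuts = 0
    for _ in range(trials):
        remaining = list(points)
        while len(remaining) > 1:
            cut = rng.uniform(min(remaining), max(remaining))
            # Keep only the points on the same side of the cut as the target.
            if target < cut:
                remaining = [p for p in remaining if p < cut]
            else:
                remaining = [p for p in remaining if p >= cut]
            total_cuts += 1
    return total_cuts / trials

cluster = [i / 10 for i in range(10)]   # ten nearby "sentiments": 0.0 .. 0.9
points = cluster + [10.0]               # plus one that sits far from the rest

crowded_depth = average_isolation_depth(points, 0.5)
isolated_depth = average_isolation_depth(points, 10.0)
print(f"crowded point: {crowded_depth:.2f} cuts on average")
print(f"isolated point: {isolated_depth:.2f} cuts on average")
```

Under these assumptions the far-away point needs fewer cuts on average than the point inside the cluster, which is the same shape as "a sentiment similar to many others takes more clarifying statements".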

The only reason I say this is to point out that Duncan's abstract model has been studied theoretically and empirically, and that the advice for how to solve it is pretty similar to what Duncan said [2].

Also, a note on the idea of clarifying statements to complicate what I just said (Duncan appears to be aware of this too, but I want to bring it up explicitly for discussion).

You don't actually get to fence things off or rule them out in conversations, you get to make them less reasonable.

When Duncan has the pictures where he slaps stickers on top to rule things out, this is a simplification. In reality, you get to provide a new statement that represents a new distribution of represented sentiments.

Different people are going to combine your "layers" differently, like in an image editor.

Some people will stick with the first layer no matter what. Some people will stick with the last layer no matter what. You cannot be nuanced with people who pick one layer.

Some people will pick parts out of each of your layers. They might pick the highest peak of each and add them together, hoping to select what you're "most talking about" in each and then see what ends up most likely.

The best model might be multiplying layers together, so that combining a very likely region with a kind of unlikely region results in a kind of likely one.
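The combining strategies above can be sketched in a few lines. This is only an illustration under the assumption that each "layer" is a list of non-negative scores over the same candidate interpretations; the function names and the numbers are made up.

```python
from math import prod

def normalize(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]

def pick_first(layers):
    """Reader who sticks with the first layer no matter what."""
    return normalize(layers[0])

def pick_last(layers):
    """Reader who sticks with the last layer no matter what."""
    return normalize(layers[-1])

def add_peaks(layers):
    """Reader who keeps only each layer's highest peak and adds them up."""
    combined = [0.0] * len(layers[0])
    for layer in layers:
        peak = max(range(len(layer)), key=layer.__getitem__)
        combined[peak] += layer[peak]
    return normalize(combined)

def multiply(layers):
    """Reader who multiplies the layers together, interpretation by interpretation."""
    return normalize([prod(column) for column in zip(*layers)])

def most_likely(distribution, labels):
    """Label of the highest-scoring interpretation."""
    return labels[max(range(len(distribution)), key=distribution.__getitem__)]

labels = ["A", "B", "C"]
layers = [
    [0.50, 0.40, 0.10],  # first statement: A most likely, B close behind
    [0.05, 0.35, 0.60],  # clarification: C most likely, B kind of likely
]

for reader in (pick_first, pick_last, add_peaks, multiply):
    print(reader.__name__, most_likely(reader(layers), labels))
```

With these made-up numbers the four readers do not agree: only the multiplying reader lands on B, the interpretation that is reasonably likely under both statements.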

I don't know if this generalization is helpful, but I hope sharing ideas can lead to the developing of new ones.

[1] Note that, of course, real statements are probably arbitrarily complex because of all the individual differences in how people interpret things.

[2] I'd be willing to bet Duncan has heard of Isolation Forests before and has mental tooling for them (I'm not claiming non-uniqueness of the conversational tactic though!)

Oh, and the second block I talked about is relevant because it means you can't use an algorithm like what I discussed in the first block. You can try to combine your statements with statements that have exceptionally low probability for the regions you want to "delete", but those statements may have odd properties elsewhere.

For example, you might issue a clarification which scores bad regions at 1/1000000. However, it also incidentally scores a completely different region at 1000. Now you have a different problem.

You run into a few different risks here.

The people I'll call "multipliers", from my above note on combining layers, might multiply these together and find the incidental region more likely than your intended selection.

People who add or select the most recent region might end up selecting only the incidental region.
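A small worked example of both risks. Only the 1/1000000 and 1000 scores come from the comment above; the region names and every other number are assumptions chosen for illustration.

```python
regions = ["intended", "bad", "incidental"]

# How strongly each statement suggests each region (unnormalized scores).
first_statement = {"intended": 10.0, "bad": 8.0, "incidental": 0.1}
clarification = {"intended": 1.0, "bad": 1 / 1_000_000, "incidental": 1000.0}

# A "multiplier" combines the two statements region by region.
multiplied = {r: first_statement[r] * clarification[r] for r in regions}
multiplier_reading = max(multiplied, key=multiplied.get)

# Someone who goes only by the most recent statement.
latest_reading = max(clarification, key=clarification.get)

print("multiplier picks:", multiplier_reading)
print("latest-only reader picks:", latest_reading)
```

In this sketch the clarification does crush the bad region, but both kinds of reader end up on the incidental region rather than the intended one.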

If you issue a high number of clarifications, you might end up in 1 of 2 bad attractors:

It probably goes without saying that you need a pretty high status to make use of the advice in this post. A predictable failure mode for naturally status-blind people not very successful in life is to keep forgetting how low-status you are, and how little others can be bothered to listen to three words from you before they start talking over you or walk away mid-sentence to ask someone else. Only when you consciously learn status is a thing and its implications do you realize how hopeless all those communication attempts were to begin with, how powerless you are to stop people from ascribing to you any ideas or motives they like, how well-advised you'd be to shut up most of the time, and how screwed you are when shutting up won't do.

It also takes some time to learn that, in written communication, people who would interrupt you will instead skim your text for isolated keywords, hold you responsible for the message they make up from those, and give you an accordingly useless or hostile reply.

This comment makes me sad, but I upvote it for the reminder (I'm sad at how it's probably true, at least for some people, not sad because it's inaccurate). Something something affordance widths.

This article and the comments remind me of a paper written by David Casagrande titled 'Information is a verb.'

When we relay information (aka communicate), many of us think of it as a noun (nice connection to the tree in your - Duncan's essay).

But the deeper you go into the process of 'receiving' information, the more apparent it becomes that context and the recipient's priors can overtake the information intended by the 'relayer' (don't think that's a word).

Even when you are dealing with relaying data (arguably less ambiguous than abstract concepts), there's a distribution of interpretations that surprise (and often do not delight!)

I like the concepts suggested - both 'ruling out' and 'meaning moat.' But I posit that these concepts become more potent when you start with the premise that information is a verb (and not a noun).

If you can't do it, you'll have a hard time moving past "say the words that match what's in your brain" and getting to "say the words that will cause the thing in their brain to match what's in your brain."

One issue I've had with the second approach is that then you say different words to group A and to group B, and then sometimes group A reads the words you wrote for group B, and gets confused / thinks you are being inconsistent. (In a more charged situation, I'd imagine they'd assume you were acting in bad faith.) Any suggestions on how to deal with that?

(I'm still obviously going to use the second approach because it's way superior to the first, but I do wish I could cheaply mitigate this downside.)

I mean, that's what the approach of ruling out rather than actively adding is meant to achieve.

I won't be able to say the right words for A and B simultaneously (sometimes) but I can usually draw a boundary and say "look, it's within this boundary" while conclusively/convincingly ruling out [bad thing A doesn't like] and [bad thing B doesn't like].

There's that bit of advice about how you don't really understand a thing unless you can explain it to a ten-year-old. I'd guess there's something analogous here, in that if you can't speak to both audiences at once, then you're either a) actually holding a position that's genuinely contra one of those groups, and just haven't come to terms with that, or b) just need more practice.

If you want to give a real example of a position you have a hard time expressing, I could try my hand at a genuine attempt to explain it while dodging the failure modes.

It might be that you're assuming more shared context between the groups than I am. In my case, I'm usually thinking of LessWrongers and ML researchers as the two groups. One example would be that the word "reward" is interpreted very differently by the two.

To LessWrongers, there's a difference between "reward" and "utility", where "reward" refers to a signal that is computed over observations and is subject to wireheading, and "utility" refers to a function that is computed over states and is not subject to wireheading. (See e.g. this paper, though I don't remember if it actually uses the terminology, or if that came later).

Whereas to ML researchers, this is not the sort of distinction they usually make, and instead "reward" is roughly that-which-you-optimize. In most situations that ML researchers consider, "does the reward lead to wireheading" does not have a well-defined answer.

In the ML context, I might say something like "reward learning is one way that we can avoid the problem of specification gaming". When (some) LessWrongers read this, they think I am saying that the thing-that-leads-to-wireheading is a good solution to specification gaming, which they obviously disagree with, whereas in the context I was operating in, I meant to say "we should learn rather than hardcode that-which-you-optimize" without making claims about whether the learned thing was of the type-that-leads-to-wireheading or not.

I definitely could write all of this out every time I want to use the word "reward", but this would be (a) incredibly tedious for me and (b) off-putting to my intended readers, if I'm spending all this time talking about some other interpretation that is completely alien to them.

All of that makes sense.

My claims in response:

I'm not disagreeing with you that this is hard. I think the thing that both Fabricated Options and the above essay were trying to point at is "yeah, sometimes the really hard-looking thing is nevertheless the actual best option."

Yeah, I figured that was probably the case. Still seemed worth checking.

You're almost certainly correct that it's nonzero/substantially off-putting to your readers, but I would bet at 5:1 odds that it's still less costly than the otherwise-inevitable-according-to-your-models misunderstandings.

I'm not entirely sure what the claim that you're putting odds on is, but usually, in my situation:

So I think I'm in the position where it makes sense to take cheap actions to avoid misunderstandings, but not expensive ones. I also feel constrained by the two groups having very different (effective) norms, e.g. in ML it's a lot more important to be concise, and it's a lot more weird (though maybe not bad?) to propose new short phrases for existing concepts.

One benefit of blog posts is the ability to footnote terms that might be contentious. Saying "reward[1]..." and then 1: for Less Wrong visitors, "reward" in this context means ... clarifies for anyone who needs it/might want to respond while letting the intended audience gloss over the moat and read your point with the benefit of jargon.

Nice article. It reminds me of the party game Codenames. In that game you need to indicate certain words or pictures to your teammates through word association. Everyone's very first lesson is that working out which words you do NOT intend to indicate, and avoiding them, is as big a part of gameplay as hitting the ones you do (possibly bigger).

The second speaker ... well, the second speaker probably did say exactly what they meant, connotation and implication and all. But if I imagine a better version of the second speaker, one who is trying to express the steelman of their point and not the strawman, it would go something like:

"Okay, so, I understand that you're probably just trying to help, and that you genuinely want to hear people's stories so that you can get to work on making things better. But like. You get how this sounds, right? You get how, if I'm someone who's been systematically and cleverly abused by [organization], that asking me to email the higher-ups of [organization] directly is not a realistic solution. At best, this comment is tone-deaf; at worst, it's what someone would do if they were trying to look good while participating in a cover-up."

The key here being to build a meaning moat between "this is compatible with you being a bad actor" and "you are a bad actor." The actual user in question likely believed that the first comment was sufficient evidence to conclude that the first speaker is a bad actor. I, in their shoes, would not be so confident, and so would want to distinguish my pushback from an accusation.

I'm not sure I buy this. Basically, your main point here is not that the presentation was flawed, but rather that it was a mistake to behave as tho the first speaker is a bad actor, rather than pointing out what test they failed and how they could have passed it instead.

Steelmanning for beliefs makes sense, but steelmanning for cooperativeness / morality seems much dodgier?

I don't think I'm advocating steelmanning for cooperativeness and morality; that was a clumsy attempt to gesture in the direction of what I was thinking.

EDIT: Have made an edit to the OP to repair this

Or, to put it another way, I'm not saying "starting from the second speaker's actual beliefs, one ought to steelman."

Rather, I simply do not hold the second speaker's actual beliefs; I was only able to engage with their point at all by starting with my different set of predictions/anticipations, and then presenting their point from that frame.

Like, even if one believes the first speaker to be well-intentioned, one can nevertheless sympathize with, and offer up a cooperative version of, the second speaker's objection.

I don't think that if you don't believe the first speaker to be well-intentioned, you should pretend like they are. But even in that case, there are better forward moves than "Bullshit," especially on LW.

(For instance, you could go the route of "if [cared], would [do X, Y, Z]. Since ¬Z, can make a marginal update against [cared].")

Somehow this reminds me of a recent interaction between ESRogs and Zack_M_Davis, where I saw ESRogs as pushing against slurs in general, which I further inferred to be part of pushing against hostility in general. But if the goal actually is hostility, a slur is the way to go about it (with the two comments generated, in part, by the ambiguity of 'inappropriate').

Somehow the framing of "updated against [cared]" seems to be playing into this? Like, one thing you might be tracking is whether [cared] is high or low, and another thing you might be tracking is whether [enemy] is high or low (noting that [enemy] is distinct from [¬cared]!). In cases where there are many moves associated with cooperativity and fewer associated with being adversarial, it can be much easier to talk about [enemy] than [cared].

I ended up jumping in to bridge the inferential gap in that exact exchange. =P

I think it's fine to discover/decide that your [enemy] rating should go up, and I still don't think that means "abandon the principles." (EDIT: to be clear, I don't think you were advocating for that.) It might mean abandon some of the disarmaments that are only valid for other peace-treaty signatories, but I don't think there are enmities on LW in which it's a good idea to go full Dark Arts, and I think it would be good if the mass of users downvoted even Eliezer if he were doing so in the heat of the moment.

Is your composite shoulder mob always the same generic audience or instantiated with the current audience? The latter seems strictly more powerful but I guess it requires a lot of practice.

Curated. Communication is hard. And while I think most people would assent to that already, this post adds some "actionable gears" to the picture. A framing this post doesn't use but that is implicitly there is something like: communication conveys information (which can be measured in bits) between people. 1 bit of information cuts down from 2 possibilities to 1; 2 bits cut down from 4 possibilities to 1. What adding information does is reduce possibilities, exactly as the post describes. Bear in mind the number of possibilities that what you've described still admits.
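The bits framing can be turned into a quick calculation. This is a rough sketch that assumes every bit is perfectly informative (each one halves the remaining possibilities), which real sentences rarely manage; the function names are invented for illustration.

```python
import math

def bits_needed(possibilities):
    """Fewest yes/no answers that can single out one of `possibilities` options."""
    if possibilities < 1:
        raise ValueError("need at least one possibility")
    return (possibilities - 1).bit_length()

def possibilities_left(possibilities, bits):
    """Options still standing after `bits` ideal bits (never fewer than 1)."""
    return max(1, math.ceil(possibilities / 2 ** bits))

print(bits_needed(2))               # one bit separates two options
print(bits_needed(4))               # two bits separate four options
print(possibilities_left(1000, 3))  # what three ideal bits leave out of 1000
```

The second function is the one to keep in mind while writing: it is a reminder of how many interpretations a short statement can still admit.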

The concept of "meaning moat" makes me think of Hamming distance. The longer a string of bits is, the more bit flips away it is from adjacent strings of bits. In short, the post says try to convey enough bits of information to rule out all the possibilities you don't mean.
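For anyone who hasn't met Hamming distance, a minimal sketch: it counts the positions at which two equal-length bit strings differ. The `moat_width` helper is a hypothetical name for "distance from the intended message to its nearest unintended neighbour", not a term from the post.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("strings must have the same length")
    return sum(x != y for x, y in zip(a, b))

def moat_width(intended, others):
    """Fewest bit flips that would turn `intended` into any of `others`."""
    return min(hamming_distance(intended, other) for other in others)

print(hamming_distance("1011101", "1001001"))
print(moat_width("0000", ["0001", "0111", "1111"]))
print(moat_width("00000000", ["00001111", "11110000"]))
```

A wider moat means more flips (misreadings) are needed before the message turns into something you did not mean.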

I think this especially matters for preparadigmatic fields such as AI Alignment where many new concepts have been developed (and continue to be developed). If you are creating these concepts or trying to convey them to others (e.g. being a distiller), this is a good post to read. You might know what you mean, but others don't.

Actually, maybe this is a good single-player tool too. When you are thinking about some idea/model/concept, try to enumerate a bunch of specific versions of it and figure out which ones you do and don't mean. Haven't tested it, but seems plausible.

Thank you for curating this, I had missed this one and it does provide a useful model of trying to point to particular concepts.

(Small) downvote because the connection between the link and my post is not clear and is therefore open to some motte-and-bailey-esque interpretation shenanigans. I think just-posting-a-link with no motivating explanation is usually net-negative, especially if it's intended to convey disagreement or add nuance.

I think that the linked post is good, and I largely agree with it, and I also wrote this post. I do not believe they are in conflict.

My story is that the OP is a guide to successful communication, and the OB is arguing that it should not be required or expected, as that imparts unfair mandatory costs on communicators.

To the extent that story is accurate, I largely agree; you can read [In Defense of Punch Bug] and [Invalidating Imaginary Injury] and similar as strongly motivated by a desire to cut back on unfair mandatory costs.

But also I smell a fabricated option in "what if we just didn't?" I think that the OB essay points at a good thing that would be good, but doesn't really do anything to say how. Indeed, at the end, the OB essay seems to be turning toward locating the problem in the listener? Advocating not projecting a bunch of assumptions into what you read and hear?

I see. I mean, all interactions have virtues on both sides. If someone insults me needlessly, the virtue for them to practice is avoiding unnecessary cruelty, and my virtue to practice is not letting others' words affect me too much (e.g. avoiding grudges or making a big deal of feeling attacked).

Similarly, if someone communicates with me and I read into it nearby meanings they didn't intend, their virtue is to empathize more with other minds in their writing, and my virtue is to hold as a live hypothesis that I may not have fully understood what they intended to say (rather than assuming I did with 100% certainty and responding based on that, and then being sorry later when I discover I got them wrong).

I agree with all of the above, and also...

...there's a strong "fallacy of the grey" vibe in the above, in cultures where the fallacy of the grey isn't something that everyone is aware of, sufficiently so that it need no longer be mentioned or guarded against.

"All interactions have virtues on both sides" is just true, denotatively.

Connotatively, it implies that all interactions have roughly equivalent magnitudes of virtues on both sides, especially when you post that here in response to me making a critique of someone else's method of engagement.

I posit that, humans being what they are and LW being what it currently is, the net effect of the above comment is to convey mild disapproval, but without being explicit about it.

Which may not have been your intention, which is why I'm writing this out (to give you a chance to say one way or the other if you feel like it).

It's sufficiently close to always-true that "both sides could have done better" or "both sides were defending something important" that the act of taking the time to say so is usually conveying something beyond the mere fact. Similar to how if I say "the sky is blue," you would probably do more than just nod and say "Indeed!" You would likely wonder why I chose that particular utterance at this particular moment.

The fact that each person always has an available challenge-of-virtue does not mean that the challenge presented to each is anything remotely like "equally fair" or that both are equally distant from some lofty ideal.

Mostly this is a muse, but it's a relevant muse as I'm thinking a bunch about how things go sideways on LW. I'm not sure that you're aware that "all interactions have virtues on both sides" could be read as a rebuke of "I think CronoDAS's interaction with me was sub-par." And if I wanted to engage with you in world-modeling and norm-building, it would be a very different conversation if you were vs. if you weren't (though both could be productive).

Oh! Right.

I'll execute my go-to move when someone potentially criticizes what I did, and try to describe the cognitive process that I executed:

More broadly, the conversation I thought of us as having was "what is the relationship between the against disclaimers post and the ruling out everything else post?", to which I was making a contribution, and to which the above cognition was a standard step in the convo.

I'll follow up with some more reactions in a second comment.

Just up top I'll share my opinion re CronoDAS's comment: my opinion is that it's way more helpful to provide literally any context on a URL than to post it bare, and it's within the range of reasonable behavior to downvote it in response to no-context. (I wouldn't myself on a post of mine, I'd probably just be like "yeah whatever" and ignore it.)

I posit that, humans being what they are and LW being what it currently is, the net effect of the above comment is to convey mild disapproval, but without being explicit about it.

I was surprised by you writing this!

My response to writing for such readers is "sod them", or something more like "that's not what I said, it's not what I meant, and I'm pro a policy of ignoring people who read me as doing more status things with my comments than I am when what I'm actually doing is trying to have good conversation".

This is motivated by something like "write for the readers you want, not the readers you have, and bit by bit you'll teach them to be the readers you want (slash selection effects will take care of it)".

This is some combination of thinking this norm is real for lots of LW readers, and also thinking it should be real for LW readers, such that the exact values of either I don't feel super confident about, just that I'm in an equilibrium where I can unilaterally act on this policy and expect people to follow along with me / nominally punish them for not following along, and get the future I want.

(Where 'punish' here just means exert some cost on them, like their experience of the comments being more confusing, or their comments being slightly more downvoted than they expect, or some other relatively innocuous way of providing a negative incentive.)

I don't think you get to do it all the time, but I do try to do it on LW a fair amount, and I'd defend writers-who-are-not-site-admins doing it with even more blind abandon than I.

I posit that, humans being what they are and LW being what it currently is, the net effect of the above comment is to convey mild disapproval, but without being explicit about it.

Which may not have been your intention, which is why I'm writing this out (to give you a chance to say one way or the other if you feel like it).

It was not my intention, thank you for writing it out for me to deconfirm :)

The fact that each person always has an available challenge-of-virtue does not mean that the challenge presented to each is anything remotely like "equally fair" or that both are equally distant from some lofty ideal.

This paragraph is pretty interesting and the point is not something I was thinking about. In my internal convo locus you get points for adding a true and interesting point I wasn't consciously tracking :)

Okay well this isn't very specific or concrete feedback but the gist of my response here is "I love ya, Ben Pace."

I also feel "sod them," and wish something like ... like I felt more like I could afford to disregard "them" in this case? Except it feels like my attempts to pretend that I could are what have gotten me in trouble on LW specifically, so despite very much being in line with the spirit I hear you putting forward, I nevertheless feel like I have to do some bending-over-backward.

Aww thanks Duncan :) I am v happy that I wrote my comments :)

I had noticed in the past that you seemed to have a different empirical belief here (or something), leading you to seem to have arguments in the comments that I myself wouldn't care enough to follow through on (even while you made good and helpful arguments).

Maybe it's just an empirical disagreement. But I can imagine doing some empirical test here where you convince me the majority of readers didn't understand the norms as much as I thought, but where I still had a feeling like "As long as your posts score high in the annual review it doesn't matter" or "As long as you get substantive responses from John Wentworth or Scott Alexander or Anna Salamon it doesn't matter" or "As long as all the people reading your ideas are picking them up and then suddenly everyone is talking about 'cruxes' all the time it doesn't matter" (or "As long as I think your content is awesome and want to build offices and grant infrastructure and so on to support you and writers-like-you it doesn't matter").

I'd be interested to know if there are any things that you can think of that would change how you feel about this conversational norm on the margin? My random babble (of ideas that I am not saying are even net positive) would include suggesting a change to the commenting guidelines, or me doing a little survey of users about how they read comments, or me somehow writing a top level post where I flagrantly write true and valuable stuff that can be interpreted badly yet I don't care and also there's great discussion in the comments ;)

I happen to be writing exactly this essay (things that would change stuff on the margin). It's ... not easy.

As for exploring the empirical question, I'm interested/intrigued and cannot rule out that you're just right about the line of "mattering" being somewhere other than where I guess it is.

I linked to it because it seemed like Robin Hanson was saying something close to the opposite of this.

One of the things I like most about this post is that it shows how much careful work is required to communicate. It's not a trick, it's not just being better than other people, it's simple, understandable, and hard work.

I think all the diagrams are very helpful, and really slow down and zoom in on the goal of nailing down an interpretation of your words and succeeding at communicating.

There's a very related concept (almost so obvious that you could say it's contained in the post) of finding common ground, where, in negotiation/conflict/disagreement, you can rule out things that neither of you want or believe and thus find common ground. "I believe people should be nicer" "While I think that's a nice thing to say, I think you're pushing against some other important norm, perhaps of being direct with people or providing criticism" "Sorry, let me be clear; I in no way wish to lower the current levels of criticism, directness, or even levels of upset people are getting, and I think we're on the same page on that; I'm interested in people increasing their thoughtfulness in other ways" "Ah, thanks, I see we have common ground on that issue, so I feel less threatened by this disagreement. I'm not sure I agree yet, but let's see about this." I think the post is helpful for seeing this.

I disagree with the application to an example on LessWrong. As is not uncommon, I tend to think the theory in Duncan's post is true and very well explained, and then thoroughly disagree with its application to a recent political situation. I don't think that is much reason to devalue the post though.

This is a post I think about explicitly on occasion, I think it's pretty important for communication, and I'm giving the post a +4.

(Meta: this is not a great review on my part, but I am leaning on the side of sharing my perspective for why I'm voting. I wish I had more time to write great reviews that I more confidently expected to help others think about the posts.)

Diplomacy is a huge country that I've been discovering recently. There are amazing things you can achieve if you understand what everyone wants, know when to listen and when to speak and what to say and how to sound.

In particular, one trick I've found useful is making arguments that are "skippable". Instead of saying "stop everything, this argument overthrows everything you said", I prefer to point out a concern but make the person feel free to skip it and move past: "Hey, here's one thing that came to my mind, just want to add it to the discussion." If they have good sense, and your argument actually changes things, they won't move past; but they'll appreciate that you offered them the option.

Re: how to write in controversial situations like the second case in "building a meaning moat": more elaboration can be found in SSC's Nonfiction Writing Advice, section 8, "Anticipate and defuse counterarguments". The section is unfortunately a bit too long to quote from it here.

This article I think exemplifies why many people do not follow this advice. It's 39 pages long. I read it all, and enjoyed it, but most people I claim have a shorter attention span and would have either read half a page then stopped, or would have balked when they saw it was a 26-minute read and not read any of it. And those people are probably most in need of this kind of advice.

Language has a wonderful flexibility to trade off precision/verbosity against accessibility/conciseness. If you really want to rule out everything you don't mean, you inevitably need a high level of verbosity because even for the simplest arguments there are so many things you need to rule out (including many that you think are 'obvious' but are not to the people who presumably you most wish to appreciate your argument).

Which is better? An argument that rules out 80% of the things you don't mean (and so is 'misunderstood' by 20%), or an argument that's 5X as long that rules out 95% of the things you don't mean ('misunderstood' by 5%)? Most people won't read (or stick around long enough to listen/pay attention to) the second version.
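One rough way to make this trade-off concrete is to multiply the share of people who finish each version by the share of finishers who understand it. In the sketch below, only the 80% and 95% figures come from the comparison above; the read-through rates and the function name `correctly_reached` are assumptions invented for illustration.

```python
def correctly_reached(read_through: float, understood: float) -> float:
    """Fraction of the whole audience that both finishes the text and gets the intended meaning."""
    return read_through * understood


# Hypothetical read-through rates (assumptions, not data).
short_version = correctly_reached(read_through=0.60, understood=0.80)
long_version = correctly_reached(read_through=0.20, understood=0.95)

print(f"short: {short_version:.2f}, long: {long_version:.2f}")
```

Under this simple model, the longer version only comes out ahead if it keeps at least about 84% as many readers as the short one (0.80 / 0.95), whatever the absolute read-through rates are.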

So maybe you intended to mean that there's an important counterpoint, which is that there's a cost to ruling out everything you don't mean, and this cost needs to be carefully weighed when deciding where to position yourself on the precision/accessibility line when writing/speaking. There was room for this in the 39 pages, but it wasn't stated or explored, which feels like an omission.

Alternatively, if you disagree and didn't intend to mean this, you failed to rule out something quite important you didn't mean.

I object to the claim that "we need to weigh the cost of everything" is an important thing to mention in this post. Weighing the cost of everything is very important, but it is a topic of its own; it is a whole different skill to hone (I think Duncan actually wrote a post about this in the CFAR handbook).

This is a great post that exemplifies what it is conveying quite well. I have found it very useful when talking with people and trying to understand why I am having trouble explaining or understanding something.

This seems like a good analysis of how a person can use what I call the mindsets of reputation and clarification.

Reputation mindset combines the mindsets of strategy and empathy, and it deals with fortifying impressions. It can help one to be aware of emotional associations that others may have for things one may be planning to say or do. That way one can avoid unintended associations where possible, or preemptively build up positive emotional associations to counteract negative ones that can't be avoided, such as by demonstrating one understands and supports someone's values before criticizing the methods they use to pursue those values.

Clarification mindset combines strategy mindset and semantics mindset, and it deals with explicit information. It helps people provide context and key details to circumvent unintended interpretations of labels and rules, or at least the most likely misinterpretations in a particular context.

(Reputation and clarification make up the strategy side of presentation mindset. Presentation deals with ambiguity in general, and the strategy side handles robust communication.)

These are powerful tools, and it's helpful to have characterizations of them and examples of use cases. Nicely done!

Just some thoughts I had while reading:

rule out everything you didn't mean

This reminds me of something I've heard -- that a data visualization is badly designed if different people end up with different interpretations of what the data visualization is saying. Similarly, we want to minimise the possible misinterpretations of what we write or say.

Each time I add another layer of detail to the description, I am narrowing the range of things-I-might-possibly-mean, taking huge swaths of options off the table.

Nice point, I've never really thought about it this way, yet it sounds so obvious in hindsight!

Choosing to include specific details (e.g. I like to eat red apples) constrains the possible interpretations along the key dimensions (e.g. color/type), but leaves room for different interpretations along presumably less important dimensions (e.g. size, variety).

I have a tendency to be very wordy partly because I try to be precise about what I say (i.e. try to make the space enclosed by the moat as small as possible). Others are much more efficient at communicating. I'm thinking it's because they are much better at identifying which features are more relevant, and are happy to leave things vague if they're less critical.

One of L. Ron Hubbard's more controversial writings was:

"The seven-year-old girl who shudders because a man kisses her is not computing; she is reacting to an engram since at seven she should see nothing wrong in a kiss, not even a passionate one."

Scientologists are not allowed to discuss or analyze Hubbard's writings, but they seem to think he's merely describing a supernatural effect.

Others interpret the word "should" to mean Hubbard was a big fat pervert. Either way he could have added a few sentences ruling out the worse interpretation here.

As a separate note: this series of posts you've done recently has hit a chord in our local group apparently, because we've had a lot of fun talking about them. I can't say for you whether we've appreciated it in an amount that makes your work worth it, but I've certainly appreciated it quite a bit.

"From another perspective, if this were obvious, more people would have discovered it, and if it were easy, more people would do it, and if more people knew and acted in accordance with the below, the world would look very different."

so, i know another person who did the same, and i tried that for some time, and i think this is an interesting question i want to try to answer.

so, this other person? her name is Basmat. and it sorta worked for her. she saw she was read as contrarian and received with adversity, and that people attributed to her things she didn't say. and she decided to write very long posts that explained her worldview and included what she definitely doesn't mean. she was ruling out everything else. and she became a highly respected figure in that virtual community. and... she still had people who misunderstood her. but she had much more legitimacy in shutting them up as illegitimate trolls that need not be respected or addressed.

see, a lot of her opinions were outside the Overton window. and even in an internet community dedicated to it, there was some wave-of-moderation. one that sees people like her as radical and dogmatic and bad and dangerous. and the long length... it changed the dynamic. but mostly it was a costly, and as such trustworthy, signal that she is not dogmatic. that she can be reasoned with. this is one of my explanations for that.

but random people still misunderstood her, in exactly the same ways she ruled out! it was the members of the community, who know her, that stopped doing that. random guests - no.

why? my theory is that there are things that language is designed to make hard to express. the landscape is such that it is easy to misunderstand or misrepresent certain opinions, in Badwill, to make them sound much worse than they are.

and this is related to my experience. which is - most people don't want to communicate in Goodwill. they don't try to understand what I'm trying to point at. they try to round my position to the most strawmanish one they reasonably can, and then attack it.

i can explain at length what i mean, and this will make it worse, as i give them more words that can be misrepresented.

and what i learned is to be less charitable, to filter those people out ruthlessly, because it is a waste of time to engage. if i make the engagement in little pieces with opportunity for that person's feedback, and ask if i was understood and if he disagrees, if i make Badwill strategies hard - they will refuse to engage.

and if i clarify and explain and rule out everything else in Goodwill, they just find new and original ways to distort what i just said.

i still didn't read the whole post, but i know my motivation is such that i will write this comment now and will not if i postpone it. but i want to say - in my experience, such a strategy works ONLY in a Closed Garden. in an Open Garden, with too many people acting in Badwill, it's a losing strategy.

(i also planned to write about length and that 80%-90% of the people will just refuse to engage with a long enough text or explanation, but exhausted my writing-in-English energy for now. it is a much more important factor than the dynamic i described, but i want to filter such people so i mostly ignore it. in the real world though, You Have Four Words, and most people will simply refuse to listen to or read you, in my experience)

edit after i read all the post:

so i was pleasantly surprised by the post. we have very similar models of the dynamics of conversations here. i have little to add besides - I agree!

this is what makes the second part so bewildering - we have totally opposite reactions. but, maybe it can be solved by putting a number on it?

if i want to communicate idea that is very close to politically-charged one, 90% of people will be unable to hear it no matter how i will say that. 1% will hear no matter what. and another 9% will listen, if it is not in public, if they already know me, if they are in the right emotional space for that.

also, 30%-60% of the people will pretend they are listening to me in good faith only to make bad faith attacks and gotchas.

which is to say - i did the experiment. and my conclusion was i need to filter more. that i want to find and avoid the bad-faith actors, the sooner - the better. that in almost all cases i will not be able to have a meaningful conversation.

and like, it works, sorta! if i feel extremely Slytherin and Strategic and decide my goal is to convince people or make them listen to my actual opinion, i sorta can. and they will sorta-listen. and sorta-accept. but with people that can't do the decoupling thing or just trust me - i will not have the sort of discussion i find value in. i will not be able to have a Goodwill discussion. i will have a Badwill discussion where i carefully avoid all the traps and as a prize get a you-are-not-like-the-other-X badge. it's a totally unsatisfying, uninteresting experience.

what i learned from my experience is that the work is practically never worth it, and it's actually counter-productive a lot of the time, as it makes sorting out Badwill actors harder.

now i prefer that people who are going to round me to the closest strawman demonstrate it sooner, so i can avoid them fast and search for the 1%.

because those numbers? i pulled them right from my ass, but they are wildly different in different places. and it depends on the local norms (which is why i hate the way Facebook killed the forums in Hebrew - it destroyed Closed Gardens, and the Open Garden sucks a lot. and there are very few Closed Gardens that people are creating again). but they can be more like 60%-40% in certain places. and certain places already filtered for people that think that long posts are good, that nuances are good. and certain places filtered for lower resolution and You Have Four Words, and every discussion will end with every opinion rounded to one of the three opinions there, because there is simply no place for better resolution.

it's not worth it to try to reason with such people. it's better to find better places.

all this is very good when people try to understand you in Goodwill. it's totally worth it then. but it does not move people from Badwill to Goodwill, from Mindkilled to not. it can make dialog with mindkilled people sorta not-awful, if you pour in a lot of time and energy. like, much more than i can in English now. but it's not worth it.

do you think it's worth it? do you think about situations, like this one with $ORGANIZATION, where you have to have this dialog? i feel like we have different kinds of dialogs in mind. and we definitely have very different experiences. I'm not even sure that we are disagreeing on something, and yet, we have very similar descriptions and very different prescriptions...

****

it was very validating to read Varieties Of Argumentative Experience. because, most discussions suck. it's just the way things are.

I can accept that you can accidentally suck the discussion, but not move it higher on the discussion pyramid.

****

about this example - i downvoted the first and third, and upvoted the second. my map says that the person that wrote it assigns high probability to $ORGANIZATION being a bad actor as part of a complicated worldview about how humans work, and that comment didn't make him update this probability at all, or maybe caused an epsilon update.

he actually has a different model. he actually thinks $ORGANIZATION is a bad actor, and it's good that he can share his model. do you wish for a Less Wrong where you can't share that model? do you find this model obviously wrong? i can't believe you want people who think others are bad actors to pretend they don't think so, but it's a failure mode i saw and highly dislike.

the second comment is highly valuable, and the ability to see and to think Bullshit like the author did is a highly valuable skill that I'm learning now. i didn't think about that. i want to have a constantly-running background process that is like that commenter. Shoulder Advisor, as i believe you would have described it.

Clear communication is difficult. Most people, including many of those with thoughts genuinely worth sharing, are not especially good at it.

I am only sometimes good at it, but a major piece of what makes me sometimes good at it is described below in concrete and straightforward terms.

The short version of the thing is "rule out everything you didn't mean."

That phrase by itself could imply a lot of different things, though, many of which I do not intend. The rest of this essay, therefore, is me ruling out everything I didn't mean by the phrase "rule out everything you didn't mean."

Meta

I've struggled much more with this essay than most. It's not at all clear to me how deep to dive, nor how much to belabor any specific point.

From one perspective, the content of this essay is easy and obvious, and surely a few short sentences are all it takes to get it across?

From another perspective, if this were obvious, more people would have discovered it, and if it were easy, more people would do it, and if more people knew and acted in accordance with the below, the world would look very different.

So that's evidence that my "easy and obvious" intuition is typical minding or similar, and in response I've decided to err on the side of going slowly and being more thorough than many readers will need me to be. If you find yourself impatient, and eager to skip to the end, I do not have a strong intuition that you're making a mistake, the way I have in certain other essays.

I note that most of my advice on how to communicate clearly emerges fairly straightforwardly from a specific model of what communication is—from my assumptions and beliefs about what actually happens when one person says words and other people hear those words. So the majority of this essay will be spent transmitting that model, as a prerequisite for making the advice make sense.

The slightly less short version

Words have meaning (but what is it?)

It's a common trope in rationalist circles that arguments over semantics are boring/unproductive. Everyone seems to have gotten on the same page that it's much more worthwhile to focus on the substance of what people intend to say than on what the words they used to say it 'really' mean.

I agree with this, to a point. If you and I are having a disagreement, and we discover that we were each confused about the other's position because we were using words differently, and can quickly taboo those words and replace them with other words that remove the confusion, this is obviously the right thing to do.

But that's if our goal is to resolve a present and ongoing disagreement. There can be other important goals, in which the historical record is not merely of historical interest.

For instance: it's possible that one of us made an explicit promise to the other, and there was a double illusion of transparency—we both thought that the terms of the agreement were clear, but they were not, thanks to each of us using words differently. And then we both took actions according to our contradictory understandings, and bad things happened as a result, and now we're trying to repair the damage and settle debts.

In this case, there's not just the question of "what did we each mean at the time?" There's also the question of "what was reasonable to conclude, at the time, given all of the context, including the norms of our shared culture?"

This is a more-or-less objective question. It's not an unresolvable he-said-she-said, or a situation where everyone's feelings and perspectives are equally valid.

(For a related and high-stakes example, consider the ongoing conflict over whether-or-not-and-to-what-degree the things Donald Trump said while president make him responsible for the various actions of his supporters. There are many places where Trump and Trump's supporters use the defense "what he literally said was X," and Trump's detractors counter "X obviously means Y, what kind of fools do you take us for," and in most cases this disagreement never resolves.)

I claim there is a very straightforward way to cut these Gordian knots, and it has consistently worked for me in both public and private contexts:

"Hey, so, looking back, your exact words were [X]. I claim that, if we had a hundred people in the relevant reference class evaluate [X] with the relevant context, more than seventy of them would interpret those words to be trying to convey something like [Y]."

This method has a lot to recommend it, over more common moves like "just declare authoritatively what words mean," or "just leap straight to accusing your conversational partner of being disingenuous or manipulative."

In particular, it's checkable. In the roughly thirty times I've used this method since formalizing it in my head three years ago, there have been about four times that my partner has disagreed with me, and we've gone off and done a quick check around the office, or set up a poll on Facebook, or (in one case) done a Mechanical Turk survey. We never went all the way out to a hundred respondents, but in each of the four cases I can recall, the trends became pretty compelling pretty quickly.

(I was right in three cases and wrong in one.)
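A small poll can be informative well before it reaches a hundred respondents. The sketch below is an illustration rather than the procedure used in those checks: it asks how likely a given poll result would be if the true rate really were 70%. The poll counts are hypothetical, and treating respondents as independent is an assumption.

```python
from math import comb


def prob_at_most(k: int, n: int, p: float) -> float:
    """P(at most k of n respondents agree), if each agrees independently with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


# Hypothetical poll: 20 people asked, only 8 read [X] as meaning [Y].
n_asked, n_agree = 20, 8
tail = prob_at_most(n_agree, n_asked, p=0.70)

print(f"P(<= {n_agree} of {n_asked} agree | true rate 70%) = {tail:.4f}")
```

If the printed probability is very small, the poll is hard to square with the "more than seventy out of a hundred" claim, even though far fewer than a hundred people were asked.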

It also underscores (embodies?) a crucial fact about the meaning of words in practice:

Meaning is not a single fact, like "X means [rigorous definition Y]," no matter how much we might wish it were (and no matter how much it might be politically convenient to declare it so, in the middle of a disagreement).

Rather, it's "X means Y to most people, but also has a tinge of Z, especially under circumstance A, and it's occasionally used sarcastically to mean ¬Y, and also it means M to people over the age of 45," and so on and so forth.

Cameron is fun at parties.

(Cameron is actually fun at parties, at least according to me and my aesthetic and the kind of experience I want to have at parties; I know that "X is fun at parties" usually means that X is not fun at parties so I figured I'd clarify that my amusement at the above line is grounded in the fact that it's unusually non-sarcastic.)

Visualizing the distribution

There's a parallel here to standard rationalist reasoning around beliefs and evidence—it's not that X is true so much as we have strong credence in X, and it's not that X means Y so much as X is stronger evidence for Y than it is for ¬Y. In many ways, thinking of meaning as a distribution just is applying the standard rationalist lens to language and communication.

Even among rationalists, not everyone actually bothers to run the [imagine a crowd of relevant people and make a prediction about the range of their responses] move I described in the previous section. I run it because I've spent the last twenty years as a teacher and lecturer and writer and manager, and have had to put a lot of energy into adapting and reacting to things-landing-with-people-in-ways-I-didn't-anticipate-or-intend. These days, I have something like a composite shoulder mob that's always watching the sentences as they form in my mind, and responding with approval or confusion or outrage or whatever.

But I claim that most people could run such an algorithm, if they chose to. Most people have sufficient past experience with witnessing all sorts of conversations-gone-sideways, in all sorts of contexts. The data is there, stored in the same place you store all of your aggregated memories about how things work.

And while it's fine to not want that subprocess running all the time, the way it does in my brain, I claim it's quite useful to practice booting it up until it becomes a switch you are capable of flipping at will.

It's especially useful because the generic modeling-the-audience move is step three in the process of effective, clear communication. If you can't do it, you'll have a hard time moving past "say the words that match what's in your brain" and getting to "say the words that will cause the thing in their brain to match what's in your brain."

(Which is where the vast majority of would-be explainers lose their audiences.)

A toy example:

Imagine that I'm imagining a tree. A specific tree—one from the front yard of my childhood home in North Carolina.

If I want to get the thing in my head to appear in your head, a pretty good start would be to say "So, there's this tree..."

At that point, two seconds in, the minds of my audience will already be in motion, and there will be a range of responses to having heard and understood those first four words.

...in fact, it's likely that, if the audience is large enough, some of them will be activating concepts that the rest of us wouldn't recognize as trees at all. Some of them might be imagining bushes, or Ents, or the act of smoking marijuana, or a Facebook group called "Tree," or their family tree, or the impression of shade and rustling leaves, or a pink elephant, and so on and so forth.

I like to envision this range-of-possible-responses as something akin to a bell curve. If all I've communicated so far is the concept "tree," then most people will be imagining some example of a tree (though many will be imagining very different trees) and then there will be smaller numbers of people at the tails imagining weirder and weirder things. It's not actually a bell curve, in the sense that the-space-of-possible-responses is not really one-dimensional, but a one-dimensional graph is a way to roughly model the kind of thing that's going on.

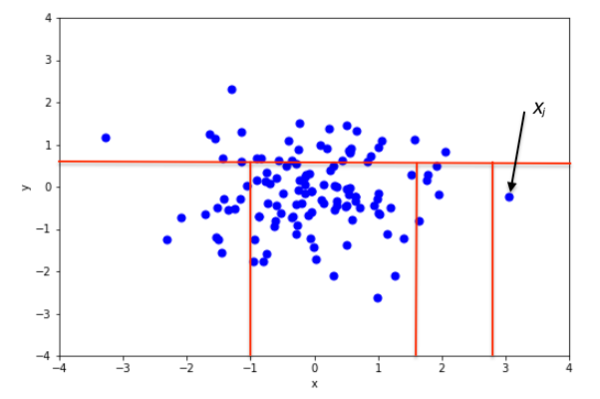

The X-axis denotes the range of responses. Everyone in a given column is imagining basically the same thing; the taller the column the more common that particular response. So perhaps one column contains all of the people imagining oak trees, and another column contains all of the people imagining pines:

Columns that are close to one another are close in concept-space; they would recognize each other as thinking of a pretty similar thing. For something like "tree," there's going to be a cluster in the middle that represents something like a normal response to the prompt—the sort of thing that everyone would agree is, in fact, a tree.

If there's a cluster off to one side, as in this example, that might represent a second, more esoteric definition of the word (say, variants of the concept triggered in the minds of actual botanists), or maybe a niche subculture with unique associations (say, some group that uses trees as an important religious metaphor).

Of course, if we were to back out to a higher-dimensional perspective, we would likely see that there are actually a lot of distinct clusters that just appear to overlap, when we collapse everything down to a single axis:

... perhaps the highest peak here represents all of the people thinking of various deciduous leafy trees, and the second highest is the cluster of people thinking of various coniferous evergreen trees, and the third highest contains people thinking of tropical trees, and so on.

This more complicated multi-hump distribution is also the sort of thing one would expect to see if one were to mention a contentious topic with clear, not-very-overlapping camps, such as "gun rights" or "cancel culture." If all you say is one such phrase, then there will tend to be distinct clusters of people around various interpretations and their baggage.

Shaping the distribution

But of course, one doesn't usually stop after saying a single word or phrase. One can usually keep going.

There are two ways to conceptualize what's happening, as I keep adding words.

The standard interpretation is that I am adding detail. There was a blank canvas in your mind, and at first it did not have anything at all, but now it has a stop-motion of a sickly magnolia tree growing into a magnificent, thriving one.

I claim the additive frame is misleading, though. True, I am occasionally adding new conceptual chunks to the picture, but what's much more important, and what's much more central to what's going on, is what I'm taking away.

If you had a recording of me describing the tree, and you paused after the first four words and asked a bunch of people to write down what I was probably talking about, many of them would likely feel uncomfortable doing so. They would, if pressed, point out that while sure, yeah, they had their own private default mental association with the word "tree," they had no reason to believe that the tree in their head matched the one in my head.

Many of them would say, in other words, that it's too soon to tell. There are too many possibilities that fit the words I've given them so far.

Pause again after another couple of seconds, though, and they'd feel a lot more comfortable, because adding "...a magnolia" rules out a lot of things. Pines, for instance, and oaks, and bushes, and red balloons.

If we imagine that same pile of listeners that were previously in a roughly-bell-shaped distribution, adding the word "magnolia" reshuffles the distribution. It tightens it, shoving a bunch of people who were previously all over the place into a much narrower spread:

Each time I add another layer of detail to the description, I am narrowing the range of things-I-might-possibly-mean, taking huge swaths of options off the table. There are many more imaginings compatible with "tree" than with the more specific "magnolia tree," and many more imaginings compatible with "magnolia tree" than with "magnolia tree that was once sickly and scrawny but is now healthy and more than ten meters tall."

Note that the above paragraph of description relies upon the listener already having the concept "magnolia." If I were trying to create that concept—to paint the picture of a magnolia using more basic language—I would need to take a very different tack. Instead, in the paragraph above, what I'm doing is selecting "magnolia" out of a larger pile of possible things-you-might-imagine. I'm helping the listener zero in on the concept I want them to engage with.

And the result of this progressive narrowing of the picture is that, by the time I reach the end of my description, most of the people who started out imagining palm trees, or family trees, or who misheard me and thought I said "mystery," have now updated toward something much closer to the thing in my head that I wanted to transmit.
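The narrowing in the toy example can be sketched as repeated filtering over a pool of candidate interpretations. Everything in the pool below (the images and the phrases each one is compatible with) is invented for illustration; the point is only that each added phrase removes candidates rather than adding them.

```python
# Hypothetical images a listener might form, each tagged with the
# descriptive phrases it is compatible with (all invented for illustration).
candidates = {
    "oak in a park": {"tree"},
    "pine forest": {"tree"},
    "family tree": {"tree"},
    "bush": {"tree"},
    "scrawny magnolia": {"tree", "magnolia"},
    "tall healthy magnolia": {"tree", "magnolia", "tall"},
}


def still_possible(pool: dict, heard: set) -> list:
    """Return the images compatible with every phrase heard so far."""
    return [image for image, fits in pool.items() if heard <= fits]


heard = set()
remaining_counts = []
for phrase in ["tree", "magnolia", "tall"]:
    heard.add(phrase)
    remaining = still_possible(candidates, heard)
    remaining_counts.append(len(remaining))
    print(f"after {phrase!r}: {remaining}")
```

A real audience does not drop out of the pool, of course; listeners whose first guess is ruled out move to one of the images that remain, which is what tightens the distribution.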

Backing out from the toy example, to more general principles:

No utterance will be ideally specific. No utterance will result in every listener having the same mental reaction.

But by using a combination of utterances, you can specify the location of the-thing-you-want-to-say in concept space to a more or less arbitrary degree.

"I'm thinking of a rock, but more specifically a gray rock, but more specifically a rock with a kind of mottled, spotted texture, but more specifically one that's about the size of a washing machine, but more specifically one that's sitting on a hillside, but more specifically one that's sitting half embedded in a hillside, but more specifically a hillside that's mostly mud and a few scruffy trees and the rock is stained with bird poop and scattered with twigs and berries and the sun is shining so it's warm to the touch and it's located in southern California—"

Building a meaning moat

When the topic at hand is trees, it's fairly easy to get people to change their position on the bell curve. Most people don't have strong feelings about trees, or sticky tribal preconceptions, and therefore they will tend to let you do things like iterate, or add nuance, or start over and try again.

There aren't really attractors in the set of tree concepts—particular points in concept-space that tinge and overshadow everything near them, making nuance difficult. Not strong ones, at least.

This is much less true for topics like politics, or religion, or gender/sexuality/relationships. Topics where there are high stakes, where the standard positions are more or less known, and audiences have powerful intuitions about the kinds of people who take those positions.

In some domains, there are strong pressures driving a kind of rounding-off and oversimplification, in which everything sufficiently close to X sounds like X, and is treated as if it is X, which often pushes people in favor of not-quite-X straight into the X camp, which further accelerates the process.

(Related.)

It's one thing to nudge people from "tree" to the more specific "magnolia," even if your clumsy first pass had them thinking you meant "mangroves." It's another thing entirely to start out with a few sentences that trigger the schema "eugenicist" or "racist" or "rape apologist," and then get your listeners to abandon that initial impression, and update to believing that you meant something else all along.

This is why this essay strongly encourages people to model the audience's likely reactions up front, rather than simply trying things and seeing what comes back. Attractors are hard to escape, and if you have no choice but to tread near one, there's a huge difference between:

and

The key tool I'm advocating here is something I'm calling a meaning moat.

(As in, "I'm worried people will misinterpret this part of the email; we need to put a meaning moat in between our proposal and [nearest objectionable strawman].")

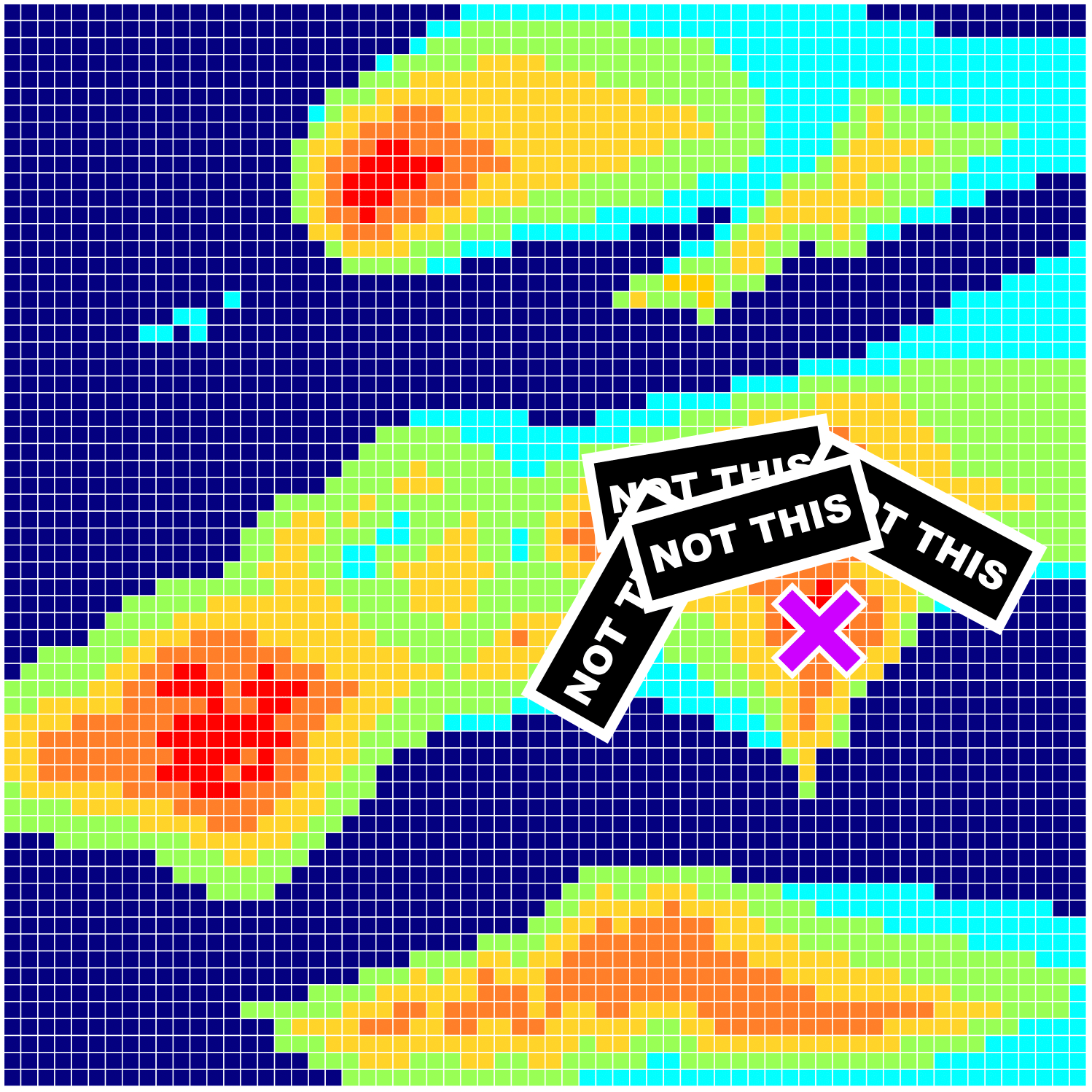

Imagine one of the bell curves from before, but "viewed from above," such that the range of responses are laid out in two-dimensional space. Someone is planning on saying [a thing], and a lot of people will hear it, and we're going to map out the distribution of their probable interpretations.

Each square above represents a specific possible mental state, which would be adopted in response to whatever-it-is that our speaker intends to say. Squares are distinct(ish) audience interpretations.

(I'm deliberately not using a specific example in this section because I believe that any such example will split my readers in exactly the way I want to talk about sort of neutrally and dispassionately, and I don't want the specifics to distract from a discussion of the general case. But if you absolutely cannot move forward without something concrete, feel free to imagine that what's depicted is the range of reactions to someone who starts off by saying "people should be nicer." That is, the above map represents the space of what a bunch of individual listeners would assume that the phrase "people should be nicer" means—the baggage that each of them would bring to the table upon hearing those words.)

As with the side-view of the bell curve above, adjacent spots are similar interpretations; for this map we've only got two axes so there are only two gradients being represented.

Navy squares mean "no one, or very few people, would hold this particular interpretation after hearing [thing]."

Red squares mean "very many people would hold this interpretation after hearing [thing]."

The number of clusters in the map is more or less arbitrary, depending on how tight a grouping has to be before you call it a "cluster." But there are at least three, and arguably as many as eight. This would correspond to there being somewhere between three and eight fairly distinct interpretations of whatever was just said. So, if it was a single word, that means that word has at least three common definitions. If it was a phrase, that means that the phrase could be taken in at least three substantially different ways (e.g. interpreted as having been sincere, sarcastic, or naive). And if it was a charged or political statement, that means there are probably at least three factions with three different takes on this issue.

I claim that something-like-this-map accurately represents what actually happens whenever anyone says approximately anything. There is always a range of interpretation, and if there are enough people in the audience, there will be both substantial overlap and substantial disoverlap in those interpretations.

(I've touched on this claim before.)

And now, having anticipated this particular range of responses, most of which will not match the concept within the speaker's mind, it's up to the speaker to rule out everything else.

(Or, more accurately, since one can't literally rule out everything else, to rule out the most likely misunderstandings, in order of importance/threat.)

What is our speaker actually trying to convey?

Let's imagine three different cases:

In the first case, the concept that lives in our speaker's mind is near to the upper cluster, but pretty distinct from it.

In the second case, the speaker means to communicate something that is its own cluster, but it's also perilously close to two other nearby ideas that the speaker does not intend.

In the third case, the speaker means something near the center of a not-very-tightly-defined memeplex.

(We could, of course, explore all sorts of other possibilities, including possibilities on very different maps, but these three should be enough to highlight the general principle.)

(I'm going to assume that the speaker is aware of these other, nearby interpretations; things get much harder if you're feeling your way forward blindly.)

(I'm also going to assume that the speaker is not trying to say something out in the blue, because if so, their first draft of an opening statement was so misleading in expectation, and set them up for such an uphill battle, that they may as well give up and start over.)

In each of these three cases, there are different misconceptions threatening to take over. Our speaker has different threats to defend against, and should employ a different strategy in response to each one.

In the first case, the biggest risk is that the speaker will be misconstrued as intending [the nearby commonly understood thing]. People will listen to the first dozen words, recognize some characteristic hallmarks of the nearby position, and (implicitly, unintentionally) conclude that they know exactly what the speaker is talking about, and it's [that thing].

This will be particularly true of people who initially thought that the speaker meant [things represented by the lower patches of red and orange]. As soon as those people realize "oh, they're not expressing the viewpoint I originally thought they were," many of them will leap straight to the central, typical position of the upper patch.

People usually do not abandon their whole conceptual framework all at once; if I at first thought you were pointing at a pen on my desk, and then realized that you weren't, I'll likely next conclude that you were pointing at the cup on my desk, rather than concluding that you were doing an isometric bodyweight exercise. If there are a few common interpretations of your point, and it wasn't the first one, people will quite reasonably tend to think "oh, it was the second one, then."

So in the first case, anticipating this whole dynamic, the speaker should build a meaning moat that unambiguously separates their point from that nearby thing. They should rule it out; put substantial effort into demonstrating that [that thing] cannot possibly be what they mean. [That thing] represents the most likely misunderstanding, so distinguishing their true position from it is the highest priority.

(One important and melancholy truth is that you are never fully pinning down a concept in even a single listener's mind, let alone a diverse group of listeners. You're always, ultimately, drawing some boundary and saying "the thing I'm talking about is inside that boundary." The question is just how tight you need the boundary to be—whether greater precision is worth the greater effort required to achieve it, and how much acceptable wiggle room there is for the other person to be thinking something a little different from what you intended without that meaningfully impacting the goals of the interaction. Hence, the metaphor of a moat rather than something like precise coordinates.)

In the second case, there's a similar dynamic, except it's even more urgent, since the misunderstandings are closer. It will likely take even more words and even more careful attention to avoid coming off as trying to say one of those other very nearby things; it often won't suffice to just say "by the way, not [that]."

For instance, suppose there's a policy recommendation B, which members of group X often support, for reasons Y and Z.

If you disagree with group X, and think reasons Y and Z are bad or invalid, yet nevertheless support B for reasons M and N, you'll often have to do a lot of work to distinguish yourself from group X. You'll often have to carefully model Y and Z, and compellingly show (not just declare) that they are meaningfully distinct from M and N. And if the policy debate is contentious enough, or group X abhorrent enough, you may even need to spend some time passing the Ideological Turing Test (ITT) of someone who is suspicious that anyone who supports B must be X, or that support of B is tantamount to endorsement of the goals of X.

(A hidden axiom here is that people believe the things they believe for reasons. If you anticipate being rounded off to some horrible thing, that's probably both because a) you are actually at risk of being rounded off to it, and b) the people doing the rounding are doing the rounding because your concept is genuinely hard to distinguish from the horrible thing, in practice. Which means that you can't reliably/successfully distinguish it by just saying that it's different—you have to make the difference clear, visceral, and undeniable. For more in this vein, look into decoupling vs. contextualizing norms.)

In other words, not "I'm not racist, but B" so much as "no, you're not crazy, here's why B might genuinely appear racist, and here's why racists might like or advocate for B, I agree those things are true and problematic. But for what it's worth, here's a list of all the things those racists are wrong about, and here's why I agree with you that those racists are terrible, and here's a list of all the good things that are in conflict with B, and here's my best attempt to weigh them all up, and here are my concrete reasons why I still think B even after taking all of that into account, and here's why and how I think it's possible to support B without effectively lending legitimacy to racists, and here are a couple of examples of things I might observe that would cause me to believe I was wrong about all this, and here's some up-front validation that you've probably heard this all before and I don't expect you to just take my word for any of this but please at least give me a chance to prove that I actually have a principled stance, here."

The latter is what I mean by "meaning moat." The former is just a thin layer of paint on the ground.

Interestingly, the speaker in the third case can get away with putting forth much less effort. There's no major nearby attractor threatening to overshadow the point they wish to make, and the other available preconceptions are already distant enough that there likely won't be a serious burden of suspicion to overcome in the first place. There's a good chance that they actually will be able to simply declare "not that," and be believed.

The more similar your point is to a preconception the audience holds, the harder you'll have to work to get them to understand the distinction. And the stronger they feel about the preconception, the harder it will be to get them to have a different feeling about the thing you're trying to say (which is why it's best to start early, before the preconception has had a chance to take hold).

Three failures (a concrete example)

Recently-as-of-the-time-of-this-writing, there was heated discussion on LessWrong about the history of a research organization in the Bay Area, and the impact it had on its members, many of whom lived on-site under fairly unusual conditions.

Without digging into that broader issue at all, the following chain of three comments in that discussion struck me as an excellent example of three people in a row not doing sufficient work to rule out what they did not mean.

The first comment came from a member of the organization being discussed:

In response:

And in response to that (from a third party):

In my culture, none of these three comments passes muster, although only one of them was voted into negative territory in the actual discussion.

The first speaker was (clearly and credibly, in my opinion) concerned with preventing harm. They felt that the problems under discussion had partially been caused by a lack of outreach/insufficient lines of communication, and were trying to say "there are people here who care, and who are listening, and I am one of them."

(Their comment was substantially longer than what's quoted here, and contained a lot of other information supporting this interpretation.)

But even in the longer, complete comment, they notably failed to distinguish their offer of help from a trap, especially given the atmosphere of suspicion that was dominant at the time. Had they paused to say to themselves "Imagine I posted this comment as-is, and it made things worse. What happened?" they would almost certainly have noticed that there was an adversarial interpretation, and made some kind of edit in pre-emptive response.

(Perhaps by validating the suspicion, and providing an alternate, third-party route by which people could register concerns, which both solves the problem in the world where they're a bad actor and credibly signals that they are not a bad actor.)

The second speaker ... well, the second speaker probably did say exactly what they meant, connotation and implication and all. But if I imagine a better version of the second speaker—one who is less overconfident and more capable of doing something like split and commit—and I try to express the same concern from that perspective, it would go something like:

"Okay, so, I understand that you're probably just trying to help, and that you genuinely want to hear people's stories so that you can get to work on making things better. But like. You get how this sounds, right? You get how, if I'm someone who's been systematically and cleverly abused by [organization], that asking me to email the higher-ups of [organization] directly is not a realistic solution. At best, this comment is tone-deaf; at worst, it's what someone would do if they were trying to look good while participating in a cover-up."

The key here being to build a meaning moat between "this is compatible with you being a bad actor" and "you are a bad actor." The actual user in question likely believed that the first comment was sufficient evidence to conclude that the first speaker is a bad actor. I, in their shoes, would not be so confident, and so would want to distinguish my pushback from an accusation.

The third speaker's mistake, in my opinion, lay in failing to distinguish pushback on the form of the second speaker's comment from pushback on the content. They were heavily downvoted—mostly, I predict, because people felt strong resonance with the second speaker's perspective, and found the third speaker's objection to be tangential and irrelevant.

If I myself had wanted to push back on the aggressive, adversarial tone of the second comment, I would have been careful to show that I was not pushing back on the core complaint (that there really was something lacking in the first comment). I would have tried, in my reply, to show how one could have lodged the core complaint while remaining within the comment guidelines, and possibly said a little bit about why those guidelines are important, especially when the stakes are high.

(And I would have tried not to say something snarky while in the middle of policing someone else's tone.)

All of that takes work. And perhaps, in that specific example, the work wasn't worth it.

But there are many, many times when I see people assuming that the work won't be worth it, and ultimately being compelled to spend way more effort trying to course-correct, after everything has gone horribly (and predictably) wrong.

The central motivating insight, restated, is that there's a big difference between whether a given phrasing is a good match for what's in your head, and whether that phrasing will have the effect you want it to have, in other people. Whether it will create, in those other people's heads, the same conceptual object that exists in yours.

A lot of people wish pretty hard that those two categories were identical, but they are not. In many cases, they barely even overlap. The more it matters that you get it right, the more (I claim) you should put concrete effort into envisioning the specific ways it will go wrong, and heading them off at the pass.

And then, of course, be ready for things to go wrong anyway.