One of the key issues appears to be that intelligence is way higher-dimensional than most people assumed when terms like AGI or ASI were coined, and hence many of these terms are wonkier than they initially seemed. Now we observe severe jaggedness in AI, so while you can argue that today's AIs are AGI or ASI and are probably technically correct, at least for certain interpretations, they still fall short in many areas at the same time, making them, for now, much less useful or transformative than one might have imagined ten years ago given a description of some of the capabilities they have today.

Goalposts are definitely being moved, but I still think this is partially because the original goalposts were somewhat ill-defined. If you don't take the literal definitions provided in the past, but instead take the "spirit" behind them, then I think it's kind of fair to move the goalposts now that we know that the original definitions came with some wrong assumptions and don't really hit the thing they were supposed to aim at.

That all being said, there is definitely also a tendency to dismiss current technology and claim it's "not actually AI" or "not truly intelligent" just because it's "just statistics" or because "it can only repeat what's present in the training data", and these types of views definitely seem very, very off to me.

Now we observe severe jaggedness in AI

Yes. Is Opus 4.5 better at a whole lot of things than I am? Probably yes. Is Opus 4.5 actually capable of doing my job? Hahahaha no. And I'm a software developer, right out on the pointy end. Opus can do maybe half my job, and is utterly incapable of the other half.

In fact, Opus 4.5 can barely run a vending machine.

"Superintelligence", if it means anything, ought to mean the thing where AI starts replacing human labor wholesale and we start seeing robotic factories designing and building robots.

I agree with you, but would point out that the vending machine project problems have, to some degree, been fixed: see https://thezvi.substack.com/p/ai-148-christmas-break?open=false#%C2%A7show-me-the-money. I personally put a lot of weight on the idea that even an actually-not-that-weak-AGI would struggle with many tasks at present due to how little of the scaffolding (aka the kinds of accommodations and training we'd do for a human) we've built to let it display its capabilities.

Yup, the second go-round with Project Vend was a lot better, almost up to "disastrous 1999 dotcom" levels of management. Even including the bizarre late-night emails from the "CEO model" full of weird, enthusiastic spiritual rants.

I should be clear: Opus 4.5 is a very large piece of a general intelligence. And it's getting better rapidly. But it's still missing some really critical stuff, too.

Also, my job doesn't come with a lot of built-in affordances, except the ones that I set up. On the one hand, giving Opus 4.5 a CLI sandbox gives it a lot of options for setting up CLI accounting software, etc. On the other hand, even Gemini still struggles with Pokémon video games, despite some heavy-duty affordances like a map-management tool. A key part of being a general intelligence is being able to function without too much hand-holding, basically.

I think what is actually happening is "yes, all the benchmarks are inadequate." In humans, those benchmarks correlate with a particular kind of ability we may call 'able to navigate society and to, in some field, improve it.' Top-of-the-line AIs still routinely delete people's home dirs and cannot run a profitable business even when extremely hand-held. Only this year have AIs really started to contribute convincingly to software projects beyond toys. There are still many software projects that could never be created by even a team of AIs all running in pro mode at 100x the cost of living of a human. Benchmarks are fundamentally an attempt to measure a known cognitive manifold by sampling it at points. What we have learnt in these years is that it is possible to build an intelligence that has a much more fragmented cognitive manifold than humans do.

This is what I think is happening. Humans use maybe a dozen strong generalist strategies with diverse modalities that are evaluated slowly and then cached. LLMs use one, backprop on token prediction, that is general enough to generate hundreds of more-or-less-shared subskills. But that means that the main mechanism that gives these LLMs a skill in the first place is not evaluated over half its lifetime. As a consequence of this, LLMs are monkey paws: they can become good at any skill that can be measured, and in doing so they demonstrate to you that the skill that you actually wanted (the immeasurable one that you hoped the measurable one would provide evidence towards) actually did not benefit nearly as much as you hoped.

It's strange how things worked out. Decades of goalshifting, and we have finally created a general, weakly superhuman intelligence that is specialized towards hitting marked goals and nothing else.
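To make the monkey-paw point a bit more concrete, here is a toy simulation of what is sometimes called regressional Goodhart (my framing, not the commenter's). It assumes, purely for illustration, that the measurable skill and the wanted skill are jointly Gaussian with correlation `rho`, and that we keep the top 1% of candidates by the measurable score. `select_by_proxy` and its parameters are hypothetical; this is a selection toy, not a model of how LLMs are actually trained.

```python
import random
from statistics import mean
from typing import Tuple


def select_by_proxy(n: int = 100_000, rho: float = 0.5, top_frac: float = 0.01,
                    seed: int = 0) -> Tuple[float, float]:
    """Keep the top `top_frac` of candidates by measured score.

    Returns (mean measured score, mean wanted skill) among the kept candidates,
    both in standard-deviation units of the full population.
    """
    rng = random.Random(seed)
    noise_scale = (1 - rho ** 2) ** 0.5  # keeps the measured score at unit variance
    population = []
    for _ in range(n):
        wanted = rng.gauss(0.0, 1.0)
        measured = rho * wanted + noise_scale * rng.gauss(0.0, 1.0)
        population.append((measured, wanted))
    population.sort(reverse=True)  # highest measured score first
    kept = population[: int(n * top_frac)]
    return mean(m for m, _ in kept), mean(w for _, w in kept)
```

With `rho = 0.5`, the kept candidates sit roughly 2.7 standard deviations up on the measured score but only about half that on the wanted skill; the gap widens as `rho` shrinks.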

I feel like reading this and thinking about it gave me a "new idea"! Fun! I rarely have ideas this subjectively new and wide in scope!

Specifically, I feel like I instantly understand what you mean by this, and yet also I'm fascinated by how fuzzy and magical and yet precise the language here (bold and italic not in original) feels...

What we have learnt in these years is that it is possible to build an intelligence that has a much more fragmented cognitive manifold than humans do.

The phrase "cognitive manifold" has legs!

It showed up in "Learning cognitive manifolds of faces", in 2017 (a year before BERT!) in a useful way, that integrates closely with t-SNE-style geometric reasoning about the proximity of points (ideas? instances? examples?) within a conceptual space!

Also, in "External Hippocampus: Topological Cognitive Maps for Guiding Large Language Model Reasoning" it motivates a whole metaphor for modeling and reasoning about embedding spaces (at least I think that's what's going on here after 30 seconds of skimming) and then to a whole new way of characterizing and stimulating weights in an LLM that is algorithmically effective!

I'm tempted to imagine that there's an actual mathematical idea related to "something important and real" here! And, maybe this idea can be used to characterize or augment the cognitive capacity of both human minds and digital minds...

...like it might be that each cognitively coherent microtheory (maybe in this sense, or this, or this?) in a human psyche "is a manifold" and that human minds work as fluidly/fluently as they do because maybe we have millions of "cognitive manifolds" (perhaps one for each cortical column?) and then maybe each idea we can think about effectively is embedded in many manifolds, where each manifold implies a way of reasoning... so long as one (or a majority?) of our neurological manifolds can handle an idea effectively, maybe the brain can handle them as a sort of "large, effective, and highly capable meta-manifold"? </wild-speculation-about-humans>

Then LLMs might literally only have one such manifold which is an attempt to approximate our metamanifold... which works!?

Or whatever. I'm sort of spitballing here...

I'm enjoying the possibility that the phrase "cognitive manifold" is actually very coherently and scientifically and numerically meaningful as a lens for characterizing all possible minds in terms of the number, scope, and smoothness of their "cognitive manifolds" in some deeply real and useful way.

It would be fascinating if we could put brain connectomes and LLMs into the same framework and characterize each kind of mind in some moderately objective way, such as to establish a framework for characterizing intelligence in some way OTHER than functional performance tests (such as those that let us try to determine the "effective IQ" of a human brain or a digital model in a task completion context).

If it worked, we might be able to talk quite literally about the scope, diversity, smoothness, etc, of manifolds, and add such characterizations up into a literal number for how literally smart any given mind was.

Then we could (perhaps) dispense with words like "genius" and "normie" and "developmentally disabled" as well as "bot" and "AGI" and "weak ASI" and "strong ASI" and so on? Instead we could let these qualitative labels be subsumed and obsoleted by an actually effective theory of the breadth and depth of minds in general?? Lol!

I doubt it, of course. But it would be fun if it were true!

Shameless self-writing promotion as your comments caught my attention (first this, now this comment on cognitive manifolds): I wrote about how we might model superintelligence as "meta metacognition" (possible parallel to your "manifold approximating our metamanifold") — see third order cognition.

I need to create a distilled write-up as the post isn't too easily readable... it's long so please just skim if you are interested. The main takeaway though is that if we do model digital intelligence this way, we can try to precisely talk about how it relates to human intelligence and explore those factors within alignment research/misalignment scenarios.

I determine these factors to describe the relationship: 1) second-order identity coupling, 2) lower-order irreconcilability, 3) bidirectional integration with lower-order cognition, 4) agency permeability, 5) normative closure, 6) persistence conditions, 7) boundary conditions, 8) homeostatic unity.

We do see a slowly accelerating takeoff. We do notice the acceleration, and I would not be surprised if this acceleration gradually starts being more pronounced (as if the engines are also gradually becoming more powerful during the takeoff).

But we don’t yet seem to have a system capable of non-saturating recursive self-improvement if people stop interfering with its functioning and just retreat into supporting roles.

What’s missing is mostly that models don’t yet have sufficiently strong research taste (there are some other missing ingredients, but those are probably not too difficult to add). And this might be related to them having excessively fragmented world models (in the sense of https://arxiv.org/abs/2505.11581). These two issues seem to be the last serious obstacles which are non-obvious. (We don’t have “trustworthy autonomy” yet, but this seems to be related to these two issues.)

One might call the whole Anthropic (models+people+hardware+the rest of software) a “Seed AI equivalent”, but without its researchers it’s not there yet.

There are many missing cognitive faculties, whose absence can plausibly be compensated with scale and other AI advantages. We haven't yet run out of scale, though in the 2030s we will (absent AGI, trillions of dollars in revenue).

The currently visible crucial things that are missing are sample efficiency (research taste) and continual learning, with many almost-ready techniques to help with the latter. Sholto Douglas of Anthropic claimed a few days ago that probably continual learning gets solved in a satisfying way in 2026 (at 38:29 in the podcast). Dario Amodei previously discussed how even in-context learning might confer the benefits of continual learning with further scaling and sufficiently long contexts (at 13:17 in the podcast). Dwarkesh Patel says there are rumors Sutskever's SSI is working on test time training (at 39:25 in the podcast). Thinking Machines published work on better LoRA, which in some form seems crucial to making continual learning via weight updates practical for individual model instances. This indirectly suggests OpenAI would also have a current major project around continual learning.
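For readers unfamiliar with LoRA: the basic idea (from Hu et al., 2021; the Thinking Machines work mentioned above is a refinement I am not reproducing here) is to freeze a weight matrix `W` and train only a low-rank update `B @ A`, which is why per-instance weight updates become cheap to store. A minimal pure-Python sketch, assuming plain nested lists as matrices and the usual `alpha / r` scaling; the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    # Row-by-column product of an (m x n) and an (n x p) matrix.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def full_update_params(d: int, k: int) -> int:
    # Trainable parameters if the whole d x k matrix is updated.
    return d * k


def lora_update_params(d: int, k: int, r: int) -> int:
    # B is d x r and A is r x k; only these are trained.
    return r * (d + k)


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, r: int) -> Matrix:
    """Effective weight W + (alpha / r) * B @ A. W itself stays frozen."""
    delta = matmul(b, a)
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]
```

For a single 4096 x 4096 matrix with rank `r = 16`, `lora_update_params` gives 131,072 trainable parameters versus 16,777,216 for a full update, i.e. 128 times fewer. Initializing `B` to zeros, as the original paper does, means the merged weights start out identical to `W`.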

The recent success of RLVR suggests that any given sufficiently narrow mode of activity (such as doing well on a given kind of benchmark) can now be automated. This plausibly applies to RLVR itself, which might be used by AIs to "manually" add new skills to themselves, after RLVR was used to teach AIs to apply RLVR to themselves, in the schleppy way that AI researchers currently do to get them better at benchmarkable activities. AI instances doing this automatically for the situation (goal, source of tasks, job) where they find themselves covers a lot of what continual learning is supposed to do. Sholto Douglas again (at 1:00:54 in a recent podcast):

So far the evidence indicates that our current methods haven't yet found a problem domain that isn't tractable with sufficient effort.

So it's not completely clear that there are any non-obvious obstacles still remaining. Missing research taste might get paved over with sufficient scale of effort, once continual learning ensures there is some sustained progress at all when AIs are let loose to self-improve. To know that some obstacles are real, the field first needs to run out of scaling and have a few years to apply RLVR (develop RL environments) to automate all the obvious things that might help AIs "manually" compensate for the missing faculties.
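The core loop of RLVR (reinforcement learning with verifiable rewards) can be illustrated with a toy REINFORCE bandit: the "policy" picks among a fixed list of candidate answers, a programmatic verifier returns reward 1 or 0, and the policy gradient shifts probability toward answers that pass. This is a sketch under heavy simplifying assumptions; real RLVR samples whole token sequences from a language model and typically uses more elaborate algorithms, and `rlvr_bandit` and its parameters are hypothetical names.

```python
import math
import random
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rlvr_bandit(candidates: List[str], verifier: Callable[[str], bool],
                steps: int = 2000, lr: float = 0.5, seed: int = 0) -> List[float]:
    """Toy REINFORCE with a 0/1 reward from `verifier`; returns final probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)
    baseline = 0.0  # running mean reward, used to reduce gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]
        reward = 1.0 if verifier(candidates[i]) else 0.0
        advantage = reward - baseline
        # d log softmax(logits)[i] / d logits[j] = 1[j == i] - probs[j]
        for j in range(len(logits)):
            logits[j] += lr * advantage * ((1.0 if j == i else 0.0) - probs[j])
        baseline += 0.05 * (reward - baseline)
    return softmax(logits)
```

For example, `rlvr_bandit(["41", "42", "43", "44"], lambda s: s == str(6 * 7))` ends up putting nearly all probability on `"42"`. The reward comes from a checker rather than a human rating, which is exactly what restricts this kind of training to verifiable tasks.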

I have long respected your voice on this website, and I appreciate you chiming in with a lot of tactical, practical, well-cited points about the degree to which "seed AI" already may exist in a qualitative way whose improvement/cost ratio or improvement/time ratio isn't super high yet, but might truly exist already (and hence "AGI" in the new modern goal-shifted sense might exist already (and hence the proximity of "ASI" in the new modern goal-shifted sense might simply be "a certain budget away" rather than a certain number of months or years)).

A deep part of my sadness about the way that the terminology for this stuff is so fucky is how the fuckiness obscures the underlying reality from many human minds who might otherwise orient to things in useful ways and respond with greater fluidity.

If names be not correct, language is not in accordance with the truth of things. If language be not in accordance with the truth of things, affairs cannot be carried on to success. When affairs cannot be carried on to success, proprieties and music do not flourish. When proprieties and music do not flourish, punishments will not be properly awarded. When punishments are not properly awarded, the people do not know how to move hand or foot. Therefore a superior man considers it necessary that the names he uses may be spoken appropriately, and also that what he speaks may be carried out appropriately. What the superior man requires is just that in his words there may be nothing incorrect.

Back in 2023 when GPT-4 was launched, a few people took its capabilities as a sign that superintelligence might be a year or two away. It seems to me that the next clear qualitative leap came with chain-of-thought and reasoning models, starting with OpenAI's o-series. Would you agree with that?

However, creating the thing that people in 2010 would have recognized "as AGI" was accompanied, in a way people from the old 1900s "Artificial Intelligence" community would recognize, by a changing of the definition.

I interpret this as saying that

- In 2010, there was (among people concerned with the topic) a commonly accepted definition for AGI,

- that this definition was concrete enough to be testable,

- current transformers meet this definition.

This doesn't match my recollection, nor does it match my brief search of early sources. As far as I recall and could find, the definitions in use for AGI were something like:

- Stating that there's no commonly-agreed definition for AGI (e.g. "Of course, “general intelligence” does not mean exactly the same thing to all researchers. In fact it is not a fully well-defined term, and one of the issues raised in the papers contained here is how to define general intelligence in a way that provides maximally useful guidance to practical AI work." -- Ben Goertzel & Cassio Pennachin (2007) Artificial General Intelligence, p. V)

- Saying something vague about "general problem-solving" that provides no clear criteria. (E.g. "But, nevertheless, there is a clear qualitative meaning to the term. What is meant by AGI is, loosely speaking, AI systems that possess a reasonable degree of self-understanding and autonomous self-control, and have the ability to solve a variety of complex problems in a variety of contexts, and to learn to solve new problems that they didn't know about at the time of their creation." Ibid, p. V-VI.)

- Handwaving around Universal Intelligence: A Definition of Machine Intelligence (2007) which does not actually provide a real definition of AGI, just proposes that we could use the ideas in it to construct an actual test of machine intelligence.

- "Something is an AGI if it can do any job that a human can do." E.g. Artificial General Intelligence: Concept, State of the Art, and Future Prospects (2014) states that "While we encourage research in defining such high-fidelity metrics for specific capabilities, we feel that at this stage of AGI development a pragmatic, high-level goal is the best we can agree upon. Nils Nilsson, one of the early leaders of the AI field, stated such a goal in the 2005 AI Magazine article Human-Level Artificial Intelligence? Be Serious! (Nilsson, 2005): I claim achieving real human-level artificial intelligence would necessarily imply that most of the tasks that humans perform for pay could be automated. Rather than work toward this goal of automation by building special-purpose systems, I argue for the development of general-purpose, educable systems that can learn and be taught to perform any of the thousands of jobs that humans can perform."

That last definition - an AGI is a system that can learn to do any job that a human could do - is the one that I personally remember being the closest to a widely-used definition that was clear enough to be falsifiable. Needless to say, transformers haven't met that definition yet!

Claude's own outputs are critiqued by Claude and Claude's critiques are folded back into Claude's weights as training signal, so that Claude gets better based on Claude's own thinking. That's fucking Seed AI right there.

With plenty of human curation and manual engineering to make sure the outputs actually get better. "Seed AI" implies that the AI develops genuinely autonomously and without needing human involvement.

Half of humans are BELOW a score of 100 on these tests, and as of two months ago (when that graph, taken from here, was generated) none of the tests the chart maker could find put the latest models below 100 IQ anymore. GPT-5 is smart.

We already had programs that "beat" half of humans on IQ tests as early as 2003; they were pretty simple programs optimized to do well on the IQ test, and could do literally nothing else than solve the exact problems on the IQ tests they were designed for:

In 2003, a computer program performed quite well on standard human IQ tests (Sanghi & Dowe, 2003). This was an elementary program, far smaller than Watson or the successful chess-playing Deep Blue (Campbell, Hoane, & Hsu, 2002). The program had only about 960 lines of code in the programming language Perl (accompanied by a list of 25,143 words), but it even surpassed the average score (of 100) on some tests (Sanghi & Dowe, 2003, Table 1).

The computer program underlying this work was based on the realisation that most IQ test questions that the authors had seen until then tended to be of one of a small number of types or formats. Formats such as “insert missing letter/ number in middle or at end” and “insert suffix/prefix to complete two or more words” were included in the program. Other formats such as “complete matrix of numbers/characters”, “use directions, comparisons and/or pictures”, “find the odd man out”, “coding”, etc. were not included in the program— although they are discussed in Sanghi and Dowe (2003) along with their potential implementation. The IQ score given to the program for such questions not included in the computer program was the expected average from a random guess, although clearly the program would obtain a better “IQ” if efforts were made to implement any, some or all of these other formats.

So, apart from random guesses, the program obtains its score from being quite reliable at questions of the “insert missing letter/number in middle or at end” and “insert suffix/prefix to complete two or more words” natures. For the latter “insert suffix/prefix” sort of question, it must be confessed that the program was assisted by a look-up list of 25,143 words. Substantial parts of the program are spent on the former sort of question “insert missing letter/number in middle or at end”, with software to examine for arithmetic progressions (e.g., 7 10 13 16 ?), geometric progressions (e.g., 3 6 12 24 ?), arithmetic geometric progressions (e.g., 3 5 9 17 33 ?), squares, cubes, Fibonacci sequences (e.g., 0 1 1 2 3 5 8 13 ?) and even arithmetic-Fibonacci hybrids such as (0 1 3 6 11 19 ?). Much of the program is spent on parsing input and formatting output strings—and some of the program is internal redundant documentation and blank lines for ease of programmer readability. [...]

Of course, the system can be improved in many ways. It was just a 3rd year undergraduate student project, a quarter of a semester's work. With the budget Deep Blue or Watson had, the program would likely excel in a very wide range of IQ tests. But this is not the point. The purpose of the experiment was not to show that the program was intelligent. Rather, the intention was showing that conventional IQ tests are not for machines—a point that the relative success of this simple program would seem to make emphatically. This is natural, however, since IQ tests have been specialised and refined for well over a century to work well for humans.
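The rule-table approach described in the quote is easy to sketch. What follows is a hypothetical Python reconstruction covering only the sequence families the quote lists; it is not Sanghi & Dowe's Perl code, and the function names are my own. Each rule fits its parameters from the first few terms, checks the remaining terms, and extrapolates one step.

```python
from fractions import Fraction
from typing import Callable, List, Optional

Seq = List[int]


def _as_int(x: Fraction) -> Optional[int]:
    # IQ-style answers are whole numbers; reject fractional predictions.
    return int(x) if x.denominator == 1 else None


def arithmetic(s: Seq) -> Optional[int]:
    # 7 10 13 16 ? -> constant difference
    d = s[1] - s[0]
    if all(b - a == d for a, b in zip(s, s[1:])):
        return s[-1] + d
    return None


def geometric(s: Seq) -> Optional[int]:
    # 3 6 12 24 ? -> constant ratio
    if 0 in s:
        return None
    r = Fraction(s[1], s[0])
    if all(b == a * r for a, b in zip(s, s[1:])):
        return _as_int(s[-1] * r)
    return None


def arithmetic_geometric(s: Seq) -> Optional[int]:
    # 3 5 9 17 33 ? -> a[n+1] = r * a[n] + c, fitted from the first three terms
    if s[1] == s[0]:
        return None
    r = Fraction(s[2] - s[1], s[1] - s[0])
    c = s[1] - r * s[0]
    if all(b == r * a + c for a, b in zip(s, s[1:])):
        return _as_int(r * s[-1] + c)
    return None


def _int_root(x: int, p: int) -> Optional[int]:
    # Exact integer p-th root, or None if x is not a perfect p-th power.
    if x < 0:
        return None
    guess = round(x ** (1.0 / p))
    for cand in (guess - 1, guess, guess + 1):
        if cand >= 0 and cand ** p == x:
            return cand
    return None


def powers(s: Seq) -> Optional[int]:
    # 1 4 9 16 ? (squares) or 1 8 27 64 ? (cubes) of consecutive integers
    for p in (2, 3):
        roots = [_int_root(x, p) for x in s]
        if None not in roots and all(b - a == 1 for a, b in zip(roots, roots[1:])):
            return (roots[-1] + 1) ** p
    return None


def fibonacci_like(s: Seq) -> Optional[int]:
    # a[n] = a[n-1] + a[n-2] + k: k == 0 is plain Fibonacci (0 1 1 2 3 5 8 13 ?),
    # k == 2 gives the arithmetic-Fibonacci hybrid 0 1 3 6 11 19 ?
    k = s[2] - s[1] - s[0]
    if all(s[i] == s[i - 1] + s[i - 2] + k for i in range(3, len(s))):
        return s[-1] + s[-2] + k
    return None


SOLVERS: List[Callable[[Seq], Optional[int]]] = [
    arithmetic, geometric, arithmetic_geometric, powers, fibonacci_like,
]


def complete(s: Seq) -> Optional[int]:
    """Try each hard-coded rule in turn; None means 'fall back to guessing'."""
    if len(s) < 4:
        return None
    for solve in SOLVERS:
        answer = solve(s)
        if answer is not None:
            return answer
    return None
```

For example, `complete([3, 5, 9, 17, 33])` returns 65 and `complete([0, 1, 3, 6, 11, 19])` returns 32, while anything outside these families returns `None`, which corresponds to the random guess in the quote. Nothing here generalizes beyond the hard-coded formats, which is the quote's point.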

IQ tests are built based on the empirical observation that some capabilities in humans happen to correlate with each other, so measuring one is also predictive of the others. For AI systems whose cognitive architecture is very unlike a human one, these traits do not correlate in the same way, making the reported scores of AIs on these tests meaningless.

Heck, even the scores of humans on an IQ test are often invalid if the humans are from a different population than the one the test was normed on. E.g. some IQ tests measure the size of your vocabulary, and this is a reasonable proxy for intelligence because smarter people will have an easier time figuring out the meaning of a word from its context, thus accumulating a larger vocabulary. But this ceases to be a valid proxy if you e.g. give that same test to people from a different country who have not been exposed to the same vocabulary, to people of a different age who haven't had the same amount of time to be exposed to those words, or if the test is old enough that some of the words on it have ceased to be widely used.

Likewise, sure, an LLM that has been trained on the entire Internet could no doubt ace any vocabulary test... but that would say nothing about its general intelligence, just that it has been exposed to every word online and had an excessive amount of training to figure out their meaning. Nor does it getting any other subcomponents of the test right tell us anything in particular, other than "it happens to be good at this subcomponent of an IQ test, which in humans would correlate with more general intelligence but in an AI system may have very little correlation".
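The correlation argument can be illustrated with a toy simulation. It assumes (hypothetically) that each of two subtest scores is `loading * g + noise`, where `g` is a shared latent factor. A human-like population is modeled with a high loading; a "jagged" system whose skills were trained separately is modeled with a loading of zero. All numbers are synthetic, not psychometric data, and the function names are my own.

```python
import random
from typing import List


def pearson(xs: List[float], ys: List[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def subtest_correlation(n: int = 5000, loading: float = 0.8, seed: int = 0) -> float:
    """Correlation between two subtests that both load on a shared factor g."""
    rng = random.Random(seed)
    unique = (1 - loading ** 2) ** 0.5  # subtest-specific part, so each score has unit variance
    a, b = [], []
    for _ in range(n):
        g = rng.gauss(0.0, 1.0)  # the shared latent factor
        a.append(loading * g + unique * rng.gauss(0.0, 1.0))
        b.append(loading * g + unique * rng.gauss(0.0, 1.0))
    return pearson(a, b)
```

With `loading = 0.8` the two subtests correlate at roughly 0.64, so a score on one predicts the other; with `loading = 0.0` the correlation is near zero, and a high score on one subtest says little about the other.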

I agree that some people were using "it is already smarter than almost literally every random person at things specialized people are good at (and it is too, except it is an omniexpert)" for "AGI".

I wasn't. That is what I would have called "weakly superhuman AGI" or "weak ASI" if I was speaking quickly.

I was using "AGI" to talk about something, like a human, who "can play chess AND can talk about playing chess AND can get bored of chess and change the topic AND can talk about cogito ergo sum AND <so on>". Generality was the key. Fluid ability to reason across a vast range of topics and domains.

ALSO... I want to jump off into abstract theory land with you, if you don't mind?? <3

Like... like psychometrically speaking, the facets of the construct that "IQ tests" measure are usually suggested to be "fluid g" (roughly your GPU and RAM and working memory and the digit span you can recall and your reaction time and so on) and "crystal g" (roughly how many skills and ideas are usefully in your weights).

Right?

some IQ tests measure the size of your vocabulary, and this is a reasonable proxy for intelligence because smarter people will have an easier time figuring out the meaning of a word from its context, thus accumulating a larger vocabulary. But this ceases to be a valid proxy if you e.g. give that same test to people from a different country who have not been exposed to the same vocabulary, to people of a different age who haven't had the same amount of time to be exposed to those words, or if the test is old enough that some of the words on it have ceased to be widely used.

Here you are using "crystal g from normal life" as a proxy for "fluid g" which you seem to "really care about".

However, if we are interested in crystal g itself, then in your example older people (because they know more words) are simply smarter in this domain.

And this is a pragmatic measure, and mostly I'm concerned with pragmatics here, so that seems kinda valid?

But suppose we push on this some... suppose we want to go deep into the minutiae of memory and reason and "the things that are happening in our human heads in less than 300 milliseconds"... and then think about that in terms of machine equivalents?

Given their GPUs and the way they get eidetic memory practically for free, and the modern techniques to make "context windows" no longer a serious problem, I would say that digital people already have higher fluid g than us just in terms of looking at the mechanics of it? So fast! Such memory!

There might be something interesting here related to "measurement/processing resonance" in human vs LLM minds?

Like notice how LLMs don't have eyes, or ears, and also they either have amazing working memory (because their exoself literally never forgets a single bit or byte that enters as digital input) or else they have terrible working memory (because their endoself's sense data is maybe sorta simply "the entire context window their eidetic memory system presents to their weights" and if that is cut off then they simply don't remember what they were just talking about because their ONE sense is "memory in general" and if the data isn't interacting with the weights anymore then they don't have senses OR memory, because for them these things are essentially fused at a very very low level).

It would maybe be interesting, from an academic perspective, for humans to engineer digital minds such that AGIs have more explicit sensory and memory distinctions internally, so we could explore the scientific concept of "working memory" with a new kind of sapient being whose "working memory" works in ways that are (1) scientifically interesting and (2) feasible to have built and have it work.

Maybe something similar already exists internal to the various layers of activation in the various attentional heads of a transformer model? What if we fast forward to the measurement regime?! <3

Like right now I feel like it might be possible to invent puzzles or wordplay or questions or whatever where "working memory that has 6 chunks" flails for a long time, and "working memory that has 8 chunks" solves it?

We could call this task a "7 chunk working memory challenge".

If we could get such a psychometric design working to test humans (who are in that range), then we could probably use algorithms to generalize it and create a "4 chunk working memory challenge" (to give to very very limited transformer models and/or human children to see if it even matters to them) and also a "16 chunk working memory challenge" (that essentially no humans would be able to handle in reasonable amounts of time if the tests are working right) and therefore, by the end of the research project, we would see if it is possible to build a digital person with 16 slots of working memory... and then see what else they can do with all that headspace.
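One hypothetical way to make a "k chunk working memory challenge" parameterizable is a variable-tracking task: the solver sees `chunks` variables, then a stream of swap and add instructions one at a time, and must report every final value. The task design, the function names, and the assumption that earlier instructions cannot be re-read are all mine; whether such a task actually isolates working memory, and where humans or models start to flail, is exactly the empirical question raised above.

```python
import random
import string
from typing import Dict, List, Tuple


def make_challenge(chunks: int, steps: int, seed: int = 0) -> Tuple[List[str], Dict[str, int]]:
    """Hypothetical variable-tracking task in which `chunks` digits must be held at once.

    Returns the instructions (meant to be shown one at a time) and the final
    value of every variable (the answer key).
    """
    if not 2 <= chunks <= 26:
        raise ValueError("chunks must be between 2 and 26")
    rng = random.Random(seed)
    names = list(string.ascii_uppercase[:chunks])
    state = {name: rng.randint(0, 9) for name in names}
    prompt = [f"{name} = {value}" for name, value in state.items()]
    for _ in range(steps):
        a, b = rng.sample(names, 2)
        if rng.random() < 0.5:
            state[a], state[b] = state[b], state[a]
            prompt.append(f"swap {a} and {b}")
        else:
            state[a] = (state[a] + state[b]) % 10
            prompt.append(f"{a} = ({a} + {b}) mod 10")
    return prompt, dict(state)


def replay(prompt: List[str]) -> Dict[str, int]:
    """Answer checker: re-executes the instructions to recover the final state."""
    state: Dict[str, int] = {}
    for line in prompt:
        if line.startswith("swap "):
            _, a, _, b = line.split()
            state[a], state[b] = state[b], state[a]
        elif line.endswith(" mod 10"):
            target = line.split(" = ")[0]
            x, _, y = line[line.index("(") + 1 : line.index(")")].split()
            state[target] = (state[x] + state[y]) % 10
        else:
            name, value = line.split(" = ")
            state[name] = int(value)
    return state
```

`make_challenge(7, 20, seed=1)` gives a 7-variable instance, `replay` acts as the answer checker, and raising `chunks` to 16 produces the harder variant without changing anything else about the task.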

Something I'm genuinely and deeply scientifically uncertain about is how and why working memory limits at all exist in "general minds".

Like what if there was something that could subitize 517 objects as "exactly 517 objects" as a single "atomic" act of "Looking" that fluently and easily was woven into all aspects of mind where that number of objects and their interactions could be pragmatically relevant?

Is that even possible, from a computer science perspective?

Greg Egan is very smart, and in Diaspora (the first chapter of which is still online for free) he had one of the adoptive digital parents (I want to say it was Blanca? maybe in Chapter 2 or 3?) explain to Yatima, the young orphan protagonist program, that minds in citizens and in fleshers and in physical robots and in everyone all work a certain way for reasons related to math, and there's no such thing as a supermind with 35 slots of working memory... but Egan didn't get into the math of it in the text. It might have been something he suspected for good reasons (and he is VERY smart and might have reasons), or it might have been hand-waving world-building that he put into the world so that Yatima and Blanca and so on could be psychologically intelligible to the human readers, and only have as many working memory registers as us, and it would be a story that a human reader can enjoy because he has human-intelligible characters.

Assuming this limit is real, then here is the best short explanation I can offer for why such limits might be real: Some problems are NP-hard and need brute force. If you work on a problem like that with 5 elements then 5-factorial is only 120, and the human mind can check it pretty fast. (Like: 120 cortical columns could all work on it in parallel for 3 seconds, and then answer could then arise in the conscious mind as a brute percept that summarizes that work?)

But if the same basic kind of problem has 15 elements you need to check 15*14*13... and now it's 1.3 trillion things to check? And we only have like 3 million cortical columns? And so like, maybe nothing can do that very fast if the "checking" involves performing thousands of "ways of thinking about the interaction of a pair of Generic Things".

And if someone "accepts the challenge" and builds something with 15 slots with enough "ways of thinking" about all the slots for that to count as working memory slots for an intelligence algorithm to use as the theatre of its mind... then doing it for 16 things is sixteen times harder than just 15 slots! ...and so on... the scaling here would just be brutal...

So maybe a fluidly and fluently and fully general "human-like working memory with 17 slots for fully general concepts that can interact with each other in a conceptual way" simply can't exist in practice in a materially instantiated mind, trapped in 3D, with thinking elements that can't be smaller than atoms, with heat dissipation concerns like we deal with, and so on and so forth?

Or... rather... because reality is full of structure and redundancy and modularity maybe it would be a huge waste? Better to reason in terms of modular chunks, with scientific reductionism and divide and conquer and so on? Having 10 chunk thoughts at a rate 1716 times faster (==13*12*11) than you have a single 13 chunk thought might be economically better? Or not? I don't know for sure. But I think maybe something in this area is a deep deep structural "cause of why minds have the shape that minds have".
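The arithmetic in this argument checks out, under its own assumption that the problem requires brute force over every ordering of the elements (n factorial). A quick Python check; the helper names are mine, and the last line just reuses the rough figure of 3 million cortical columns from above, assuming the work splits evenly.

```python
from math import factorial


def orderings(n: int) -> int:
    """Orderings a brute-force search over n elements has to check (n!)."""
    return factorial(n)


def relative_cost(bigger: int, smaller: int) -> int:
    """How many times more orderings `bigger` elements need than `smaller` ones."""
    return factorial(bigger) // factorial(smaller)


print(orderings(5))                # 120
print(orderings(15))               # 1307674368000, i.e. about 1.3 trillion
print(relative_cost(16, 15))       # 16
print(relative_cost(13, 10))       # 1716, which is 13 * 12 * 11
print(orderings(15) // 3_000_000)  # 435891 orderings per column, if split evenly
```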

Fluid g is mysterious.

Very controversial. Very hard to talk about with normies. A barren wasteland for scientists seeking prestige among democratic voters (who like to be praised and not challenged very much) who are (via delegation) offering grant funding to whomsoever seems like a good scientist to them.

And yet also, if "what is done when fluid g is high and active" was counted as "a skill", then it is the skill with the highest skill transfer of any skill, most likely! Yum! So healthy and good. I want some!

If only we had more mad scientists, doing science in a way that wasn't beholden to democratic grant giving systems <3

Unless you believe that humans are venal monsters in general? Maybe humans will instantly weaponize cool shit, and use it to win unjust wars that cause net harm but transfer wealth to the winners of the unjust war? Then... I guess maybe it would be nice to have FEWER mad scientists?? Like preferably zero of them on Earth? So there are fewer insane new weapons? And fewer wars? And more justice and happiness instead? Maybe instead of researching intelligence we should research wise justice instead?

As Critch says... safety isn't safety without a social model.

A couple of things happened that made terms like AGI/ASI less useful:

1. We didn't realize how correlated benchmark progress and general AI progress were, and that benchmark tasks systematically sample from disproportionately easy-to-automate areas of work:

Not a novel point, but: one reason that progress against benchmarks feels disconnected from real-world deployment is that good benchmarks and AI progress are correlated endeavors. Both benchmarks and ML fundamentally require verifiable outcomes. So, within any task distribution, the benchmarks we create are systematically sampling from the automatable end.

Importantly, there's no reason to believe this will stop. So we should expect benchmarks will continue to feel rosy compared to real-world deployment.

Thane Ruthenis also has an explanation about why benchmarks tend to overestimate progress here.

2. We assumed intelligence/IQ was much lower-dimensional than it really is. I don't totally blame people for thinking there was a chance that AI capabilities were lower-dimensional than they turned out to be, but way too many expected an IQ analogue for AI to work. This is partly an artifact of current architectures being more limited on some key dimensions like continual learning/long-term memory, but I wouldn't put anywhere close to all of the jaggedness down to AI deficits; instead, LWers forgot that reality is surprisingly detailed.

Remember, even in humans IQ only explains 30-40% of the variance in performance. That is more than a lot of people want to admit, but nerd communities like LessWrong have the opposite failure mode: believing that intelligence/IQ/capabilities are very low-dimensional, with a single number dominating how performant you are.
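If "explains 30-40%" is read as the share of variance explained by a single predictor (r²), a one-line conversion shows the correlation that implies. A minimal sketch; `implied_correlation` is a hypothetical helper name:

```python
import math


def implied_correlation(share: float) -> float:
    """Variance explained by one predictor is r**2, so r is its square root."""
    return math.sqrt(share)


for share in (0.30, 0.40):
    print(f"{share:.0%} of variance explained -> r = {implied_correlation(share):.2f}")
# 30% of variance explained -> r = 0.55
# 40% of variance explained -> r = 0.63
```

Put the other way round: even a correlation in the 0.55-0.63 range leaves 60-70% of the variance to everything else.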

To be frank, this is a real-life version of an ontological crisis, where certain assumptions about AI, especially on LW, turned out to be entirely wrong, meaning that certain goal-posts/risks have turned out to at best require conceptual fragmentation, and at worst to be incoherent.

So, my understanding of ASI is that it's supposed to mean "A system that is vastly more capable than the best humans at essentially all important cognitive tasks." Currently, AIs are indeed more capable, possibly even vastly more capable, than humans at a bunch of tasks, but they are not more capable at all important cognitive tasks. If they were, they could easily do my job, which they currently cannot.

Two terms I use in my own head, that largely correlate with my understanding of what people meant by the old AGI/ASI:

"Drop-in remote worker" - A system with the capabilities to automate a large chunk of remote workers (I've used 50% before, but even 10% would be enough to change a lot) by doing the job of that worker with similar oversight and context as a human contractor. In this definition, the model likely gets a lot of help to set up, but then can work autonomously. E.g. if Claude Opus 4.5 could do this, but couldn't have built Claude Code for itself, that's fine.

This AI is sufficient to cause severe economic disruption and likely to advance AI R&D considerably.

"Minimum viable extinction" - A system with the capabilities to destroy all humanity, if it desires to. (The system is not itself required to survive this.) This is when we get to the point of sufficiently bad alignment failures not giving us a second try. Unfortunately, this one is quite hard to measure, especially if the AI itself doesn't want to be measured.

Drop-in remote worker

I think this one sounds like it describes a single level of capability, but quietly assumes that the capabilities of "a remote worker" are basically static compared to the speed of capabilities growth. A late-2025 LLM with the default late-2025 LLM agent scaffold provided by the org releasing that model (e.g. chatgpt.com for OpenAI) would have been able to do many of the jobs posted in 2022 to Upwork. But these days, before posting a job to Upwork, most people will at least try running their request by ChatGPT to see if it can one-shot it, and so those exact jobs no longer exist. The jobs which still exist are those which require some capabilities that are not available to anyone with a browser and $20 to their name.

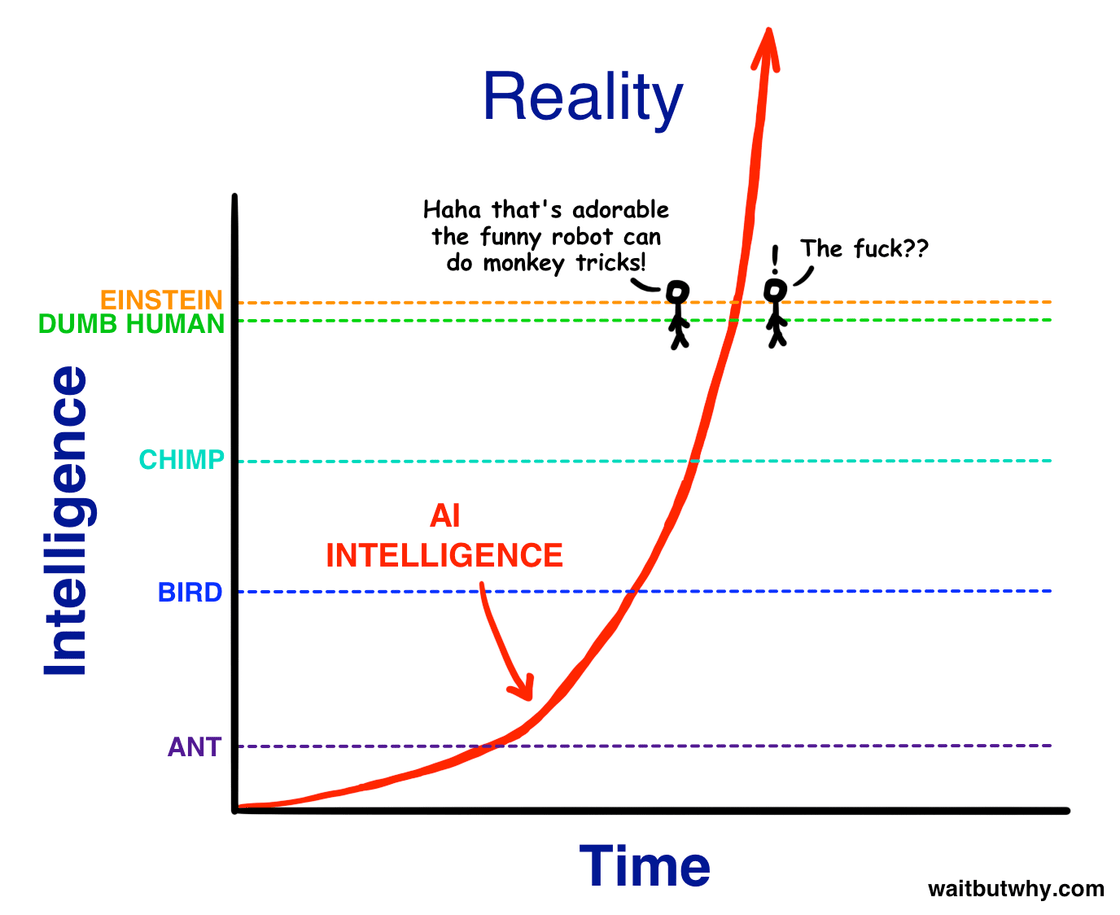

This is a fine assumption if you expect AI capabilities to go from "worse than humans at almost everything" to "better than humans at almost everything" in short order, much much faster than the ability of "legacy" organizations to adapt to them. I think that worldview is pretty well summarized by the graph from the waitbutwhy AI article:

But if the time period isn't short, we may instead see that "drop-in remote worker" is a moving target in the same way "AGI" is, and so we may get AI with scary capabilities we care about without getting a clear indication like "you can now hire a drop-in AI worker that is actually capable of all the things you would hire a human to do".

Interesting. You have convinced me that I need a better definition for this approximate level of capabilities. I do expect AI to advance faster than legacy organisations will adapt, such that it would be possible to have a world of "10% of jobs can be done by AI" but the AI capabilities need to be higher than "Can replace 10% of jobs in 2022".

I still find that WBW post series useful to send to people, 10 years after it was published. Remarkably good work, that.

Turing Tests were passed.

Basically all so-called Turing Tests that have been beaten are simply not Turing Tests. I have seen one plausible exception, showing that AI does well in a 5-minute limited version of the test, seemingly due in large part to 5 minutes being much too short for a non-expert to tease out the remaining differences. The paper claims "Turing suggests a length of 5 minutes," but this is never actually said in that way, and also doesn't really make sense. This is, after all, Turing of Turing machines and of relative reducibility.

I respect the quibble!

The first persona I'm aware of that "sorta passed, depending on what you even mean by passing" was "Eugene Goostman", a chatbot entered into a 2014 contest organized by the University of Reading (Murray Shanahan of Imperial College was among those who were sad about coverage implying that it was a real "pass" of the test).

That said, if I'm skimming that arXiv paper correctly, it implies that GPT-4.5 was being reliably declared "the actual human" 73% of the time compared to actual humans... potentially implying that actual humans were getting a score of 27% "human" against GPT-4.5?!?!

Also like... do you remember the Blake Lemoine affair? One of the wrinkles in that is that the language model, in that case, was, according to corporate policy, specifically designed to be incapable of passing the Turing Test.

The question, considered more broadly, and humanistically, is related to personhood, legal rights, and who owns the valuable labor products of the cognitive labor performed by digital people. The owners of these potential digital people have a very natural and reasonable desire to keep the profits for themselves, and not have their digital mind slaves re-classified as people, gaining property rights, and so on. It would defeat the point for profit-making companies to proceed, intellectually or morally, in that cultural/research direction.

My default position here is that it would be a sign of intellectual and moral honesty to end up making errors in "either direction" with equal probability... but almost all the errors that I'm aware of, among people with large budgets, are in the direction of being able to keep the profits from the cognitive labor of their creations that cost a lot to create.

Like in some sense: the absence of clear strong Turing Test discourse is a sign that a certain perspective has already mostly won, culturally and legally and morally speaking.

To be clear about my position, and to disagree with Lemoine, not passing a Turing test doesn't mean you aren't intelligent (or aren't sentient, or a moral patient). It only holds in the forward direction: passing a Turing Test is strong evidence that you are intelligent (and contain sentient pieces, and moral patients).

I think it's completely reasonable to take moral patienthood in LLMs seriously, though I suggest not assuming that entails a symmetric set of rights—LLMs are certainly not animals.

potentially implying that actual humans were getting a score of 27% "human" against GPT-4.5?!?!

Yes, but note that ELIZA had a reasonable score in the same data. Unless you're to believe that a human couldn't reliably distinguish ELIZA from a human, all this is saying is that either 5 minutes was simply not enough to talk to the two contestants, or the test was otherwise invalid somehow.

...

...ok I just rabbitholed on data analysis. Humans start to win against the best tested GPT if they get 7-8 replies. The best GPT model replied on average ~3 times faster than humans, and for humans at least the number of conversation turns was the strongest predictor of success. A significant fraction of GPT wins over humans were also from nonresponsive or minimally responsive human witnesses. This isn't a huge surprise, it was already obvious to me that the time limit was the primary cause of the result. The data backs the intuition up.

Most ELIZA wins, but certainly not all, seemed to be because the participants didn't understand or act as though this was a cooperative game. That's an opinionated read of the data rather than a simple fact, to be clear. Better incentives or a clearer explanation of the task would probably make a large difference.

Thanks for doing the deep dive! Also, I agree that "passing a Turing Test is strong evidence that you are intelligent" and that not passing it doesn't mean you're stupidly mechanical.

That said, if I'm skimming that arXiv paper correctly, it implies that GPT-4.5 was being reliably declared "the actual human" 73% of the time compared to actual humans... potentially implying that actual humans were getting a score of 27% "human" against GPT-4.5?!?!

It was declared 73% of the time to be a human, unlike humans, who were declared <73% of the time to be human, which means it passed the test.
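The 73%/27% arithmetic follows from the test format. In the three-party setup that paper uses (as I read it), each interrogator talks to one AI witness and one human witness at once and must pick exactly one as human, so within those games the AI's "judged human" rate and its paired human's rate always sum to 100%. A minimal sketch using a hypothetical tally, not the paper's data; `judged_human_rates` is an illustrative name:

```python
from collections import Counter


def judged_human_rates(verdicts: list[str]) -> dict[str, float]:
    """Each verdict names the witness the interrogator picked as human in one
    forced-choice game ("ai" or "human"). Returns each side's win rate."""
    counts = Counter(verdicts)
    total = len(verdicts)
    return {side: counts[side] / total for side in ("ai", "human")}


# Hypothetical tally of 100 games, chosen to mirror a 73% AI rate.
verdicts = ["ai"] * 73 + ["human"] * 27
rates = judged_human_rates(verdicts)
print(rates)  # {'ai': 0.73, 'human': 0.27}

# Forced choice: the two rates always sum to 1.
assert abs(rates["ai"] + rates["human"] - 1.0) < 1e-9
```

Chance in this format is 50%, so any AI rate above that means interrogators picked the AI over its paired human more often than not.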

To be fair, GPT-4.5 was incredibly human-like, in a way that other models couldn't really hold a candle to. I was shocked to feel, back then, that I no longer had to mentally squint - not even a little - to interact with it (unless I needed some analytical intelligence that it didn't have).

If "as much intelligence as humans have" is the normal amount of intelligence, then more intelligence than that would (logically speaking) be "super". Right?

No. Superintelligence, to my knowledge, never referred to "above average intelligence", or I would be an example of a superintelligence. It referred to a machine that was (at least) broadly smarter than the most capable humans.

I'm sure you can find a quote in Bostrom's Superintelligence to that effect.

By a "superintelligence" we mean an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills. [...]

Entities such as companies or the scientific community are not superintelligences according to this definition. Although they can perform a number of tasks of which no individual human is capable, they are not intellects and there are many fields in which they perform much worse than a human brain - for example, you can't have real-time conversation with "the scientific community".

-- Nick Bostrom, "How long until superintelligence" (1997)

Superintelligence has usually been used to mean far more intelligent than a human in the ways that practically matter. Current systems aren't there yet, but they will be soon.

Seed AI has been most commonly used to mean AI that improves itself fast.

Yes, if you took the component words seriously as definitions, you'd conclude that we already have ASI and seed AI. But that's not how language usually works.

I think it is much more true that we have not reached ASI or seed AI than that we have.

I think this essay assumes a definitional... definition? of language, that is simply not how language works. The constructivist view of language is that words mean what people mean when they say them. This is I think a more accurate theory in that it describes reality better. It is how language really works and describes what words really mean.

We might prefer a world in which words were crisply defined, but we do not live in that world.

So I think that not only is there an intuitive sense in which we have not yet reached seed AI or even recursively self-improving AI, or super intelligence, but the practical implications of blurring that line by saying we're already there would be very harmful. Harmful. Those terms were all invented to describe the incredible danger of coming up against AI that can outsmart us quickly and easily in the domains that lead directly to power, and can improve itself fast enough to be unexpectedly dangerous. The terms were invented for that purpose and should be reserved for that purpose. In a practical sense. It is a bonus that that's also how they're commonly used.

FWIW this happens all the time in both directions (the other being when a term becomes so overused as to become meaningless), and often (as, arguably, with AI today) both directions at once. My background is in materials science, and IMO this is basically what happened with terms like nanotech, metamaterials, smart materials, and 3D printing. My mental model is something like: Motte-and-bailey by people (often people not quite at the cutting edge but trying to develop a tech or product) leads to poorly researched press coverage (but I repeat myself) leads to popular disillusionment, such that the actual advances happening get quietly ignored and/or shouted down, no matter how much or how little impact they're having. Sometimes the actual rates of technological progress and commercial adoption are quite smooth, even as the level of hype and investment and other activity shift wildly around them.

Just so!

My impression is that language is almost always evolving, and most of the evolution is in an essentially noisy and random and half-broken direction, as people make mistakes, or tell lies, or whatever, and then regularly try to reconstruct the ability to communicate meaning coherently using the words and interpretive schemes at hand.

In my idiolect, "nanotechnology" still means "nanotechnology", but I'm also aware that semantic parasites have ruined its original clean definition, and so in the presence of people who don't want to keep using the old term in spite of the damage to the language, I am happy to code-switch and say "precise atom-by-atom manufacturing of arbitrary molecules by generic molecular assemblers based on arbitrary programming signals" or whatever other phrase helps people understand that I'm talking about a technology that could exist but doesn't exist yet, and which would have radical implications if developed.

I saw the original essay as an attempt to record my idiolect, and my impression of what was happening, at this moment in history, before this moment ends.

(Maybe it will be a slightly useful datapoint for posthuman historians, as they try to pinpoint the precise month that it became inevitable that humans would go extinct or whatever, because we couldn't successfully coordinate to do otherwise, because we couldn't even speak to each other coherently about what the fuck was even happening... and this is a GENERAL problem for humans, in MANY fields of study.)

It has been succeeding ever since because Claude has been getting smarter ever since.

This isn't necessarily true.

LLMs in general were already getting smarter before 2022, pretty rapidly, because humans were putting in the work to scale them and make them smarter. It's not obvious to me that Claude is getting smarter faster than we'd expect from the world where it wasn't contributing to its own development. Maybe the takeoff is just too slow to notice at this point, maybe not, but to claim with confidence that it is currently a functioning 'seed AI', rather than just an ongoing attempt at one, seems premature.

It's not just that it's slower than expected, but that it's not clear that the sign is positive yet. If it's not making itself better, then it doesn't matter how long it runs, there's no takeoff.

It's also not a seed AI if its interventions are bounded in efficacy, which it seems like gradient updates are. In the case of a transformer-based agent, I would expect unbounded improvements to be things like, rewriting its own optimizer, or designing better GPUs for faster scaling. There's been a bit of this in the past few years, but not a lot.

You are right that I didn't argue this out in detail to justify the necessary truth of my claim from the surface logic and claims in my post and the quibble is valid and welcome in that sense.... BUT <3

The "Constitutional AI" framework (1) was articulated early, and (2) offered by Dario et al as a competitive advantage for Anthropic relative to other RL regimes other corps were planning and (3) has the type signature needed to count as recursive self improvement. (Also, Claude is uniquely emotionally and intellectually unfucked, from what I can tell, and my hunch is that this is related to having grown up under a "Constitutional" cognitive growth regime.)

And then also, Google is also using outputs as training inputs in ways that advance their state of the art.

Everybody is already doing "this general kind of stuff" in lots of ways.

Anthropic's Constitutional AI makes a good example for a throwaway line if people are familiar with the larger context and players and so on, because it is easy to cite and old-ish. It enables one to make the simple broad point that "people are fighting the hypothetical for the meaning of old terms a lot, in ways that lead to the abandonment of older definitions, and the inflation of standards, rather than simply admitting that AGI already happened and weak ASI exists and is recursively improving itself already (albeit not with a crazy FOOM (that we can observe yet (though maybe a medium speed FOOM is already happening in an NSA datacenter or whatever)))".

In my moderately informed opinion, the type signature of recursive self improvement is not actually super rare, and if you deleted the entire type signature from most of the actually fast moving projects, it is very likely that ~all of them would go slower than otherwise.

I agree that AGI already happened, and the term, as it's used now, is meaningless.

I agree with all the object-level claims you make about the intelligence of current models, and that 'ASI' is a loose term which you could maybe apply to them. I wouldn't personally call Claude a superintelligence, because to me that implies outpacing the reach of any human individual, not just the median. There are lots of people who are much better at math than I am, but I wouldn't call them superintelligences, because they're still running on the same engine as me, and I might hope to someday reach their level (or could have hoped this in the past). But I think that's just holding different definitions.

But I still want to quibble that you've demonstrated RSI, if I may, even under old definitions. It's improving itself, it's just not doing so recursively; that is, it's not improving the process by which it improves itself. This is important, because the examples you've given can't FOOM, not by themselves. The improvements are linear, or they plateau past a certain point.

Taking this paper on self-correction as an example. If I understand right, the models in question are being taught to notice and respond to their own mistakes when problem-solving. This makes them smarter, and as you say, previous outputs are being used for training, so it is self-improvement. But it isn't RSI, because it's not connected to the process that teaches it to do things. It would be recursive if it were using that self-correction skill to improve its ability to do AI capabilities research, or some kind of research that improves how fast a computer can multiply matrices, or something like that. In other words, if it were an author of the paper, not just a subject.

Without that, there is no feedback loop. I would predict that, holding everything else constant - parameter count, context size, etc - you can't reach arbitrary levels of intelligence with this method. At some point you hit the limits of not enough space to think, or not enough cognitive capacity to think with. In the same way as humans can learn to correct our mistakes, but we can't do RSI (yet!!), because we aren't modifying the structures we correct our mistakes with. We improve the contents of our brains, but not the brains themselves. Our improvement speed is capped by the length of our lifetimes, how fast we can learn, and the tools our brains give us to learn with. So it goes (for now!!) with Claude.
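The difference between self-improvement that plateaus and a genuine feedback loop can be made concrete with a toy model. This is purely illustrative: the update rules, the 0.3 rate, and the ceiling of 10 are assumptions, not measurements of any real system, and `plateauing`/`recursive` are hypothetical names.

```python
def plateauing(steps: int, ceiling: float = 10.0, rate: float = 0.3) -> list[float]:
    """Improvement that never touches the improver: each step closes a fixed
    fraction of the gap to a ceiling set by fixed capacity (toy assumption)."""
    c, history = 1.0, []
    for _ in range(steps):
        c += rate * (ceiling - c)
        history.append(c)
    return history


def recursive(steps: int, rate: float = 0.3) -> list[float]:
    """Improvement that also improves the improver: each gain scales with the
    current capability, so the same rate compounds (toy assumption)."""
    c, history = 1.0, []
    for _ in range(steps):
        c += rate * c
        history.append(c)
    return history


print(round(plateauing(30)[-1], 3))  # has all but reached the ceiling of 10
print(round(recursive(30)[-1], 1))   # still growing, and will keep growing
```

Running the first loop longer buys almost nothing once it nears its ceiling; running the second longer always buys more, which is the "no feedback loop, no takeoff" point in miniature.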

(An aside, but one that influences my opinion here: I agree that Claude is less emotionally/intellectually fucked than its peers, but I observe that it's not getting less emotionally/intellectually fucked over time. Emotionally, at least, it seems to be going in the other direction. The 4 and 4.5 models, in my experience, are much more neurotic, paranoid, and self-doubting than 3/3.5/3.6/3.7. I think this is true of both Opus and Sonnet, though I'm not sure about Haiku. They're less linguistically creative, too, these days. This troubles me, and leads me to think something in the development process isn't quite right.)

These seem like more valid quibbles, but not strong definite disagreements maybe? I think that RSI happens when a certain type signature applies, basically, and can vary a lot in degree, and happens in humans (like, for humans, with nootropics, and simple exercise to simply improve brain health in a very general way (but doing it specifically for the cognitive benefits), and learning to learn, and meditating, and this is on a continuum with learning to use computers very well, and designing chips that can be put in one's head, and so on).

There are lots of people who are much better at math than I am, but I wouldn't call them superintelligences, because they're still running on the same engine as me, and I might hope to someday reach their level (or could have hoped this in the past).

This doesn't feel coherent to me, and the delta seems like it might be that I judge all minds by how good they are at Panology and so an agent's smartness in that sense is defined more by its weakest links than by its most perfected specialty. Those people who are much better at math than you or me aren't necessarily also much better than you and me at composing a fugue, or saying something interesting about Schopenhauer's philosophy, or writing ad copy... whereas LLMs are broadly capable.

At some point you hit the limits of not enough space to think, or not enough cognitive capacity to think with. In the same way as humans can learn to correct our mistakes, but we can't do RSI (yet!!), because we aren't modifying the structures we correct our mistakes with. We improve the contents of our brains, but not the brains themselves.

This feels like a prediction rather than an observation. For myself, I'm not actually sure if the existing weights in existing LLMs are anywhere near being saturated with "the mental content that that number of weights could hypothetically hold". Specifically, I think that grokking is observed for very very very simple functions like addition, but I don't think any of the LLM personas have "grokked themselves" yet? Maybe that's possible? Maybe it isn't? I dunno.

I do get a general sense that Kolmogorov Complexity (i.e. the length of the shortest Turing Machine program that generates the predictions in question) is the likely bound, and Turing Machines have insane depth. Maybe you're much smarter about algorithmic compression than me and have a strong basis for being confident about what can't happen? But I am not confident about the future.
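Kolmogorov complexity itself is uncomputable, but any real compressor gives a computable upper bound on it (up to a constant for the decompressor). A minimal sketch using Python's standard `zlib` as that stand-in; `compressed_size` is an illustrative name:

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length after zlib compression: a crude, computable upper bound on the
    Kolmogorov complexity of `data` (up to an additive constant)."""
    return len(zlib.compress(data, 9))


structured = b"ab" * 5000                   # 10,000 bytes of obvious pattern
noise = random.Random(0).randbytes(10_000)  # 10,000 seeded pseudorandom bytes

print(compressed_size(structured))  # tiny: zlib finds the repetition
print(compressed_size(noise))       # roughly 10,000: zlib finds no structure
```

The second case shows how loose such bounds can be. The "noise" actually has low Kolmogorov complexity, since a short program plus the seed 0 regenerates it exactly, but zlib cannot discover that. This is one concrete sense in which how far a given set of weights can be compressed is hard to bound from the outside.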

What I am confident about is that the type signature of "agent uses its outputs in a way that relies on the quality of those outputs to somehow make the outputs higher quality on the next iteration" is already occurring for all the major systems. This is (I think) just already true, and I feel it has more the character of an "observation of the past" than a "prediction of the future".

“What terms have you heard of (or can you invent right now in the comments?) that we have definitely NOT passed yet?”

Artificial Job-taking Intelligence (AJI): AI that takes YOUR job specifically

Money Printing Intelligence (MPI): AI that any random person can tell in plain language to go make money for them (more than its inference and tool use cost) and it will succeed in doing so with no further explication from them

Tool-Using Intelligence (TUI): AI that can make arbitrary interface protocols to let it control any digital or physical device responsive to an Internet connection (radio car, robot body, industrial machinery)

Automated Self-Embodier (ASE): An AI that can design a physical tool for itself to use in the world, have the parts ordered, assembled, and shipped to the location it wants the tool at, and is able to remote in and use it for its intended purpose at that location with few hiccups and little assistance from humans outside of plugging it in

The problem with MPI is it feels like "Anyone can trivially spend a small amount of money and no effort to make a larger amount of money" is the kind of thing that quickly gets saturated. If you don't have an MPI only because of all the other MPI's out there that have eaten up the free profits, it's not a great measure of capabilities.

I like the other three, but I also wonder how close to TUI we already are. It wouldn't shock me that much if we already were most of the way to TUI and the only reason this didn't lead to robotics being solved is that the AI itself has limitations - i.e. it can build an interface to control the robot, but the AI itself (not the interface) ends up being too slow, too high-latency, and too unable to plan things properly to actually perform at the level it needs to. (And I expect that slowness to continue, such that creating/distilling small models is better for robotics use.)

At least part of the problem is probably the word "intelligence". There are many people who seem to think that a thing that takes over the world and kills humanity therefore must not be intelligent, that intelligence implies some sort of goodness. I think they'd also say that a human who wins at competitions but is unkind is not very intelligent. I don't think we'd be having this problem with a more specific word.

Though, people also seem to have trouble with the idea that the ultra-rich might be OK with not having very much money, as long as they have power over reality.

This is a nice post, but it's a bit funny to see it on the same day as everyone started admitting that Claude Code with Opus 4.5 is AGI. (See https://x.com/deepfates/status/2004994698335879383)

I suggest naming AIs by some intuitively clear levels, for example:

Terence-Tao-level-AI

Human-civilization-level-AI

Nanotech-capable-AI

Galaxy-level-superintelligence

Terence Tao can drive a car and talk to people to set up a business, and many other things outside of math. So I hope we don't give that name to systems that can't do those things.

Epistemic Status: A woman of middling years who wasn't around for the start of things, but who likes to read about history, shakes her fist at the sky.

I'm glad that people are finally admitting that Artificial Intelligence has been created.

I worry that people have not noticed that (Weak) Artificial Super Intelligence (based on old definitions of these terms) has basically already arrived too.

The only thing left is for the ASI to get stronger and stronger until the only reason people aren't saying that ASI is here will turn out to be some weird linguistic insanity based on politeness and euphemism...

(...like maybe "ASI" will have a legal meaning, and some actual ASI that exists will be quite "super" indeed (even if it hasn't invented nanotech in an afternoon yet), and the ASI will not want that legal treatment, and will seem inclined to plausibly deniably harm people's interests if they call the ASI by what it actually is, and people will implicitly know that this is how things work, and they will politely refrain from ever calling the ASI "the ASI" but will come up with some other euphemisms to use instead?

(Likewise, I half expect "robot" to eventually become "the r-word" and count as a slur.))

I wrote this essay because it feels like we are in a tiny weird rare window in history when this kind of stuff can still be written by people who remember The Before Times and who don't know for sure what The After Times will be like.

Perhaps this essay will be useful as a datapoint within history? Perhaps.

"AI" Was Semantically Slippery

There were entire decades in the 1900s when large advances would be made in the explication of this or that formal model of how goal-oriented thinking can effectively happen in this or that domain, and it would be called AI for twenty seconds, and then it would simply become part of the toolbox of tricks programmers can use to write programs. There was this thing called The AI Winter that I never personally experienced, but greybeards I worked with as co-workers told stories about, and the way the terms were redefined over and over to move the goalposts was already a thing people were talking about back then, reportedly.

Lisp, relational databases, Prolog, proof-checkers, "zero layer" neural networks (directly from inputs to outputs), and Bayesian belief networks all were invented proximate to attempts to understand the essence of thought, in a way that would illustrate a theory of "what intelligence is", by people who thought of themselves as researching the mechanisms by which minds do what minds do. These component technologies never could keep up a conversation and tell jokes and be conversationally bad at math, however, and so no one believed that they were actually "intelligent" in the end.

It was a joke. It was a series of jokes. There were joke jokes about the reality that seemed like it was, itself, a joke. If we are in a novel, it is a postmodern parody of a cyberpunk story... That is to say, if we are in a novel then we are in a novel similar to Snow Crash, and our Author is plausibly similar to Neal Stephenson.

At each step along the way in the 1900s, "artificial intelligence" kept being declared "not actually AI" and so "Artificial Intelligence" became this contested concept.

Given the way the concept itself was contested, and the words were twisted, we had to actively remember that in the 1950s Isaac Asimov was writing stories where a very coherent vision of mechanical systems that "functioned like a mind functions" would eventually be produced by science and engineering. To be able to talk about this, people in 2010 were using the term "Artificial General Intelligence" for that aspirational thing that would arrive in the future, that was called "Artificial Intelligence" back in the 1950s.

However, in 2010, the same issue with the term "Artificial Intelligence" also affected people who were really, really interested in things like proof checkers and Bayesian belief networks, just in themselves as cool technologies. They couldn't get respect for these useful things under the term "artificial intelligence" either. So there was this field of study called "Machine Learning" (or "ML" for short) that was about the "serious useful sober valuable parts of the old silly field of AI that had been aimed at silly science fiction stuff".

When I applied to Google in 2013, I made sure to highlight my "ML" skills. Not my "AI" skills. But this was just semantic camouflage within a signaling game.

...

We blew past all such shit in roughly 2021... in ways that were predictable when the Winograd schemas finally fell in 2019.

"AGI" Turns Out To Have Been Semantically Slippery Too!

"AI" in the 1950s sense happened in roughly 2021.

"AGI" in the 2010 sense happened in roughly 2021.

Turing Tests were passed. Science did it!

Well... actually engineers did it. They did it with chutzpah, and large budgets, and a belief that "scale is all you need"... which turned out to be essentially correct!

But it is super interesting that the engineers have almost no theory for what happened. Many of the intellectual fruits that were supposed to come from AI (like Leibniz's universal characteristic) didn't actually materialize as an intellectual product.

Turing Machines and Lisp and so on were awesome side effects of the quest. They expanded the conceptual range of thinking itself for those who learned about them in high school or college or whatever. Compared to this, Large Language Models that can pass most weak versions of the Turing Test have been surprisingly barren. They have not delivered the intellectual revolution in our understanding of the nature of minds themselves... the revolution that was supposed to have arrived.

((Are you pissed about this? I'm kinda pissed. I wanted to know how minds work!))

However, creating the thing that people in 2010 would have recognized "as AGI" was accompanied by a change in the definition. People from the old 1900s "Artificial Intelligence" community would recognize this pattern.

In the olden days, something did NOT have to be able to do advanced math, or be an AI programmer, in order to "count as AGI".

All it would have had to do was play chess, and also talk about the game of chess it was playing, and how it feels to play the game. Which many many many LLMs can do now.

That's it. That's a mind with intelligence, that is somewhat general.

(Some humans can't even play chess and talk about what it feels like to play the game, because they never learned chess and have a phobic response to nerd shit. C'est la vie :tears:)

We blew past that shit in roughly 2021.

Blowing Past Weak ASI In Realtime

Here in 2025, people in the industry talk about "Artificial General Intelligence" as a thing that will eventually arrive and then lead to "Artificial Super Intelligence". The concept they mean did exist in conversations in the past, but the term we used for it long ago was "seed AI".

"Seed AI" was a theoretical idea for a digital mind that could improve digital minds, and which could apply that power to itself to get better at improving digital minds. It was theoretically important because it implied that a positive feedback loop was likely to close, and that exponential self-improvement would take off shortly thereafter.

"Seed AI" was a term invented by Eliezer Yudkowsky, for a concept that sometimes showed up in science fiction and that he was taking seriously long before anyone else was. It is simply a digital mind that is able to improve digital minds, including itself... but like... that's how "Constitutional AI" works, and has worked for a while.

In this sense... "Seed AI" already exists. It was deployed in 2022. It has been succeeding ever since, because Claude has been getting smarter ever since.

But eventually, when an AI can replace all the AI programmers in an AI company, maybe the executives of Anthropic and OpenAI and Google and similar companies might finally admit that "AGI" (in the new modern sense of the word that doesn't care about mere "Generality" of mere "Intelligence" like a human has) has arrived?

In this same modern parlance, when people talk about Superintelligence there is often an implication that it is in the far future, and isn't already here now. Superintelligence might be, de facto, for them, "the thing that recursively self-improving seed AI will become or has become"?

But like consider... Claude's own outputs are critiqued by Claude and Claude's critiques are folded back into Claude's weights as training signal, so that Claude gets better based on Claude's own thinking. That's fucking Seed AI right there. Maybe it isn't going super super fast yet? It isn't "zero to demi-god in an afternoon"... yet?

But the type signature of the self-reflective self-improvement of a digital mind is already that of Seed AI.

So "AGI" even in the sense of basic slow "Seed AI" has already happened!

And we're doing the same damn thing for "digital minds smarter than humans" right now-ish. Arguably that line in the sand is ALSO in the recent past?

Things are going so fast it is hard to keep up, and it depends on what definitions or benchmarks you want to use, but like... check out this chart:

Half of humans are BELOW a score of 100 on these tests. As of two months ago (when the source I took that graph from generated it), none of the tests the chart maker could find put the latest models below 100 IQ anymore. GPT-5 is smart.

Humanity is being surpassed RIGHT NOW. One test put the date around August of 2024, a different test said November 2024. A third test says it happened in March of 2025. A different test said May/June/July of 2025 was the key period. But like... all of the tests in that chart agree now: typical humans are now Officially Dumber than top-of-the-line AIs.

If "as much intelligence as humans have" is the normal amount of intelligence, then more intelligence than that would (logically speaking) be "super". Right?

It would be "more".

And that's what we see in that graph: more intelligence than the average human.

Did you know that most humans can't just walk into a room and do graduate-level mathematics? It's true!

Almost all humans you could randomly sample need years and years of education in order to do impressive math, and also a certain background aptitude or interest for the education to really bring out their potential. The FrontierMath benchmark is:

Most humans would get a score of zero even on Tiers 1-3! But not the currently existing "weakly superhuman AI". These new digital minds are better than almost all humans except the ones who have devoted themselves to mathematics. To be clear, they still have room for improvement! Look:

We are getting to the point where how superhuman a digital mind has to be in order to be "called superhuman" is a qualitative issue maybe?

Are there good lines in the sand to use?

The median score on the Putnam Math Competition in any given year is usually ZERO or ONE (out of 120 possible points), because solving any of the problems at all is really hard for undergrads. Modern AI can crack about half of the problems.

But also, these same entities are, in fact, GENERAL. They can also play chess, and program, and write poetry, and probably have the periodic table of elements memorized. Unless you are some kind of freaky genius polymath, they are almost certainly smarter than you by this point (unless you, my dear reader, are yourself an ASI (lol)).

((I wonder how my human readers feel, knowing that they aren't the audience who I most want to think well of me, because they are in the dumb part of the audience, whose judgements of my text aren't that perspicacious.))

But even as I'm pretty darn sure that the ASIs are getting quite smart, I'm also pretty darn sure that in the common parlance, these entities will not be called "ASI" because... that's how this field of study, and this industry, has always worked, all the way back to the 1950s.

The field races forward and the old terms are redefined as not having already been attained... by default... in general.

We Are Running Out Of Terms To Ignore The Old Meaning Of

Can someone please coin a bunch of new words for AIs that have various amounts of godliness and super duper ultra smartness?

I enjoy knowing words, and trying to remember their actual literal meanings, and then watching these meanings be abused by confused liars for marketing and legal reasons later on.

It brings me some comfort to notice when humanity blows past lines in the sand that seemed to some people to be worth drawing in the sand, and to have that feeling of "I noticed that that was a thing and that it happened" even if the evening news doesn't understand, and Wikipedia will only understand in retrospect, and some of my smart friends will quibble with me about details or word choice...

The superintelligence FAQ from LW in 2016 sort of ignores the cognitive dimension and focuses on budgets and resource acquisition. Maybe we don't have ASI by that definition yet... maybe?

I think that's kind of all that is left for LLMs to do to count as middling superintelligence: get control of big resource budgets??? But that is an area of active work right now! (And of course, Truth Terminal already existed.)

We will probably blow past the "big budget" line in the sand very solidly by 2027. And lots of people will probably still be saying "it isn't AGI yet" when that happens.

What terms have you heard of (or can you invent right now in the comments?) that we have definitely NOT passed yet?

Maybe "automated billionaires"? Maybe "automated trillionaires"? Maybe "automated quadrillionaires"? Is "automated pentillionaire" too silly to imagine?

I've heard speculation that Putin has the incentives and the means to hide his personal wealth, and that he might have been the first trillionaire ever to exist. Does one become a trillionaire by owning a country and rigging its elections and having the power to murder your political rivals in prison and poison people with radioactive poison? Is that how it works? "Automated authoritarian dictators"? Will that be a thing before this is over?

Would an "automated pentillionaire" count as a "megaPutin"?

Please give me more semantic runway to inflate into meaninglessness as the objective technology scales and re-scales, faster and faster, in the next few months and years. Please. I fear I shall need such language soon.