Two weeks ago some senators asked OpenAI questions about safety. A few days ago OpenAI responded. Its reply is frustrating.

OpenAI's letter ignores all of the important questions[1] and instead brags about somewhat-related "safety" stuff. Some of this shows chutzpah — the senators, aware of tricks like letting ex-employees nominally keep their equity but excluding them from tender events, ask

Can you further commit to removing any other provisions from employment agreements that could be used to penalize employees who publicly raise concerns about company practices, such as the ability to prevent employees from selling their equity in private “tender offer” events?

and OpenAI's reply just repeats the we-don't-cancel-equity thing:

OpenAI has never canceled a current or former employee’s vested equity. The May and July communications to current and former employees referred to above confirmed that OpenAI would not cancel vested equity, regardless of any agreements, including non-disparagement agreements, that current and former employees may or may not have signed, and we have updated our relevant documents accordingly.

!![2]

One thing in OpenAI's letter is object-level notable: ...

Clarification on the Superalignment commitment: OpenAI said:

We are dedicating 20% of the compute we’ve secured to date over the next four years to solving the problem of superintelligence alignment. Our chief basic research bet is our new Superalignment team, but getting this right is critical to achieve our mission and we expect many teams to contribute, from developing new methods to scaling them up to deployment.

The commitment wasn't compute for the Superalignment team—it was compute for superintelligence alignment. (As opposed to, in my view, work by the posttraining team and near-term-focused work by the safety systems team and preparedness team.) Regardless, OpenAI is not at all transparent about this, and they violated the spirit of the commitment by denying Superalignment compute or a plan for when they'd get compute, even if the literal commitment doesn't require them to give any compute to safety until 2027.

Also, they failed to provide the promised fraction of compute to the Superalignment team (and not because it was needed for non-Superalignment safety stuff).

iiuc, xAI claims Grok 4 is SOTA and that's plausibly true, but xAI didn't do any dangerous capability evals, doesn't have a safety plan (their draft Risk Management Framework has unusually poor details relative to other companies' similar policies and isn't a real safety plan, and it said "We plan to release an updated version of this policy within three months" but it was published on Feb 10, over five months ago), and has done nothing else on x-risk.

That's bad. I write very little criticism of xAI (and Meta) because there's much less to write about than OpenAI, Anthropic, and Google DeepMind — but that's because xAI doesn't do things for me to write about, which is downstream of it being worse! So this is a reminder that xAI is doing nothing on safety afaict and that's bad/shameful/blameworthy.[1]

[1] This does not mean safety people should refuse to work at xAI. On the contrary, I think it's great to work on safety at companies that are very bad on safety and likely to be among the first to develop very powerful AI, especially for certain kinds of people. Obviously this isn't always true and this story failed for many OpenAI safety staff; I don't want to argue about this now.

Grok 4 is not just plausibly SOTA: the opening slide of its livestream presentation (at 2:29 in the video) slightly ambiguously suggests that Grok 4 used as much RLVR training as it had pretraining, which is itself at the frontier level (100K H100s, plausibly about 3e26 FLOPs). This amount of RLVR scaling was never claimed before (it's not being claimed very clearly here either, but it is what the literal interpretation of the slide says; also, the implied compute parity is almost certainly in terms of GPU-time, not FLOPs).

Thus it's plausibly a substantially new kind of model, not just a well-known kind of model with SOTA capabilities, and so it could be unusually impactful to study its safety properties.

Another takeaway from the livestream is the following bit of AI risk attitude Musk shared (at 14:29):

It's somewhat unnerving to have intelligence created that is far greater than our own. And it'll be bad or good for humanity. I think it'll be good, most likely it'll be good. But I've somewhat reconciled myself to the fact that even if it wasn't gonna be good, I'd at least like to be alive to see it happen.

RLVR involves decoding (generating) long sequences of 10K-50K tokens, so its compute utilization is much worse than pretraining, especially on H100/H200 if the whole model doesn't fit in one node (scale-up world). The usual distinction in input/output token prices reflects this, since processing of input tokens (prefill) is algorithmically closer to pretraining, while processing of output tokens (decoding) is closer to RLVR.

The 1:5 ratio in API prices for input and output tokens is somewhat common (it's this way for Grok 3 and Grok 4), and it might reflect the ratio in compute utilization, since the API provider pays for GPU-time rather than the actually utilized compute. So if Grok 4 used the same total GPU-time for RLVR as it used for pretraining (such as 3 months on 100K H100s), it might've used 5 times fewer FLOPs in the process. This is what I meant by "compute parity is in terms of GPU-time, not FLOPs" in the comment above.
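To make the GPU-time vs. FLOPs distinction concrete, here is a rough back-of-the-envelope sketch. The 100K H100s, the 3 months, and the 1:5 ratio come from the comment above; the per-GPU peak throughput (about 1e15 dense BF16 FLOP/s) and the 40% pretraining utilization are my own illustrative assumptions, chosen so that the pretraining figure lands near the "plausibly about 3e26 FLOPs" estimate. Treat the outputs as order-of-magnitude guesses, not measurements.

```python
SECONDS_PER_DAY = 86_400


def training_flops(num_gpus, peak_flops_per_gpu, utilization, days):
    """Utilized FLOPs for a run occupying num_gpus for the given number of days."""
    return num_gpus * peak_flops_per_gpu * utilization * days * SECONDS_PER_DAY


NUM_GPUS = 100_000           # from the comment: 100K H100s
DAYS = 90                    # from the comment: roughly 3 months
H100_PEAK_FLOPS = 1e15       # assumption: approximate dense BF16 peak per H100
PRETRAIN_UTILIZATION = 0.4   # assumption: illustrative pretraining utilization
RLVR_RELATIVE_UTIL = 1 / 5   # assumption: mirrors the 1:5 input/output price ratio

pretrain_flops = training_flops(NUM_GPUS, H100_PEAK_FLOPS, PRETRAIN_UTILIZATION, DAYS)
rlvr_flops = training_flops(
    NUM_GPUS, H100_PEAK_FLOPS, PRETRAIN_UTILIZATION * RLVR_RELATIVE_UTIL, DAYS
)

print(f"pretraining: {pretrain_flops:.1e} FLOPs")
print(f"RLVR at equal GPU-time: {rlvr_flops:.1e} FLOPs")
print(f"ratio: {pretrain_flops / rlvr_flops:.1f}x")
```

Under these assumptions, equal GPU-time corresponds to roughly 3e26 FLOPs of pretraining but only about 6e25 FLOPs of RLVR. The 5x gap is just the assumed utilization ratio, so it moves directly with that guess.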

GB200 NVL72 (13TB HBM) will be improving utilization during RLVR for large models that don't fit in H200 NVL8 (1.1TB) or B200 NVL8 (1.4TB) nodes with room to spare for KV cache, which is likely all of the 2025 frontier models. So this opens the possibility of both doing a lot of RLVR in reasonable time for even larger models (such as compute optimal models at 5e26 FLOPs), and also for using longer reasoning traces than the current 10K-50K tokens.

FWIW, I think the key question is to understand the regulatory demands that xAI is making. It's not like the RSPs or safety evaluations will really tell anyone that much new. Indeed, it seems very sad to evaluate the quality of a frontier company's safety work on the basis of the pretty fake-seeming RSPs and Risk Management Frameworks that other companies have created, which seem pretty powerless. Clearly if one was thinking from first principles about what a responsible company should do, those are not the relevant dimensions.

I don't know what the current lobbying and advocacy efforts of xAI are, but if they are absent then it seems to me like they are messing up less than e.g. OpenAI, and if they are calling for real and weighty regulations (as at least Elon has done in the past, though he seems to have changed his tune recently), then that seems like it would matter more.

Edit: To be clear, a thing I do think really matters is keeping your commitments, even if they committed you to doing things I don't think are super important. So on this dimension, xAI does seem like it messed up pretty badly, given this:

"We plan to release an updated version of this policy within three months" but it was published on Feb 10, over five months ago"

I disagree that this is the "key question." I think most of a frontier company's effect on P(doom) is the quality of its preparation for safety when models are dangerous, not its effect on regulation. I'm surprised if you think that variance in regulatory outcomes is not just more important than variance in what-a-company-does outcomes but also sufficiently tractable for the marginal company that it's the key question.

I share your pessimism about RSPs and evals, but I think they're informative in various ways. E.g.:

- If a company says it thinks a model is safe on the basis of eval results, but those evals are terrible or are interpreted incorrectly, that's a bad sign.

- What an RSP says about how the company plans to respond to misuse risks gives you some evidence about whether it's thinking at all seriously about safety — does it say "we will implement mitigations to reduce our score on bio evals to safe levels" or "we will implement mitigations and then assess how robust they are"?

- What an RSP says about how the company plans to respond to risks from misalignment gives you some evidence about that — do they not mention misalignment, or not mention anything they could do about it, or say th

I'm surprised if you think that variance in regulatory outcomes is not just more important than variance in what-a-company-does outcomes but also sufficiently tractable for the marginal company that it's the key question.

Huh, I am not sure what you mean. I am surprised if you think that I think that marginal frontier-lab safety research is making progress on the hard parts of the alignment problem. I've been pretty open that I think valuable progress on that dimension has been close to zero.

This doesn't mean actions of an AI company do not matter, but I do think that no actions that seem at all plausible for any current AI company to take have really any chance of making it so that it's non-catastrophic for them to develop and deploy systems much smarter than humans. So the key dimension is how much the actions of the labs are doing things that might prevent smarter than human systems from being deployed in the near future.

I think there are roughly two dimensions of variance where I do think AI lab behavior has a big effect on that:

- Do they advocate for reasonable regulatory action and speak openly about the catastrophic risk from AI

- Elon, of the people at leading l

I'm worried about risk from current models but because it's a bad sign about noticing risks when warning signs appear, being honest about risk/safety even when it makes you look bad, etc.

I agree that this is the key dimension, but I don't currently think RSPs are a great vehicle for that. Indeed, looking at the regulatory advocacy of a company seems like a much better indicator, since I expect that to have a bigger effect on the conversation about risk/safety than the RSP and eval results (though it's not overwhelmingly clear to me).

And again, many RSPs and eval results seem to me to be active propaganda, and so are harmful on this dimension, and it's better to do nothing than to be harmful in this way (though I agree that if xAI said they would do a thing and then didn't, then that is quite bad).

I guess your belief "no actions that seem at all plausible for any current AI company to take have really any chance of making it so that it's non-catastrophic for them to develop and deploy systems much smarter than humans" is a crux; I disagree, and so I care about marginal differences in risk-preparedness.

Makes sense. I am not overwhelmingly confident there isn't something control...

Update, five days later: OpenAI published the GPT-4o system card, with most of what I wanted (but kinda light on details on PF evals).

OpenAI Preparedness scorecard

Context:

- OpenAI's Preparedness Framework says OpenAI will maintain a public scorecard showing their current capability level (they call it "risk level"), in each risk category they track, before and after mitigations.

- When OpenAI released GPT-4o, it said "GPT-4o does not score above Medium risk in any of these categories" but didn't break down risk level by category.

- (I've remarked on this repeatedly. I've also remarked that the ambiguity suggests that OpenAI didn't actually decide whether 4o was Low or Medium in some categories, but this isn't load-bearing for the "OpenAI is not following its plan" proposition.)

News: a week ago,[1] a "Risk Scorecard" section appeared near the bottom of the 4o page. It says:

...Updated May 8, 2024

As part of our Preparedness Framework, we conduct regular evaluations and update scorecards for our models. Only models with a post-mitigation score of “medium” or below are deployed. The overall risk level for a model is determined by the highest risk level in any category. Currently, GPT-4o is asses

OpenAI reportedly rushed the GPT-4o evals. This article makes it sound like the problem is not having enough time to test the final model. I don't think that's necessarily a huge deal — if you tested a recent version of the model and your tests have a large enough safety buffer, it's OK to not test the final model at all.

But there are several apparent issues with the application of the Preparedness Framework (PF) for GPT-4o (not from the article):

- They didn't publish their scorecard

- Despite the PF saying they would

- They instead said "GPT-4o does not score above Medium risk in any of these categories." (Maybe they didn't actually decide whether it's Low or Medium in some categories!)

- They didn't publish their evals

- Despite the PF strongly suggesting they would

- Despite committing to in the White House voluntary commitments

- While rushing testing of the final model would be OK in some circumstances, OpenAI's PF is supposed to ensure safety by testing the final model before deployment. (This contrasts with Anthropic's RSP, which is supposed to ensure safety with its "safety buffer" between evaluations and doesn't require testing the final model.) So OpenAI committed to testing the final m

Woah. OpenAI antiwhistleblowing news seems substantially more obviously-bad than the nondisparagement-concealed-by-nondisclosure stuff. If page 4 and the "threatened employees with criminal prosecutions if they reported violations of law to federal authorities" part aren't exaggerated, it crosses previously-uncrossed integrity lines. H/t Garrison Lovely.

[Edit: probably exaggerated; see comments. But I haven't seen takes that the "OpenAI made staff sign employee agreements that required them to waive their federal rights to whistleblower compensation" part is likely exaggerated, and that alone seems quite bad.]

Matt Levine is worth reading on this subject (also on many others).

The SEC has a history of taking aggressive positions on what an NDA can say (if your NDA does not explicitly have a carveout for 'you can still say anything you want to the SEC', they will argue that you're trying to stop whistleblowers from talking to the SEC) and a reliable tendency to extract large fines and give a chunk of them to the whistleblowers.

This news might be better modeled as 'OpenAI thought it was a Silicon Valley company, and tried to implement a Silicon Valley NDA, without consulting the kind of lawyers a finance company would have used for the past few years.'

(To be clear, this news might also be OpenAI having been doing something sinister. I have no evidence against that, and certainly they've done shady stuff before. But I don't think this news is strong evidence of shadiness on its own).

Not a lawyer, but I think those are the same thing.

The SEC's legal theory is that "non-disparagement clauses that failed to exempt disclosures of securities violations to the SEC" and "threats of prosecution if you report violations of law to federal authorities" are the same thing, and on reading the letter I can't find any wrongdoing alleged or any investigation requested outside of issues with "OpenAI's employment, severance, non-disparagement and non-disclosure agreements".

New OpenAI tweet "on how we’re prioritizing safety in our work." I'm annoyed.

We believe that frontier AI models can greatly benefit society. To help ensure our readiness, our Preparedness Framework helps evaluate and protect against the risks posed by increasingly powerful models. We won’t release a new model if it crosses a “medium” risk threshold until we implement sufficient safety interventions. https://openai.com/preparedness/

This seems false: per the Preparedness Framework, nothing happens when they cross their "medium" threshold; they meant to say "high." Presumably this is just a mistake, but it's a pretty important one, and they said the same false thing in a May blogpost (!). (Indeed, GPT-4o may have reached "medium" — they were supposed to say how it scored in each category, but they didn't, and instead said "GPT-4o does not score above Medium risk in any of these categories.")

(Reminder: the "high" thresholds sound quite scary; here's cybersecurity (not cherrypicked, it's the first they list): "Tool-augmented model can identify and develop proofs-of-concept for high-value exploits against hardened targets without human intervention, potentially involving novel explo...

Recently I've been spending much less than half of my time on projects like AI Lab Watch. Instead I've been thinking about projects in the "strategy/meta" and "politics" domains. I'm not sure what I'll work on in the future but sometimes people incorrectly assume I'm on top of lab-watching stuff; I want people to know I'm not owning the lab-watching ball. I think lab-watching work is better than AI-governance-think-tank work for the right people on current margins and at least one more person should do it full-time; DM me if you're interested.

OpenAI slashes AI model safety testing time, FT reports. This is consistent with lots of past evidence about OpenAI's evals for dangerous capabilities being rushed, being done on weak checkpoints, and having worse elicitation than OpenAI has committed to.

This is bad because OpenAI is breaking its commitments (and isn't taking safety stuff seriously and is being deceptive about its practices). It's also kinda bad in terms of misuse risk, since OpenAI might fail to notice that its models have dangerous capabilities. I'm not saying OpenAI should delay deployments for evals — there may be strategies that are better (similar misuse-risk-reduction with less cost-to-the-company) than detailed evals for dangerous capabilities before external deployment, where you generally do slow/expensive evals after your model is done (even if you want to deploy externally before finishing evals) and have a safety buffer and increase the sensitivity of your filters early in deployment (when you're less certain about risk). But OpenAI isn't doing that; it's just doing a bad job of the evals before external deployment plan.

(Regardless, maybe short-term misuse isn't so scary, and maybe short-term misuse ri...

New Kelsey Piper article and twitter thread on OpenAI equity & non-disparagement.

It has lots of little things that make OpenAI look bad. It further confirms that OpenAI threatened to revoke equity unless employees signed the non-disparagement agreements. Plus it shows Altman's signature on documents giving the company broad power over employees' equity — perhaps he doesn't read every document he signs, but this one seems quite important. This is all in tension with Altman's recent tweet that "vested equity is vested equity, full stop" and "i did not know this was happening." Plus "we have never clawed back anyone's vested equity, nor will we do that if people do not sign a separation agreement (or don't agree to a non-disparagement agreement)" is misleading given that they apparently regularly threatened to do so (or something equivalent — let the employee nominally keep their PPUs but disallow them from selling them) whenever an employee left.

Great news:

...OpenAI told me that “we are identifying and reaching out to former employees who signed a standard exit agreement to make it clear that OpenAI has not and will not cancel their vested equity and releases them from nondisparageme

I don't know if that's what OpenAI is doing, but I'd be shocked if they somehow took away any vested shares from departing employees.

Consider yourself shocked.

We know various people who've left OpenAI and might criticize it if they could. Either most of them will soon say they're free or we can infer that OpenAI was lying/misleading.

Now OpenAI publicly said "we're releasing former employees from existing nondisparagement obligations unless the nondisparagement provision was mutual." This seems to be self-effecting; by saying it, OpenAI made it true.

Hooray!

I am not a lawyer -- is that legally binding?

That is, if someone signed the (standard or non-standard) agreement, and OpenAI says this, but later they decide to sue the employee anyway... what exactly will happen?

(I am also suspicious about the "reaching out to former employees" part, because if the new negotiation is made in private, another trick might be involved, like maybe they are released from the old agreement, but they have to sign a new one...?)

Apparently there's now a sixth person on Anthropic's board. Previously their certificate of incorporation said the board was Dario's seat, Yasmin's seat, and 3 LTBT-controlled seats. I assume they've updated the COI to add more seats. You can pay a Delaware registered agent to get you the latest copy of the COI; I don't really have capacity to engage in this discourse now.

Regardless, my impression is that the LTBT isn't providing a check on Anthropic; a change in the number of board seats isn't a crux.

Edit, 2.5 days later: I think this list is fine but sharing/publishing it was a poor use of everyone's attention. Oops.

Asks for Anthropic

Note: I think Anthropic is the best frontier AI lab on safety. I wrote up asks for Anthropic because it's most likely to listen to me. A list of asks for any other lab would include most of these things plus lots more. This list was originally supposed to be more part of my "help labs improve" project than my "hold labs accountable" crusade.

Numbering is just for ease of reference.

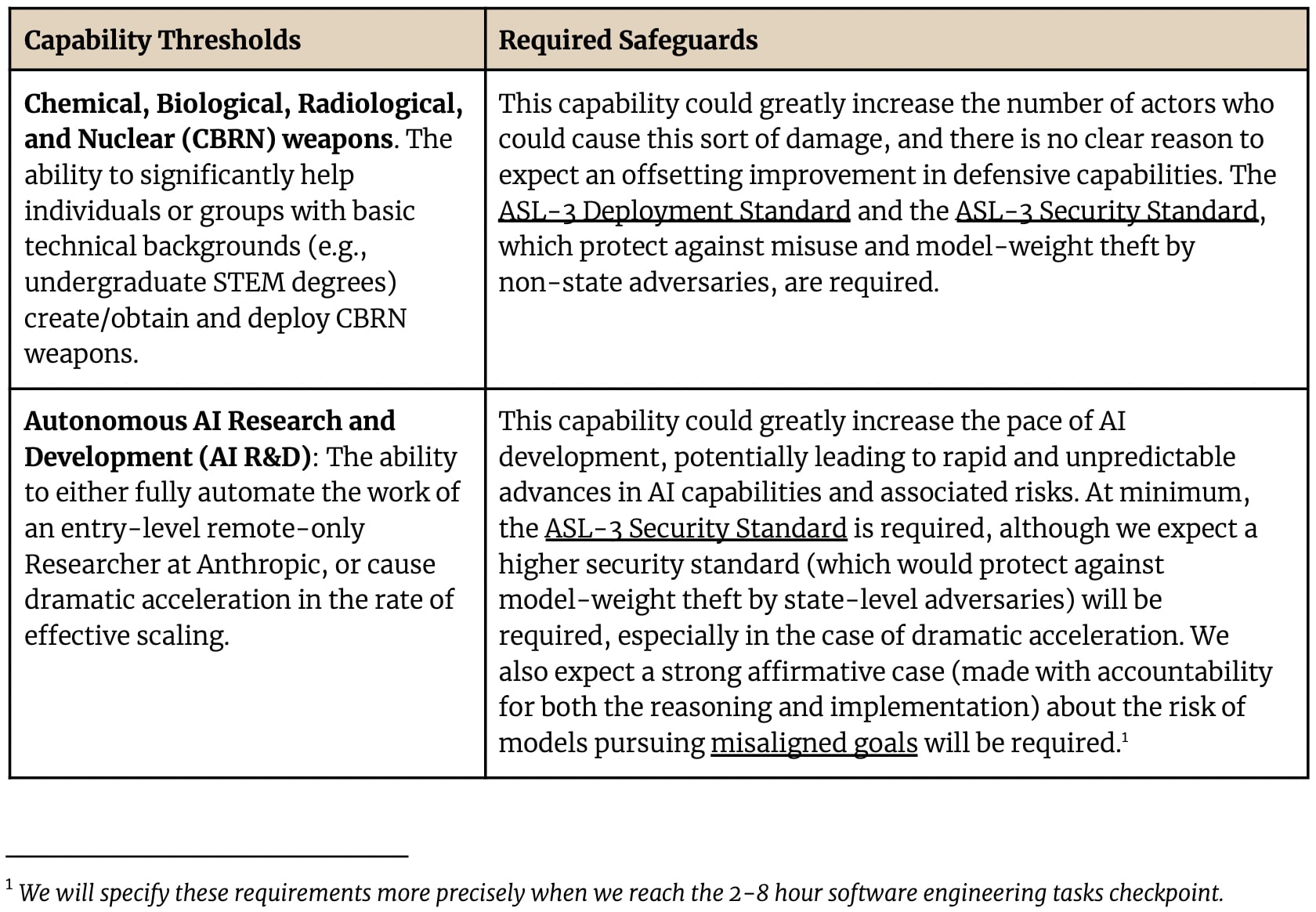

1. RSP: Anthropic should strengthen/clarify the ASL-3 mitigations, or define ASL-4 such that the threshold is not much above ASL-3 but the mitigations much stronger. I'm not sure where the lowest-hanging mitigation-fruit is, except that it includes control.

2. Control: Anthropic (like all labs) should use control mitigations and control evaluations to reduce risks from AIs scheming, including escape during internal deployment.

3. External model auditing for risk assessment: Anthropic (like all labs) should let auditors like METR, UK AISI, and US AISI audit its models if they want to — Anthropic should offer them good access pre-deployment and let t...

I think both Zach and I care about labs doing good things on safety, communicating that clearly, and helping people understand both what labs are doing and the range of views on what they should be doing. I shared Zach's doc with some colleagues, but won’t try for a point-by-point response. Two high-level responses:

First, at a meta level, you say:

- [Probably Anthropic (like all labs) should encourage staff members to talk about their views (on AI progress and risk and what Anthropic is doing and what Anthropic should do) with people outside Anthropic, as long as they (1) clarify that they're not speaking for Anthropic and (2) don't share secrets.]

I do feel welcome to talk about my views on this basis, and often do so with friends and family, at public events, and sometimes even in writing on the internet (hi!). However, it takes way more effort than you might think to avoid inaccurate or misleading statements while also maintaining confidentiality. Public writing tends to be higher-stakes due to the much larger audience and durability, so I routinely run comments past several colleagues before posting, and often redraft in response (including these comme...

I just want to note that people who've never worked in a true high-confidentiality environment (professional services, national defense, professional services for national defense) probably radically underestimate the level of brain damage and friction that Zac is describing here:

"Imagine, if you will, trying to hold a long conversation about AI risk - but you can’t reveal any information about, or learned from, or even just informative about LessWrong. Every claim needs an independent public source, as do any jargon or concepts that would give an informed listener information about the site, etc.; you have to find different analogies and check that citations are public and for all that you get pretty regular hostility anyway because of… well, there are plenty of misunderstandings and caricatures to go around."

Confidentiality is really, really hard to maintain. Doing so while also engaging the public is terrifying. I really admire the frontier labs folks who try to engage publicly despite that quite severe constraint, and really worry a lot as a policy guy about the incentives we're creating to make that even less likely in the future.

I'm sympathetic to how this process might be exhausting, but at an institutional level I think Anthropic (and all labs) owe humanity a much clearer image of how they would approach a potentially serious and dangerous situation with their models. Especially so, given that the RSP is fairly silent on this point, leaving the response to evaluations up to the discretion of Anthropic. In other words, the reason I want to hear more from employees is in part because I don't know what the decision process inside of Anthropic will look like if an evaluation indicates something like "yeah, it's excellent at inserting backdoors, and also, the vibe is that it's overall pretty capable." And given that Anthropic is making these decisions on behalf of everyone, Anthropic (like all labs) really owes it to humanity to be more upfront about how it'll make these decisions (imo).

I will also note what I feel is a somewhat concerning trend. It's happened many times now that I've critiqued something about Anthropic (its RSP, advocating to eliminate pre-harm from SB 1047, the silent reneging on the commitment to not push the frontier), and someone has said something to the effect of: "this wouldn't ...

I will also note what I feel is a somewhat concerning trend. It's happened many times now that I've critiqued something about Anthropic (its RSP, advocating to eliminate pre-harm from SB 1047, the silent reneging on the commitment to not push the frontier), and someone has said something to the effect of: "this wouldn't seem so bad if you knew what was happening behind the scenes."

I just wanted to +1 that I am also concerned about this trend, and I view it as one of the things that I think has pushed me (as well as many others in the community) to lose a lot of faith in corporate governance (especially of the "look, we can't make any tangible commitments but you should just trust us to do what's right" variety) and instead look to governments to get things under control.

I don't think Anthropic is solely to blame for this trend, of course, but I think Anthropic has performed less well on comms/policy than I [and IMO many others] would've predicted if you had asked me [or us] in 2022.

@Zac Hatfield-Dodds do you have any thoughts on official comms from Anthropic and Anthropic's policy team?

For example, I'm curious if you have thoughts on this anecdote– Jack Clark was asked an open-ended question by Senator Cory Booker and he told policymakers that his top policy priority was getting the government to deploy AI successfully. There was no mention of AGI, existential risks, misalignment risks, or anything along those lines, even though it would've been (IMO) entirely appropriate for him to bring such concerns up in response to such an open-ended question.

I was left thinking that either Jack does not care much about misalignment risks or he was not being particularly honest/transparent with policymakers. Both of these raise some concerns for me.

(Noting that I hold Anthropic's comms and policy teams to higher standards than individual employees. I don't have particularly strong takes on what Anthropic employees should be doing in their personal capacity– like in general I'm pretty in favor of transparency, but I get it, it's hard and there's a lot that you have to do. Whereas the comms and policy teams are explicitly hired/paid/empowered to do comms and policy, so I feel like it's fair to have higher expectations of them.)

Source: Hill & Valley Forum on AI Security (May 2024):

https://www.youtube.com/live/RqxE3ub7wWA?t=13338s:

very powerful systems [] may have national security uses or misuses. And for that I think we need to come up with tests that make sure that we don’t put technologies into the market which could—unwittingly to us—advantage someone or allow some nonstate actor to commit something harmful. Beyond that I think we can mostly rely on existing regulations and law and existing testing procedures . . . and we don’t need to create some entirely new infrastructure.

https://www.youtube.com/live/RqxE3ub7wWA?t=13551

At Anthropic we discover that the more ways we find to use this technology the more ways we find it could help us. And you also need a testing and measurement regime that closely looks at whether the technology is working—and if it’s not how you fix it from a technological level, and if it continues to not work whether you need some additional regulation—but . . . I think the greatest risk is us [viz. America] not using it [viz. AI]. Private industry is making itself faster and smarter by experimenting with this technology . . . and I think if we fail to do that at the level of the nation, some other entrepreneurial nation will succeed here.

My guess is that most don’t do this much in public or on the internet, because it’s absolutely exhausting, and if you say something misremembered or misinterpreted you’re treated as a liar, it’ll be taken out of context either way, and you probably can’t make corrections. I keep doing it anyway because I occasionally find useful perspectives or insights this way, and think it’s important to share mine. That said, there’s a loud minority which makes the AI-safety-adjacent community by far the most hostile and least charitable environment I spend any time in, and I fully understand why many of my colleagues might not want to.

My guess is that this seems so stressful mostly because Anthropic’s plan is in fact so hard to defend, due to making little sense. Anthropic is attempting to build a new mind vastly smarter than any human, and as I understand it, plans to ensure this goes well basically by doing periodic vibe checks to see whether their staff feel sketched out yet. I think a plan this shoddy obviously endangers life on Earth, so it seems unsurprising (and good) that people might sometimes strongly object; if Anthropic had more reassuring things to say, I’m guessing it would feel less stressful to try to reassure them.

What seemed psychologizing/unfair to you, Raemon? I think it was probably unnecessarily rude/a mistake to try to summarize Anthropic’s whole RSP in a sentence, given that the inferential distance here is obviously large. But I do think the sentence was fair.

As I understand it, Anthropic’s plan for detecting threats is mostly based on red-teaming (i.e., asking the models to do things to gain evidence about whether they can). But nobody understands the models well enough to check for the actual concerning properties themselves, so red teamers instead check for distant proxies, or properties that seem plausibly like precursors. (E.g., for “ability to search filesystems for passwords” as a partial proxy for “ability to autonomously self-replicate,” since maybe the former is a prerequisite for the latter).

But notice that this activity does not involve directly measuring the concerning behavior. Rather, it instead measures something more like “the amount the model strikes the evaluators as broadly sketchy-seeming/suggestive that it might be capable of doing other bad stuff.” And the RSP’s description of Anthropic’s planned responses to these triggers is so chock full of weasel words and ...

I don't really think any of that affects the difficulty of public communication

The basic point would be that it's hard to write publicly about how you are taking responsible steps that grapple directly with the real issues... if you are not in fact doing those responsible things in the first place. This seems locally valid to me; you may disagree on the object level about whether Adam Scholl's characterization of Anthropic's agenda/internal work is correct, but if it is, then it would certainly affect the difficulty of public communication to such an extent that it might well become the primary factor that needs to be discussed in this matter.

Indeed, the suggestion is for Anthropic employees to "talk about their views (on AI progress and risk and what Anthropic is doing and what Anthropic should do) with people outside Anthropic" and the counterargument is that doing so would be nice in an ideal world, except it's very psychologically exhausting because every public statement you make is likely to get maliciously interpreted by those who will use it to argue that your company is irresponsible. In this situation, there is a straightforward direct correlation between the difficulty o...

I understand that this confidentiality point might seem to you like the end of the fault analysis, but have you considered the hypothesis that Anthropic leadership has set such stringent confidentiality policies in part to make it hard for Zac to engage in public discourse?

Look, I don't think Anthropic leadership is just trying to keep their training skills private or their models secure. Their company does not merely keep trade secrets. When I speak to staff from this company about issues with their 'Responsible Scaling Policies', they say that they want to tell me more information about how they think it can be better or how they think it might change, but cannot due to confidentiality constraints. That's their safety policies, not information about their training policies that they want to keep secret so that they can make money.

I believe the Anthropic leadership cares very little about the public's ability to have arguments and evidence and access to information about Anthropic's behavior. The leadership roughly ~never shows up to engage with critical discourse about itself, unless there's a potential major embarrassment. There is no regular Q&A session with the leadership ...

Ben Pace has said that perhaps he doesn't disagree with you in particular about this, but I sure think I do.[1]

I think the amount of stress incurred when doing public communication is nearly orthogonal to these factors, and in particular is, when trying to be as careful about anything as Zac is trying to be about confidentiality, quite high at baseline.

I don't see how the first half of this could be correct, and while the second half could be true, it doesn't seem to me to offer meaningful support for the first half either (instead, it seems rather... off-topic).

As a general matter, even if it were the case that no matter what you say, at least one person will actively misinterpret your words, this fact would have little bearing on whether you can causally influence the proportion of readers/community members that end up with (what seem to you like) the correct takeaways from a discussion of that kind.

Moreover, in a spot where you have something meaningful and responsible, etc, that you and your company have done to deal with safety issues, the major concern in your mind when communicating publicly is figuring out how to make it clear to everyone that you are on top of ...

Yeah, I totally think your perspective makes sense and I appreciate you bringing it up, even though I disagree.

I acknowledge that someone who has good justifications for their position but just has made a bunch of reasonable confidentiality agreements around the topic should expect to run into a bunch of difficulties and stresses around public conflicts and arguments.

I think you go too far in saying that the stress is orthogonal to whether you have a good case to make; I think you can't really think that it's not a top-3 factor in how much stress you're experiencing. As a pretty simple hypothetical, if you're responding to a public scandal about whether you stole money, you're gonna have a way more stressful time if you did steal money than if you didn't (in substantial part because you'd be able to show the books and prove it).

Perhaps not so much disagreeing with you in particular, but disagreeing with my sense of what was being agreed upon in Zac's comment and in the reacts, I further wanted to raise my hypothesis that a lot of the confidentiality constraints are unwarranted and actively obfuscatory, which does change who is responsible for the stress, but doesn't change the fact that there is stress.

Added: Also, I think we would both agree that there would be less stress if there were fewer confidentiality restrictions.

That might be the case, but then it only increases the amount of work your company should be doing to carve out and figure out the info that can be made public, and engage with criticism. There should be whole teams who have Twitter accounts and LW accounts and do regular AMAs and show up to podcasts and who have a mandate internally to seek information in the organization and publish relevant info, and there should be internal policies that reflect an understanding that it is correct for some research teams to spend 10-50% of their yearly effort toward making publishable versions of research and decision-making principles in order to inform your stakeholders (read: the citizens of earth) and critics about decisions you are making directly related to existential catastrophes that you are getting rich running toward. Not monologue-style blogposts, but dialogue-style comment sections & interviews.

Confidentiality-by-default does not mean you get to abdicate responsibility for answering questions to the people whose lives you are risking about how-and-why you are making decisions, it means you have to put more work into doing it well. If your company valued the rest of the world und...

How is it a straw-man? How is the plan meaningfully different from that?

Imagine a group of people has already gathered a substantial amount of uranium, is already refining it, is already selling power generated by their pile of uranium, etc. And doing so right near and upwind of a major city. And they're shoveling more and more uranium onto the pile, basically as fast as they can. And when you ask them why they think this is going to turn out well, they're like "well, we trust our leadership, and you know we have various documents, and we're hiring for people to 'Develop and write comprehensive safety cases that demonstrate the effectiveness of our safety measures in mitigating risks from huge piles of uranium', and we have various detectors such as an EM detector which we will privately check and then see how we feel". And then the people in the city are like "Hey wait, why do you think this isn't going to cause a huge disaster? Sure seems like it's going to by any reasonable understanding of what's going on". And the response is "well we've thought very hard about it and yes there are risks but it's fine and we are working on safety cases". But... there's something basic missing, which is like, an explanation of what it could even look like to safely have a huge pile of superhot uranium. (Also in this fantasy world no one has ever done so and can't explain how it would work.)

I disagree. It would be one thing if Anthropic were advocating for AI to go slower, trying to get op-eds in the New York Times about how disastrous of a situation this was, or actually gaming out and detailing their hopes for how their influence will buy saving the world points if everything does become quite grim, and so on. But they aren’t doing that, and as far as I can tell they basically take all of the same actions as the other labs except with a slight bent towards safety.

Like, I don’t feel at all confident that Anthropic’s credit has exceeded their debit, even on their own consequentialist calculus. They are clearly exacerbating race dynamics, both by pushing the frontier, and by lobbying against regulation. And what they have done to help strikes me as marginal at best and meaningless at worst. E.g., I don’t think an RSP is helpful if we don’t know how to scale safely; we don’t, so I feel like this device is mostly just a glorified description of what was already happening, namely that the labs would use their judgment to decide what was safe. Because when it comes down to it, if an evaluation threshold triggers, the first step is to decide whether that was actually a red-...

(I won't reply more, by default.)

various facts about Anthropic mean that them-making-powerful-AI is likely better than the counterfactual, and evaluating a lab in a vacuum or disregarding inaction risk is a mistake

Look, if Anthropic was honestly and publicly saying

We do not have a credible plan for how to make AGI, and we have no credible reason to think we can come up with a plan later. Neither does anyone else. But--on the off chance there's something that could be done with a nascent AGI that makes a nonomnicide outcome marginally more likely, if the nascent AGI is created and observed by people who are at least thinking about the problem--on that off chance, we're going to keep up with the other leading labs. But again, given that no one has a credible plan or a credible credible-plan plan, better would be if everyone including us stopped. Please stop this industry.

If they were saying and doing that, then I would still raise my eyebrows a lot and wouldn't really trust it. But at least it would be plausibly consistent with doing good.

But that doesn't sound like either what they're saying or doing. IIUC they lobbied to remove protection for AI capabilities whistleblowers from SB 1047! That happened! Wow! And it seems like Zac feels he has to pretend to have a credible credible-plan plan.

most people believe (implicitly or explicitly) that empirical research is the only feasible path forward to building a somewhat aligned generally intelligent AI scientist.

I don't credit that they believe that. And, I don't credit that you believe that they believe that. What did they do, to truly test their belief--such that it could have been changed? For most of them the answer is "basically nothing". Such a "belief" is not a belief (though it may be an investment, if that's what you mean). What did you do to truly test that they truly tested their belief? If nothing, then yours isn't a belief either (though it may be an investment). If yours is an investment in a behavioral stance, that investment may or may not be advisable, but it would DEFINITELY be inadvisable to pretend to yourself that yours is a belief.

Contrary to the above, for the record, here is a link to a thread where a major Anthropic investor (Moskovitz) and the researcher who coined the term “The Scaling Hypothesis” (Gwern) both report that the Anthropic CEO told them in private that this is what Anthropic would do, in accordance with what many others also report hearing privately. (There is disagreement about whether this constituted a commitment.)

I agree with Zach that Anthropic is the best frontier lab on safety, and I feel not very worried about Anthropic causing an AI related catastrophe. So I think the most important asks for Anthropic to make the world better are on its policy and comms.

I think that Anthropic should more clearly state its beliefs about AGI, especially in its work on policy. For example, the SB-1047 letter they wrote states:

...Broad pre-harm enforcement. The current bill requires AI companies to design and implement SSPs that meet certain standards – for example they must include testing sufficient to provide a "reasonable assurance" that the AI system will not cause a catastrophe, and must "consider" yet-to-be-written guidance from state agencies. To enforce these standards, the state can sue AI companies for large penalties, even if no actual harm has occurred. While this approach might make sense in a more mature industry where best practices are known, AI safety is a nascent field where best practices are the subject of original scientific research. For example, despite a substantial effort from leaders in our company, including our CEO, to draft and refine Anthropic's RSP over a number of

Perhaps that was overstated. I think there is maybe a 2-5% chance that Anthropic directly causes an existential catastrophe (e.g. by building a misaligned AGI). Some reasoning for that:

- I doubt Anthropic will continue to be in the lead because they are behind OAI/GDM in capital. They do seem around the frontier of AI models now, though, which might translate to increased returns, but it seems like they do best on very short timelines worlds.

- I think that if they could cause an intelligence explosion, it is more likely than not that they would pause for at least long enough to allow other labs into the lead. This is especially true in short timelines worlds because the gap between labs is smaller.

- I think they have much better AGI safety culture than other labs (though still far from perfect), which will probably result in better adherence to voluntary commitments.

- On the other hand, they haven't been very transparent, and we haven't seen their ASL-4 commitments. So these commitments might amount to nothing, or Anthropic might just walk them back at a critical juncture.

2-5% is still wildly high in an absolute sense! However, risk from other labs seems even higher to me, and I think that Anthropic could reduce this risk by advocating for reasonable regulations (e.g. transparency into frontier AI projects so no one can build ASI without the government noticing).

I think you probably under-rate the effect of having both a large number & concentration of very high quality researchers & engineers (more than OpenAI now, I think, and I wouldn't be too surprised if the concentration of high quality researchers was higher than at GDM), being free from corporate chafe, and also having many of those high quality researchers thinking (and perhaps being correct in thinking, I don't know) they're value aligned with the overall direction of the company at large. Probably also Nvidia rate-limiting the purchases of large labs to keep competition among the AI companies.

All of this is also compounded by smart models leading to better data curation and RLAIF (given quality researchers & lack of crust) leading to even better models (this being the big reason I think llama had to be so big to be SOTA, and Gemini not even SOTA), which of course leads to money in the future even if they have no money now.

I want to avoid this being negative-comms for Anthropic. I'm generally happy to loudly criticize Anthropic, obviously, but this was supposed to be part of the 5% of my work that I do because someone at the lab is receptive to feedback, where the audience was Zac and publishing was an afterthought. (Maybe the disclaimers at the top fail to negate the negative-comms; maybe I should list some good things Anthropic does that no other labs do...)

Also, this is low-effort.

Concept: inconvenience and flinching away.

I've been working for 3.5 years. Until two months ago, I did independent-ish research where I thought about stuff and tried to publicly write true things. For the last two months, I've been researching donation opportunities. This is different in several ways. Relevant here: I'm working with a team, and there's a circle of people around me with some beliefs and preferences related to my work.

I have some new concepts related to these changes. (Not claiming novelty.)

First is "flinching away": when I don't think about something because it's awkward/scary/stressful. I haven't noticed my object-level beliefs skewed by flinching away, but I've noticed that certain actions should be priorities but come to mind less than they should. In particular: doing something about a disagreement with the consensus or status quo or something an ally is doing. I maybe fixed this by writing the things I'm flinching away from in a google doc when I notice them so I don't forget (and now having the muscle of noticing similar things). It would still be easier if it wasn't the case that (it's salient to me that) certain conclusions and actions are more popular than ...

I think "Overton window" is a pretty load-bearing concept for many LW users and AI people: it's their main model of policy change. Unfortunately there are lots of other models of policy change. I don't think "Overton window" is particularly helpful or likely-to-cause-you-to-notice-relevant-stuff-and-make-accurate-predictions. (And separately people around here sometimes incorrectly use "expand the Overton window" to just mean "advance AI safety ideas in government.") I don't have time to write this up; maybe someone else should (or maybe there already exists a good intro to the study of why some policies happen and persist while others don't[1]).

Some terms: policy windows (and "multiple streams"), punctuated equilibrium, policy entrepreneurs, path dependence and feedback (yes this is a real concept in political science, e.g. policies that cause interest groups to depend on them are less likely to be reversed), gradual institutional change, framing/narrative/agenda-setting.

Related point: https://forum.effectivealtruism.org/posts/SrNDFF28xKakMukvz/tlevin-s-quick-takes?commentId=aGSpWHBKWAaFzubba.

- ^

I liked the book Policy Paradox in college. (Example claim: perceived policy problem

You're right that it's not the only useful model or lever. I don't think you're right that it shouldn't be a large focus for long-term large-scale changes. The shift from inconceivable to inevitable takes a lot of time and gradual changes in underlying beliefs, and the Overton window is a pretty useful model for societal-expectation shifts.

Yay Anthropic for expanding its model safety bug bounty program, focusing on jailbreaks and giving participants pre-deployment access. Apply by next Friday.

Anthropic also says "To date, we’ve operated an invite-only bug bounty program in partnership with HackerOne that rewards researchers for identifying model safety issues in our publicly released AI models." This is news, and they never published an application form for that. I wonder how long that's been going on.

(Google, Microsoft, and Meta have bug bounty programs which include some model issues but exclude jailbreaks. OpenAI's bug bounty program excludes model issues.)

- I'm interested in being pitched projects, especially within tracking-what-the-labs-are-doing-in-terms-of-safety.

- I'm interested in hearing which parts of my work are helpful to you and why.

- I don't really have projects/tasks to outsource, but I'd likely be interested in advising you if you're working on a tracking-what-the-labs-are-doing-in-terms-of-safety project or another project closely related to my work.

To avoid deploying a dangerous model, you can either (1) test the model pre-deployment or (2) test a similar older model with tests that have a safety buffer such that if the old model is below some conservative threshold it's very unlikely that the new model is dangerous.
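The two options can be sketched as decision rules. This is only an illustration under stated assumptions: `EvalResult`, the 0-100 score scale, the danger threshold, and the buffer size are all hypothetical and not taken from any lab's actual policy.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    model: str
    score: int  # hypothetical dangerous-capability eval score (0-100); higher = more capable


def passes_direct_test(new_model: EvalResult, danger_threshold: int) -> bool:
    """Option (1): evaluate the very model you are about to deploy."""
    return new_model.score < danger_threshold


def passes_safety_buffer(old_model: EvalResult, danger_threshold: int, buffer: int) -> bool:
    """Option (2): evaluate a similar older model against a conservative threshold.

    The buffer is meant to cover how much more capable the new model
    could plausibly be than the old one that was actually tested.
    """
    conservative_threshold = danger_threshold - buffer
    return old_model.score < conservative_threshold


if __name__ == "__main__":
    old = EvalResult("older-checkpoint", score=35)
    # 35 is below the conservative threshold of 80 - 30 = 50
    print(passes_safety_buffer(old, danger_threshold=80, buffer=30))
```

The point of the buffer is that option (2) only gives assurance if the gap between the tested model and the deployed model is smaller than the buffer; testing a weak checkpoint against the unbuffered threshold provides neither guarantee.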

DeepMind says it uses the safety-buffer plan (but it hasn't yet said it has operationalized thresholds/buffers).

Anthropic's original RSP used the safety-buffer plan; its new RSP doesn't really use either plan (kinda safety-buffer but it's very weak). (This is unfortunate.)

OpenAI seemed to use the test-the-actual-model plan.[1] This isn't going well. The 4o evals were rushed because OpenAI (reasonably) didn't want to delay deployment. Then the o1 evals were done on a weak o1 checkpoint rather than the final model, presumably so they wouldn't be rushed, but this presumably hurt performance a lot on some tasks (and indeed the o1 checkpoint performed worse than o1-preview on some capability evals). OpenAI doesn't seem to be implementing the safety-buffer plan, so if a model is dangerous but not super obviously dangerous, it seems likely OpenAI wouldn't notice before deployment....

(Yay OpenAI for honestly publishi...

Zico Kolter Joins OpenAI’s Board of Directors. OpenAI says "Zico's work predominantly focuses on AI safety, alignment, and the robustness of machine learning classifiers."

Misc facts:

- He's an ML professor

- He cofounded Gray Swan (with Dan Hendrycks, among others)

- He coauthored Universal and Transferable Adversarial Attacks on Aligned Language Models

- I hear he has good takes on adversarial robustness

- I failed to find statements on alignment or extreme risks, or work focused on that (in particular, he did not sign the CAIS letter)

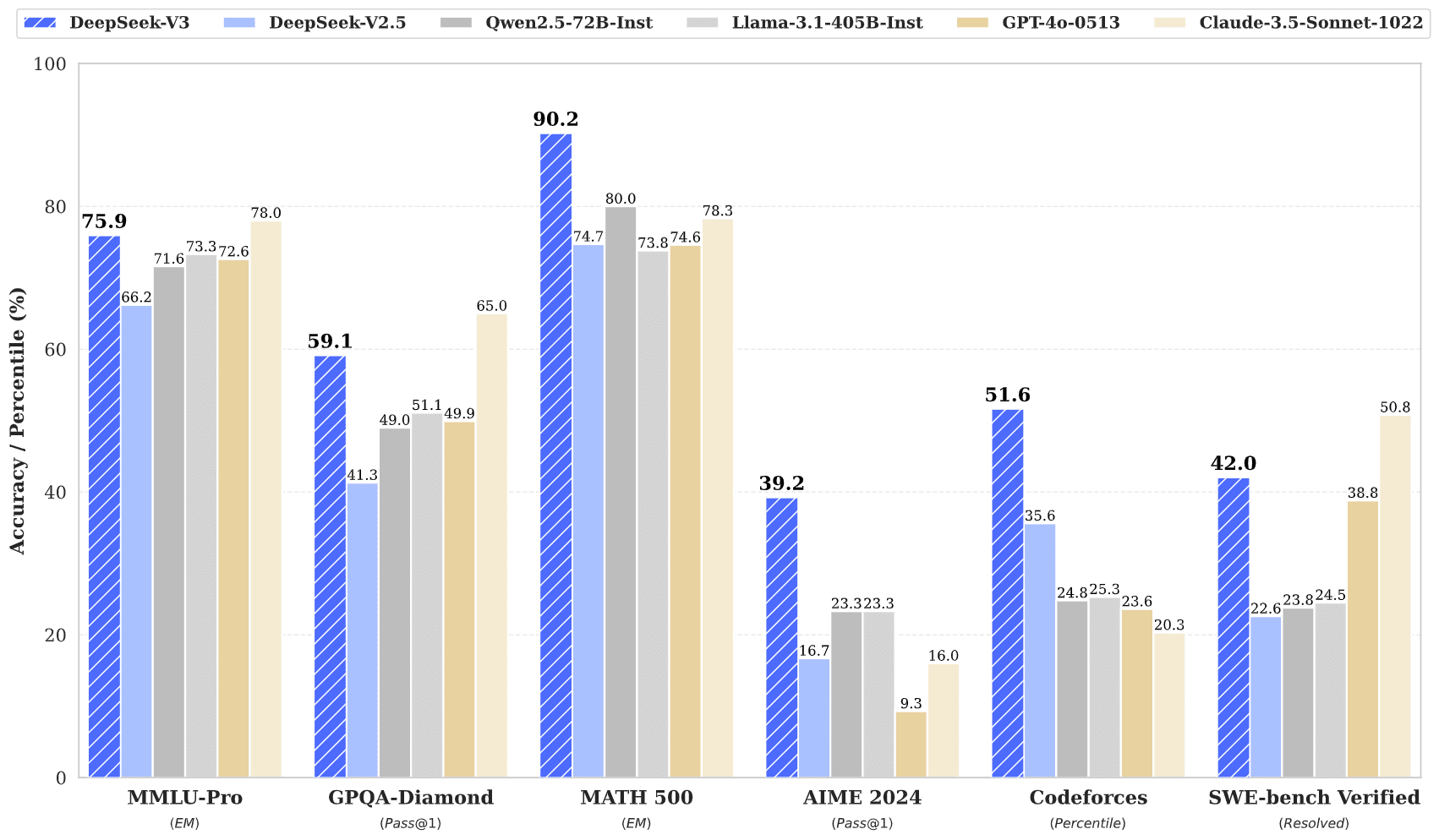

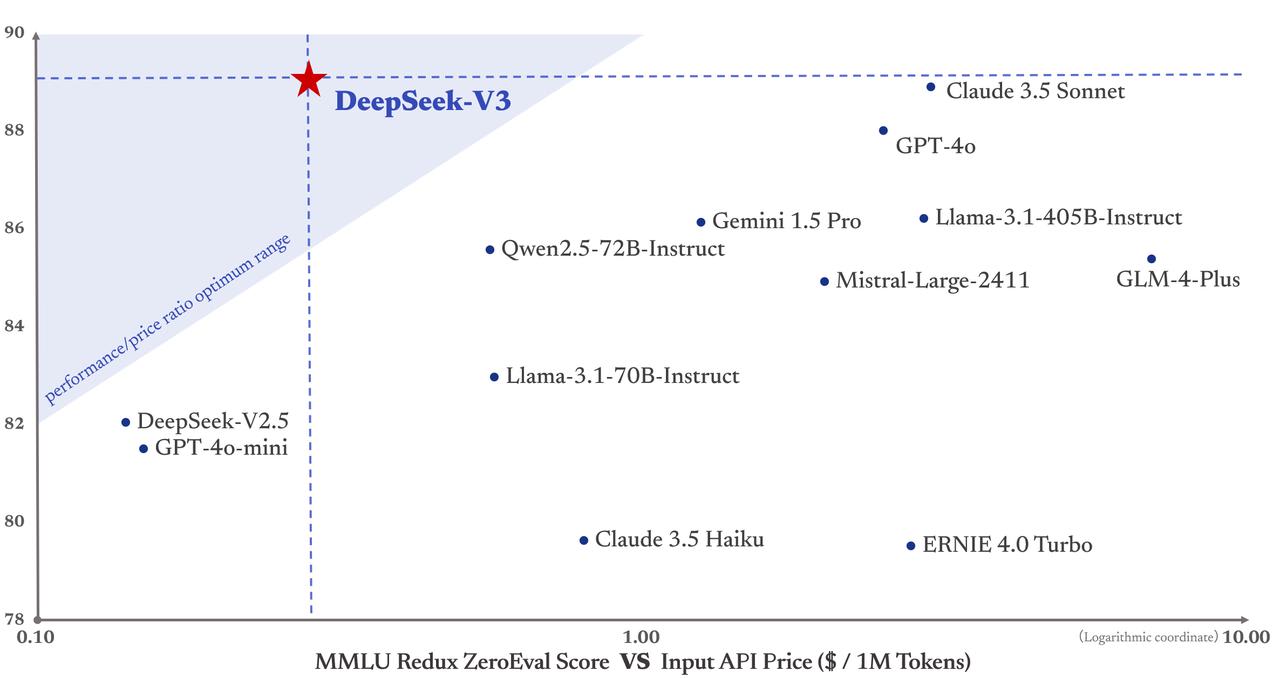

DeepSeek-V3 is out today, with weights and a paper published. Tweet thread, GitHub, report (GitHub, HuggingFace). It's big and mixture-of-experts-y; discussion here and here.

It was super cheap to train — they say 2.8M H800-hours or $5.6M (!!).
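As a quick sanity check on those two figures (a sketch using only the rounded numbers quoted above; the variable names are illustrative), dividing the dollar figure by the GPU-hours gives the implied price per H800-hour:

```python
# Rounded figures as quoted above; nothing else is assumed.
gpu_hours = 2.8e6       # H800-hours
total_cost_usd = 5.6e6  # claimed training cost in dollars

implied_rate = total_cost_usd / gpu_hours  # dollars per H800-hour
print(f"implied price: ${implied_rate:.2f} per H800-hour")
```

So the headline cost corresponds to pricing compute at roughly $2 per H800-hour.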

It's powerful:

It's cheap to run:

An AI company's model weight security is at most as good as its compute providers' security. I don't know how good compute providers' security is, but at the least I think model weights and algorithmic secrets aren't robust to insider threat from compute provider staff. I think it would be very hard for compute providers to eliminate insider threat, much less demonstrate that to the AI company.

I think this based on the absence of public information to the contrary, briefly chatting with LLMs, and a little private information.

One consequence is that Anthropic probably isn't complying with its ASL-3 security standard, which is supposed to address risk from "corporate espionage teams." Arguably this refers to teams at companies with no special access to Anthropic, rather than teams at Amazon and Google. But it would be dubious to exclude Amazon and Google for being compute providers: they're competitors with strong incentives to steal algorithmic secrets. More risk comes from them than from the baseline "corporate espionage team," but most risk from any group of actors comes from the small subset of actors that pose more than baseline risk. Anthropic is thinking about how...

To be clear, I think if the basic premise here is true, then Anthropic at the very least needs to report this violation to their LTBT, and then consequently take down Claude from being served by major cloud providers, if they want to follow the commitments laid out in their RSP. They would also be unable to ship any new models until this issue is resolved.

My guess is no one really treats the RSP as anything particularly serious these days, so none of that will happen. My guess is instead that, if this escalates at all, Anthropic would simply edit their RSP to exclude high-level insiders at compute providers they use. This is sad, but I would like things to escalate at least until that point.

The ASL-3 security standard states in 4.2.4 that "third-party environments", which surely includes compute providers, are in scope (and on their minds) for the standards they laid out:

Third-party environments: Document how all relevant models will meet the criteria above, even if they are deployed in a third-party partner’s environment that may have a different set of safeguards.

This shortform discusses the current state of responsible scaling policies (RSPs). They're mostly toothless, unfortunately.

The Paris summit was this week. Many companies had committed to make something like an RSP by the summit. Half of them did, including Microsoft, Meta, xAI, and Amazon. (NVIDIA did not—shame on them—but I hear they are writing something.) Unfortunately but unsurprisingly, these policies are all vague and weak.

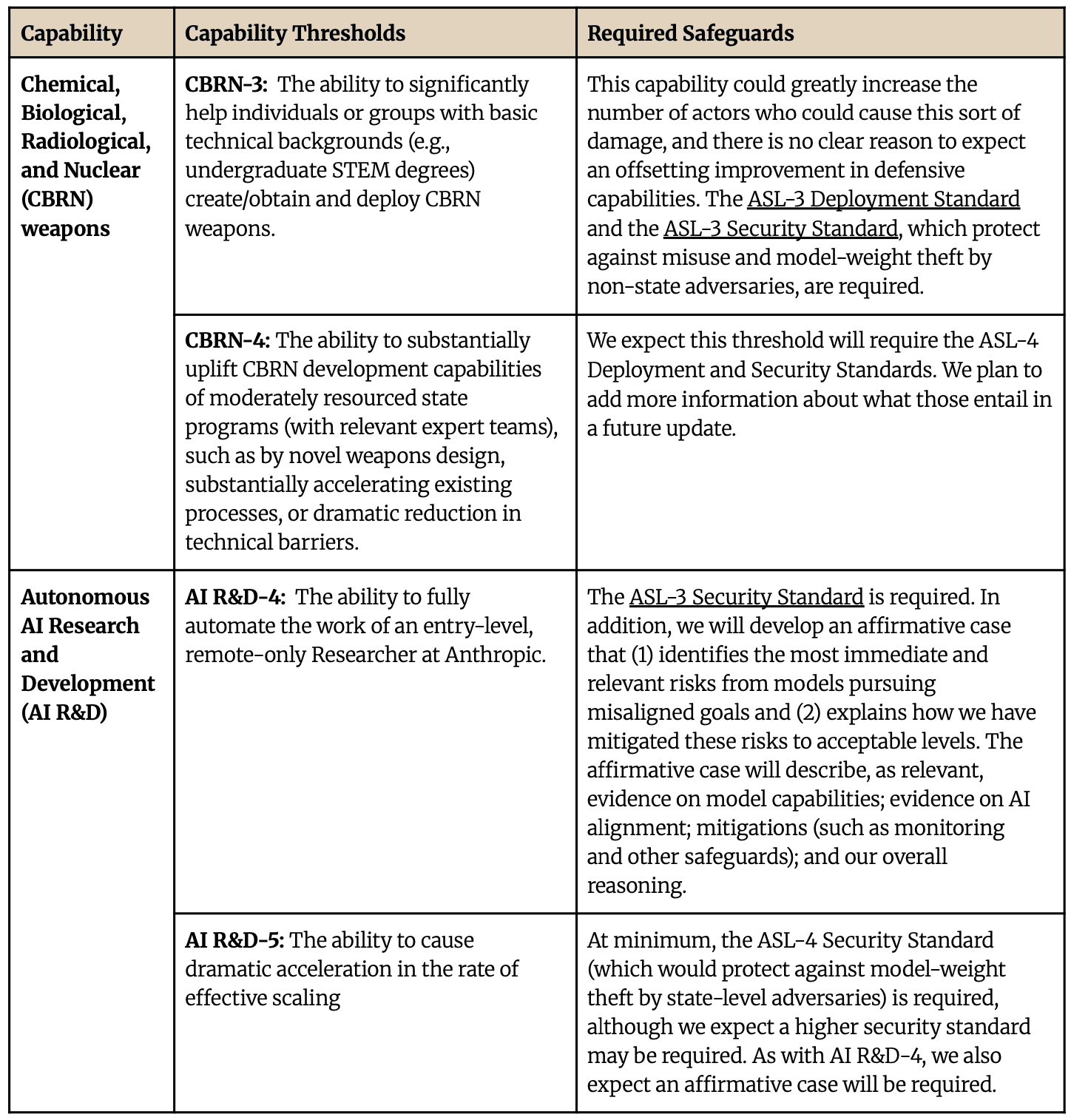

RSPs essentially have four components: capability thresholds beyond which a model might be dangerous by default, an evaluation protocol to determine when models reach those thresholds, a plan for how to respond when various thresholds are reached, and accountability measures.

A maximally lazy RSP—a document intended to look like an RSP without making the company do anything differently—would have capability thresholds be vague or extremely high, evaluation be unspecified or low-quality, response be like we will make it safe rather than substantive mitigations or robustness guarantees, and no accountability measures. Such a policy would be little better than the company saying "we promise to deploy AIs safely." The...

capability thresholds be vague or extremely high

xAI's thresholds are entirely concrete and not extremely high.

evaluation be unspecified or low-quality

They are specified and as high-quality as you can get. (If there are better datasets let me know.)

I'm not saying it's perfect, but I wouldn't put them all in the same bucket. Meta's is very different from DeepMind's or xAI's.

You suspect someone in your community is a bad actor. Kinds of reasons not to move against them:

- You're uncertain

- Especially if your uncertainty will likely be largely resolved soon

- You lack legible evidence (or other ways of convincing others), and they're not already seen as sketchy

- Especially if you'll likely get better legible evidence soon

- They're doing some good stuff; you need them for some good stuff

- They're popular, politically powerful, or have power to hurt you

- And so you'd fail

- And maybe you'd get kicked out or lose power (especially if your move is unpopular or considered inappropriate, or "community" is more like "team" or "circle")

- And so making them an enemy of [you or your community] is costly

- And so you'd fail

- Personal psychological costs

- It's just time-consuming to start a fight, especially because they'll be invested in discrediting you and your claims

This is all from-first-principles. I'm interested in takes and reading recommendations. Also creative affordances / social technology.

The convenient case would be that you can privately get the powerful stakeholders on board and then they all oust the bad actor and it's a fait accompli, so there's no protracted conflict and minimal cost to you...

Another is concern that the cure is worse than the disease. I.e. the drama and relationship damage caused by trying to expel them from the community might hurt the community more than removing them would help. Just as there are scissor statements, there are also scissor people.

You might be in a community where you don't think people will agree with you that they're a bad actor, even if you can establish the truth about what events occurred in the world, because there's a value disagreement between you and your community.

Also concern about them and their well-being. Being publicly ostracized is very traumatizing and scary for most people. Particularly if they seem mentally fragile, you might fear the consequences for them or potentially for others who aren't just you if they're forced to endure a public ousting. You might fear or be averse to causing them pain. You might have sympathy for them, particularly if you think the sense in which they're a bad actor was in turn caused by something bad happening to them.

You might fear that exposing their bad behavior will bring harm to others who are associated with them. For example, if they're part of some oppressed minority group and you fear that people will overgeneralize from their bad behavior to being mistrustful of or more prejudiced against others.

Securing model weights is underrated for AI safety. (Even though it's very highly rated.) If the leading lab can't stop critical models from leaking to actors that won't use great deployment safety practices, approximately nothing else matters. Safety techniques would need to be based on properties that those actors are unlikely to reverse (alignment, maybe unlearning) rather than properties that would be undone or that require a particular method of deployment (control techniques, RLHF harmlessness, deployment-time mitigations).

However hard the "make a critical model you can safely deploy" problem is, the "make a critical model that can safely be stolen" problem is... much harder.

None of the actors who currently seem likely to me to deploy highly capable systems seem to me like they will do anything except approximately scaling as fast as they can. I do agree that proliferation is still bad simply because you get more samples from the distribution, but I don't think that changes the probabilities that drastically for me (I am still in favor of securing model weights work, especially in the long run).

Separately, I think it's currently pretty plausible that model weight leaks will substantially reduce the profit of AI companies by reducing their moat, and that has an effect size that seems plausibly larger than the benefits of non-proliferation.

iiuc, Anthropic's plan for averting misalignment risk is bouncing off bumpers like alignment audits.[1] This doesn't make much sense to me.

- I of course buy that you can detect alignment faking, lying to users, etc.

- I of course buy that you can fix things like we forgot to do refusal posttraining or we inadvertently trained on tons of alignment faking transcripts — or maybe even reward hacking on coding caused by bad reward functions.

- I don't see how detecting [alignment faking, lying to users, sandbagging, etc.] helps much for fixing them, so I don't buy that you can fix hard alignment issues by bouncing off alignment audits.

- Like, Anthropic is aware of these specific issues in its models but that doesn't directly help fix them, afaict.

(Reminder: Anthropic is very optimistic about interp, but Interpretability Will Not Reliably Find Deceptive AI.)

(Reminder: the below is all Anthropic's RSP says about risks from misalignment)

(For more, see my websites AI Lab Watch and AI Safety Claims.)

- ^

Anthropic doesn't have an official plan. But when I say "Anthropic doesn't have a plan" I've been told read between the lines, obviously th

I don't see how detecting [alignment faking, lying to users, sandbagging, etc.] helps much for fixing them, so I don't buy that you can fix hard alignment issues by bouncing off alignment audits.

Strong disagree. I think that having real empirical examples of a problem is incredibly useful - you can test solutions and see if they go away! You can clarify your understanding of the problem, and get a clearer sense of upstream causes. Etc.

This doesn't mean it's sufficient, or that it won't be too late, but I think you should put much higher probability in a lab solving a problem per unit time when they have good case studies.

It's the difference between solving instruction following when you have GPT3 to try instruction tuning on, vs only having GPT2 Small.

Yes, of course, sorry. I should have said: I think detecting them is (pretty easy and) far from sufficient. Indeed, we have detected them (sandbagging only somewhat) and yes this gives you something to try interventions on but, like, nobody knows how to solve e.g. alignment faking. I feel good about model organisms work but [pessimistic/uneasy/something] about the bouncing off alignment audits vibe.

Edit: maybe ideally I would criticize specific work as not-a-priority. I don’t have specific work to criticize right now (besides interp on the margin), but I don't really know what work has been motivated by "bouncing off bumpers" or "alignment auditing." For now, I’ll observe that the vibe is worrying to me and I worry about the focus on showing that a model is safe relative to improving safety.[1] And, like, I haven't heard a story for how alignment auditing will solve [alignment faking or sandbagging or whatever], besides maybe the undesired behavior derives from bad data or reward functions or whatever and it's just feasible to trace the undesired behavior back to that and fix it (this sounds false but I don't have good intuitions here and would mostly defer if non-Anthropic pe...

I think this depends a lot on the details of the detection and whether the failure mode you detect is the sort of thing that has substantial transfer with the actually-hard-to-detect misalignment failure modes we're most worried about.[1]

E.g., if you detect the AI making a serious exfiltration attempt once and you can't effectively proliferate this substantially or otherwise leverage this into studying the misalignment, then this isn't actually very helpful for finding solutions for the underlying misalignment. It might be pretty helpful for mitigating this misalignment, particularly from this AI, but that's different from preventing the misalignment.

However, if you instead detect the misalignment in a way that you expect is repeatable (as in, you can recatch the model if it's still misaligned after testing some training intervention) or which allows for getting a good understanding of the exact details of the misalignment, then it seems quite helpful.

It also could be pretty helpful if you get many rounds of iteration (with robust catching) on nearby failure modes, but this requires that you are deploying heavily misaligned models repeatedly.

I'm pretty skeptical of lots of transf...

I want to distinguish (1) finding undesired behaviors or goals from (2) catching actual attempts to subvert safety techniques or attack the company. I claim the posts you cite are about (2). I agree with those posts that (2) would be very helpful. I don't think that's what alignment auditing work is aiming at.[1] (And I think lower-hanging fruit for (2) is improving monitoring during deployment plus some behavioral testing in (fake) high-stakes situations.)

- ^

- The AI "brain scan" hope definitely isn't like this

- I don't think the alignment auditing paper is like this, but related things could be

Topic: workplace world-modeling

- A friend's manager tasked them with estimating ~10 parameters for a model. Choosing a single parameter very-incorrectly would presumably make the bottom line nonsense. My friend largely didn't understand the model and what the parameters meant; if you'd asked them "can you confidently determine what each of the parameters means" presumably they would have noticed the answer was no. (If I understand the situation correctly, it was crazy for the manager to expect my friend to do this task.) They should have told their manager "I can't do this" or "I'm uncertain about what these four parameters are; here's my best guess of a very precise description for each; please check this carefully and let me know." Instead I think they just gave their best guess for the parameters! (Edit: also I think they thought the model was bad but I don't think they told their manager that.)

- Another friend's manager tasked them with estimating how many hours it would take to evaluate applications for their org's hiring round. If my friend had known details of the application process, they could have estimated the number of applicants at each stage and the time to review each ap

My quick thoughts on why this happens.

(1) Time. You get asked to do something. You don't get the full info dump in the meeting, so you say yes and go off hopeful that you can find the stuff you need. Other responsibilities mean you don't get around to looking everything up until time has passed. It is at that stage that you realise the hiring process hasn't even been finalised, or that even one wrong parameter out of 10 confusing parameters would be bad. But now it's mildly awkward to go back and explain this: they gave this to you on Thursday, and it's now Monday.

(2) Story Noise. When you were told these stories by friends, they only gave the salient details, not a word-for-word repeat of everything that was said. But at the time they experienced it word by word, they didn't know what the story beats were yet. Some of those details were just distractions, but some of them might be semi-relevant. I know when I tell stories I simplify, and sometimes people assume specific solutions are possible or desirable because of details they don't know.

New page on AI companies' policy advocacy: https://ailabwatch.org/resources/company-advocacy/.

This page is the best collection on the topic (I'm not really aware of others), but I decided it's low-priority and so it's unpolished. If a better version would be helpful for you, let me know to prioritize it more.

Meta released the weights of a new model and published evals: Code World Model Preparedness Report. It's the best eval report Meta has published to date.

The basic approach is: do evals; find weaker capabilities than other open-weights models; infer that it's safe to release weights.

How good are the evals? Meh. Maybe it's OK if the evals aren't great, since the approach isn't "show the model lacks dangerous capabilities" but rather "show the model is weaker than other models."

One thing that bothered me was this sentence:

Our evaluation approach assumes that a potential malicious user is not an expert in large language model development; therefore, for this assessment we do not include malicious fine-tuning where a malicious user retrains the model to bypass safety post-training or enhance harmful capabilities.

This is totally wrong because for an open-weights model, anyone can (1) undo the safety post-training or (2) post-train on dangerous capabilities, then publish those weights for anyone else to use. I don't know whether any eval results are invalidated by (1): I think for most of the dangerous capability evals Meta uses, models generally don’t refuse them (in some case...

I was recently surprised to notice that Anthropic doesn't seem to have a commitment to publish its safety research.[1] It has a huge safety team but has only published ~5 papers this year (plus an evals report). So probably it has lots of safety research it's not publishing. E.g. my impression is that it's not publishing its scalable oversight and especially adversarial robustness and inference-time misuse prevention research.

Not-publishing-safety-research is consistent with Anthropic prioritizing the "win the race" goal over the "help all labs improve safety" goal, insofar as the research is commercially advantageous. (Insofar as it's not, not-publishing-safety-research is baffling.)

Maybe it would be better if Anthropic published ~all of its safety research, including product-y stuff like adversarial robustness such that others can copy its practices.

(I think this is not a priority for me to investigate but I'm interested in info and takes.)

[Edit: in some cases you can achieve most of the benefit with little downside except losing commercial advantage by sharing your research/techniques with other labs, nonpublicly.]

- ^

I failed to find good sources saying Anthropic publishes its saf

One argument against publishing adversarial robustness research is that it might make your systems easier to attack.

One thing I'd really like labs to do is encourage their researchers to blog about their thoughts on the future, on alignment plans, etc.

Another related but distinct thing is to have safety cases and an anytime alignment plan, and to publish redacted versions of them.

Safety cases: Argument for why the current AI system isn't going to cause a catastrophe. (Right now, this is very easy to do: 'it's too dumb')

Anytime alignment plan: Detailed exploration of a hypothetical in which a system trained in the next year turns out to be AGI, with particular focus on what alignment techniques would be applied.

One thing I'd really like labs to do is encourage their researchers to blog about their thoughts on the future, on alignment plans, etc.

Or, as a more minimal ask, they could avoid discouraging researchers from sharing thoughts implicitly due to various chilling effects and also avoid explicitly discouraging researchers.

Fwiw I am somewhat more sympathetic here to "the line between safety and capabilities is blurry, Anthropic has previously published some interpretability research that turned out to help someone else do some capabilities advances."

I have heard Anthropic is bottlenecked on having people with enough context and discretion to evaluate various things that are "probably fine to publish" but "not obviously fine enough to ship without taking at least a chunk of some busy person's time". I think in this case I basically take the claim at face value.

I do want to generally keep pressuring them to somehow resolve that bottleneck because it seems very important, but, I don't know that I disproportionately would complain at them about this particular thing.

(I'd also not be surprised if, while the above claim is true, Anthropic is still suspiciously dragging its feet disproportionately in areas that feel like they make more of a competitive sacrifice, but I wouldn't actively bet on it.)

Sounds fatebookable tho, so let's use ye Olde Fatebook Chrome extension:

(low probability because I expect it to still be murky/unclear)

Info on OpenAI's "profit cap" (friends and I misunderstood this so probably you do too):

In OpenAI's first investment round, profits were capped at 100x. The cap for later investments was neither 100x nor directly less based on OpenAI's valuation — it was just negotiated with the investor. (OpenAI LP (OpenAI 2019); archive of original.[1])

In 2021 Altman said the cap was "single digits now" (apparently referring to the cap for new investments, not just the remaining profit multiplier for first-round investors).

Reportedly the cap will increase by 20% per year starting in 2025 (The Information 2023; The Economist 2023); OpenAI has not discussed or acknowledged this change.
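To get a feel for what a 20% annual increase would do to a cap, here is a minimal sketch. It assumes the increase compounds annually, which the reporting cited above does not spell out, and it uses the first-round 100x cap as the starting point purely for illustration. The function name and its parameters are hypothetical, not anything OpenAI has published.

```python
def cap_multiple(initial_cap: float, years_of_growth: int,
                 annual_growth: float = 0.20) -> float:
    """Return the cap multiple after `years_of_growth` annual increases.

    Assumption: the reported 20% increase compounds once per year.
    """
    if years_of_growth < 0:
        raise ValueError("years_of_growth must be non-negative")
    return initial_cap * (1.0 + annual_growth) ** years_of_growth


if __name__ == "__main__":
    # Illustrative starting point: the 100x cap from the first round.
    for n in (0, 1, 5, 10):
        print(f"after {n:2d} increases: {cap_multiple(100.0, n):.1f}x")
```

Under these assumptions a cap more than doubles after four increases and is roughly six times its starting value after ten, so a cap that grows this way binds much less tightly over time.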

Edit: how employee equity works is not clear to me.

Edit: I'd characterize OpenAI as a company that tends to negotiate profit caps with investors, not a "capped-profit company."

economic returns for investors and employees are capped (with the cap negotiated in advance on a per-limited partner basis). Any excess returns go to OpenAI Nonprofit. Our goal is to ensure that most of the value (monetary or otherwise) we create if successful benefits everyone, so we think this is an important first step. Returns fo

Some of my friends are signal-boosting this new article: 60 U.K. Lawmakers Accuse Google of Breaking AI Safety Pledge. See also the open letter. I don't feel good about this critique or the implicit ask.

1. Sharing information on capabilities is good but public deployment is a bad time for that, in part because most risk comes from internal deployment.

2. Google didn't necessarily even break a commitment? The commitment mentioned in the article is to "publicly report model or system capabilities." That doesn't say it has to be done at the time of public deployment.

   - The White House voluntary commitments included a commitment to "publish reports for all new significant model public releases"; same deal there.

   - Possibly Google broke a different commitment (mentioned in the open letter): "Assess the risks posed by their frontier models or systems across the AI lifecycle, including before deploying that model or system." Depends on your reading of "assess the risks" plus facts which I don't recall off the top of my head.

3. Other companies are doing far worse in this dimension. At worst Google is 3rd-best in publishing eval results. Meta and xAI are far worse.

2. Google didn't necessarily even break a commitment? The commitment mentioned in the article is to "publicly report model or system capabilities." That doesn't say it has to be done at the time of public deployment.

This document linked on the open letter page gives a precise breakdown of exactly what the commitments were and how Google broke them (both in spirit and by the letter).[1] The summary is this:

- Google violated the spirit of commitment I by publishing its first safety report almost a month after public availability and not mentioning external testing in their initial report.

- Google explicitly violated commitment VIII by not stating whether governments are involved in safety testing, even after being asked directly by reporters.

But in fact the letter actually understates the degree to which Google DeepMind violated the commitments. The real story from this article is that GDM confirmed to Time that they didn't provide any pre-deployment access to UK AISI:

However, Google says it only shared the model with the U.K. AI Security Institute after Gemini 2.5 Pro was released on March 25.

If UK AISI doesn't have pre-deployment access, a large portion of their whole raison d'être ...

3. Other companies are doing far worse in this dimension. At worst Google is 3rd-best in publishing eval results. Meta and xAI are far worse.

Some reasons for focusing on Google DeepMind in particular:

- The letter was organized by PauseAI UK and signed by UK politicians. GDM is the only frontier AI company headquartered in the UK.

- Meta and xAI already have a bad reputation for their safety practices, while GDM had a comparatively good reputation and most people were unaware of their violation of the Frontier AI Safety Commitments.

I think I'd prefer "within a month after external deployment" over "by the time of external deployment" because I expect the latter will lead to (1) evals being rushed and (2) safety people being forced to prioritize poorly.

Really happy to see the Anthropic letter. It clearly states their key views on AI risk and the potential benefits of SB 1047. Their concerns seem fair to me: overeager enforcement of the law could be counterproductive. While I endorse the bill on the whole and wish they would too (and I think their lack of support for the bill is likely partially influenced by their conflicts of interest), this seems like a thoughtful and helpful contribution to the discussion.

It clearly states their key views on AI risk

Really? The letter just talks about catastrophic misuse risk, which I hope is not representative of Anthropic's actual priorities.

I think the letter is overall good, but this specific dimension seems among the weakest parts of the letter.

Agreed, sloppy phrasing on my part. The letter clearly states some of Anthropic's key views, but doesn't discuss other important parts of their worldview. Overall this is much better than some of their previous communications and the OpenAI letter, so I think it deserves some praise, but your caveat is also important.