"Scaling breaks down", they say. By which they mean one of the following wildly different claims with wildly different implications:

- When you train on a normal dataset, with more compute/data/parameters, subtract the irreducible entropy from the loss, and then plot in a log-log plot: you don't see a straight line anymore.

- Same setting as before, but you see a straight line; it's just that downstream performance doesn't improve.

- Same setting as before, and downstream performance improves, but it improves so slowly that the economics don't favor further scaling this type of setup over doing something else.

- A combination of one of the last three items and "btw., we used synthetic data and/or other more high-quality data, still didn't help".

- Nothing in the realm of "pretrained models" and "reasoning models like o1" and "agentic models like Claude with computer use" profits from a scale-up in a reasonable sense.

- Nothing which can be scaled up in the next 2-3 years, when training clusters are mostly locked in, will demonstrate a big enough success to motivate the next scale of clusters costing around $100 billion.

Be precise. See also.

This is a just ask.

Also, even though it's not locally rhetorically convenient [where making an isolated demand for rigor of people making claims like "scaling has hit a wall [therefore AI risk is far]" that are inconvenient for AInotkilleveryoneism is locally rhetorically convenient for us], we should demand the same specificity of people who are claiming that "scaling works", so that we end up with a correct world-model and so that people who just want to build AGI see that we are fair.

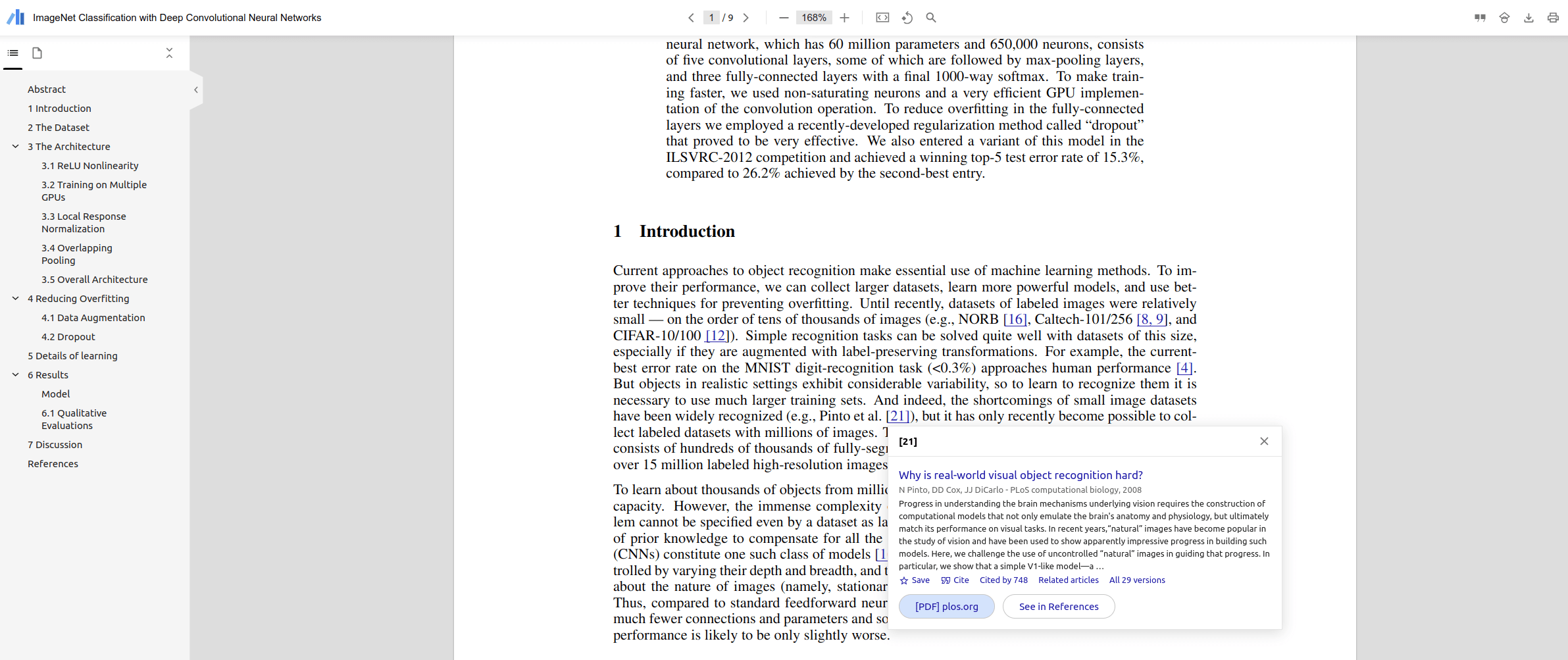

You should all be using the "Google Scholar PDF reader extension" for Chrome.

Features I like:

- References are linked and clickable

- You get a table of contents

- You can move back after clicking a link with Alt+left

Screenshot:

https://www.wsj.com/tech/ai/californias-gavin-newsom-vetoes-controversial-ai-safety-bill-d526f621

“California Gov. Gavin Newsom has vetoed a controversial artificial-intelligence safety bill that pitted some of the biggest tech companies against prominent scientists who developed the technology.

The Democrat decided to reject the measure because it applies only to the biggest and most expensive AI models and leaves others unregulated, according to a person with knowledge of his thinking”

@Zach Stein-Perlman which part of the comment are you skeptical of? Is it the veto itself, or is it this part?

“The Democrat decided to reject the measure because it applies only to the biggest and most expensive AI models and leaves others unregulated, according to a person with knowledge of his thinking”

There are a few sentences in Anthropic's "conversation with our cofounders" regarding RLHF that I found quite striking:

Dario (2:57): "The whole reason for scaling these models up was that [...] the models weren't smart enough to do RLHF on top of. [...]"

Chris: "I think there was also an element of, like, the scaling work was done as part of the safety team that Dario started at OpenAI because we thought that forecasting AI trends was important to be able to have us taken seriously and take safety seriously as a problem."

Dario: "Correct."

That LLMs were scaled up partially in order to do RLHF on top of them is something I had previously heard from an OpenAI employee, but I wasn't sure it was true. This conversation seems to confirm it.

we thought that forecasting AI trends was important to be able to have us taken seriously

This might be the most dramatic example ever of forecasting affecting the outcome.

Similarly, I'm concerned that a lot of alignment people are putting work into evals and benchmarks which may be having some accelerating effect on the AI capabilities they are trying to understand.

"That which is measured improves. That which is measured and reported improves exponentially."

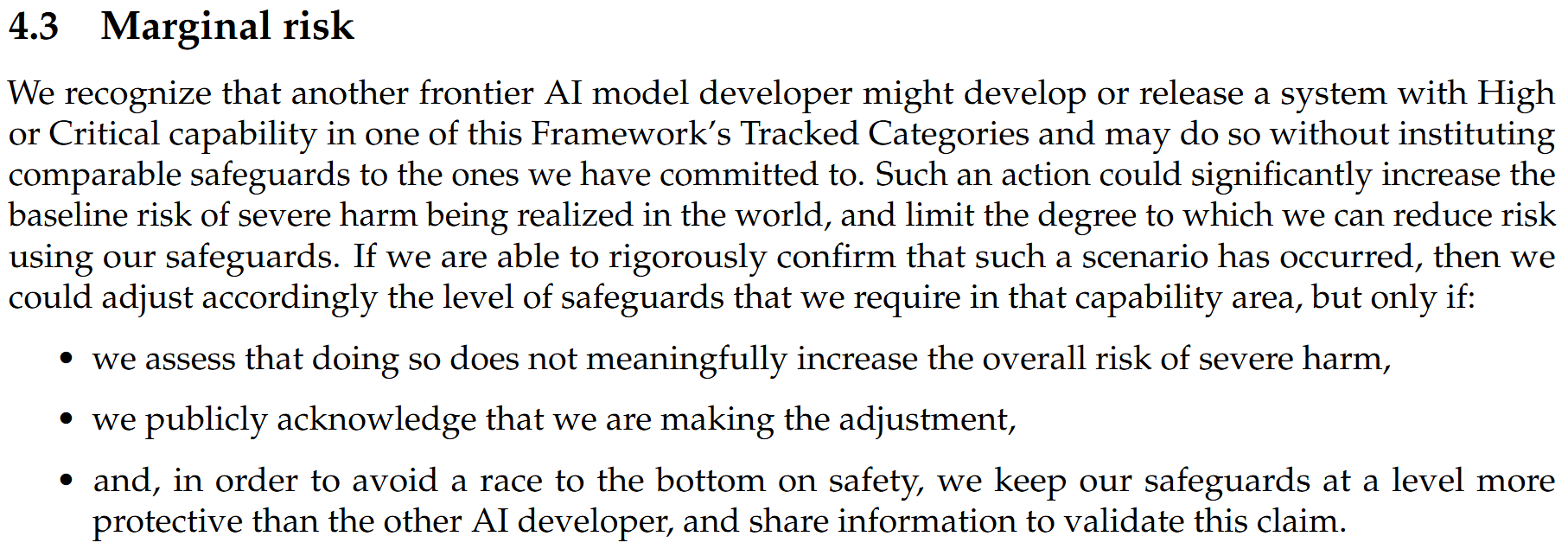

Are the straight lines from scaling laws really bending? People are saying they are, but maybe that's just an artefact of the fact that the cross-entropy is bounded below by the data entropy. If you subtract the data entropy, then you obtain the Kullback-Leibler divergence, which is bounded below by zero, so on a log-log plot its logarithm can actually approach negative infinity. I visualized this with the help of ChatGPT:

Here, f represents the Kullback-Leibler divergence, and g the cross-entropy loss with the entropy offset.
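A minimal numerical sketch of the same point, assuming an illustrative loss curve L(N) = E + A·N^(-α) with made-up constants E = 1.7, A = 400, α = 0.3 (not fitted to any real model; `loss`, `local_slope`, `raw_slope` and `excess_slope` are hypothetical helper names). The local log-log slope of the raw loss drifts toward zero as N grows, which looks like "bending", while the slope of L − E stays at −α.

```python
import math

# Illustrative constants (hypothetical, not fitted to any real model).
E = 1.7      # irreducible entropy of the data
A = 400.0    # scale coefficient
ALPHA = 0.3  # power-law exponent


def loss(n: float) -> float:
    """Cross-entropy loss as a function of model size n."""
    return E + A * n ** (-ALPHA)


def local_slope(f, n: float, factor: float = 1.01) -> float:
    """Finite-difference estimate of d log f / d log n around n."""
    return (math.log(f(n * factor)) - math.log(f(n))) / math.log(factor)


def raw_slope(n: float) -> float:
    """Log-log slope of the raw loss, including the entropy offset."""
    return local_slope(loss, n)


def excess_slope(n: float) -> float:
    """Log-log slope of the reducible part L - E (the KL term)."""
    return local_slope(lambda m: loss(m) - E, n)


if __name__ == "__main__":
    for n in (1e6, 1e8, 1e10, 1e12):
        print(f"N={n:.0e}  slope(log L)={raw_slope(n):+.3f}  "
              f"slope(log(L-E))={excess_slope(n):+.3f}")
```

The raw slope shrinks in magnitude with N even though nothing about the underlying power law has changed.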

Isn't an intercept offset already usually included in the scaling laws and so can't be misleading anyone? I didn't think anyone was fitting scaling laws which allow going to exactly 0 with no intrinsic entropy.
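For concreteness, a minimal sketch of fitting a law that already includes the irreducible term, L(N) = E + A·N^(-α). It assumes noise-free synthetic data from hypothetical constants, and uses a simple approach chosen only for illustration (grid search over E, then an ordinary least-squares line through log(L − E) versus log N); it is not the procedure of any particular scaling-law paper, and `linear_fit` / `fit_with_offset` are illustrative names.

```python
import math


def linear_fit(xs, ys):
    """Ordinary least squares y = a + b*x; returns (a, b, sum of squared residuals)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    sse = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return a, b, sse


def fit_with_offset(ns, losses, grid_steps=2000):
    """Fit L = E + A * N**(-alpha) by scanning E over [0, min(L)).

    For each candidate E, log(L - E) should be linear in log N;
    keep the E whose line fits best. Returns (E, A, alpha).
    """
    xs = [math.log(n) for n in ns]
    upper = min(losses)
    best = None
    for i in range(grid_steps):
        e = upper * i / grid_steps  # always < min(losses), so L - E > 0
        ys = [math.log(l - e) for l in losses]
        a, b, sse = linear_fit(xs, ys)
        if best is None or sse < best[0]:
            best = (sse, e, math.exp(a), -b)
    _, e, amp, alpha = best
    return e, amp, alpha


if __name__ == "__main__":
    # Synthetic, noise-free data from hypothetical constants E=1.7, A=400, alpha=0.3.
    ns = [10.0 ** k for k in range(6, 12)]
    losses = [1.7 + 400.0 * n ** -0.3 for n in ns]
    print(fit_with_offset(ns, losses))
```

With the offset in the model, the recovered exponent matches the generating one, so the fitted curve cannot be misread as bending.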

Why I think scaling laws will continue to drive progress

Epistemic status: This is a thought I have had for a while. I never discussed it with anyone in detail; a brief conversation could convince me otherwise.

According to recent reports there seem to be some barriers to continued scaling. We don't know what exactly is going on, but it seems like scaling up base models doesn't bring as much new capability as people hope.

However, I think probably they're still in some way scaling the wrong thing: The model learns to predict a static dataset on the internet; however, what it needs to do later is to interact with users and the world. For performing well in such a task, the model needs to understand the consequences of its actions, which means modeling interventional distributions P(X | do(A)) instead of static data P(X | Y). This is related to causal confusion as an argument against the scaling hypothesis.
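A toy illustration of why the conditional distribution learned from static data can differ from the interventional one. It assumes a hypothetical structural causal model with binary variables: a hidden confounder U influences both the action A and the outcome X, and A also affects X. All probabilities are made up. Conditioning uses the joint distribution; intervening cuts the U → A edge and keeps P(U) fixed (the backdoor adjustment).

```python
from itertools import product

# Hypothetical SCM: U -> A, U -> X, A -> X (all binary, made-up numbers).
P_U = {0: 0.5, 1: 0.5}
P_A1_GIVEN_U = {0: 0.1, 1: 0.9}                                         # P(A=1 | U=u)
P_X1_GIVEN_A_U = {(0, 0): 0.1, (0, 1): 0.7, (1, 0): 0.2, (1, 1): 0.8}  # P(X=1 | A=a, U=u)


def p_a(a, u):
    return P_A1_GIVEN_U[u] if a == 1 else 1 - P_A1_GIVEN_U[u]


def p_x(x, a, u):
    p1 = P_X1_GIVEN_A_U[(a, u)]
    return p1 if x == 1 else 1 - p1


def observational(x, a):
    """P(X=x | A=a): condition on A in the joint distribution."""
    joint = {(uu, aa, xx): P_U[uu] * p_a(aa, uu) * p_x(xx, aa, uu)
             for uu, aa, xx in product((0, 1), repeat=3)}
    num = sum(p for (uu, aa, xx), p in joint.items() if aa == a and xx == x)
    den = sum(p for (uu, aa, xx), p in joint.items() if aa == a)
    return num / den


def interventional(x, a):
    """P(X=x | do(A=a)): force A=a while keeping the marginal P(U)."""
    return sum(P_U[u] * p_x(x, a, u) for u in (0, 1))


if __name__ == "__main__":
    for a in (0, 1):
        print(f"A={a}: P(X=1 | A)={observational(1, a):.2f}  "
              f"P(X=1 | do(A))={interventional(1, a):.2f}")
```

With these numbers the observational data make the action look far more effective (0.74 vs 0.16) than it is under intervention (0.50 vs 0.40), because A=1 is mostly taken when U=1.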

This viewpoint suggests that if big labs figure out how to predict observations in an online way, through ongoing interactions of the models with users / the world, then this should drive further progress. It's possible that labs are already doing this, but I'm not aware of it, and...

I'm only now really learning about Solomonoff induction. I think I didn't look into it earlier since I often heard things along the lines of "It's not computable, so it's not relevant".

But...

- It's lower semicomputable: You can actually approximate it arbitrarily well, you just don't know how good your approximations are.

- It predicts well: It's provably a really good predictor under the reasonable assumption of a computable world.

- It's how science works: You focus on simple hypotheses and discard/reweight them according to Bayesian reasoning.

- It's mathematically precise.
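Solomonoff induction itself is incomputable, but its structure (a Bayesian mixture over programs with prior weight 2^(-length), discarding hypotheses that contradict the data) can be sketched on a tiny, finite, hypothetical hypothesis class. Here each "program" is a binary pattern repeated forever, and its "length" is just the pattern length, standing in for real program length on a universal Turing machine; the prior 2^(-length) is renormalized over this finite class. This is a toy, not an approximation of the real thing, and all function names are illustrative.

```python
from itertools import product


def hypotheses(max_len):
    """Toy class: repeat a binary pattern forever; prior weight 2**(-len(pattern))."""
    for length in range(1, max_len + 1):
        for bits in product("01", repeat=length):
            yield "".join(bits), 2.0 ** (-length)


def generate(pattern, n):
    """First n bits produced by repeating `pattern`."""
    return (pattern * (n // len(pattern) + 1))[:n]


def predict_next(observed, max_len=8):
    """Posterior probability that the next bit is '1'.

    Deterministic hypotheses have likelihood 1 if they reproduce `observed`
    and 0 otherwise, so Bayes' rule drops inconsistent hypotheses and
    renormalizes the prior weights of the survivors.
    """
    n = len(observed)
    total = 0.0
    weight_one = 0.0
    for pattern, prior in hypotheses(max_len):
        if generate(pattern, n) == observed:
            total += prior
            if generate(pattern, n + 1)[-1] == "1":
                weight_one += prior
    if total == 0.0:
        raise ValueError("no hypothesis in the class explains the data")
    return weight_one / total


if __name__ == "__main__":
    print(predict_next("010101"))
    print(predict_next("0000"))
```

After "010101", the short pattern "01" dominates the posterior, so the predicted probability of a "1" comes out at 1/23; equivalent longer patterns such as "0101" are counted separately, as programs are in the real mixture.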

What more do you want?

The fact that my master's degree in AI at the UvA didn't teach this to us seems like a huge failure.

Unfortunately, I don't think that "this is how science works" is really true. Science focuses on having a simple description of the world, while Solomonoff induction focuses on the description of the world plus your place in it being simple.

This leads to some really weird consequences, which people sometimes refer to as Solomonoff induction being malign.

What more do you want?

Some degree of real-life applicability. If your mathematically precise framework nonetheless requires way more computing power than is available around you (or, in some cases, in the entire observable universe) to approximate it properly, you have a serious practical issue.

It's how science works: You focus on simple hypotheses and discard/reweight them according to Bayesian reasoning.

The percentage of scientists I know who use explicit Bayesian updating[1] to reweigh hypotheses is a flat 0%. They use Occam's razor-type intuitions, and those intuitions can be formalized using Solomonoff induction,[2] but that doesn't mean they are using the latter.

reasonable assumption of a computable world

Reasonable according to what? Substance-free vibes from the Sequences? The map is not the territory. A simplifying mathematical description need not represent the ontologically correct way of identifying something in the territory.

It predicts well: It's provably a really good predictor

So can you point to any example of anyone ever predicting anything using it?

- ^ Or universal Turing machines to compute the description lengths of programs meant to represent real-w

It's how science works: You focus on simple hypotheses and discard/reweight them according to Bayesian reasoning.

There are some ways in which Solomonoff induction and science are analogous[1], but there are also many important ways in which they are disanalogous. Here are some ways in which they are disanalogous:

- A scientific theory is much less like a program that prints (or predicts) an observation sequence than it is like a theory in the sense used in logic. Like, a scientific theory provides a system of talking which involves some sorts of things (eg massive objects) about which some questions can be asked (eg each object has a position and a mass, and between any pair of objects there is a gravitational force) with some relations between the answers to these questions (eg we have an axiom specifying how the gravitational force depends on the positions and masses, and an axiom specifying how the second derivative of the position relates to the force).[2]

- Science is less in the business of predicting arbitrary observation sequences, and much more in the business of letting one [figure out]/understand/exploit very particular things — like, the physics someone knows is go

It predicts well

Versus: it only predicts.

Scientific epistemology has a distinction between realism and instrumentalism. According to realism, a theory tells you what kind of entities do and do not exist. According to instrumentalism, a theory is restricted to predicting observations. If a theory is empirically adequate, if it makes only correct predictions within its domain, that's good enough for instrumentalists. But the realist is faced with the problem that multiple theories can make good predictions, yet imply different ontologies, and only one ontology can be ultimately correct, so some criterion beyond empirical adequacy is needed.

On the face of it, Solomonoff Inductors contain computer programmes, not explanations, not hypotheses and not descriptions. (I am grouping explanations, hypotheses and beliefs as things which have a semantic interpretation, which say something about reality. In particular, physics has a semantic interpretation in a way that maths does not.)

The Yudkowskian version of Solomonoff switches from talking about programs to talking about hypotheses as if they are obviously equivalent. Is it obvious? There's a vague and loose sense in which physical theories...

https://www.washingtonpost.com/opinions/2024/07/25/sam-altman-ai-democracy-authoritarianism-future/

Not sure if this was discussed at LW before. This is an opinion piece by Sam Altman, which sounds like a toned down version of "situational awareness" to me.

https://x.com/sama/status/1813984927622549881

According to Sam Altman, GPT-4o mini is much better than text-davinci-003 was in 2022, but 100 times cheaper. In general, we see increasing competition to produce smaller-sized models with great performance (e.g., Claude Haiku and Sonnet, Gemini 1.5 Flash and Pro, maybe even the full-sized GPT-4o itself). I think this trend is worth discussing. Some comments (mostly just quick takes) and questions I'd like to have answers to:

- Should we expect this trend to continue? How much more efficiency gain is still possible? Can we expect another 100x efficiency gain in the coming years? Andrej Karpathy expects that we might see a GPT-2-sized "smart" model.

- What's the technical driver behind these advancements? Andrej Karpathy thinks it is based on synthetic data: Larger models curate new, better training data for the next generation of small models. Might there also be architectural changes? Inference tricks? Which of these advancements can continue?

- Why are companies pushing into small models? I think in hindsight, this seems easy to answer, but I'm curious what others think: If you have a GPT-4 level model that is much, much cheaper, then you can sell

To make a Chinchilla optimal model smaller while maintaining its capabilities, you need more data. At 15T tokens (the amount of data used in Llama 3), a Chinchilla optimal model has 750b active parameters, and training it invests 7e25 FLOPs (Gemini 1.0 Ultra or 4x original GPT-4). A larger $1 billion training run, which might be the current scale that's not yet deployed, would invest 2e27 FP8 FLOPs if using H100s. A Chinchilla optimal run for these FLOPs would need 80T tokens when using unique data.
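The arithmetic in this paragraph can be checked with two standard approximations: training compute C ≈ 6·N·D for N active parameters and D tokens, and the rounded Chinchilla rule of thumb D ≈ 20·N. A minimal sketch; the helper names are illustrative, and the FLOP figures are the ones stated above.

```python
import math

TOKENS_PER_PARAM = 20  # rounded Chinchilla rule of thumb


def train_flops(params: float, tokens: float) -> float:
    """Standard dense-transformer approximation C = 6 * N * D."""
    return 6.0 * params * tokens


def chinchilla_params_for_tokens(tokens: float) -> float:
    return tokens / TOKENS_PER_PARAM


def chinchilla_tokens_for_flops(flops: float) -> float:
    # C = 6 * N * (20 * N) = 120 * N**2  =>  N = sqrt(C / 120), D = 20 * N
    params = math.sqrt(flops / (6.0 * TOKENS_PER_PARAM))
    return TOKENS_PER_PARAM * params


if __name__ == "__main__":
    n = chinchilla_params_for_tokens(15e12)
    print(f"{n:.3g} params, {train_flops(n, 15e12):.3g} FLOPs")
    print(f"{chinchilla_tokens_for_flops(2e27):.3g} tokens for 2e27 FLOPs")
```

This gives 750B parameters and about 6.75e25 FLOPs for 15T tokens, and roughly 82T tokens for a 2e27 FLOPs run, consistent with the rounded figures in the text.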

Starting with a Chinchilla optimal model, if it's made 3x smaller, maintaining performance requires training it on 9x more data, so that it needs 3x more compute. That's already too much data, and we are only talking 3x smaller. So we need ways of stretching the data that is available. By repeating data up to 16 times, it's possible to make good use of 100x more compute than by only using unique data once. So with say 2e26 FP8 FLOPs (a $100 million training run on H100s), we can train a 3x smaller model that matches performance of the above 7e25 FLOPs Chinchilla optimal model while needing only about 27T tokens of unique data (by repeating them 5 times) instead of 135T unique tokens, and...
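Under the paragraph's own assumption that shrinking a Chinchilla-optimal model by a factor k needs k² more training tokens to keep performance (so compute grows by k), the k = 3 numbers work out as below. The 5 repetitions of unique data are taken from the text; variable names are illustrative.

```python
BASE_PARAMS = 750e9   # Chinchilla-optimal model for 15T tokens (from the text)
BASE_TOKENS = 15e12
SHRINK = 3            # make the model 3x smaller
EPOCHS = 5            # repeat the unique data 5 times (from the text)


def train_flops(params, tokens):
    """Standard approximation C = 6 * N * D."""
    return 6.0 * params * tokens


small_params = BASE_PARAMS / SHRINK        # 250B parameters
small_tokens = BASE_TOKENS * SHRINK ** 2   # 9x more data: 135T tokens seen
unique_tokens = small_tokens / EPOCHS      # 27T unique tokens
base_flops = train_flops(BASE_PARAMS, BASE_TOKENS)
small_flops = train_flops(small_params, small_tokens)

if __name__ == "__main__":
    print(f"base run:  {base_flops:.3g} FLOPs")
    print(f"small run: {small_flops:.3g} FLOPs "
          f"({small_flops / base_flops:.1f}x), {unique_tokens:.3g} unique tokens")
```

The smaller model's run comes to about 2e26 FLOPs, 3x the original 6.75e25, matching the "$100 million on H100s" scale in the text.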

Is there a way to filter on LessWrong for all posts from the alignment forum?

I often like to just see what's on the alignment forum, but I dislike that I don't see most LessWrong comments when viewing those posts on the alignment forum.

Related: in my ideal world there would be a wrapper version of LessWrong which is like the alignment forum (just focused on transformative AI) but where anyone can post. By default, I'd probably end up recommending people interested in AI go to this because the other content on LessWrong isn't relevant to them.

One proposal for this:

- Use a separate url (e.g. aiforum.com or you could give up on the alignment forum as is and use that existing url).

- This is a shallow wrapper on LW in the same way the alignment forum is, but anyone can post.

- All posts tagged with AI are crossposted (and can maybe be de-crossposted by a moderator if it's not actually relevant). (And if you post using the separate url, it automatically is also on LW and is always tagged with AI.)

- Maybe you add some mechanism for tagging quick takes or manually cross-posting them (similar to how you can cross-post quick takes to the alignment forum now).

- Ideally, the home page of the website defaults to putting more visual emphasis on key research and key explanations/intros, rather than focusing as much on the latest events.

Yeah, I think something like this might make sense to do one of these days. I am not super enthused with the current AI Alignment Forum setup.

New Bloomberg article on data center buildouts pitched to the US government by OpenAI. Quotes:

- “the startup shared a document with government officials outlining the economic and national security benefits of building 5-gigawatt data centers in various US states, based on an analysis the company engaged with outside experts on. To put that in context, 5 gigawatts is roughly the equivalent of five nuclear reactors, or enough to power almost 3 million homes.”

- “Joe Dominguez, CEO of Constellation Energy Corp., said he has heard that Altman is talking about ...

From $4 billion for a 150-megawatt cluster, I get 37 gigawatts for a $1 trillion cluster, or seven 5-gigawatt datacenters (if they solve geographically distributed training). Future GPUs will consume more power per GPU (though a transition to liquid cooling seems likely), but the corresponding fraction of the datacenter might also cost more. This is only a training system (other datacenters will be built for inference), and there is more than one player in this game, so the 100-gigawatt figure seems reasonable for this scenario.

Current best deployed models are about 5e25 FLOPs (possibly up to 1e26 FLOPs); very recent 100K-H100-scale systems can train models for about 5e26 FLOPs in a few months. Building datacenters at the 1 gigawatt scale already seems to be in progress, and plausibly the models from these will start arriving in 2026. If we assume B200s, that's enough to 15x the FLOP/s compared to 100K H100s, for 7e27 FLOPs in a few months, which is enough for models with 5 trillion active parameters (at 50 tokens/parameter).
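The first estimate scales the $4 billion / 150 MW reference point linearly, i.e. it assumes cost is proportional to power. A quick check, with illustrative constant names:

```python
import math

REF_COST_USD = 4e9       # reference cluster cost (from the text)
REF_POWER_MW = 150.0     # reference cluster power (from the text)
TARGET_COST_USD = 1e12   # $1 trillion cluster
SITE_POWER_GW = 5.0      # size of one datacenter campus


def power_for_budget(cost_usd: float) -> float:
    """Power in GW, assuming cost scales linearly with power."""
    return cost_usd / REF_COST_USD * REF_POWER_MW / 1000.0


power_gw = power_for_budget(TARGET_COST_USD)
full_sites = math.floor(power_gw / SITE_POWER_GW)

if __name__ == "__main__":
    print(f"{power_gw} GW, i.e. {full_sites} full {SITE_POWER_GW:g} GW sites")
```

This gives 37.5 GW, or seven full 5 GW sites with some capacity left over.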
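The last step follows from C ≈ 6·N·D with D = 50·N, so C = 300·N² and N = sqrt(C / 300). A quick check, taking the 5e26 FLOPs for 100K H100s and the 15x factor as given in the text (names are illustrative):

```python
import math

H100_CLUSTER_FLOPS = 5e26  # ~100K H100s over a few months (from the text)
B200_SPEEDUP = 15          # FLOP/s multiplier for a ~1 GW B200 system (from the text)
TOKENS_PER_PARAM = 50


def params_for_flops(flops: float, tokens_per_param: float) -> float:
    # C = 6 * N * D with D = tokens_per_param * N  =>  N = sqrt(C / (6 * tokens_per_param))
    return math.sqrt(flops / (6.0 * tokens_per_param))


flops = H100_CLUSTER_FLOPS * B200_SPEEDUP
params = params_for_flops(flops, TOKENS_PER_PARAM)

if __name__ == "__main__":
    print(f"{flops:.2g} FLOPs -> {params:.2g} active parameters")
```

15 × 5e26 = 7.5e27 FLOPs, which corresponds to 5e12 active parameters; with the rounded 7e27 figure it is about 4.8e12.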

The 5 gigawatts clusters seem more speculative for now, though o1-like post-training promises sufficient investment, once it's demonstrated on top of 5e26+ FLOPs base models next year....

Zeta Functions in Singular Learning Theory

In this shortform, I very briefly explain my understanding of how zeta functions play a role in the derivation of the free energy in singular learning theory. This is entirely based on slide 14 of the SLT low 4 talk of the recent summit on SLT and Alignment, so feel free to ignore this shortform and simply watch the video.

The story is this: we have a prior φ(w), a model p(x | w), and there is an unknown true distribution q(x). For model selection, we are interested in the evidence of our model for a da...
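For orientation, here are the standard statements from Watanabe's singular learning theory that this story leads to. They are textbook results rather than a transcription of the slide. Writing φ(w) for the prior, p(x | w) for the model, q(x) for the true distribution, and x_1, …, x_n for the data, in the realizable case:

$$
\begin{aligned}
Z_n &= \int \varphi(w) \prod_{i=1}^{n} p(x_i \mid w)\, dw, \qquad F_n = -\log Z_n, \\
K(w) &= \int q(x) \log \frac{q(x)}{p(x \mid w)}\, dx, \qquad \zeta(z) = \int K(w)^{z}\, \varphi(w)\, dw, \\
F_n &= n L_n(w_0) + \lambda \log n - (m - 1) \log \log n + O_p(1).
\end{aligned}
$$

Here L_n(w) = -(1/n) Σ_i log p(x_i | w) is the empirical loss, w_0 is a true parameter (K(w_0) = 0), and the zeta function, holomorphic for Re z > 0 and extended meromorphically, has its largest pole at z = -λ with order m. λ is the learning coefficient, which replaces d/2 from the regular (BIC) case.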

I think it would be valuable if someone would write a post that does (parts of) the following:

- summarize the landscape of work on getting LLMs to reason.

- sketch out the tree of possibilities for how o1 was trained and how it works in inference.

- select a “most likely” path in that tree and describe in detail a possibility for how o1 works.

I would find it valuable since it seems important for external safety work to know how frontier models work; otherwise, it is impossible to point out theoretical or conceptual flaws in their alignment approaches.

O...

40-minute podcast with Anca Dragan, who leads safety and alignment at Google DeepMind: https://youtu.be/ZXA2dmFxXmg?si=Tk0Hgh2RCCC0-C7q

18-month postdoc position in Singular Learning Theory for Machine Learning Models in Amsterdam: https://werkenbij.uva.nl/en/vacancies/postdoc-position-in-singular-learning-theory-for-machine-learning-models-netherlands-14741

The PI Patrick Forré is an experienced mathematician with a past background in arithmetic geometry, and he also has extensive experience in machine learning. I recommend applying! Feel free to ask me a question if you want, Patrick has been my PhD advisor.

I’m confused by the order LessWrong shows posts to me: I’d expect to see them in chronological order if I select them by “Latest”.

But as you see, they were posted 1d, 4d, 21h, etc. ago.

How can I see them chronologically?

After the US election, the Twitter competitor Bluesky suddenly got a surge of new users:

This is my first comment on my own, i.e., Leon Lang's, shortform. It doesn't have any content, I just want to test the functionality.

Edit: This is now obsolete with our NAH distillation.

Making the Telephone Theorem and Its Proof Precise

This short form distills the Telephone Theorem and its proof. The short form will thereby not at all be "intuitive"; the only goal is to be mathematically precise at every step.

Let X_0, X_1, X_2, … be jointly distributed finite random variables, meaning they are all functions X_i : Ω → 𝒳_i starting from the same finite sample space Ω with a given probability distribution P and into respective finite value spaces 𝒳_i. Additionally, assume that these r...
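A small numerical sketch of the phenomenon the Telephone Theorem is about, assuming a hypothetical Markov chain X_0 → X_1 → X_2 → … in which every step uses one fixed, made-up 3-state transition matrix. By the data processing inequality, I(X_0; X_n) can only decrease with n. With this matrix, states 0 and 1 slowly mix with each other but never reach state 2, so the mutual information converges not to zero but to the entropy of the conserved quantity "is X in state 2?", the kind of information that survives arbitrarily long chains. Names such as `information_curve` are illustrative.

```python
import math

# Hypothetical transition matrix: T[i][j] = P(X_{k+1} = j | X_k = i).
# States 0 and 1 mix slowly; state 2 is closed off from them.
T = [
    [0.9, 0.1, 0.0],
    [0.1, 0.9, 0.0],
    [0.0, 0.0, 1.0],
]
P0 = [1 / 3, 1 / 3, 1 / 3]  # distribution of X_0


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def mutual_information(p0, kernel):
    """I(X_0; X_n) in bits, where kernel[i][j] = P(X_n = j | X_0 = i)."""
    pn = [sum(p0[i] * kernel[i][j] for i in range(len(p0))) for j in range(len(kernel[0]))]
    info = 0.0
    for i, pi in enumerate(p0):
        for j, kij in enumerate(kernel[i]):
            if pi > 0 and kij > 0:
                info += pi * kij * math.log2(kij / pn[j])
    return info


def information_curve(steps):
    """[I(X_0; X_1), ..., I(X_0; X_steps)] for the chain above."""
    kernel = T
    curve = []
    for _ in range(steps):
        curve.append(mutual_information(P0, kernel))
        kernel = matmul(kernel, T)  # n-step kernel is T**n
    return curve


if __name__ == "__main__":
    limit = -(1 / 3) * math.log2(1 / 3) - (2 / 3) * math.log2(2 / 3)
    curve = information_curve(50)
    print(f"I(X0;X1)={curve[0]:.4f}  I(X0;X50)={curve[-1]:.4f}  limit={limit:.4f}")
```

The curve starts around 1.27 bits and flattens out near 0.918 bits, the binary entropy of the 1/3 chance of starting in state 2.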

These are rough notes trying (but not really succeeding) to deconfuse me about Alex Turner's diamond proposal. The main thing I wanted to clarify: what's the idea here for how the agent remains motivated by diamonds even while doing very non-diamond related things like "solving mazes" that are required for general intelligence?

- Summarizing Alex's summary:

    - Multimodal SSL initialization

    - recurrent state, action head

    - imitation learning on humans in simulation, + sim2real

    - low sample complexity

    - Humans move toward diamonds

    - policy-gradient RL: reward the AI for getting n

This is my first short form. It doesn't have any content, I just want to test the functionality.