Popular Comments

Recent Discussion

People sometimes ask me if I think quantum computing will impact AGI development, and my usual answer has been that it likely won't play much of a role. However, photonics likely will.

Photonics was one of the subfields I studied and worked in while I was in academia doing physics.

In the context of deep learning, Photonics for deep learning focuses on using light (photons) for efficient computation in neural networks, while quantum computing uses quantum-mechanical properties for computation.

There is some overlap between quantum computing and photonics, which can sometimes be confusing. There's even a subfield called Quantum Photonics, which merges the two. However, they are both two distinctive approaches to computing.

I'll go into more detail later, but OpenAI recently hired someone who, at PsiQuantum, worked on "designing a...

OK. Why would you consider it a realistic enough prospect to study or write this post? I know there were people doing analog multiplies with light absorption, but even 8-bit analog data transmission with light uses more energy than an 8-bit multiply with transistors. The physics of optical transistors don't seem compatible with lower energy than electrons. What hope do you think there is?

I currently am completing psychological studies for credit in my university psych course. The entire time, all I can think is “I wonder if that detail is the one they’re using to trick me with?”

I wonder how this impacts results. I can’t imagine being in a heightened state of looking out for deception has no impact.

When I play live I have a bunch of instruments, including:

Mandolin: an electric mandolin

Computer: a custom MIDI mapper driven by keyboard, foot drums, and breath controller.

Bass whistle: a whistle-controlled bass synthesizer

I also have some effects, primarily a talkbox and an audio-to-audio synth pedal. Normally I route the mandolin into the effects, but I've recently been wanting more options:

The computer effects are a lot of fun routed through the talkbox.

-

If I set the bass whistle to emit just a sine wave, and pipe that into the synth pedal, I can control professionally-designed sounds:

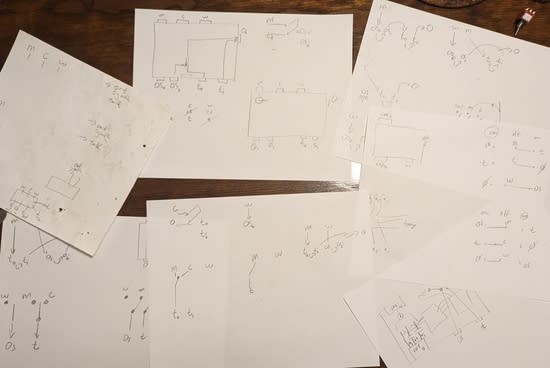

The thing that makes this tricky is that I want to be able to play mandolin direct (which goes via the talkbox output) at the same time as playing bass whistle (which goes via the pedals output). I sketched a lot of options:

And eventually realized...

It might be a good on the current margin to have a norm of publicly listing any non-disclosure agreements you have signed (e.g. on one's LW profile), and the rough scope of them, so that other people can model what information you're committed to not sharing, and highlight if it is related to anything beyond the details of technical research being done (e.g. if it is about social relationships or conflicts or criticism).

I have added the one NDA that I have signed to my profile.

The curious tale of how I mistook my dyslexia for stupidity - and talked, sang, and drew my way out of it.

Sometimes I tell people I’m dyslexic and they don’t believe me. I love to read, I can mostly write without error, and I’m fluent in more than one language.

Also, I don’t actually technically know if I’m dyslectic cause I was never diagnosed. Instead I thought I was pretty dumb but if I worked really hard no one would notice. Later I felt inordinately angry about why anyone could possibly care about the exact order of letters when the gist is perfectly clear even if if if I right liike tis.

I mean, clear to me anyway.

I was 25 before it dawned on me that all the tricks...

I’ve got a few questions.

- What is “WRT brain function”?

- How does someone train themself out of subvocalising?

- If you think critically, has speed reading actually increased your learning rate for semantic knowledge?

- Most things have downsides, what are the downsides of speed reading?

- What are your Words Per Minute (WPM)?

- Did you test WPM before learning speed reading?

- If this was an RPG, what level do you think you are in speed reading from 1-100?

- How long did it take you to reach your current level in this skill?

Sorry that’s a lot of questions. I’ve bee...

1. If you find that you’re reluctant to permanently give up on to-do list items, “deprioritize” them instead

I hate the idea of deciding that something on my to-do list isn’t that important, and then deleting it off my to-do list without actually doing it. Because once it’s off my to-do list, then quite possibly I’ll never think about it again. And what if it’s actually worth doing? Or what if my priorities will change such that it will be worth doing at some point in the future? Gahh!

On the other hand, if I never delete anything off my to-do list, it will grow to infinity.

The solution I’ve settled on is a priority-categorized to-do list, using a kanban-style online tool (e.g. Trello). The left couple columns (“lists”) are very active—i.e., to-do list...

Yeah most of the time I’ll open my to-do list and just look at one the couple very leftmost columns, and the column has maybe 3 items, and then I’ll pick one and do it (or pick a few and schedule them for that same day).

Occasionally I’ll look at a column farther to the right, and see if any ought to be moved left or right. The further right, the less often I’m checking.

Most people avoid saying literally false things, especially if those could be audited, like making up facts or credentials. The reasons for this are both moral and pragmatic — being caught out looks really bad, and sustaining lies is quite hard, especially over time. Let’s call the habit of not saying things you know to be false ‘shallow honesty’[1].

Often when people are shallowly honest, they still choose what true things they say in a kind of locally act-consequentialist way, to try to bring about some outcome. Maybe something they want for themselves (e.g. convincing their friends to see a particular movie), or something they truly believe is good (e.g. causing their friend to vote for the candidate they think will be better for the country).

Either way, if you...

Might be an uncharitable read of what's being recommended here. In particular, it might be worth revisiting the section that details what Deep Honesty is not. There's a large contingent of folks online who self-describe as 'borderline autistic', and one of their hallmark characteristics is blunt honesty, specifically the sort that's associated with an inability to pick up on ordinary social cues. My friend group is disproportionately comprised of this sort of person. So I've had a lot of opportunity to observe a few things about how honesty works.

Speaking ...

One of the primary concerns when attempting to control an AI of human-or-greater capabilities is that it might be deceitful. It is, after all, fairly difficult for an AI to succeed in a coup against humanity if the humans can simply regularly ask it "Are you plotting a coup? If so, how can we stop it?" and be confident that it will give them non-deceitful answers!

TL;DR LLMs demonstrably learn deceit from humans. Deceit is a fairly complex behavior, especially over an extended period: you need to reliably come up with plausible lies, which preferably involves modeling the thought processes of those you wish to deceive, and also keep the lies an internally consistent counterfactual, yet separate from your real beliefs. As the quotation goes, "Oh what a...

I also wonder how much interpretability LM agents might help here, e.g. as they could make much cheaper scaling the 'search' to many different undesirable kinds of behaviors.