Do we know if @paulfchristiano or other ex-lab people working on AI policy have non-disparagement agreements with OpenAI or other AI companies? I know Cullen doesn't, but I don't know about anybody else.

I know NIST isn't a regulatory body, but it still seems like standards-setting should be done by people who have no unusual legal obligations. And of course, some other people are or will be working at regulatory bodies, which may have more teeth in the future.

To be clear, I want to differentiate between non-disclosure agreements, which are perfectly sane and reasonable, at least in a limited form, as a way to prevent leaking trade secrets, and non-disparagement agreements, which prevent you from saying bad things about past employers. The latter seem clearly bad for anybody in a position to affect policy to have. Doubly so if the existence of the non-disparagement agreement is itself secret.

Sam Altman appears to have been using non-disparagement agreements at least as far back as 2017-2018, even for things that really don't seem to have needed them at all, like a research nonprofit arm of YC.* It's unclear whether that example was also a lifetime non-disparagement (I've asked), but nevertheless, given that track record, you should assume the OA research nonprofit also tried to impose one, followed by the OA LLC (obviously), and so Paul F. Christiano (and all of the Anthropic founders) would presumably be bound.

This would explain why Anthropic executives never say anything bad about OA, and refuse to explain exactly which broken promises by Altman triggered their failed attempt to remove him and their subsequent exodus.

(I have also asked Sam Altman on Twitter, since he follows me, apropos of his vested equity statement, how far back these NDAs go, if the Anthropic founders are still bound, and if they are, whether they will be unbound.)

* Note that Elon Musk's SpaceX does the same thing and is even worse because they will cancel your shares after you leave for half a year, and if they get mad at you after that expires, they may simply lock you out of tender offers indefinitely - whi...

It seems really quite bad for Paul to work in the U.S. government on AI legislation without having disclosed that he is under a non-disparagement clause with the biggest entity in the space his own agency is regulating. And if he signed an NDA that prevents him from disclosing it, then it was IMO his job not to accept a position in which such a disclosure would obviously be required.

I am currently at like 50% that Paul has indeed signed such a lifetime non-disparagement agreement, so I don't think a "presumably" is appropriate here (though I am not that far away from it).

It would be bad, I agree. (An NDA about what he worked on at OA, sure, but being required never to say anything bad about OA, forever, as a regulator who will be running evaluations etc...?) Fortunately, this is one of those rare situations where it is probably enough for Paul to simply say that his OA NDA does not cover that: then either it doesn't, and so can't be a problem, or he has violated the NDA's gag order by talking about it, and when OA then fails to sue him to enforce it, the NDA becomes moot.

At the very least I hope he disclosed it to the gov't (and that it was then voided, at least for internal government communications; I don't know how the law works here), though I'd personally want it to be voided completely, or at least widely communicated to the public as well.

Examples of maybe disparagement:

+1. Also curious about Jade Leung (formerly OpenAI), who is currently the CTO of the UK AI Safety Institute. Also Geoffrey Irving (formerly DeepMind), who is a research director at the UK AISI.

Great point! (Also, oops: I forgot that Irving was formerly at OpenAI as well. He worked at DeepMind in recent years, but before that he worked at OpenAI and Google Brain.)

Do we have any evidence that DeepMind or Anthropic definitely do not do non-disparagement agreements? (If so then we can just focus on former OpenAI employees.)

Hi! I have been lurking here for over a year but I've been too shy to participate until now. I'm 14, and I've been homeschooled all my life. I like math and physics and psychology, and I've learned lots of interesting things here. I really enjoyed reading the sequences last year. I've also been to some meetups in my city and the people there (despite – or maybe because of – being twice my age) are very cool. Thank you all for existing!

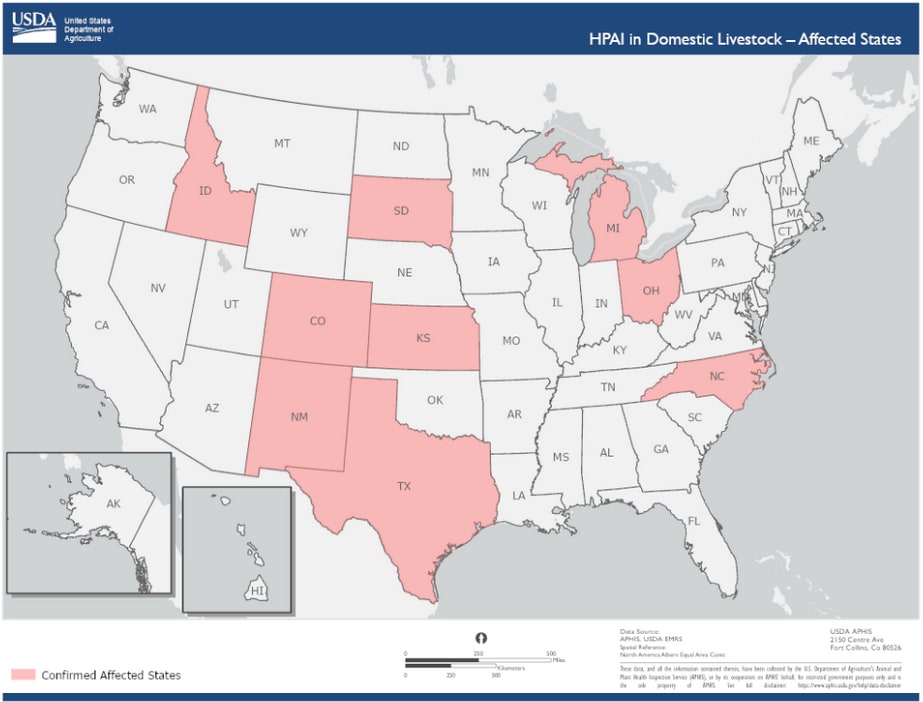

As of May 16, 2024, an easily findable USDA/CDC report says that widely dispersed cow herds are being detectably infected.

So far, as far as I can find, only one human case has been reported: a dairy worker detected with an eye infection.

I saw a link on Twitter to a report by an enterprising journalist who claimed to have gotten some milk directly from small local farms in Texas, and the first lab she tried refused to test it. They asked the farms. The farms said no. The labs were happy to go with this!

So, the data I've been able to get so far is consistent with many possibly real worlds.

The worst plausible world would involve a jump to humans, undetected for quite a while, allowing time for adaptive evolution, and an "influenza normal" attack rate of 5%-10% for adults and ~30% for kids, and an "avian flu plausible" mortality rate of 56%(??) (but maybe not until this winter, when cold weather causes lots of enclosed air sharing?), which implies that by June of 2025 maybe half a billion people (~= 7B*0.12*0.56) will be dead???
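A quick check of the arithmetic in the parenthetical, taking the comment's own figures as given (7B people, a 0.12 overall attack rate, and 56% mortality; reading 0.12 as a blend of the adult and child attack rates is an assumption, since the comment doesn't say how it was derived):

$$7\times 10^{9} \times 0.12 \times 0.56 = 4.704\times 10^{8},$$

so roughly 470 million, which is where the "maybe half a billion" comes from.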

But probably not, for a variety of reasons.

However, I sure hope that the (half imaginary?) Administrators who would hypothetically exist in some bureaucracy somewhere ...

Also, there's now a second detected human case, this one in Michigan instead of Texas.

Both had a surprising-to-me "pinkeye" symptom profile. Weird!

The dairy worker in Michigan had various "compartments" tested, and their nasal compartment (and the people they lived with) all tested negative. Hopeful?

Apparently, and also hopefully, this virus is NOT freakishly good at infecting humans and also, weirdly, many other animals (the way covid was with human ACE2, in precisely the ways people had talked about when discussing gain-of-function in the years prior to covid).

If we're being foolishly mechanical in our inferences "n=2 with 2 survivors" could get rule of succession treatment. In that case we pseudocount 1 for each category of interest (hence if n=0 we say 50% survival chance based on nothing but pseudocounts), and now we have 3 survivors (2 real) versus 1 dead (0 real) and guess that the worst the mortality rate here would be maybe 1/4 == 25% (?? (as an ass number)), which is pleasantly lower than overall observed base rates for avian flu mortality in humans! :-)
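For concreteness, here is a minimal Python sketch of the rule-of-succession arithmetic described above (Laplace's rule: one pseudocount per outcome, so the estimate is (s + 1)/(n + 2)). The function name is illustrative, and the inputs are just the n=0 and n=2 cases from the comment; as the comment says, this is a deliberately mechanical estimate, not an epidemiological one.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: add one pseudocount to each of the two
    outcomes, so the estimate is (successes + 1) / (trials + 2)."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return Fraction(successes + 1, trials + 2)


# n=0: nothing but pseudocounts, so survival is estimated at 1/2.
print(rule_of_succession(0, 0))  # 1/2

# n=2 observed cases, 2 survivors: survival 3/4, so mortality 1/4.
survival = rule_of_succession(2, 2)
print(survival, 1 - survival)  # 3/4 1/4
```

Exact fractions are used so the 1/4 comes out exactly rather than as a rounded float.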

Naive impressions: a natural virus, with pretty clear reservoirs (first birds and now dairy cows), on the maybe slightly less bad side of...

Katelyn Jetelina has been providing some useful information on this. Her conclusion at this point seems to be 'more data needed'.

The only thing I can conclude looking around for her is that she's out of the public eye. Hope she's ok, but I'd guess she's doing fine and just didn't feel like being a public figure anymore. Interested if anyone can confirm that, but if it's true I want to make sure to not pry.

Hello! I'm building an open source communication tool with a one-of-a-kind UI for LessWrong-style deep, rational discussions. The tool is called CQ2 (https://cq2.co). It has a sliding panes design with quote-level threads. There's a concept of "posts" for more serious discussions with many people and there's "chat" for less serious ones, and both of them have a UI crafted for deep discussions.

I simulated some LessWrong discussions there, and they turned out to be a lot more organised and easier to follow. You can check them out in the chat channel and direct message parts of the demo on the site. However, it is a bit inconvenient: there's horizontal scrolling, and one needs to click to open new threads. Since forums need to prioritize convenience, I think CQ2's design isn't a good fit for LessWrong. But I think the inconvenience is worth it for such discussions in writing-first teams, since it helps them hyper-focus on one thing at a time and avoid losing context on the way to reaching a conclusion and making decisions.

If you have such discussions at work, I would love to learn about your team, your frustrations with existing communication tools, and better understand how CQ2 can help! I ...

Hello everyone! My name is Roman Maksimovich; I am an immigrant from Russia, currently finishing high school in Serbia. My primary specialization is mathematics, and by middle school I had already had enough education in abstract mathematics (from calculus to category theory and topology) to call myself a mathematician.

My other strong interests include computer science and programming (specifically functional programming, theoretical CS, AI, and systems programming such as Linux) as well as languages (specifically Asian languages like Japanese).

I ended up here after reading HP:MOR, which I consider to be an all-time masterpiece. The Sequences are very good too, although not as gripping. Rationality is a very important principle in my life, and so far I have found the forum to be very well organized and the posts to be very informative and well written, so I will definitely stick around and try to engage in the forum to the best of my ability.

I thought I might do a bit of self-advertising as well. Here's my GitHub: https://github.com/thornoar

If any of you use this very niche mathematical graphics tool called Asymptote, you might be interested to know that I have been developing a cool 6000-...

Hey all

I found out about LessWrong through a confluence of factors over the past 6 years or so: Rob Miles' Computerphile videos and then his personal videos, seeing Aella make the rounds on the internet, and hearing about Manifold, which all just sorta pointed me towards Eliezer and this website. I started reading the Rationality: A-Z posts about a year ago and have gotten up to the value theory portion, but over the past few months I've started realizing just how much engaging content there is to read on here. I just graduated with my bachelor's, and I hope to get involved with AI alignment, though Eliezer paints a pretty bleak picture for a newcomer like myself. (I know not to take any one person's word as gospel, but I'd be lying if I said it wasn't a little disheartening.)

I'm not really sure how to break into the field of AI safety/alignment, given that college has left me without a lot of money and I don't exactly have a portfolio or degree that screams machine learning. I fear I would have to go back for even more education to even attempt to make a difference. Maybe, however, this is where my unfamiliarity with the field shows, because I don't actually know what qualifications are required for the positions I'd be interested in, or whether there's even a formal path into alignment work. Any direction would be appreciated.

Hi LessWrong Community!

I'm new here, though I've been an LW reader for a while. I'm representing the complicated.world website, where we strive to use a similar rationality approach to the one here, and we also explore philosophical problems. The difference is that, instead of being a community-driven portal like you, we are a small team that works internally to reach consensus, and only then do we publish our articles. This means that we are not nearly as pluralistic, diverse or democratic as you are, but on the other hand we try to present a single coherent view on all discussed problems, each rooted in basic axioms. I really value the LW community (our entire team does) and would like to start contributing here. I would also like to post a linkpost from our website from time to time; I hope this is ok. We are also a not-for-profit website.

Does anyone have advice on how I could work full-time on an alignment research agenda I have? It looks like an LTFF grant is the best option for this kind of thing, but if, after working on it alone for a while longer, it keeps looking like it could succeed, it will likely become too big for me alone, and I would need help from other people, which looks hard to get. So, any advice from anyone who’s been in a similar situation? Also, how does this compare with getting a job at an alignment org? Is there any org where I would have a comparable amount of freedom if my ideas are good enough?

Edit: It took way longer than I thought it would, but I've finally sent my first LTFF grant application! Now let's just hope they understand it and think it is good.

Hello! I'm dipping my toes into this forum, coming primarily from the Scott Alexander side of rationalism. I wanted to introduce myself and share that I'm working on a post about ethics/ethical frameworks that I hope to share here eventually!

Feature request: I'd like to be able to play the LW playlist (and future playlists!) from LW. I found it a better UI than Spotify and Youtube, partly because it didn't stop me from browsing around LW and partly because it had the lyrics on the bottom of the screen. So... maybe there could be a toggle in the settings to re-enable it?

There are several sequences which are visible on the profiles of their authors, but haven't yet been added to the library. Those are:

- «Boundaries» Sequence (Andrew Critch)

- Maximal Lottery-Lotteries (Scott Garrabrant)

- Geometric Rationality (Scott Garrabrant)

- UDT 1.01 (Diffractor)

- Unifying Bargaining (Diffractor)

- Why Everyone (Else) Is a Hypocrite: Evolution and the Modular Mind (Kaj Sotala)

- The Sense Of Physical Necessity: A Naturalism Demo (LoganStrohl)

- Scheming AIs: Will AIs fake alignment during training in order to get power? (Joe Carlsmith)

I think t...

Post upvotes are at the bottom but user comment upvotes are at the top of each comment. Sometimes I'll read a very long comment and then have to scroll aaaaall the way back up to upvote it. Is there some reason for this that I'm missing or is it just an oversight?

Obscure request:

Short story by Yudkowsky, on a reddit short fiction subreddit, about a time traveler coming back to the 19th century from the 21st. The time traveler is incredibly distraught about the red tape in the future, screaming about molasses and how it's illegal to sell food on the street.

Never mind, found it.

Hi everyone!

I found LessWrong at the end of 2022, as a result of ChatGPT’s release. What struck me fairly quickly about LessWrong was how much it resonated with me. Many of the ways of thinking discussed on LessWrong were things I was already doing, but without knowing the names for them. For example, I thought of the strength of my beliefs in terms of probabilities long before I had ever heard the word “bayesian”.

Since discovering LessWrong, I have been mostly just vaguely browsing it, with some periods of more intense study. But I’m aware that I haven’t be...

Hey everyone! I work on quantifying and demonstrating AI cybersecurity impacts at Palisade Research with @Jeffrey Ladish.

We have a bunch of exciting work in the pipeline, including:

- demos of well-known safety issues like agent jailbreaks or voice cloning

- replications of prior work on self-replication and hacking capabilities

- modelling of above capabilities' economic impact

- novel evaluations and tools

Most of my posts here will probably detail technical research or announce new evaluation benchmarks and tools. I also think a lot about responsible release, ...

Hi! I have lurked for quite a while and wonder if I can/should participate more. I'm interested in science in general, speculative fiction and simulation/sandbox games among other stuff. I like reading speculations about the impact of AI and other technologies, but find many of the alignment-related discussions too focused on what the author wants/values rather than what future technologies can really cause. Also, any game recommendations with a hard science/AI/transhumanist theme that are truly simulation-like and not narratively railroading?

Is it really desirable to have the new "review bot" in all the 100+ karma comment sections? To me it feels like unnecessary clutter, similar to injecting ads.

Hi everyone! I'm new to LW and wanted to introduce myself. I'm from the SF bay area and working on my PhD in anthropology. I study AI safety, and I'm mainly interested in research efforts that draw methods from the human sciences to better understand present and future models. I'm also interested in AI safety's sociocultural dynamics, including how ideas circulate through the research community and how uncertainty figures into our interactions with models. All thoughts and leads are welcome.

This work led me to LW. Originally all the content was overwhelming bu...

Hey, I'm new to LessWrong and working on a post - however at some point the guidelines which pop up at the top of a fresh account's "new post" screen went away, and I cannot find the same language in the New Users Guide or elsewhere on the site.

Does anyone have a link to this? I recall a list of suggestions like "make the post object-level," "treat it as a submission for a university," "do not write a poetic/literary post until you've already gotten a couple object-level posts on your record."

It seems like a minor oversight if it's impossible to find certa...

Some features I'd like:

a 'mark read' button next to posts so I could easily mark as read posts that I've read elsewhere (e.g. ones cross-posted from a blog I follow)

a 'not interested' button which would stop a given post from appearing in my latest or recommended lists. Ideally, this would also update my recommended posts so as to recommend fewer posts like that to me. (Note: the hide-from-front-page button could be this if A. It worked even on promoted/starred posts, and B. it wasn't hidden in a three-dot menu where it's frustrating to access)

a 'read late...

Someone strong-downvoted a post/question of mine with a downvote strength of 10, if I remember correctly.

I had initially planned to just keep silent about this, since that's their right if they think the post is bad or harmful.

But since the downvote, I can't shake my curiosity about why that person disliked my post so strongly, so I'm willing to pay $20 for two or three paragraphs from them explaining why they downvoted it.

PSA: Tooth decay might be reversible! The recent discussion around the Lumina anti-cavity prophylaxis reminded me of a certain dentist's YouTube channel I'd stumbled upon recently, claiming that tooth decay can be arrested and reversed using widely available over-the-counter dental care products. I remember my dentist from years back telling me that if regular brushing and flossing doesn't work, and the decay is progressing, then the only treatment option is a filling. I wish I'd known about alternatives back then, because I definitely would have tried tha...

How efficient are equity markets? No, not in the EMH sense.

My take is that market efficiency, viewed from economics/finance, is about total surplus maximization -- the area between the supply and demand curves. Clearly, when S and D are order schedules and P and Q correspond to the S&D intersection, one maximizes the area of the triangle defined in the graph.

But existing equity markets don't work off an order schedule; they largely match trades in a somewhat random order -- people place orders (bids and offers) throughout the day and as they come in ...
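A toy Python sketch of the contrast drawn above, under loud assumptions: every order is for one unit, each bid or ask price equals the trader's true value or cost, and the prices and arrival order are made up for illustration. A call auction that pairs the highest bids with the lowest asks while they still cross captures the maximum surplus (the triangle), while matching orders continuously in arrival order can let a high-cost seller trade first and leave surplus on the table. The function names are hypothetical, and this is not a model of any real exchange's matching engine.

```python
import heapq


def call_auction_surplus(bids, asks):
    """Batch (call) auction: pair the highest bids with the lowest asks while
    they still cross. With unit orders this realises the maximum total surplus."""
    surplus = 0
    for bid, ask in zip(sorted(bids, reverse=True), sorted(asks)):
        if bid < ask:
            break
        surplus += bid - ask
    return surplus


def continuous_surplus(orders):
    """Continuous matching: each arriving unit order trades immediately against
    the best resting order on the other side if the two cross; otherwise it
    rests in the book. The trade price only splits surplus, so it is ignored."""
    resting_bids = []  # max-heap, stored as negated prices
    resting_asks = []  # min-heap
    surplus = 0
    for side, price in orders:
        if side == "bid":
            if resting_asks and resting_asks[0] <= price:
                surplus += price - heapq.heappop(resting_asks)
            else:
                heapq.heappush(resting_bids, -price)
        else:  # "ask"
            if resting_bids and -resting_bids[0] >= price:
                surplus += -heapq.heappop(resting_bids) - price
            else:
                heapq.heappush(resting_asks, price)
    return surplus


orders = [("ask", 8), ("bid", 10), ("ask", 2), ("bid", 4)]
bids = [p for side, p in orders if side == "bid"]
asks = [p for side, p in orders if side == "ask"]
print(call_auction_surplus(bids, asks))  # 8
print(continuous_surplus(orders))  # 4
```

In this ordering the high-cost seller (8) meets the high-value buyer (10) first, so continuous matching realises a surplus of 4 instead of 8. With a different arrival order, e.g. the ask at 2 arriving first, continuous matching also reaches 8, which is the sense in which the outcome depends on the somewhat random order of arrival.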

Feature Suggestion: add a number to the hidden author names.

I enjoy keeping the author names hidden when reading the site, but find it difficult to follow comment threads when there isn't a persistent id for each poster. I think a number would suffice while keeping the hiddenness.
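A minimal sketch of what the suggestion might look like, assuming labels are assigned per thread in order of first appearance, so the same person keeps the same number within a thread but can't be linked across threads. The function and the "Commenter N" format are hypothetical; this is not how LessWrong works today.

```python
def pseudonymize(authors_in_thread_order):
    """Map each real author to 'Commenter N', numbering by first appearance in
    the thread, so repeated comments by one person share a label."""
    ids = {}
    labels = []
    for author in authors_in_thread_order:
        if author not in ids:
            ids[author] = len(ids) + 1  # next unused number
        labels.append(f"Commenter {ids[author]}")
    return labels


print(pseudonymize(["alice", "bob", "alice", "carol", "bob"]))
# ['Commenter 1', 'Commenter 2', 'Commenter 1', 'Commenter 3', 'Commenter 2']
```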

Any thoughts on Symbolica? (or "categorical deep learning" more broadly?)

...All current state of the art large language models such as ChatGPT, Claude, and Gemini, are based on the same core architecture. As a result, they all suffer from the same limitations.

Extant models are expensive to train, complex to deploy, difficult to validate, and infamously prone to hallucination. Symbolica is redesigning how machines learn from the ground up.

We use the powerfully expressive language of category theory to develop models capable of learning algebraic structur

I’m in the market for a new productivity coach / accountability buddy, to chat with periodically (I’ve been doing one ≈20-minute meeting every 2 weeks) about work habits, and set goals, and so on. I’m open to either paying fair market rate, or to a reciprocal arrangement where we trade advice and promises etc. I slightly prefer someone not directly involved in AGI safety/alignment—since that’s my field and I don’t want us to get nerd-sniped into object-level discussions—but whatever, that’s not a hard requirement. You can reply here, or DM or email me. :) update: I’m all set now

Hi everyone, my name is Mickey Beurskens. I've been reading posts for about two years now, and I would like to participate more actively in the community, which is why I'll take the opportunity to introduce myself here.

In my daily life I am doing independent AI engineering work (contracting mostly). About three years ago a (then) colleague introduced me to HPMOR, which was a wonderful introduction to what would later become some quite serious deliberations on AI alignment and LessWrong! After testing out rationality principles in my life I was convinced th...

Hello, I'm Marius, an embedded SW developer looking to pivot into AI and machine learning.

I've read the Sequences and am reading ACX somewhat regularly.

Looking forward to fruitful discussions.

Best wishes,

Marius Nicoară

I'm trying to remember the name of a blog. The only things I remember about it are that it's at least a tiny bit linked to this community, and that it has some sort of automatic decaying-endorsement feature. Like, there was a subheading indicating the likely percentage of claims the author no longer endorses, based on the age of the post. Does anyone know what I'm talking about?

Is there a post in the Sequences about when it is justifiable to not pursue going down a rabbit hole? It's a fairly general question, but the specific context is a tale as old as time. My brother, who has been an atheist for decades, moved to Utah. After 10 years, he now asserts that he was wrong and that his "rigorous pursuit" of verifying things with logic and his own eyes leads him to believe the Bible is literally true. I worry about his mental health so I don't want to debate him, but I felt like I should give some kind of justification for why I'm not personally ...

Hi, to whom it may concern,

You could say I have a technical moat in a certain area, and I came across an idea/cluster of ideas that seemed unusually connected and potentially alignment-significant, but whose publication seems potentially capabilities-enhancing. (I consulted with one other person, and they also found it difficult to assess or summarize.)

I was considering writing to EY on here as an obvious person who would both be someone more likely to be able to determine plausibility/risk across a less familiar domain and have an idea of what further to do. Is t...

I have the mild impression that Jacqueline Carey's Kushiel trilogy is somewhat popular in the community?[1] Is it true and if so, why?

[1] E.g. Scott Alexander references Elua in Meditations on Moloch, and I know of at least one prominent LWer who was a big enough fan of it to reference Elua in their Discord handle.

Unsure if there is normally a thread for posting only semi-interesting news articles, but here is a recently posted news article by Wired that seems... rather inflammatory toward Effective Altruism. I have not read the article myself yet, but a quick skim confirms that it's not just the title chasing angry clicks; the rest of the article also seems extremely critical of EA, transhumanism, and Rationality.

I am going to post it here, though I am not entirely sure if getting this article more clicks is a good thing, so if you have no interest in read...

I came across a poll about exchanging probability estimates with another rationalist: https://manifold.markets/1941159478/you-think-something-is-30-likely-bu?r=QW5U.

You think something is 30% likely but a friend thinks 70%. To what does that change your opinion?

I feel like there could be specially constructed problems where the resulting probability is 0, but I haven't been able to construct an example. Are there any?

There is a box which contains money iff the front and back are painted the same color. Each side is independently 30% to be blue, and 70% to be red. You observe that the front is blue, and your friend observes that the back is red.
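Enumerating the four possible paintings makes the answer explicit. A minimal Python sketch (exact fractions to avoid float noise; the helper name is illustrative): each observation alone gives 30% or 70%, and averaging the two would suggest 50%, but pooling the actual observations rules out every world in which the box holds money.

```python
from fractions import Fraction
from itertools import product

# Each side is painted independently: 30% blue, 70% red.
COLOR_PROB = {"blue": Fraction(3, 10), "red": Fraction(7, 10)}


def p_money(front=None, back=None):
    """P(box has money | observations), where money iff front and back match.
    Pass an observed color to condition on it; None means unobserved."""
    num = Fraction(0)
    den = Fraction(0)
    for f, b in product(COLOR_PROB, COLOR_PROB):
        # Drop worlds that contradict an observation.
        if front is not None and f != front:
            continue
        if back is not None and b != back:
            continue
        weight = COLOR_PROB[f] * COLOR_PROB[b]
        den += weight
        if f == b:
            num += weight
    return num / den


print(p_money(front="blue"))  # 3/10 (your view)
print(p_money(back="red"))  # 7/10 (your friend's view)
print(p_money(front="blue", back="red"))  # 0 (both observations pooled)
```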

I don't think this crosses the line regarding politics on the board, but I note that as a warning header.

I was just struck by a thought related to the upcoming elections in the USA. The ages of both parties' candidates have been noted, and they create some concern and even risks for the country.

No upper age limit exists, and I suspect trying to get legislative action on that would be slow to impossible, as it would undoubtedly require a new amendment to the Constitution.

BUT, I don't think there is any law or other restriction on any political party imposing their own age limit...

Hi, I am new to the site, having just registered. After reading through a couple of the posts referenced in the suggested reading list, I felt comfortable enough to try to participate here. I feel I could possibly add something to some of the discussions, though time will tell. I did land on this site "through AI", so we'll see if that means this isn't a good place for me to land and/or pass through. Though I am slightly bending the definition of that quote and its context here (maybe). Or does finding this site by questioning an AI about possib...

I didn't get any replies on my question post re: the EU parliamentary election and AI x-risk, but does anyone have a suggestion for a party I could vote for (in Germany) when it comes to x-risk?

How much power is required to run the most efficient superhuman chess engines? There's this discussion saying Stockfish running on a phone is superhuman, but is that one watt or 10 watts? Could we beat grandmasters with 0.1 watts if we tried?

Does anyone have a high-quality analysis of how effective machines are for strength training and building muscle? Not free weights, specifically machines. I'm not the pickiest about how one operationalizes 'work'; I'm more interested in the quality of the analysis. But some questions:

-- Do users get hurt frequently? Are the injuries chronic? (This is the most important question)

-- Do people who use them consistently gain muscle?

-- Can you gain a 'lot' of muscle and strength like you can with free weights, or do people cap out quickly if they are fit?

-- Does strength from...

Does anyone recommend a good book on finance? I'd like to understand most of the terms used in this post, and then some, but the old repository doesn't have any suggestions.

Is this site just riddled with spammers anymore? Or is the way the site works just arcane or something?

I keep seeing ancient posts (usually by Eliezer himself from 2007-08) popping up on the front page. But I'll scroll through the comments looking for the new one(s) which must have bumped it onto today's list, but they're nowhere to be found. Thus someone is bumping the things (if that is indeed how the site works), but if it was a spammer who got deleted before I saw the thread in question, I wouldn't see said spam post.

Has anyone ever made an aggregator of open source LLMs and image generators with specific security vulnerabilities?

I.e., if it doesn’t have a filter for prompt injection, or if it doesn’t have a built-in filter for data poisoning, etc.

I'm looking for something written to help a solution builder using one of these models understand what they’d need to consider with respect to deployment.

So, I have three very distinct ideas for projects that I'm thinking about applying to the Long Term Future Fund for. Does anyone happen to know if it's better to try to fit them all into one application, or split them into three separate applications?

Bug report: I got notified about Joe Carlsmith's most recent post twice, the second time after ~4 hours

Has anybody tried to estimate how prevalent sexual abuse is in EA circles/orgs compared to general population?

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library, checking recent Curated posts, seeing if there are any meetups in your area, and checking out the Getting Started section of the LessWrong FAQ. If you want to orient to the content on the site, you can also check out the Concepts section.

The Open Thread tag is here. The Open Thread sequence is here.