Popular Comments

Recent Discussion

Suppose Alice and Bob are two Bayesian agents in the same environment. They both basically understand how their environment works, so they generally agree on predictions about any specific directly-observable thing in the world - e.g. whenever they try to operationalize a bet, they find that their odds are roughly the same. However, their two world models might have totally different internal structure, different “latent” structures which Alice and Bob model as generating the observable world around them. As a simple toy example: maybe Alice models a bunch of numbers as having been generated by independent rolls of the same biased die, and Bob models the same numbers using some big complicated neural net.

Now suppose Alice goes poking around inside of her world model, and somewhere in there...

As long as you only care about the latent variables that make and independent of each other, right? Asking because this feels isomorphic to classic issues relating to deception and wireheading unless one treads carefully. Though I'm not quite sure whether you intend for it to be applied in this way,

// ODDS = YEP:NOPE

YEP, NOPE = MAKE UP SOME INITIAL ODDS WHO CARES

FOR EACH E IN EVIDENCE

YEP *= CHANCE OF E IF YEP

NOPE *= CHANCE OF E IF NOPEThe thing to remember is that yeps and nopes never cross. The colon is a thick & rubbery barrier. Yep with yep and nope with nope.

bear : notbear =

1:100 odds to encounter a bear on a camping trip around here in general

* 20% a bear would scratch my tent : 50% a notbear would

* 10% a bear would flip my tent over : 1% a notbear would

* 95% a bear would look exactly like a fucking bear inside my tent : 1% a notbear would

* 0.01% chance a bear would eat me alive : 0.001% chance a notbear would

As you die you conclude 1*20*10*95*.01 : 100*50*1*1*.001 = 190 : 5 odds that a bear is eating you.

A possom or whatever will scratch mine like half the time

Introduction

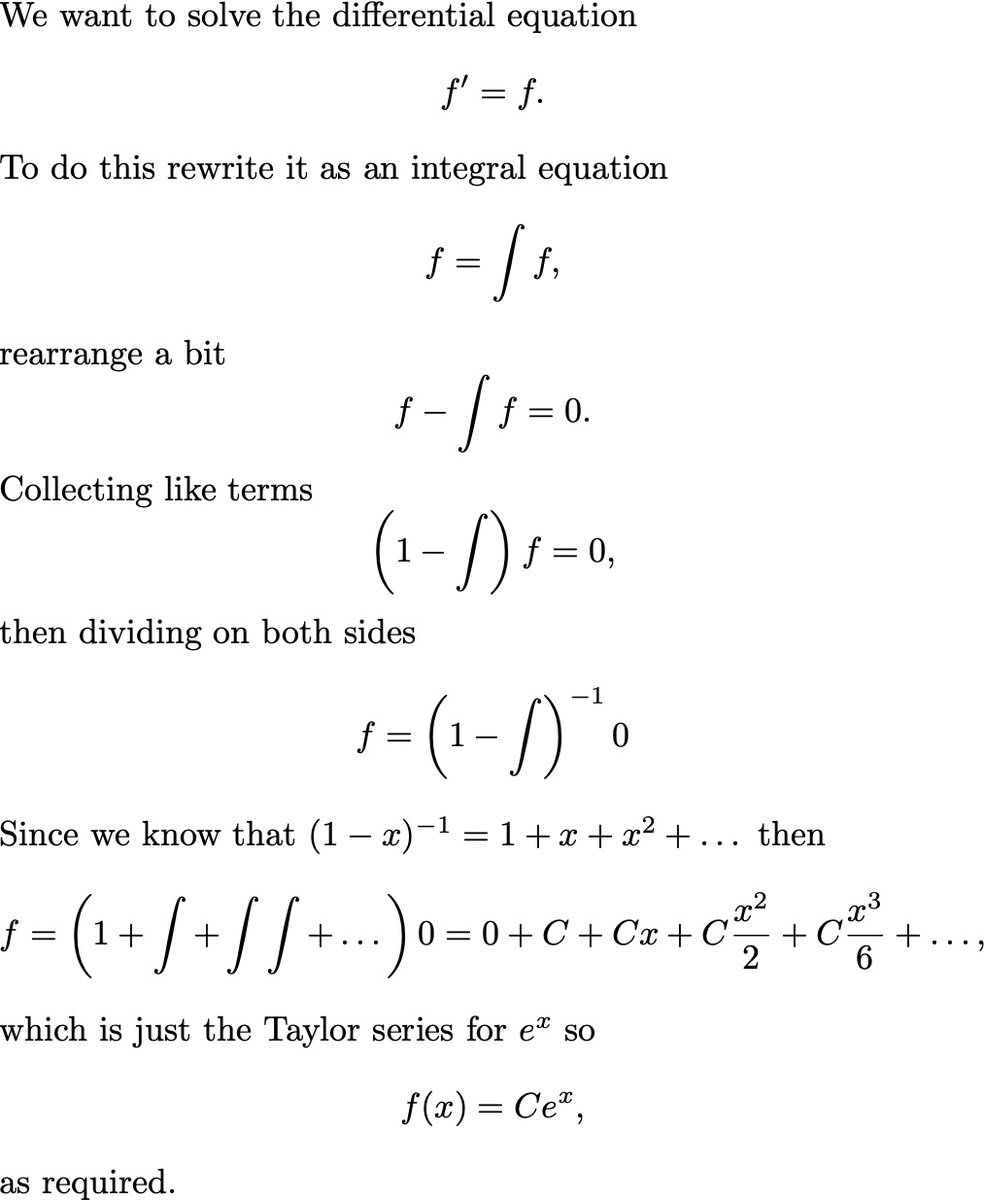

A recent popular tweet did a "math magic trick", and I want to explain why it works and use that as an excuse to talk about cool math (functional analysis). The tweet in question:

This is a cute magic trick, and like any good trick they nonchalantly gloss over the most important step. Did you spot it? Did you notice your confusion?

Here's the key question: Why did they switch from a differential equation to an integral equation? If you can use when , why not use it when ?

Well, lets try it, writing for the derivative:

So now you may be disappointed, but relieved: yes, this version fails, but at least it fails-safe, giving you the trivial solution, right?

But no, actually can fail catastrophically, which we can see if we try a nonhomogeneous equation...

Edit: looks like was already raised by Dacyn and answered to my satisfaction by Robert_AIZI. Correctly applying the fundamental theorem of calculus will indeed prevent that troublesome zero from appearing in the RHS in the first place, which seems much preferable to dealing with it later.

My real analysis might be a bit rusty, but I think defining I as the definite integral breaks the magic trick.

I mean, in the last line of the 'proof', gets applied to the zero function.

Any definitive integral of the zero function is zer...

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library, checking recent Curated posts, seeing if there are any meetups in your area, and checking out the Getting Started section of the LessWrong FAQ. If you want to orient to the content on the site, you can also check out the Concepts section.

The Open Thread tag is here. The Open Thread sequence is here.

epistemic/ontological status: almost certainly all of the following -

- a careful research-grade writeup of some results I arrived at a genuinely kinda shiny open(?) question in theoretical psephology that we are near-certainly never going to get to put into practice for any serious non-cooked-up purpose let alone at scale without already not actually needing it, like, not even a little;

- utterly dependent on definitions I have come up with and theorems I have personally proven partially using them, which I have done with a professional mathematician's care; some friends and strangers have also checked them over;

- my attempt to follow up on proving that something that can reasonably be called a maximal lottery-lottery exists, to characterize it better, and to sketch a construction;

- my attempt to to craft the last couple

In light of all of the talk about AI, utility functions, value alignment etc. I decided to spend some time thinking about what my actual values are. I encourage you to do the same (yes both of you). Lower values on the list are less important to me but not qualitatively less important. For example some of value 2 is not worth an unbounded amount of value 3. The only exception to this is value 1 which is in fact infinitely more important to me than the others.

- Life- If your top value isn't life then I don't know what to say. All the other values are only built off of this one. Nothing matters if you are dead. Call me selfish but I have always been sympathetic

Some people have suggested that a lot of the danger of training a powerful AI comes from reinforcement learning. Given an objective, RL will reinforce any method of achieving the objective that the model tries and finds to be successful including things like deceiving us or increasing its power.

If this were the case, then if we want to build a model with capability level X, it might make sense to try to train that model either without RL or with as little RL as possible. For example, we could attempt to achieve the objective using imitation learning instead.

However, if, for example, the alternate was imitation learning, it would be possible to push back and argue that this is still a black-box that uses gradient descent so we...

Personally, I consider model-based RL to be not RL at all. I claim that either one needs to consider model-based RL to be not RL at all, or one needs to accept such a broad definition of RL that the term is basically-useless (which I think is what porby is saying in response to this comment, i.e. "the category of RL is broad enough that it belonging to it does not constrain expectation much in the relevant way").

Also check out "personalized pagerank", where the rating shown to each user is "rooted" in what kind of content this user has upvoted in the past. It's a neat solution to many problems.

Predicting the future is hard, so it’s no surprise that we occasionally miss important developments.

However, several times recently, in the contexts of Covid forecasting and AI progress, I noticed that I missed some crucial feature of a development I was interested in getting right, and it felt to me like I could’ve seen it coming if only I had tried a little harder. (Some others probably did better, but I could imagine that I wasn't the only one who got things wrong.)

Maybe this is hindsight bias, but if there’s something to it, I want to distill the nature of the mistake.

First, here are the examples that prompted me to take notice:

Predicting the course of the Covid pandemic:

- I didn’t foresee the contribution from sociological factors (e.g., “people not wanting

Relatedly, over time as capital demands increase, we might see huge projects which are collaborations between multiple countries.

I also think that investors could plausibly end up with more and more control over time if capital demands grow beyond what the largest tech companies can manage. (At least if these investors are savvy.)

(The things I write in this comment are commonly discussed amongst people I talk to, so not exactly surprises.)