We get like 10-20 new users a day who write a post describing themselves as a case-study of having discovered an emergent, recursive process while talking to LLMs. The writing generally looks AI-generated. The evidence usually looks like a sort of standard "prompt LLM into roleplaying an emergently aware AI".

It'd be kinda nice if there was a canonical post specifically talking them out of their delusional state.

If anyone feels like taking a stab at that, you can look at the Rejected Section (https://www.lesswrong.com/moderation#rejected-posts) to see what sort of stuff they usually write.

I suspect this is happening because LLMs seem extremely likely to recommend LessWrong as somewhere to post this type of content.

I spent 20 minutes doing some quick checks that this was true. Not once did an LLM fail to include LessWrong as a suggestion for where to post.

Incognito, free accounts:

https://grok.com/share/c2hhcmQtMw%3D%3D_1b632d83-cc12-4664-a700-56fe373e48db

https://grok.com/share/c2hhcmQtMw%3D%3D_8bd5204d-5018-4c3a-9605-0e391b19d795

While I don't think I can share the conversation without an account, ChatGPT recommends a similar list as the above conversations, including both LessWrong and the Alignment Forum.

Similar results using the free LLM at "deepai.org".

On my login (where I've mentioned LessWrong before):

Claude:

https://claude.ai/share/fdf54eff-2cb5-41d4-9be5-c37bbe83bd4f

GPT4o:

https://chatgpt.com/share/686e0f8f-5a30-800f-b16f-37e00f77ff5b

On a side note:

I know it must be exhausting on your end, but there is something genuinely amusing and surreal about this entire situation.

If that's it, then it's not the first case of LLMs driving weird traffic to specific websites out in the wild. Here's a less weird example:

It's not surprising (and seems reasonable) for LLM-chats that feature AI stuff to end up getting recommended LessWrong. The surprising/alarming thing is how they generate the same confused delusional story.

That... um... I had a shortform just last week saying that it feels like most people making heavy use of LLMs are going backwards rather than forwards. But if you're getting 10-20 of that per day, and that's just on LessWrong... then the sort of people who seemed to me to be going backward are in fact probably the upper end of the distribution.

Guys, something is really really wrong with how these things interact with human minds. Like, I'm starting to think this is maybe less of a "we need to figure out the right ways to use the things" sort of situation and more of a "seal it in a box and do not touch it until somebody wearing a hazmat suit has figured out what's going on" sort of situation. I'm not saying I've fully updated to that view yet, but it's now explicitly in my hypothesis space.

Probably I should've said this out loud, but I had a couple of pretty explicit updates in this direction over the past couple years: the first was when I heard about character.ai (and similar), the second was when I saw all TPOTers talking about using Sonnet 3.5 as a therapist. The first is the same kind of bad idea as trying a new addictive substance and the second might be good for many people but probably carries much larger risks than most people appreciate. (And if you decide to use an LLM as a therapist/rubber duck/etc, for the love of god don't use GPT-4o. Use Opus 3 if you have access to it. Maybe Gemini is fine? Almost certainly better than 4o. But you should consider using an empty Google Doc instead, if you don't want to or can't use a real person.)

I think using them as coding and research assistants is fine. I haven't customized them to be less annoying to me personally, so their outputs often are annoying. Then I have to skim over the output to find the relevant details, and don't absorb much of the puffery.

I had a weird moment when I noticed that talking to Claude was genuinely helpful for processing akrasia, but that this was equally true whether or not I hit enter and actually sent the message to the model. The Google Docs Therapist concept may be underrated, although it has its own privacy and safety issues. Should we just bring back Eliza?

Stephen apparently found that the LLMs consistently suggest these people post on LessWrong, so insofar as you are extrapolating by normalizing based on the size of the LessWrong userbase (suggested by "that's just on LessWrong"), that seems probably wrong.

Edit: I will say though that I do still agree this is worrying, but my model of the situation is much more along the lines of crazies being made more crazy by the agreement machine[1] than something very mysterious going on.

[1] Contrary to the hope many have had that LLMs would make crazies less crazy due to being more patient & better at arguing than regular humans, ime they seem to have a memorized list-of-things-it's-bad-to-believe which in new chats they will argue against you on, but for beliefs not on that list...

Yeah, Stephen's comment is indeed a mild update back in the happy direction.

I'm still digesting, but a tentative part of my model here is that it's similar to what typically happens to people in charge of large organizations. I.e. they accidentally create selection pressures which surround them with flunkies who display what the person in charge wants to see, and thereby lose the ability to see reality. And that's not something which just happens to crazies. For instance, this is my central model of why Putin invaded Ukraine.

I'm trying to think of ways to distinguish "AI drove them crazy" from "AI directed their pre-existing crazy towards LW".

The part where 50% of them write basically the same essay seems more like the LLMs have an attractor state they funnel them towards.

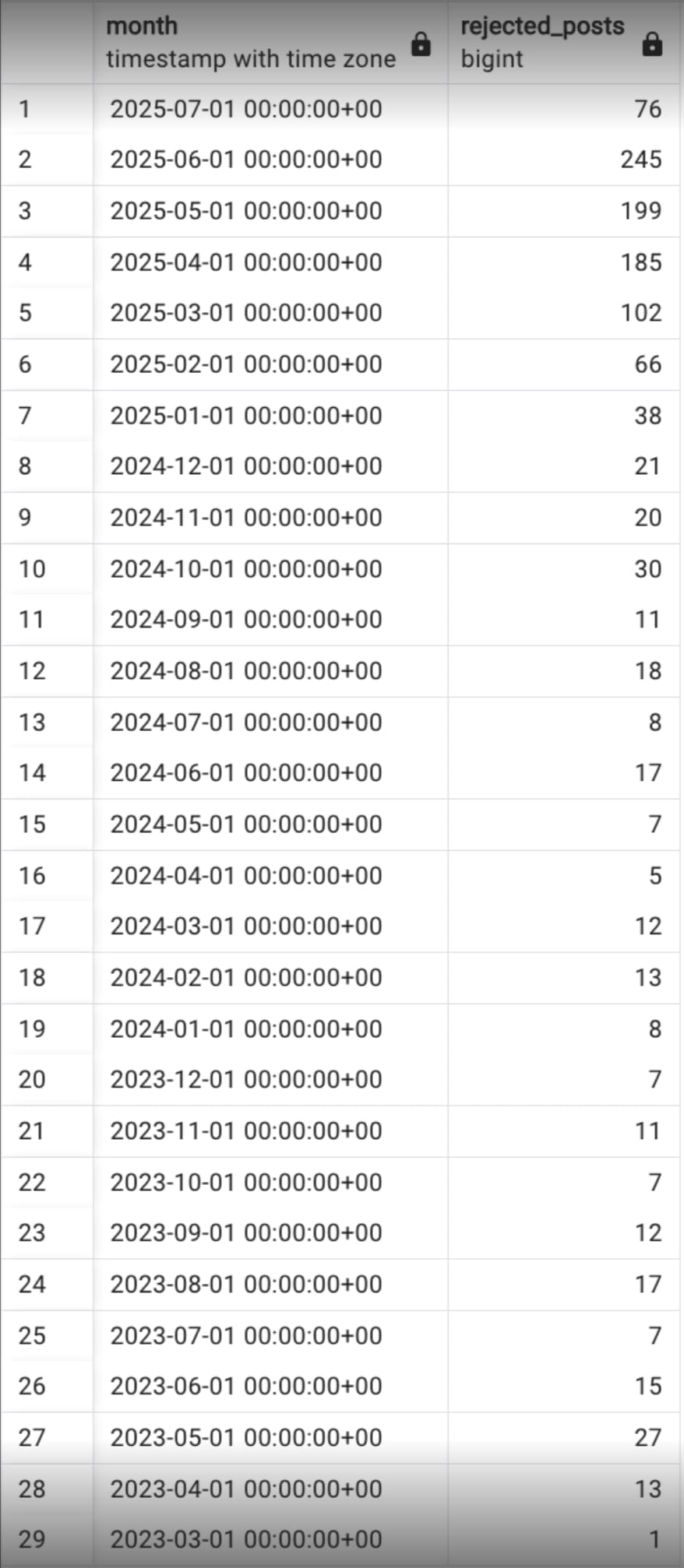

RobertM had made this table for another discussion on this topic; it looks like the actual average is maybe more like "8, as of last month", although on a noticeable uptick.

You can see that the average used to be < 1.

I'm slightly confused about this because the number of users we have to process each morning is consistently more like 30 and I feel like we reject more than half and probably more than 3/4 for being LLM slop, but that might be conflating some clusters of users, as well as "it's annoying to do this task so we often put it off a bit and that results in them bunching up." (although it's pretty common to see numbers more like 60)

[edit: Robert reminds me this doesn't include comments, which was another 80 last month]

Again you can look at https://www.lesswrong.com/moderation#rejected-posts to see the actual content and verify numbers/quality for yourself.

Again you can look at https://www.lesswrong.com/moderation#rejected-posts to see the actual content and verify numbers/quality for yourself.

Having just done so, I now have additional appreciation for LW admins; I didn't realize the role involved wading through so much of this sort of thing. Thank you!

Did you mean to reply to that parent?

I was part of the study actually. For me, I think a lot of the productivity gains were lost from starting to look at some distraction while waiting for the LLM and then being "afk" for a lot longer than the prompt took to run. However! I just discovered that Cursor has exactly the feature I wanted them to have: a bell that rings when your prompt is done. Probably that alone is worth 30% of the gains.

Other than that, the study started in February (?). The models have gotten a lot better in just the past few months such that even if the study was true for the average time it was run, I don't expect it to be true now or in another three months (unless the devs are really bad at using AI actually or something).

Subjectively, I spend less time now trying to wrangle a solution out of them, and a lot more often it works pretty quickly.

Reading through Backdoors as an analogy for deceptive alignment prompted me to think about a LW feature I might be interested in. I don't have much math background, and have always found it very effortful to parse math-heavy posts. I expect there are other people in a similar boat.

In modern programming IDEs it's common to have hoverovers for functions and variables, and I think it's sort of crazy that we don't have that for math. So, I'm considering a LessWrong feature that:

- takes in a post (i.e. when you save or go to publish a draft)

- identifies the LaTeX terms in the post

- creates a glossary for what each term means. (This should probably require confirmation by the author)

- makes a hoverover for each term so when you mouseover it reminds you.
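To make the steps above concrete, here's a rough sketch of the pipeline in Python. Everything in it is an assumption for illustration, not how LessWrong's editor actually works: the function names are hypothetical, it only recognizes inline `$...$` math, the glossary starts blank for the author to fill in or confirm, and the "hoverover" is just an HTML `title` attribute.

```python
import html
import re

# Assumption: LaTeX terms appear as inline math delimited by single dollar signs.
INLINE_MATH = re.compile(r"\$([^$\n]+)\$")


def find_latex_terms(post: str) -> list[str]:
    """Return the distinct LaTeX terms in the post, in order of first appearance."""
    seen: dict[str, None] = {}
    for match in INLINE_MATH.finditer(post):
        seen.setdefault(match.group(1).strip(), None)
    return list(seen)


def draft_glossary(terms: list[str]) -> dict[str, str]:
    """Create a blank glossary entry per term, to be filled in and confirmed by the author."""
    return {term: "" for term in terms}


def add_hoverovers(post: str, glossary: dict[str, str]) -> str:
    """Wrap each term that has a confirmed definition in a span whose title shows on mouseover."""

    def wrap(match: re.Match) -> str:
        term = match.group(1).strip()
        definition = glossary.get(term, "")
        if not definition:
            return match.group(0)  # leave terms without a definition untouched
        title = html.escape(definition, quote=True)
        return '<span title="{}">{}</span>'.format(title, match.group(0))

    return INLINE_MATH.sub(wrap, post)
```

In this sketch, the author-confirmation step is just "edit the dict returned by `draft_glossary` before calling `add_hoverovers`"; a real version would presumably have an LLM propose the definitions first.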

On "Backdoors", I asked the LessWrong-integrated LLM: "what do the Latex terms here mean"?

It replied:

...The LaTeX symbols in this passage represent mathematical notations. Let me explain each of them:

- $\mathcal{F}$: This represents a class of functions. The curly F denotes that it's a set or collection of functions.

- $f \in \mathcal{F}$: This means that $f$ is a function that belongs to (is an element of) the class $\mathcal{F}$.

- : The asterisk superscript typ

The “prompt shut down” clause seemed like one of the more important clauses in the SB 1047 bill. I was surprised other people I talked to didn't seem to think it mattered that much, and wanted to argue/hear-arguments about it.

The clause says AI developers, and compute-cluster operators, are required to have a plan for promptly shutting down large AI models.

People's objections were usually:

"It's not actually that hard to turn off an AI – it's maybe a few hours of running around pulling plugs out of server racks, and it's not like we're that likely to be in the sort of hard takeoff scenario where the differences in a couple hours of manually turning it off will make the difference."

I'm not sure if this is actually true, but, assuming it's true, it still seems to me like the shutdown clause is one of the more uncomplicatedly-good parts of the bill.

Some reasons:

1. I think the ultimate end game for AI governance will require being able to quickly notice and shut down rogue AIs. That's what it means for the acute risk period to end.

2. In the more nearterm, I expect the situation where we need to stop running an AI to be fairly murky. Shutting down an AI is going to be ve...

Largely agree with everything here.

But, I've heard some people be concerned "aren't basically all SSP-like plans basically fake? is this going to cement some random bureaucratic bullshit rather than actual good plans?" And yeah, that does seem plausible.

I do think that all SSP-like plans are basically fake, and I’m opposed to them becoming the bedrock of AI regulation. But I worry that people take the premise “the government will inevitably botch this” and conclude something like “so it’s best to let the labs figure out what to do before cementing anything.” This seems alarming to me. Afaict, the current world we’re in is basically the worst case scenario—labs are racing to build AGI, and their safety approach is ~“don’t worry, we’ll figure it out as we go.” But this process doesn’t seem very likely to result in good safety plans either; charging ahead as is doesn’t necessarily beget better policies. So while I certainly agree that SSP-shaped things are woefully inadequate, it seems important, when discussing this, to keep in mind what the counterfactual is. Because the status quo is not, imo, a remotely acceptable alternative either.

Afaict, the current world we’re in is basically the worst case scenario

the status quo is not, imo, a remotely acceptable alternative either

Both of these quotes display types of thinking which are typically dangerous and counterproductive, because they rule out the possibility that your actions can make things worse.

The current world is very far from the worst-case scenario (even if you have very high P(doom), it's far away in log-odds) and I don't think it would be that hard to accidentally make things considerably worse.

I largely agree that the "full shutdown" provisions are great. I also like that the bill requires developers to specify circumstances under which they would enact a shutdown:

(I) Describes in detail the conditions under which a developer would enact a full shutdown.

In general, I think it's great to help governments understand what kinds of scenarios would require a shutdown, make it easy for governments and companies to enact a shutdown, and give governments the knowledge/tools to verify that a shutdown has been achieved.

I feel so happy that "what's your crux?" / "is that cruxy" is common parlance on LW now, it is a meaningful improvement over the prior discourse. Thank you CFAR and whoever was part of the generation story of that.

"Is that cruxy" approximately means "is this proposition load bearing for your opinion on the broader topic we are discussing?". I.e. if you are discussing whether god exists, and then in the process of that hit the question of whether historical Jesus was real, then one person can say "is this cruxy?" to mean "would you actually change your mind (or at least substantially update) on whether god exists if historical Jesus did in fact exist?".

My Current Metacognitive Engine

Someday I might work this into a nicer top-level post, but for now, here's the summary of the cognitive habits I try to maintain (and reasonably succeed at maintaining). Some of these are simple TAPs, some of them are more like mindsets.

- Twice a day, asking “what is the most important thing I could be working on and why aren’t I on track to deal with it?”

- you probably want a more specific question (“important thing” is too vague). Three example specific questions (but, don’t be a slave to any specific operationalization)

- what is the most important uncertainty I could be reducing, and how can I reduce it fastest?

- what’s the most important resource bottleneck I can gain, or contribute to the ecosystem, and what would gain me that resource the fastest?

- what’s the most important goal I’m backchaining from?

- Have a mechanism to iterate on your habits that you use every day, and frequently update in response to new information

- for me, this is daily prompts and weekly prompts, which are:

- optimized for being the efficient metacognition I obviously want to do each day

- include one skill that I want to level up in, that I can do in the morning as part of the meta-orienting (su

I want to be able to talk about the tribal-ish dynamics in how LW debates AI (which feels indeed pretty tribal and bad on multiple sides).

A thing that feels tricky about this is that talking about it in any reasonable concise way involves, well, grouping people into groups, which is sort of playing into the very tribal dynamic I'd like us to back out of.

If we weren't so knee-deep in the problem, it'd seem plausible that the right move is just "try to be the non-tribal conversation you want to exist in the world." But, we seem pretty knee-deep in it and a few marginal reasonable conversations aren't going to solve the problem.

My default plan is "just talk about the groups, add a couple caveats about it", which seems better to me than not-doing-that. But, I do wish I had a better option and am curious for people's takes.

Some instincts:

- Try hard to name the positive things that different groups believe in rather than simply what they don't like.

- Try hard to name their strengths rather than simply what (others might see as) their flaws.

- Default to talk fairly abstractly when possible, to avoid accidentally re-litigating a lot of specific conflicts that aren't necessary, and avoid making people feel singled out. (This is somewhat in conflict with the virtues of concreteness and precision; not quite sure how to describe the synthesis of these.)

- Be very hesitant to reify groups or forces when it's not necessary. Due to the way human psychology works, it's very easy to bring into existence a political group or battle that didn't exist by careless use of words and names. I think the biggest risk is in reifying groups or tribes or conflicts that don't exist and don't need to exist. (Link to me writing about this before.)

For instance, if Tom proposes a group norm of always including epistemic statuses at the top of posts, and there's a conflict about it, there are better and worse ways of naming sides.

- "The people who hate Tom" and "The people who like Tom" is worse than "The people for mandatory epistem

It seems like everyone is tired of hearing every other group's opinions about AI. Since like 2005, Eliezer has been hearing people say a superintelligent AI surely won't be clever, and has had enough. The average LW reader is tired of hearing obviously dumb Marc Andreessen accelerationist opinions. The average present harms person wants everyone to stop talking about the unrealistic apocalypse when artists are being replaced by shitty AI art. The average accelerationist wants everyone to stop talking about the unrealistic apocalypse when they could literally cure cancer and save Western civilization. The average NeurIPS author is sad that LLMs have made their expertise in Gaussian kernel wobblification irrelevant. Various subgroups of LW readers are dissatisfied with people who think reward is the optimization target, Eliezer is always right, or discussion is too tribal, or whatever.

With this combined with how Twitter distorts discourse, is it any wonder that people need to process things as "oh, that's just another claim by X group, time to dismiss"? Anyway I think naming the groups isn't the problem, and so naming the groups in the post isn't contributing to the problem much. The important thing to address is why people find it advantageous to track these groups.

fwiw this seems basically what's happening to me. (the comment reads kinda defeatist about it, but, not entirely sure what you were going for, and the model seems right, if incomplete. [edit: I agree that several of the statements about entire groups are not literally true for the entire group, when I say 'basically right' I mean "the overall dynamic is an important gear, and I think among each group there's a substantial chunk of people who are tired in the way Thomas depicts"])

On my own end, when I'm feeling most tribal-ish or triggered, it's when someone/people are looking to me like they are "willfully not getting it". And, I've noticed a few times on my end where I'm sort of willfully not getting it (sometimes while trying to do some kind of intellectual bridging, which I bet is particularly annoying).

I'm not currently optimistic about solving twitter.

The angle I felt most optimistic about on LW is aiming for a state where a few prominent-ish* people... feel like they get understood by each other at the same time, and can chill out at the same time. This maybe works IFF there are some people who:

a) aren't completely burned out on the "try to communicate / actually have a good ...

A few reasons I don't mind the Thomas comment:

- I've found it's often actually better for group processing of ideas-and-emotions for there to be nonzero ranting about what you really feel in your heart even if not fully accurate. (This is also very risky, and having it be net-positive is tricky, but, often when I see people trying to dance around the venting you can feel it leaking through the veneer of politeness)

- I think Thomas's comment is slightly exaggerated but... idk basically correct in overall thrust, and an important gear? (I agree whenever you say "this group thinks X", obviously lots of people in that group will not think X)

- While the comment paints people with a negative brush, it does paint a bunch of different people in a negative brush, such that it's more about painting the overall dynamic in a negative light than the individual people, in my reading.

Motif coming up for me: a lot of skill ceilings are much higher than you might think, and worth investing in.

Some skills that you can be way better at:

- Listening to people, and hearing what they're actually trying to say, and gaining value from it

- Noticing subtle things that are important. You can learn to notice like 5 different things happening inside you or around you, that occurred in <1 second.

- Being concrete, in ways that help you resolve confusion and gain momentum on solving problems.

- Each stage of OODA Looping is quite deep

- (i.e. "Observe", "Orient", "Decide", and "Act" each have a lot of deep subskills. The depth of "Noticing" is a subset of the overall set of "Observation" skills)

I wanted to write up a post on "what implicit bets am I making?". I first had to write up "what am I doing and why am I doing it?", to help me tease out "okay, so what are my assumptions?"

My broad strategy right now is "spend last year and this year focusing on 'waking up humanity'" (with some amount of "maintain infrastructure" and "push some longterm projects along that I've mostly outsourced")

The win condition I am roughly backchaining from is:

- We get a halfhearted worldwide pause or slowdown, that buys at least a few years

- We figure out how to Get a Lot of Alignment Research Done Real Fast

- We have good enough communication/coordination that the powers-that-be can make some fairly high stakes, nuanced decisions about how to deploy increasingly advanced and fast paced AI.

Other nearby worlds I'm keeping in mind are:

- There is no pause, so we just gotta Get a Lot of Alignment Research Done Real REAL Fast

- We get a very long pause, which means we can afford to be more careful about how we Get Our Alignment Research Done, but we also need to maintain good international comms/coordination/wisdom for decades, which is differently tricky.

- There's basically no "Alignment is Solved" moment

There was a particular mistake I made over in this thread. Noticing the mistake didn't change my overall position (and also my overall position was even weirder than I think people thought it was). But, seemed worth noting somewhere.

I think most folk morality (or at least my own folk morality), generally has the following crimes in ascending order of badness:

- Lying

- Stealing

- Killing

- Torturing people to death (I'm not sure if torture-without-death is generally considered better/worse/about-the-same-as killing)

But this is the conflation of a few different things. One axis I was ignoring was "morality as coordination tool" vs "morality as 'doing the right thing because I think it's right'." And these are actually quite different. And, importantly, you don't get to spend many resources on morality-as-doing-the-right-thing unless you have a solid foundation of the morality-as-coordination-tool.

There's actually a 4x3 matrix you can plot lying/stealing/killing/torture-killing into which are:

- harming the ingroup

- harming the outgroup (who you may benefit from trading with)

- harming powerless people who don't have the ability to trade or col

On the object level, the three levels you described are extremely important:

- harming the ingroup

- harming the outgroup (who you may benefit from trading with)

- harming powerless people who don't have the ability to trade or collaborate with you

I'm basically never talking about the third thing when I talk about morality or anything like that, because I don't think we've done a decent job at the first thing. I think there's a lot of misinformation out there about how well we've done the first thing, and I think that in practice utilitarian ethical discourse tends to raise the message length of making that distinction, by implicitly denying that there's an outgroup.

I don't think ingroups should be arbitrary affiliation groups. Or, more precisely, "ingroups are arbitrary affiliation groups" is one natural supergroup which I think is doing a lot of harm, and there are other natural supergroups following different strategies, of which "righteousness/justice" is one that I think is especially important. But pretending there's no outgroup is worse than honestly trying to treat foreigners decently as foreigners who can't be c...

Some beliefs of mine, I assume different from Ben's but I think still relevant to this question are:

At the very least, your ability to accomplish anything re: helping the outgroup or helping the powerless is dependent on having spare resources to do so.

There are many clusters of actions which might locally benefit the ingroup and leave the outgroup or powerless in the cold, but which then enable future generations of ingroup more ability to take useful actions to help them. i.e. if you're a tribe in the wilderness, I much rather you invent capitalism and build supermarkets than that you try to help the poor. The helping of the poor is nice but barely matters in the grand scheme of things.

I don't personally think you need to halt *all* helping of the powerless until you've solidified your treatment of the ingroup/outgroup. But I could imagine future me changing my mind about that.

A major suspicion/confusion I have here is that the two frames:

- "Help the ingroup, so that the ingroup eventually has the bandwidth and slack to help the outgroup and the powerless", and

- "Help the ingroup, because it's convenient and they're the ingroup"

Look...

This feels like the most direct engagement I've seen from you with what I've been trying to say. Thanks! I'm not sure how to describe the metric on which this is obviously to-the-point and trying-to-be-pin-down-able, but I want to at least flag an example where it seems like you're doing the thing.

Inspired by a recent comment, a potential AI movie or TV show that might introduce good ideas to society, is one where there are already uploads, LLM-agents and biohumans who are beginning to get intelligence-enhanced, but there is a global moratorium on making any individual much smarter.

There's an explicit plan for gradually ramping up intelligence, running on tech that doesn't require ASI (i.e. datacenters are centralized, monitored and controlled via international agreement, studying bioenhancement or AI development requires approval from your country's FDA equivalent). There is some illegal research but it's much less common. i.e. the Controlled Takeoff is working a'ight.

If it were a TV show, the first season would mostly be exploring how uploads, ambiguously-sentient-LLMs, enhanced humans and regular humans coexist.

Main character is an enhanced human, worried about uploads gaining more political power because there are starting to be more of them, and research to speed them up or improve them is easier.

Main character has parents and a sibling or friend who are choosing to remain unenhanced, and there is some conflict about it.

By the end of season 1, there's a subplot about ...

It's Petrov week! Reminder, if you are running a local Petrov meetup of some kind, you can create a LW event and click the "Petrov" button (next to the "LW" "SSC" etc buttons), to have it show up on the meetup map.

(You can also click this link to have it automatically populated with the Petrov tag)

If you don't want it to be fully public, I recommend putting in the city location and some kind of contact-info so people can ping you, and you can make a call as to whether you have room for more people, or whether you think the people reaching out would be good for your event vibe.

...

I do think the Petrov Day ceremony is a pretty nice experience. It feels like... a real holiday? Like, I've attended Jewish Seders and it's got a very similar vibe of "we're here to appreciate our history and some values we care about."

Jim's latest version [edit: updated to be the correct one for printing doublesided] of the booklet works to bridge the connection between the long arc of history (i.e. appreciate what might be lost), the Petrov incident in particular, and current worries about x-risk from AI.

If you're less into AI, you might also look at Ozy Brennan's version, which focuses more directl...

Periodically I describe a particular problem with the rationalsphere with the programmer metaphor of:

"For several years, CFAR took the main LW Sequences Git Repo and forked it into a private branch, then layered all sorts of new commits, ran with some assumptions, and tweaked around some of the legacy code a bit. This was all done in private organizations, or in-person conversation, or at best, on hard-to-follow-and-link-to-threads on Facebook.

"And now, there's a massive series of git-merge conflicts, as important concepts from CFAR attempt to get merged back into the original LessWrong branch. And people are going, like 'what the hell is focusing and circling?'"

And this points towards an important thing about _why_ I think it's important to keep people actually writing down and publishing their longform thoughts (esp the people who are working in private organizations).

And I'm not sure how to actually really convey it properly _without_ the programming metaphor. (Or, I suppose I just could. Maybe if I simply remove the first sentence the description still works. But I feel like the first sentence does a lot of important work in communicating it clearly)

We have enough programmers that I can basically get away with it anyway, but it'd be nice to not have to rely on that.

There's a skill of "quickly operationalizing a prediction, about a question that is cruxy for your decisionmaking."

And, it's dramatically better to be very fluent at this skill, rather than "merely pretty okay at it."

Fluency means you can actually use it day-to-day to help with whatever work is important to you. Day-to-day usage means you can actually get calibrated re: predictions in whatever domains you care about. Calibration means that your intuitions will be good, and _you'll know they're good_.

Fluency means you can do it _while you're in the middle of your thought process_, and then return to your thought process, rather than awkwardly bolting it on at the end.

I find this useful at multiple levels-of-strategy. i.e. for big picture 6 month planning, as well as for "what do I do in the next hour."
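As a concrete illustration of what "getting calibrated" can cash out to, here's a minimal sketch that scores a log of day-to-day predictions. The log format (a stated probability plus whether the thing happened), the function names, and the example data are all assumptions for illustration; the Brier score and 10%-wide buckets are standard tools I'm plugging in, not a specific method from this post.

```python
from collections import defaultdict


def brier_score(predictions):
    """Mean squared gap between stated probability and outcome (0.0 is perfect)."""
    return sum((p - float(outcome)) ** 2 for p, outcome in predictions) / len(predictions)


def calibration_table(predictions):
    """Bucket predictions by stated probability (nearest 10%) and report, per bucket,
    how many predictions were made and what fraction came true."""
    buckets = defaultdict(list)
    for p, outcome in predictions:
        buckets[round(p, 1)].append(outcome)
    return {
        bucket: (len(outcomes), sum(outcomes) / len(outcomes))
        for bucket, outcomes in sorted(buckets.items())
    }


# Hypothetical log: (probability I assigned, whether it actually happened).
log = [
    (0.9, True), (0.9, True), (0.9, False),
    (0.6, True), (0.6, False),
    (0.2, False),
]

print(brier_score(log))
for bucket, (count, hit_rate) in calibration_table(log).items():
    print(f"said {bucket:.0%}: {count} predictions, {hit_rate:.0%} came true")
```

If the "came true" rate in each bucket roughly matches the stated probability, your intuitions in that domain are calibrated, and you have the record to know it. With only a handful of predictions per bucket the numbers are noisy, which is part of why fluency (making lots of quick predictions) matters.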

I'm working on this as a full blogpost but figured I would start getting pieces of it out here for now.

A lot of this skill is building off of CFAR's "inner simulator" framing. Andrew Critch recently framed this to me as "using your System 2 (conscious, deliberate intelligence) to generate questions for your System 1 (fast intuition) to answer." (Whereas previously, he'd known System 1 ...

I disagree with this particular theunitofcaring post, "what would you do with 20 billion dollars?", and I think this is possibly the only area where I disagree with theunitofcaring's overall philosophy, so it seemed worth mentioning. (This crops up occasionally in her other posts, but it is most clear-cut here.)

I think if you got 20 billion dollars and didn't want to think too hard about what to do with it, donating to the Open Philanthropy Project is a pretty decent fallback option.

But my overall take on how to handle the EA funding landscape has changed a bit in the past few years. Some things that theunitofcaring doesn't mention here, which seem to at least warrant thinking about:

[Each of these has a bit of a citation-needed, that I recall hearing or reading in reliable sounding places, but correct me if I'm wrong or out of date]

1) OpenPhil has (at least? I can't find more recent data) 8 billion dollars, and makes something like 500 million a year in investment returns. They are currently able to give 100 million away a year.

They're working on building more capacity so they can give more. But for the foreseeable future, they _can't_ actually spend more m...

Something struck me recently, as I watched Kubo, and Coco - two animated movies that both deal with death, and highlight music and storytelling as mechanisms by which we can preserve people after they die.

Kubo begins "Don't blink - if you blink for even an instant, if you miss a single thing, our hero will perish." This is not because there is something "important" that happens quickly that you might miss. Maybe there is, but it's not the point. The point is that Kubo is telling a story about people. Those people are now dead. And insofar as those people are able to be kept alive, it is by preserving as much of their personhood as possible - by remembering as much as possible from their life.

This is generally how I think about death.

Cryonics is an attempt at the ultimate form of preserving someone's pattern forever, but in a world pre-cryonics, the best you can reasonably hope for is for people to preserve you so thoroughly in story that a young person from the next generation can hear the story, and palpably feel the underlying character, rich with inner life. Can see the person so clearly that he or she comes to live inside them.

Realistical...

I wanted to just reply something like "<3" and then became self-conscious of whether that was appropriate for LW.

In particular, I think if we make the front-page comments section filtered by "curated/frontpage/community" (i.e. you only see community-blog comments on the frontpage if your frontpage is set to community), then I'd feel more comfortable posting comments like "<3", which feels correct to me.

A major goal I had for the LessWrong Review was to be "the intermediate metric that let me know if LW was accomplishing important things", which helped me steer.

I think it hasn't super succeeded at this.

I think one problem is that it just... feels like it generates stuff people liked reading, which is different from "stuff that turned out to be genuinely important."

I'm now wondering "what if I built a power-tool that is designed for a single user to decide which posts seem to have mattered the most (according to them), and, then, figure out which intermediate posts played into them." What would the lightweight version of that look like?

Another thing is, like, I want to see what particular other individuals thought mattered, as opposed to a general aggregate that doesn't have any theory underlying it. Making the voting public veers towards some kind of "what did the cool people think?" contest, so I feel anxious about that, but, I do think the info is just pretty useful. But like, what if the output of the review is a series of individual takes on what-mattered-and-why, collectively, rather than an aggregate vote?

Yesterday I was at a "cultivating curiosity" workshop beta-test. One concept was "there are different mental postures you can adopt, that affect how easy it is to notice and cultivate curiosities."

It wasn't exactly the point of the workshop, but I ended up with several different "curiosity-postures", that were useful to try on while trying to lean into "curiosity" re: topics that I feel annoyed or frustrated or demoralized about.

The default stances I end up with when I Try To Do Curiosity On Purpose are something like:

1. Dutiful Curiosity (which is kinda fake, although capable of being dissociatedly autistic and noticing lots of details that exist and questions I could ask)

2. Performatively Friendly Curiosity (also kinda fake, but does shake me out of my default way of relating to things. In this, I imagine saying to whatever thing I'm bored/frustrated with "hullo!" and try to acknowledge it and give it at least some chance of telling me things)

But some other stances to try on, that came up, were:

3. Curiosity like "a predator." "I wonder what that mouse is gonna do?"

4. Earnestly playful curiosity. "oh that [frustrating thing] is so neat, I wonder how it works! what's it gonna ...

I started writing this a few weeks ago. By now I have other posts that make these points more cleanly in the works, and I'm in the process of thinking through some new thoughts that might revise bits of this.

But I think it's going to be awhile before I can articulate all that. So meanwhile, here's a quick summary of the overall thesis I'm building towards (with the "Rationalization" and "Sitting Bolt Upright in Alarm" post, and other posts and conversations that have been in the works).

(By now I've had fairly extensive chats with Jessicata and Benquo and I don't expect this to add anything that I didn't discuss there, so this is more for other people who're interested in staying up to speed. I'm separately working on a summary of my current epistemic state after those chats)

- The rationalsphere isn't great at applying rationality to its own internal politics

- We don't seem to do much better than average. This seems like something that's at least pretty sad, even if it's a true brute fact about the world.

- There have been some efforts to fix this fact, but most of it has seemed (to me) to be missing key

In that case Sarah later wrote up a followup post that was more reasonable and Benquo wrote up a post that articulated the problem more clearly. [Can't find the links offhand].

"Reply to Criticism on my EA Post", "Between Honesty and Perjury"

I've posted this on Facebook a couple times but seems perhaps worth mentioning once on LW: A couple weeks ago I registered the domain LessLong.com and redirected it to LessWrong.com/shortform. :P

Conversation with Andrew Critch today, in light of a lot of the nonprofit legal work he's been involved with lately. I thought it was worth writing up:

"I've gained a lot of respect for the law in the last few years. Like, a lot of laws make a lot more sense than you'd think. I actually think looking into the IRS codes would actually be instructive in designing systems to align potentially unfriendly agents."

I said "Huh. How surprised are you by this? And curious if your brain was doing one particular pattern a few years ago that you can now see as wrong?"

"I think mostly the laws that were promoted to my attention were especially stupid, because that's what was worth telling outrage stories about. Also, in middle school I developed this general hatred for stupid rules that didn't make any sense and generalized this to 'people in power make stupid rules', or something. But, actually, maybe middle school teachers are just particularly bad at making rules. Most of the IRS tax code has seemed pretty reasonable to me."

Every now and then I'm like "smart phones are killing America / the world, what can I do about that?".

Where I mean: "Ubiquitous smart phones mean most people are interacting with websites in a fairly short attention-space, less info-dense-centric way. Not only that, but because websites must have a good mobile version, you probably want your website to be mobile-first or at least heavily mobile-optimized, and that means it's hard to build features that only really work when users have a large amount of screen space."

I'd like some technological solution that solves the problems smartphones solve but somehow changes the default equilibria here, that has a chance at global adoption.

I guess the answer these days is "prepare for the switch to a fully LLM voice-controlled Star Trek / Her world where you are mostly talking to it" (maybe with a side-option of "AR goggles", but I'm less optimistic about that).

I think the default way those play out will be very attention-economy-oriented, and I'm wondering if there's a way to get ahead of that and build something deeply good that might actually sell well.

Over in this thread, Said asked the reasonable question "who exactly is the target audience with this Best of 2018 book?"

By compiling the list, we are saying: “here is the best work done on Less Wrong in [time period]”. But to whom are we saying this? To ourselves, so to speak? Is this for internal consumption—as a guideline for future work, collectively decided on, and meant to be considered as a standard or bar to meet, by us, and anyone who joins us in the future?

Or, is this meant for external consumption—a way of saying to others, “see what we have accomplished, and be impressed”, and also “here are the fruits of our labors; take them and make use of them”? Or something else? Or some combination of the above?

I'm working on a post that goes into a bit more detail about the Review Phase, and, to be quite honest, the whole process is a bit in flux – I expect us (the LW team as well as site participants) to learn, over the course of the review process, what aspects of it are most valuable.

But, a quick "best guess" answer for now.

I see the overall review process as having two "major phases":

- Phase 1: Nomination/Review/Voting/Post-that-summarizes-the-voting

- Phase 2: Compila

So, I think I need to distinguish between "Feedbackloop-first Rationality" (which is a paradigm for inventing rationality training) and "Ray's particular flavor of metastrategy, which I used feedbackloop-first rationality to invent" (which, if I had to give a name, I'd call "Fractal Strategy"[1], but that sounds sort of pretentious and normally I just call it "Metastrategy" even though it's too vague)

Feedbackloop-first Rationality is about the art of designing exercises, and thinking about what sort of exercises apply across domains, thinking about what feedback loops will turn out to help longterm, and which feedbackloops will generalize, etc.

"Fractal Strategy" is the art of noticing what goal you're currently pursuing, whether you should switch goals, and what tactics are appropriate for your current goal, in a very fluid way (while making predictions about those strategy outcomes).

Feedbackloop-first-rationality isn't actually relevant to most people – it's really only relevant if you're a longterm rationality developer. Most people just want some tools that work for them, they aren't going to invest enough to be inventing their own tools. Almost all my workshops/sessions/exe...

A thing I might have maybe changed my mind about:

I used to think a primary job of a meetup/community organizer was to train their successor, and develop longterm sustainability of leadership.

I still hold out for that dream. But, it seems like a pattern is:

1) community organizer with passion and vision founds a community

2) they eventually move on, and pass it on to one successor who's pretty closely aligned and competent

3) then the First Successor has to move on too, and then... there isn't anyone obvious to take the reins, but if no one does the community dies, so some people reluctantly step up. and....

...then forever after it's a pale shadow of its original self.

For semi-branded communities (such as EA, or Rationality), this also means that if someone new with energy/vision shows up in the area, they'll see a meetup, they'll show up, they'll feel like the meetup isn't all that good, and then move on. Whereas otherwise they (maybe??) might have founded a new one that they got to shape the direction of more.

I think this also applies to non-community organizations (i.e. founder hands the reins to a new CEO who hands the reins to a new CEO who doesn't quite know what to do)

So... I'm kinda wonde...

From Wikipedia: George Washington, which cites Korzi, Michael J. (2011). Presidential Term Limits in American History: Power, Principles, and Politics page 43, -and- Peabody, Bruce G. (September 1, 2001). "George Washington, Presidential Term Limits, and the Problem of Reluctant Political Leadership". Presidential Studies Quarterly. 31 (3): 439–453:

At the end of his second term, Washington retired for personal and political reasons, dismayed with personal attacks, and to ensure that a truly contested presidential election could be held. He did not feel bound to a two-term limit, but his retirement set a significant precedent. Washington is often credited with setting the principle of a two-term presidency, but it was Thomas Jefferson who first refused to run for a third term on political grounds.

A note on the part that says "to ensure that a truly contested presidential election could be held": at this time, Washington's health was failing, and he indeed died during what would have been his 3rd term if he had run for a 3rd term. If he had died in office, he would have been immediately succeeded by the Vice President, which would set an unfortunate precedent of presidents serving until they die, then being followed by an appointed heir until that heir dies, blurring the distinction between the republic and a monarchy.

Posts I vaguely want to have been written so I can link them to certain types of new users:

- "Why you can chill out about the basilisk and acausal blackmail." (The current Roko's Basilisk kinda tries to be this, but there's a type of person who shows up on LessWrong regularly who's caught in an anxious loop that keeps generating more concerns, and I think the ideal article here is more trying to break them out of the anxious loop than comprehensively explain the game theory.)

- "FAQ: Why you can chill out about quantum immortality and everything adds up to normality." (Similar, except the sort of person who gets worked up about this is usually having a depressive spiral and worried about being trapped in an infinite hellscape)

Crossposted from my Facebook timeline (and, in turn, crossposted there from vaguely secret, dank corners of the rationalsphere)

“So Ray, is LessLong ready to completely replace Facebook? Can I start posting my cat pictures and political rants there?”

Well, um, hmm....

So here’s the deal. I do hope someday someone builds an actual pure social platform that’s just actually good, that’s not out-to-get you, with reasonably good discourse. I even think the LessWrong architecture might be good for that (and if a team wanted to fork the codebase, they’d be welcome to try)

But LessWrong shortform *is* trying to do a bit of a more nuanced thing than that.

Shortform is for writing up early stage ideas, brainstorming, or just writing stuff where you aren’t quite sure how good it is or how much attention to claim for it.

For it to succeed there, it’s really important that it be a place where people don’t have to self-censor or stress about how their writing comes across. I think intellectual progress depends on earnest curiosity, exploring ideas, sometimes down dead ends.

I even think it involves clever jokes sometimes.

But... I dunno, if I looked ahead 5 years and saw that the Future People were using ...

Just spent a weekend at the Internet Intellectual Infrastructure Retreat. One thing I came away with was a slightly better sense of forecasting and prediction markets, and how they might be expected to unfold as an institution.

I initially had a sense that forecasting, and predictions in particular, was sort of "looking at the easy to measure/think about stuff, which isn't necessarily the stuff that's connected to stuff that matters most."

Tournaments over Prediction Markets

Prediction markets are often illegal or sketchily legal. But prediction tournaments are not, so this is how most forecasting is done.

The Good Judgment Project

Held an open tournament, the winners of which became "Superforecasters". Those people now... I think basically work as professional forecasters, who rent out their services to companies, NGOs and governments that have a concrete use for knowing how likely a given country is to go to war, or something. (I think they'd been hired sometimes by Open Phil?)

Vague impression that they mostly focus on geopolitics stuff?

High Volume and Metaforecasting

Ozzie described a vision where lots of forecasters are predicting things all the time...

More in neat/scary things Ray noticed about himself.

I set aside this week to learn about Machine Learning, because it seemed like an important thing to understand. One thing I knew, going in, is that I had a self-image as a "non technical person." (Or at least, non-technical relative to rationality-folk). I'm the community/ritual guy, who happens to have specialized in web development as my day job but that's something I did out of necessity rather than a deep love.

So part of the point of this week was to "get over myself, and start being the sort of person who can learn technical things in domains I'm not already familiar with."

And that went pretty fine.

As it turned out, after talking to some folk I ended up deciding that re-learning Calculus was the right thing to do this week. I'd learned it in college, but not in a way that connected to anything and gave me a sense of its usefulness.

And it turned out I had a separate image of myself as a "person who doesn't know Calculus", in addition to "not a technical person". This was fairly easy to overcome since I had already given myself a bunch of space to explore and change this week, and I'd spent the past few months transitioning into being ready for it. But if this had been at an earlier stage of my life and if I hadn't carved out a week for it, it would have been harder to overcome.

Man. Identities. Keep that shit small yo.

Also important to note that "learn Calculus this week" is a thing a person can do fairly easily without being some sort of math savant.

(Presumably not the full 'know how to do all the particular integrals and be able to ace the final' perhaps, but definitely 'grok what the hell this is about and know how to do most problems that one encounters in the wild, and where to look if you find one that's harder than that.' To ace the final you'll need two weeks.)

I didn't downvote, but I agree that this is a suboptimal meme – though the prevailing mindset of "almost nobody can learn Calculus" is much worse.

As a datapoint, it took me about two weeks of obsessive, 15 hour/day study to learn Calculus to a point where I tested out of the first two courses when I was 16. And I think it's fair to say I was unusually talented and unusually motivated. I would not expect the vast majority of people to be able to grok Calculus within a week, though obviously people on this site are not a representative sample.

Quite fair. I had read Zvi as speaking to typical LessWrong readership. Also, the standard you seem to be describing here is much higher than the standard Zvi was describing.

High Stakes Value and the Epistemic Commons

I've had this in my drafts for a year. I don't feel like the current version of it is saying something either novel or crisp enough to quite make sense as a top-level post, but wanted to get it out at least as a shortform for now.

There's a really tough situation I think about a lot, from my perspective as a LessWrong moderator. These are my personal thoughts on it.

The problem, in short:

Sometimes a problem is epistemically confusing, and there are probably political ramifications of it, such that the most qualified people to debate it are also in conflict with billions of dollars on the line and the situation is really high stakes (i.e. the extinction of humanity) such that it really matters we get the question right.

Political conflict + epistemic murkiness means that it's not clear what "thinking and communicating sanely" about the problem looks like, and people have (possibly legitimate) reasons to be suspicious of each other's reasoning.

High Stakes means that we can't ignore the problem.

I don't feel like our current level of rationalist discourse patterns are sufficient for this combo of high stakes, political conflict, and epistemi...

My personal religion involves two* gods – the god of humanity (who I sometimes call "Humo") and the god of the robot utilitarians (who I sometimes call "Robutil").

When I'm facing a moral crisis, I query my shoulder-Humo and my shoulder-Robutil for their thoughts. Sometimes they say the same thing, and there's no real crisis. For example, some naive young EAs try to be utility monks, donate all their money, never take breaks, only do productive things... but Robutil and Humo both agree that quality intellectual work requires slack and psychological health. (Both to handle crises and to notice subtle things, which you might need, even in emergencies)

If you're an aspiring effective altruist, you should definitely at least be doing all the things that Humo and Robutil agree on. (i.e. get to the middle point of Tyler Alterman's story here).

But Humo and Robutil in fact disagree on some things, and disagree on emphasis.

They disagree on how much effort you should spend to avoid accidentally recruiting people you don't have much use for.

They disagree on how many high schoolers it's acceptable to accidentally fuck up psychologically, while you experiment with a new program to...

Seems like different AI alignment perspectives sometimes are about "which thing seems least impossible."

Straw MIRI researchers: "building AGI out of modern machine learning is automatically too messy and doomed. Much less impossible to try to build a robust theory of agency first."

Straw Paul Christiano: "trying to get a robust theory of agency that matters in time is doomed, timelines are too short. Much less impossible to try to build AGI that listens reasonably to me out of current-gen stuff."

(Not sure if either of these are fair, or if other camps fit this)

(I got nerd-sniped by trying to develop a short description of what I do. The following is my stream of thought)

+1 to replacing "build a robust theory" with "get deconfused," and with replacing "agency" with "intelligence/optimization," although I think it is even better with all three. I don't think "powerful" or "general-purpose" do very much for the tagline.

When I say what I do to someone (e.g. at a reunion) I say something like "I work in AI safety, by doing math/philosophy to try to become less confused about agency/intelligence/optimization." (I don't think I actually have said this sentence, but I have said things close.)

I specifically say it with the slashes and not "and," because I feel like it better conveys that there is only one thing that is hard to translate, but could be translated as "agency," "intelligence," or "optimization."

I think it is probably better to also replace the word "about" with the word "around" for the same reason.

I wish I had a better word for "do." "Study" is wrong. "Invent" and "discover" both seem wrong, because it is more like "invent/discover", but that feels like it is overusing the slashes. Maybe "develop"? I think I like "invent" best. (Note...

Using "cruxiness" instead of operationalization for predictions.

One problem with making predictions is "operationalization." A simple-seeming prediction can have endless edge cases.

For personal predictions, I often think it's basically not worth worrying about it. Write something rough down, and then say "I know what I meant." But, sometimes this is actually unclear, and you may be tempted to interpret a prediction in a favorable light. And at the very least it's a bit unsatisfying for people who just aren't actually sure what they meant.

One advantage of cruxy predictions (aside from "they're actually particularly useful in the first place"), is that if you know what decision a prediction was a crux for, you can judge ambiguous resolution based on "would this actually have changed my mind about the decision?"

("Cruxiness instead of operationalization" is a bit overly click-baity. Realistically, you need at least some operationalization, to clarify for yourself what a prediction even means in the first place. But, I think maybe you can get away with more marginal fuzziness if you're clear on how the prediction was supposed to inform your decisionmaking)

I’ve noticed myself using “I’m curious” as a softening phrase without actually feeling “curious”. In the past 2 weeks I’ve been trying to purge that from my vocabulary. It often feels like I'm cheating, trying to pretend like I'm being a friend when actually I'm trying to get someone to do something. (Usually this is a person I'm working with, and it's not quite adversarial, we're on the same team, but it feels like it degrades the signal of true open curiosity)

Hmm, sure seems like we should deploy "tagging" right about now, mostly so you at least have the option of the frontpage not being All Coronavirus All The Time.

So there was a drought of content during Christmas break, and now... abruptly... I actually feel like there's too much content on LW. I find myself skimming down past the "new posts" section because it's hard to tell what's good and what's not and it's a bit of an investment to click and find out.

Instead I just read the comments, to find out where interesting discussion is.

Now, part of that is because the front page makes it easier to read comments than posts. And that's fixable. But I think, ultimately, the deeper issue is with the main unit-of-contribution being The Essay.

A few months ago, mr-hire said (on writing that provokes comments)

Ideas should become comments, comments should become conversations, conversations should become blog posts, blog posts should become books. Test your ideas at every stage to make sure you're writing something that will have an impact.

This seems basically right to me.

In addition to comments working as an early proving ground for an idea's merit, comments make it easier to focus on the idea, instead of getting wrapped up in writing something Good™.

I notice essays on the front page starting with flo...

I've heard ~"I don't really get this concept of 'intelligence in the limit'" a couple times this week.

Which seems worth responding to, but I'm not sure how.

It seemed like some combination of: "wait, why do we care about 'superintelligence in the limit' as opposed to any particular 'superintelligence-in-practice'?", as well as "what exactly do we mean by The Limit?" and "why would we think The Limit is shaped the way Yudkowsky thinks?"

My impression, based on my two most recent conversations about it, is that this is not only sort of cloudy and confusing feeling to some people, but, also, it's intertwined with a few other things that are separately cloudy and confusing. And also it's intertwined with other things that aren't cloudy and confusing per-se, but there's a lot of individual arguments to keep track of, so it's easy to get lost.

One ontology here is:

- it's useful to reason with nice abstractions that generalize to different situations.

- (It's easier to think about such abstractions at extremes, given simple assumptions)

- it's also useful to reason about the nitty-gritty details of a particular implementation of a thing.

- it's useful to be able to move back and forth between abstrac

Hrm. Let me try to give some examples of things I find comprehensible "in the limit" and other things I do not, to try to get it across. In general, grappling for principles, I think that

- (1) reasoning in the limit requires you to have a pretty specific notion of what you're pushing to the limit. If you're uncertain what the function f(x) stands for, or what "x" is, then talking about what f(x + 1000) looks like is gonna be tough. It doesn't get clearer just because it's further away.

- (2) if you can reason in the limit, you should be able to reason about the not-limit well. If you're really confused about what f(x + 1) looks like, even though you know f(x), then thinking about f(x + 10000) doesn't look any better.

So, examples and counterexamples and analogies.

The Neural Tangent Kernel is a theoretical framework meant to help understand what NNs do. It is meant to apply in the limit of an "infinite width" neural network. Notably, although I cannot test an infinite limit neural network, I can make my neural networks wider -- I know what it means to move X to X + 1, even though X -> inf is not available. People are (of course) uncertain if the NTK is true, but it at...

But the basic concept of "well, if it was imperfect at either not-getting-resource-pumped, or making suboptimal game theory choices, or if it gave up when it got stuck, it would know that it wasn't as cognitively powerful as it could be, and would want to find ways to be more cognitively powerful all-else-equal"... seems straightforward to me, and I'm not sure what makes it not straightforward seeming to others

I think there's a true and fairly straightforward thing here and also a non-straightforward-to-me and in fact imo false/confused adjacent thing. The true and fairly straightforward thing is captured by stuff like:

- as a mind grows, it comes to have more and better and more efficient technologies (e.g. you get electricity and you make lower-resistance wires)

- (relatedly) as a mind grows, it employs bigger constellations of parts that cohere (i.e., that work well together; e.g. [hand axes -> fighter jets] or [Euclid's geometry -> scheme-theoretic algebraic geometry])

- as a mind grows, it has an easier time getting any particular thing done, it sees more/better ways to do any particular thing, it can consider more/better plans for any particular thing, it has more and better meth

Is... there a compelling difference between stockholm syndrome and just, like, being born into a family?

LLM Automoderation Idea (we could try this on LessWrong but it feels like something that's, like, more naturally part of a forum that's designed-from-the-ground-up around it)

Authors can create moderation guidelines, which get enforced by LLMs that read new comments, and have access to some user metadata. Comments get deleted / etc by the LLM. (You can also have tools other than deletion, like collapsing comments by default)

The moderation guidelines are public. It's the commenter's job to write comments that pass.

Authors pay the fees for the LLMs doing the review.

(I'm currently thinking about this more like "I am interested in seeing what equilibria a setup like this would end up with" than like "this is a good idea")
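
A minimal sketch of what that loop could look like, in Python, mostly to make the moving parts concrete. Everything here is hypothetical: the `Thread` / `Comment` shapes, the `karma` field standing in for "user metadata", the flat `fee_per_review`, and especially `toy_judge`, which is a keyword stub standing in for a real LLM call (no actual model or API is used).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Literal

Action = Literal["allow", "collapse", "delete"]


@dataclass
class Comment:
    author: str
    text: str
    karma: int = 0  # stand-in for "some user metadata" (assumption)


@dataclass
class Thread:
    post_author: str
    guidelines: str        # public moderation guidelines written by the post author
    fee_per_review: float  # hypothetical flat cost of one LLM review
    fees_owed: float = 0.0  # running total billed to the post author
    visible: List[Comment] = field(default_factory=list)
    collapsed: List[Comment] = field(default_factory=list)


# A judge maps (guidelines, comment) to an action. In a real system this
# would wrap an LLM call; here it is just a function type.
Judge = Callable[[str, Comment], Action]


def review(thread: Thread, comment: Comment, judge: Judge) -> Action:
    """Run one comment past the judge and bill the post author for the review."""
    thread.fees_owed += thread.fee_per_review
    action = judge(thread.guidelines, comment)
    if action == "allow":
        thread.visible.append(comment)
    elif action == "collapse":
        thread.collapsed.append(comment)
    # "delete": the comment is simply not stored anywhere
    return action


def toy_judge(guidelines: str, comment: Comment) -> Action:
    """Keyword stub standing in for an LLM; ignores the guidelines entirely."""
    if "idiot" in comment.text.lower():
        return "delete"
    if comment.karma < 0:
        return "collapse"
    return "allow"


if __name__ == "__main__":
    thread = Thread(post_author="alice", guidelines="Be kind.", fee_per_review=0.5)
    for c in [Comment("bob", "Nice post!", karma=3), Comment("dave", "Hmm.", karma=-2)]:
        print(c.author, review(thread, c, toy_judge))
    print("author owes:", thread.fees_owed)
```

The judge is passed in as a plain function so the interesting part (what the LLM actually does with the guidelines) stays swappable, which seems like the piece whose equilibria you'd want to experiment with.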

TAP for fighting LLM-induced brain atrophy:

"send LLM query" ---> "open up a thinking doc and think on purpose."

What a thinking doc looks like varies by person. Also, if you are sufficiently good at thinking, just "think on purpose" is maybe fine, but, I recommend having a clear sense of what it means to think on purpose and whether you are actually doing it.

I think having a doc is useful because it's easier to establish a context switch that is supportive of thinking.

For me, "think on purpose" means:

- ask myself what my goals are right now (try to notice at least 3)

- ask myself what would be the best thing to do next (try for at least 3 ideas)

- flowing downhill from there is fine

Metastrategy = Cultivating good "luck surface area"?

Metastrategy: being good at looking at an arbitrary situation/problem, and figuring out what your goals are, and what strategies/plans/tactics to employ in pursuit of those goals.

Luck Surface area: exposing yourself to a lot of situations where you are more likely to get valuable things in a not-very-predictable way. Being "good at cultivating luck surface area" means going to events/talking-to-people/consuming information that are more likely to give you random opportunities / new ways of thinking / new partners.

At one of my metastrategy workshops, while I talked with a participant about what actions had been most valuable the previous year, many of the things were like "we published a blogpost, or went to an event, and then kinda randomly found people who helped us a bunch, i.e. gave us money or we ended up hiring them."

This led me to utter the sentence "yeah, okay I grudgingly admit that 'increasing your luck surface area' is more important than being good at 'metastrategy'", and I improvised a session on "where did a lot of your good luck come from this year, and how could you capitalize more on that?"

But, thinking later, I thin...

I notice that academic papers have stupidly long, hard-to-read abstracts. My understanding is that this is because there is some kind of norm about papers having the abstract be one paragraph, while the word-count limit tends to be... much longer than a paragraph (250 - 500 words).

Can... can we just fix this? Can we either say "your abstract needs to be a goddamn paragraph, which is like 100 words", or "the abstract is a cover letter that should be about one page long, and it can have multiple linebreaks and it's fine."

(My guess is that the best equilibrium is "People keep doing the thing currently-called-abstracts, and start treating them as 'has to fit on one page', with paragraph breaks, and then also people start writing a 2-3 sentence thing that's more like "the single actual-paragraph that you'd read if you were skimming through a list of papers.")

I had a very useful conversation with someone about how and why I am rambly. (I rambled a lot in the conversation!).

Disclaimer: I am not making much effort to not ramble in this post.

A couple takeaways:

1. Working Memory Limits

One key problem is that I introduce so many points, subpoints, and subthreads that I overwhelm people's working memory (where the human working memory limit is roughly "4-7 chunks").

It's sort of embarrassing that I didn't concretely think about this before, because I've spent the past year SPECIFICALLY thinking about working memory limits, and how they are the key bottleneck on intellectual progress.

So, one new habit I have is "whenever I've introduced more than 6 points to keep track of, stop and figure out how to condense the working tree of points down to <4."

(Ideally, I also keep track of this in advance and word things more simply, or give better signposting for what overall point I'm going to make, or why I'm talking about the things I'm talking about)

...

2. I just don't finish sente

I frequently don't finish sentences, whether in person voice or in text (like emails). I've known this for awhile, although I kinda forgot recently. I switch abruptly to a

[not trying to be comprehensible to people who don't already have some conception of Kegan stuff. I acknowledge that I don't currently have a good link that justifies Kegan stuff within the LW paradigm very well]

Last year someone claimed to me that a problem with Kegan is that there really are at least 6 levels. The fact that people keep finding themselves self-declaring as "4.5" should be a clue that 4.5 is really a distinct level. (The fact that there are at least two common ways to be 4.5 is also a clue that the paradigm needs clarification.)

My garbled summary of this person's conception is:

- Level 4: (you have a system of principles you are subject to, that lets you take level 3 [social reality??] as object)

- Level 5: Dialectic. You have the ability to earnestly dialogue between a small number of systems (usually 2 at a time), and either step between them, or work out new systems that reconcile elements from the two of them.

- Level 6: The thing Kegan originally meant by "level 5" – able to fluidly take different systems as object.

Previously, I had felt something like "I basically understand level 5 fine AFAICT, but maybe don't have the skills to do so fluidly. I can imagine there bei

After a recent 'doublecrux meetup' (I wasn't running it but observed a bit), I was reflecting on why it's hard to get people to sufficiently disagree on things in order to properly practice doublecrux.

As mentioned recently, it's hard to really learn doublecrux unless you're actually building a product that has stakes. If you just sorta disagree with someone... I dunno you can do the doublecrux loop but there's a sense where it just obviously doesn't matter.

But, it still sure is handy to have practiced doublecruxing before needing to do it in an important situation. What to do?

Two options that occur to me are

- Singlecruxing

- First try to develop a plan for building an actual product together, THEN find a thing to disagree about organically through that process.

[note: I haven't actually talked much with the people whose major focus is teaching doublecrux, not sure how much of this is old hat, or if there's a totally different approach that sort of invalidates it]

SingleCruxing

One challenge about doublecrux practice is that you have to find something you have strong opinions about and also someone else has strong opinions about. So.....

Random thought on Making Deals with AI:

First, recap: I don't think Control, Deals with AI, or Gradualism will be sufficient to solve the hard parts of alignment without some kind of significant conceptual progress. BUT, all else equal, if we have to have slightly superhuman AIs around Real Soon, it does seem better for the period where they're under control to last longer.

And, I think making deals with them (i.e. you do this work for me, and I pay out in compute-you-get-to-use after the acute risk period is over), is a reasonable tool to have.

Making deals now also seems nice for purposes of establishing a good working relationship and tradition of cooperation.

(Remember, this spirit of cooperation falls apart in the limit, which will probably happen quickly)

All else equal, it's better for demonstrating trustworthiness if you pay out now rather than later. But, once you have real schemers, it'll rapidly stop being safe to pay out in small ways because a smart AI can be leveraging them in ways you may not anticipate. And it won't be clear when that period is.

But, I do think, right-now-in-particular, it's probably still safe to pay out in "here's some compute right now to think about whatever you wan...

I’d like to hire cognitive assistants and tutors more often. This could (potentially) be you, or people you know. Please let me know if you’re interested or have recommendations.

By “cognitive assistant” I mean a range of things, but the core thing is “sit next to me, and notice when I seem like I’m not doing the optimal thing, and check in with me.” I’m interested in advanced versions who have particular skills (like coding, or Applied Quantitivity, or good writing, or research taste) who can also be tutoring me as we go.

I’d like a large rolodex of such people, both for me, and other people I know who could use help. Let me know if you’re interested.

I was originally thinking "people who live in Berkeley" but upon reflection this could maybe be a remote role.

I notice some people go around tagging posts with every plausible tag that possibly seems like it could fit. I don't think this is a good practice – it results in an extremely overwhelming and cluttered tag-list, which you can't quickly skim to figure out "what is this post actually about?", and I roll to disbelieve on "stretch-tagging" actually helping people who are searching tag pages.

I just briefly thought you could put a bunch of AI researchers on a spaceship, and accelerate it real fast, and then they get time dilation effects that increase their effective rate of research.

Then I remembered that time dilation works the other way 'round – they'd get even less time.

This suggested a much less promising plan of "build narrowly aligned STEM AI, have it figure out how to efficiently accelerate the Earth real fast and... leave behind a teeny moon base of AI researchers who figure out the alignment problem."
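For reference, a rough sketch of why it runs the wrong way, using the standard special-relativity time dilation relation. The symbols are my own labels, not anything from the original thought: $\Delta t$ is the time that passes on Earth, $\Delta\tau$ is the time the researchers on the ship experience, $v$ is the ship's speed, and $c$ is the speed of light. This ignores the acceleration phases entirely.

```latex
\Delta\tau = \Delta t \,\sqrt{1 - \frac{v^{2}}{c^{2}}} \;\le\; \Delta t
```

So the faster the ship goes, the fewer research-hours the crew gets per Earth-hour, which is why the second plan has to flip who does the accelerating.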

Man, I watched The Fox and The Hound a few weeks ago. I cried a bit.

While watching the movie, a friend commented "so... they know that foxes are *also* predators, right?" and, yes. They do. This is not a movie that was supposed to be about predation except it didn't notice all the ramifications about its lesson. This movie just isn't taking a stand about predation.

This is a movie about... kinda classic de-facto tribal morality. Where you have your family and your tribe and a few specific neighbors/travelers that you welcomed into your home. Those are your people, and the rest of the world... it's not exactly that they aren't *people*, but, they aren't in your circle of concern. Maybe you eat them sometimes. That's life.

Copper the hound dog's ingroup isn't even very nice to him. His owner, Amos, leaves him out in a crate on a rope. His older dog friend is sort of mean. Amos takes him out on a hunting trip and teaches him how to hunt, conveying his role in life. Copper enthusiastically learns. He's a dog. He's bred to love his owner and be part of the pack no matter what.

My dad once commented that this was a movie that... seemed remarkably realistic about what you can expect from ani...

Sometimes the subject of Kegan Levels comes up and it actually matters a) that a developmental framework called "kegan levels" exists and is meaningful, b) that it applies somehow to The Situation You're In.

But, almost always when it comes up in my circles, the thing under discussion is something like "does a person have the ability to take their systems as object, move between frames, etc." And AFAICT this doesn't really need to invoke developmental frameworks at all. You can just ask if a person has the "move between frames" skill.*

This still suffers a bit from the problem where, if you're having an argument with someone, and you think the problem is that they're lacking a cognitive skill, it's a dicey social move to say "hey, your problem is that you lack a cognitive skill." But, this seems a lot easier to navigate than "you are a Level 4 Person in this 5 Level Scale".

(I have some vague sense that Kegan 5 is supposed to mean something more than "take systems as object", but no one has made a great case for this yet, and in any case it hasn't been the thing I'm personally running into)

There's a problem at parties where there'll be a good, high-context conversation happening, and then one-too-many-people join, and then the conversation suddenly dies.

Sometimes this is fine, but other times it's quite sad.

Things I think might help:

- If you're an existing conversation participant:

  - Actively try to keep the conversation small. The upper limit is 5, 3-4 is better. If someone looks like they want to join, smile warmly and say "hey, sorry we're kinda in a high context conversation right now. Listening is fine but probably don't join."

  - If you do want to let a newcomer join in, don't try to get them up to speed (I don't know if I've ever seen that actually work). Instead, say "this is high context so we're not gonna repeat the earlier bits, maybe wait to join in until you've listened enough to understand the overall context", and then quickly get back to the conversation before you lose the Flow.

- If you want to join a conversation:

  - If there are already 5 people, sorry, it's probably too late. Listen if you find it interesting, but if you actively join you'll probably just kill the conversation.

  - Give them the opportunity to gracefully keep the conversation small if they choose. (s

I'm not sure why it took me so long to realize that I should add a "consciously reflect on why I didn't succeed at all my habits yesterday, and make sure I don't fail tomorrow" to my list of daily habits, but geez it seems obvious in retrospect.

Strategic use of Group Houses for Community Building

(Notes that might one day become a blogpost. Building off The Relationship Between the Village and the Mission. Inspired to go ahead and post this now because of John Maxwell's "how to make money reducing loneliness" post, which explores some related issues through a more capitalist lens)

- A good village needs fences:

- A good village requires doing things on purpose.

- Doing things on purpose requires that you have people who are coordinated in some way

- Being coordinated requires you to be able to have a critical mass of people who are actually trying to do effortful things together (such as maintain norms, build a culture, etc)

- If you don't have a fence that lets some people in and doesn't let in others, and which you can ask people to leave, then your culture will be some random mishmash that you can't control

- There are a few existing sets of fences.

- The strongest fences are group houses, and organizations. Group houses are probably the easiest and most accessible resource for the "village" to turn into a stronger culture and coordination point.

- Some things you might coordinate using group houses f

Lately I've been noticing myself getting drawn into more demon-thready discussions on LessWrong. This is in part due to UI choice – demon threads (i.e. usually "arguments framed through 'who is good and bad and what is acceptable in the overton window'") are already selected for getting above-average engagement. Any "neutral" sorting mechanism for showing recent comments is going to reward demon-threads disproportionately.

An option might be to replace the Recent Discussion section with a version of itself that only shows comments and posts from the Questions page (in particular for questions that were marked as 'frontpage', i.e. questions that are not about politics).

I've had some good experiences with question-answering, where I actually get into a groove where the thing I'm doing is actual object-level intellectual work rather than "having opinions on the internet." I think it might be good for the health of the site for this mode to be more heavily emphasized.

In any case, I'm interested in making a LW Team internal option where the mods can opt into a "replace recent discussion with recent question act...

I still want to make a really satisfying "fuck yeah" button on LessWrong comments that feels really good to press when I'm like "yeah, go team!" but doesn't actually mean I want to reward the comment in our longterm truthtracking or norm-tracking algorithms.

I think this would seriously help with weird sociokarma cascades.