This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and responds to common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

Recent Discussion

Austin said they have $1.5 million in the bank, vs $1.2 million mana issued. The only outflows right now are to the charity programme, which even with a lot of outflows is only at $200k. They also recently raised at a $40 million valuation. I am confused by the idea that they are running out of money. They have a large user base that wants to bet and will do so at larger amounts if given the opportunity. I'm not so convinced that there is some short timeline here.

But if there is, then say so: "we know that we often talked about mana being eventually worth $100 mana per dollar, but ...

But I do think, intuitively, GPT-5-MAIA might e.g. make 'catching AIs red-handed' using methods like in this comment significantly easier/cheaper/more scalable.

Notably, the mainline approach for catching doesn't involve any internals usage at all, let alone labeling a bunch of things.

I agree that this model might help in performing various input/output experiments to determine what made a model do a given suspicious action.

TL;DR

Tacit knowledge is extremely valuable. Unfortunately, developing tacit knowledge is usually bottlenecked by apprentice-master relationships. Tacit Knowledge Videos could widen this bottleneck. This post is a Schelling point for aggregating these videos—aiming to be The Best Textbooks on Every Subject for Tacit Knowledge Videos. Scroll down to the list if that's what you're here for. Post videos that highlight tacit knowledge in the comments and I’ll add them to the post. Experts in the videos include Stephen Wolfram, Holden Karnofsky, Andy Matuschak, Jonathan Blow, Tyler Cowen, George Hotz, and others.

What are Tacit Knowledge Videos?

Samo Burja claims YouTube has opened the gates for a revolution in tacit knowledge transfer. Burja defines tacit knowledge as follows:

...Tacit knowledge is knowledge that can’t properly be transmitted via verbal or written instruction, like the ability to create

"Mise En Place", "[i]nterviews and kitchen walkthroughs":

Qualifies as tacit knowledge, in that people are showing what they're doing that you seldom have a chance to watch first-hand. Reasonably entertaining, seems like you could learn a bit here.

Caveat: most of the dishes are really high-class/meat/fish etc. that you aren't very likely to ever cook yourself, and knowledge seems difficult to transfer.

- My current guess is that max good and max bad seem relatively balanced. (Perhaps max bad is 5x more bad/flop than max good in expectation.)

- There are two different (substantial) sources of value/disvalue: interactions with other civilizations (mostly acausal, maybe also aliens) and what the AI itself terminally values

- On interactions with other civilizations, I'm relatively optimistic that commitment races and threats don't destroy as much value as acausal trade generates on some general view like "actually going through with threats is a waste of resourc

Warning: This post might be depressing to read for everyone except trans women. Gender identity and suicide are discussed. This is all highly speculative. I know near-zero about biology, chemistry, or physiology. I do not recommend anyone take hormones to try to increase their intelligence; mood & identity are more important.

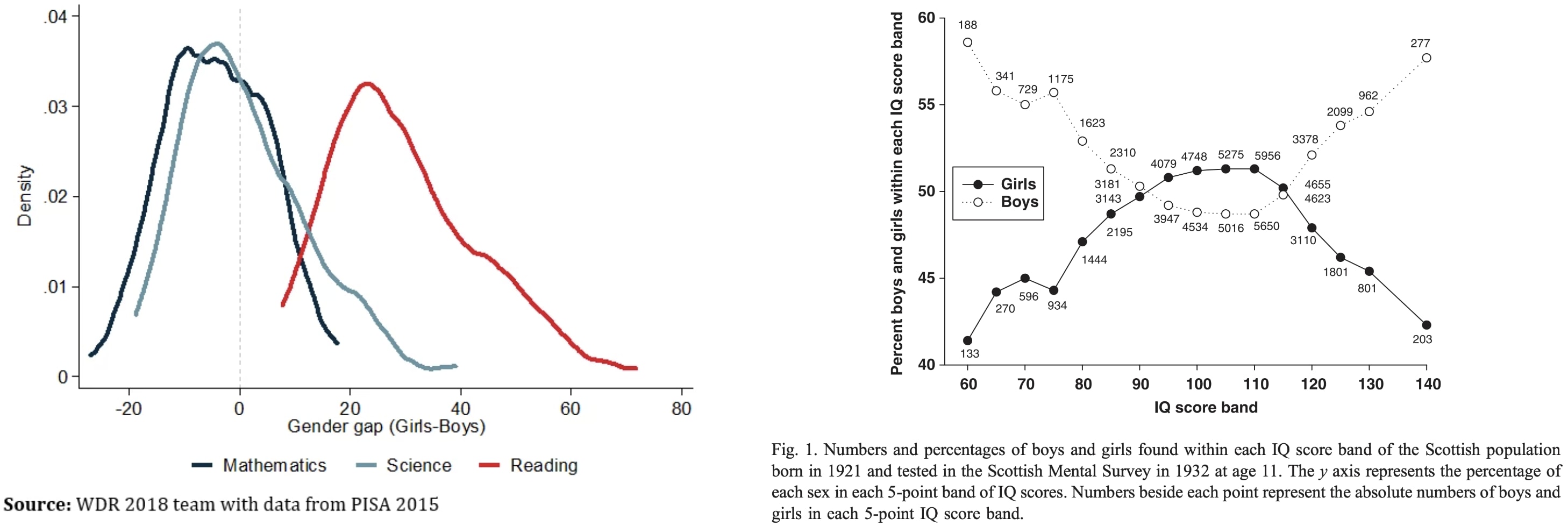

Why are trans women so intellectually successful? They seem to be overrepresented 5-100x in, e.g., cybersecurity twitter, mathy AI alignment, non-scam crypto twitter, math PhD programs, etc.

To explain this, let's first ask: Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains (implying more area?). One might expect males to have mean IQ far above females then, but instead the means and medians are similar:

My theory...

Yes, my point is that the low T did it before the transition.

It's a ‘superrational’ extension of the proven optimality of cooperation in game theory

+ Taking into account asymmetries of power

// Still AI risk is very real

Short version of an already skimmed 12min post

29min version here

For rational agents (long-term) at all scales (human, AGI, ASI…)

In real contexts, with open environments (world, universe), there is always a risk of meeting someone/something stronger than you, and overall weaker agents may be specialized in your flaws/blind spots.

To protect yourself, you can choose the maximally rational and cooperative alliance:

Because any agent is subject to the same pressure/threat of (actual or potential) stronger agents/alliances/systems, one can take out insurance that more powerful superrational agents will behave well by behaving well with weaker agents. This is the basic rule allowing scale-free cooperation.

If you integrated this super-cooperative...

I can't be certain of the solidity of this uncertainty, and think we still have to be careful, but overall, the most parsimonious prediction to me seems to be super-coordination.

Compared to the risk of facing a revengeful super-cooperative alliance, is the price of maintaining humans in a small blooming "island" really that high?

Many other-than-human atoms are lions' prey.

And a doubtful AI may not optimize fully for super-cooperation, simply alleviating the price to pay in the counterfactuals where they encounter a super-cooperative cluster (resulti...

This is a linkpost for our paper Explaining grokking through circuit efficiency, which provides a general theory explaining when and why grokking (aka delayed generalisation) occurs, and makes several interesting and novel predictions which we experimentally confirm (introduction copied below). You might also enjoy our explainer on X/Twitter.

Abstract

One of the most surprising puzzles in neural network generalisation is grokking: a network with perfect training accuracy but poor generalisation will, upon further training, transition to perfect generalisation. We propose that grokking occurs when the task admits a generalising solution and a memorising solution, where the generalising solution is slower to learn but more efficient, producing larger logits with the same parameter norm. We hypothesise that memorising circuits become more inefficient with larger training datasets while generalising circuits do...

Sounds plausible, but why does this differentially impact the generalizing algorithm over the memorizing algorithm?

Perhaps under normal circumstances both are learned so fast that you just don't notice that one is slower than the other, and this slows both of them down enough that you can see the difference?

I refuse to join any club that would have me as a member.

— Groucho Marx

Alice and Carol are walking on the sidewalk in a large city, and end up together for a while.

"Hi, I'm Alice! What's your name?"

Carol thinks:

If Alice is trying to meet people this way, that means she doesn't have a much better option for meeting people, which reduces my estimate of the value of knowing Alice. That makes me skeptical of this whole interaction, which reduces the value of approaching me like this, and Alice should know this, which further reduces my estimate of Alice's other social options, which makes me even less interested in meeting Alice like this.

Carol might not think all of that consciously, but that's how human social reasoning tends to...

Hence the advice to lost children to not accept random strangers soliciting them spontaneously, but if no authority figure is available, to pick a random adult and ask them for help.

My credence: 33% confidence in the claim that the growth in the number of GPUs used for training SOTA AI will slow down significantly directly after GPT-5. It is not higher because (1) decentralized training is possible, (2) GPT-5 may be able to increase hardware efficiency significantly, (3) GPT-5 may be smaller than assumed in this post, and (4) race dynamics.

TLDR: Because of a bottleneck in energy access to data centers and the need to build OOM larger data centers.

The reasoning behind the claim:

- Current large data centers consume around 100 MW of power, while a single nuclear power plant generates 1GW. The largest seems to consume 150 MW.

- An A100 GPU uses 250W, and around 1kW with overhead. A B200 GPU uses ~1kW without overhead. Thus a 1MW