A book review examining Elinor Ostrom's "Governing the Commons", in light of Eliezer Yudkowsky's "Inadequate Equilibria." Are successful local institutions for governing common pool resources possible without government intervention? Under what circumstances can such institutions emerge spontaneously to solve coordination problems?

[memetic status: stating directly despite it being a clear consequence of core AI risk knowledge because many people have "but nature will survive us" antibodies to other classes of doom and misapply them here.]

Unfortunately, no.[1]

Technically, “Nature”, meaning the fundamental physical laws, will continue. However, people usually mean forests, oceans, fungi, bacteria, and generally biological life when they say “nature”, and those would not have much chance competing against a misaligned superintelligence for resources like sunlight and atoms, which are useful to both biological and artificial systems.

There’s a thought that comforts many people when they imagine humanity going extinct due to a nuclear catastrophe or runaway global warming: Once the mushroom clouds or CO2 levels have settled, nature will reclaim the cities. Maybe mankind in our hubris will have wounded Mother Earth and paid the price ourselves, but...

By my models of anthropics, I think this goes through.

As I note in the third section, I will be attending LessOnline at month’s end at Lighthaven in Berkeley. If that is your kind of event, then consider going, and buy your ticket today before prices go up.

This month’s edition was an opportunity to finish off some things that got left out of previous editions or where events have left many of the issues behind, including the question of TikTok.

Oh No

All of this has happened before. And all of this shall happen again.

...Alex Tabarrok: I regret to inform you that the CDC is at it again.

Marc Johnson: We developed an assay for testing for H5N1 from wastewater over a year ago. (I wasn’t expecting it in milk, but I figured it was going to poke up somewhere.)

However,

POSIWID. The metric being optimized is not "having the most money". It is debatable whether it should be; as one of the "poor Europeans", my personal opinion is that we're doing just fine.

I stayed up too late collecting way-past-deadline papers and writing report cards. When I woke up at 6, this anxious email from one of my g11 Computer Science students was already in my Inbox.

Student: Hello Mr. Carle, I hope you've slept well; I haven't.

I've been seeing a lot of new media regarding how developed AI has become in software programming, most relevantly videos about NVIDIA's new artificial intelligence software developer, Devin.

Things like these are almost disheartening for me to see as I try (and struggle) to get better at coding and developing software. It feels like I'll never use the information that I learn in your class outside of high school because I can just ask an AI to write complex programs, and it will do it...

"[I]s a traditional education sequence the best way to prepare myself for [...?]"

This is hard to answer because in some ways the foundation of a broad education in all subjects is absolutely necessary. And some of them (math, for example) are a lot harder to patch in later if you are bad at them at, say, 28.

However, the other side of this is that once some foundation is laid and someone has some breadth and depth, the answer to the above question, with regard to nearly anything, is often (perhaps usually) "Absolutely Not."

So, for a 17-year-old, yes. ...

Thanks to Taylor Smith for doing some copy-editing on this.

In this article, I tell some anecdotes and present some evidence in the form of research artifacts about how easy it is for me to work hard when I have collaborators. If you are in a hurry I recommend skipping to the research artifact section.

Bleeding Feet and Dedication

During AI Safety Camp (AISC) 2024, I was working with somebody on how to use binary search to approximate a hull that would contain a set of points when I knocked a glass off my table. It splintered into a thousand pieces all over my floor.

A normal person might stop and remove all the glass splinters. I just spent 10 seconds picking up some of the largest pieces and then decided...

These thoughts remind me of something Scott Alexander once wrote. Sometimes, when he hears someone say true but low-status things, his automatic thought is that the person must be stupid to say something like that. He then has to consciously remind himself that what was said is actually true.

For anyone who's curious, this is what Scott said, in reference to him getting older – I remember it because I noticed the same in myself as I aged too:

...I look back on myself now vs. ten years ago and notice I’ve become more cynical, more mellow, and more pro

This is a followup to the D&D.Sci post I made last Friday; if you haven’t already read it, you should do so now before spoiling yourself.

Below is an explanation of the rules used to generate the dataset (my full generation code is available here, in case you’re curious about details I omitted), and their strategic implications.

Ruleset

Impossibility

Impossibility is entirely decided by who a given architect apprenticed under. Fictional impossiblists Stamatin and Johnson invariably produce impossibility-producing architects; real-world impossiblists Penrose, Escher and Geisel always produce architects whose works just kind of look weird; the self-taught break Nature's laws 43% of the time.

Cost

Cost is entirely decided by materials. In particular, every structure created using Nightmares is more expensive than every structure without them.
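The two rules can be written down as a short hypothetical sketch. This is not the author's generation code, which is linked from the post but not reproduced here. The mentor names and the 43% rate come from the text. Representing a self-taught architect as `None` and summarizing every non-Nightmare material as a single made-up `other_cost` number are assumptions of this sketch. The Nightmares rule behaves like a lexicographic sort key, because it dominates every other cost consideration.

```python
import random

# Hypothetical encoding of the stated rules (not the author's code).
ALWAYS_IMPOSSIBLE = {"Stamatin", "Johnson"}         # fictional impossiblists
NEVER_IMPOSSIBLE = {"Penrose", "Escher", "Geisel"}  # real-world: works only look weird
SELF_TAUGHT_RATE = 0.43                             # assumption: self-taught is mentor=None


def is_impossible(mentor, rng):
    """Return whether a structure by an architect trained under `mentor` breaks Nature's laws."""
    if mentor in ALWAYS_IMPOSSIBLE:
        return True
    if mentor in NEVER_IMPOSSIBLE:
        return False
    if mentor is None:  # self-taught: random, 43% of the time
        return rng.random() < SELF_TAUGHT_RATE
    raise ValueError(f"no rule stated for mentor {mentor!r}")


def cost_key(uses_nightmares, other_cost):
    """Sort key: any structure using Nightmares costs more than any structure without them."""
    # Tuples compare element by element, so False < True decides first
    # and other_cost only breaks ties within each group.
    return (uses_nightmares, other_cost)


rng = random.Random(0)
draws = 100_000
rate = sum(is_impossible(None, rng) for _ in range(draws)) / draws
print(f"self-taught impossibility rate ~ {rate:.3f}")

# Made-up (uses_nightmares, other_cost) pairs, cheapest first after sorting.
structures = [(True, 1.0), (False, 99.0), (False, 5.0)]
print(sorted(structures, key=lambda s: cost_key(*s)))
# prints [(False, 5.0), (False, 99.0), (True, 1.0)]
```

Even a Nightmares structure with a tiny `other_cost` sorts after every structure without Nightmares. That ordering is all the Cost rule asserts.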

Strategy

The five architects who would...

The halting problem is the problem of taking as input a Turing machine M, returning true if it halts, false if it doesn't halt. This is known to be uncomputable. The consistent guessing problem (named by Scott Aaronson) is the problem of taking as input a Turing machine M (which either returns a Boolean or never halts), and returning true or false; if M ever returns true, the oracle's answer must be true, and likewise for false. This is also known to be uncomputable.
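The reason no computable procedure solves the consistent guessing problem is the standard diagonal argument, sketched minimally here under simplifying assumptions. A "machine" is modeled as a zero-argument Python callable that returns a bool or runs forever. A "guesser" is modeled as any total Python function from machines to bools. The fact that `d` can refer to itself through its closure stands in for Kleene's recursion theorem. The names `diagonal_machine` and `is_consistent_on` are hypothetical.

```python
from typing import Callable

# Simplified model: a machine is a zero-argument callable returning a bool
# (or never halting); a guesser is a total function from machines to bools.
Machine = Callable[[], bool]
Guesser = Callable[[Machine], bool]


def diagonal_machine(guess: Guesser) -> Machine:
    """Build a machine that asks `guess` about itself and returns the opposite."""
    def d() -> bool:
        # `guess` always halts, so d always halts and returns a bool.
        return not guess(d)
    return d


def is_consistent_on(guess: Guesser, machine: Machine) -> bool:
    """Consistency condition for a machine that halts with a bool:
    the guess must equal what the machine actually returns."""
    return guess(machine) == machine()


for name, guess in (("always True", lambda m: True), ("always False", lambda m: False)):
    d = diagonal_machine(guess)
    print(name, "guesses", guess(d), "but the machine returns", d())
```

Whatever a total, computable guesser answers about its own diagonal machine, that machine halts with the opposite answer. So every such guesser violates the consistency requirement on at least one input.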

Scott Aaronson inquires as to whether the consistent guessing problem is strictly easier than the halting problem. This would mean there is no Turing machine that, when given access to a consistent guessing oracle, solves the halting problem, no matter which consistent guessing oracle...

Note that Andy Drucker is not claiming to have discovered this; the paper you link is expository.

Since Drucker doesn't say this in the link, I'll mention that the objects you're discussing are conventionally known as PA degrees. The PA here stands for Peano arithmetic; a Turing degree solves the consistent guessing problem iff it computes some model of PA. This name may be a little misleading, in that PA isn't really special here. A Turing degree computes some model of PA iff it computes some model of ZFC, or more generally any theory capable o...

It's also notable that the topic of OpenAI nondisparagement agreements was brought to Holden Karnofsky's attention in 2022, and he replied with "I don’t know whether OpenAI uses nondisparagement agreements; I haven’t signed one." (He could have asked his contacts inside OAI about it, or asked the EA board member to investigate. Or even set himself up earlier as someone OpenAI employees could whistleblow to on such issues.)

If the point was to buy a ticket to play the inside game, then it was played terribly and negative credit should be assigned on that bas...

The forum has been very much focused on AI safety for some time now, so I thought I'd post something different for a change: privilege.

Here I define Privilege as an advantage over others that is invisible to the beholder. [EDIT: thanks to JenniferRM for pointing out that "beholder" is a wrong word.] This may not be the only definition, or the central definition, or how you see it, but that's the definition I use for the purposes of this post. I also do not mean it in the culture-war sense as a way to undercut others, as in "check your privilege". My point is that we all have some privileges [we are not aware of], and also that nearly every one has a flip side.

In some way this...

Excellent point about the compounding, which is often multiplicative, not additive. Incidentally, multiplicative advantages result in a heavy-tailed, roughly log-normal distribution of income/net worth (power-law tails typically need additional mechanisms), whereas additive advantages/disadvantages result in a normal distribution. But that is a separate topic, well explored in the literature.
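The additive-versus-multiplicative contrast can be seen in a small simulation. In this minimal sketch, each person gets the same kind of random advantages and disadvantages. These are applied once by adding them up and once by compounding them. The population size, number of factors, and shock size are arbitrary assumptions. Strictly speaking, the product of many small independent factors is approximately log-normal, because its logarithm is a sum and the central limit theorem applies. A true power-law tail usually needs extra assumptions. The mean/median ratio is used here as a crude skewness signal.

```python
import math
import random
import statistics


def simulate(n_people=10_000, n_factors=50, shock=0.2, seed=0):
    """Apply identical random shocks additively and multiplicatively."""
    rng = random.Random(seed)
    additive, multiplicative = [], []
    for _ in range(n_people):
        shocks = [rng.uniform(-shock, shock) for _ in range(n_factors)]
        additive.append(1.0 + sum(shocks))                        # advantages add up
        multiplicative.append(math.prod(1.0 + s for s in shocks))  # advantages compound
    return additive, multiplicative


additive, multiplicative = simulate()
for name, xs in (("additive", additive), ("multiplicative", multiplicative)):
    ratio = statistics.fmean(xs) / statistics.median(xs)
    print(f"{name}: mean/median = {ratio:.2f}")
```

With these parameters, the additive outcomes are symmetric, so the mean is close to the median. The compounded outcomes are right-skewed, with a mean noticeably above the median, as a few people accumulate large multiples.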

TLDR: Things seem bad. But chart-wielding optimists keep telling us that things are better than they’ve ever been. What gives? Hypothesis: the point of conversation is to solve problems, so public discourse will focus on the problems—making us all think that things are worse than they are. A computational model predicts both this dynamic, and that social media makes it worse.
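The post's own computational model is not reproduced in this excerpt. As a purely hypothetical illustration of the selection effect the TLDR describes, here is a toy version. Issues have a true quality drawn from a standard normal distribution, where negative means a problem. Conversation picks issues with weight `exp(-selectivity * x)`, so worse issues are more likely to be raised. For this particular choice of weights, the issues discussed average roughly `-selectivity` even though the true average is about zero. Modeling social media as a higher `selectivity` is an assumption of this sketch, not a claim from the post.

```python
import math
import random
import statistics


def perceived_vs_actual(selectivity, n_issues=5000, n_mentions=5000, seed=0):
    """Toy model: true average quality of issues vs. average quality of the
    issues that come up in conversation (hypothetical, not the post's model)."""
    rng = random.Random(seed)
    # True state of each issue: negative values are problems.
    issues = [rng.gauss(0.0, 1.0) for _ in range(n_issues)]
    # Problem-solving talk: worse issues get exponentially more weight.
    weights = [math.exp(-selectivity * x) for x in issues]
    mentioned = rng.choices(issues, weights=weights, k=n_mentions)
    return statistics.fmean(issues), statistics.fmean(mentioned)


for s in (0.0, 1.0, 2.0):
    actual, perceived = perceived_vs_actual(s)
    print(f"selectivity={s}: actual={actual:+.2f}, perceived={perceived:+.2f}")
```

With `selectivity=0`, conversation is an unbiased sample and perception matches reality. As selectivity rises, the world as discussed looks steadily worse than the world as it is.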

Are things bad? Most people think so.

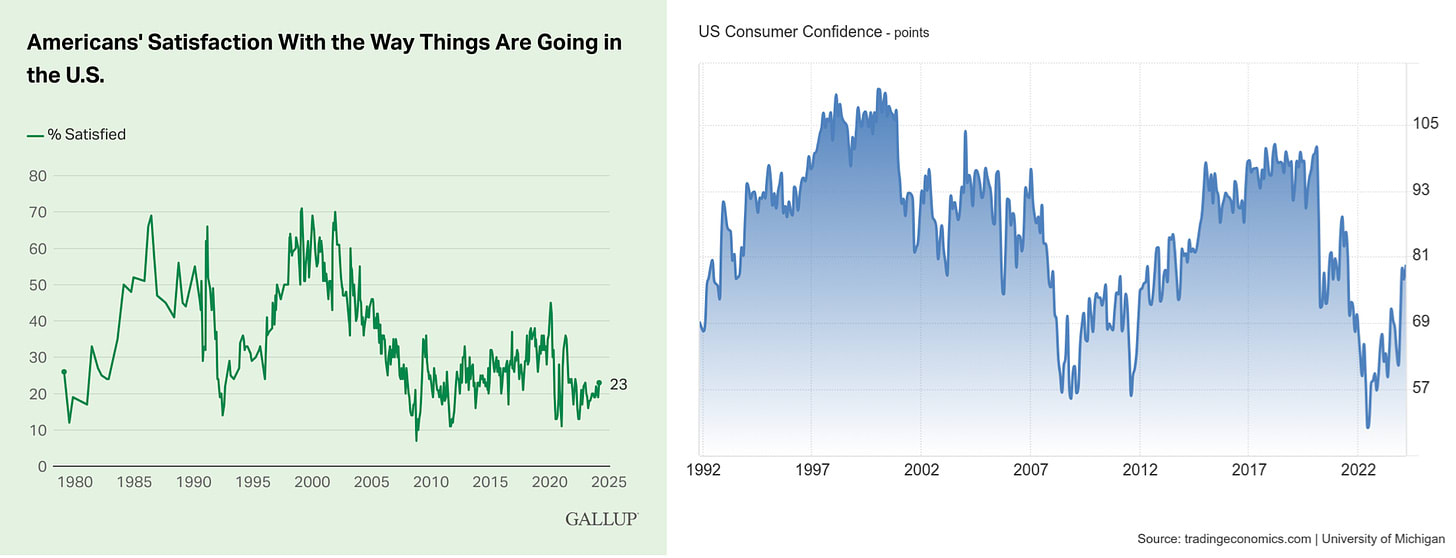

Over the last 25 years, satisfaction with how things are going in the US has tanked, while economic sentiment is as bad as it’s been since the Great Recession:

Meanwhile, majorities or pluralities in the US are pessimistic about the state of social norms, education, racial disparities, etc. And when asked about the wider world, even in the heady days of 2015, only...

Agreed that people have lots of goals that don't fit in this model. It's definitely a simplified model. But I'd argue that ONE of (most) people's goals is to solve problems, and I do think that, broadly speaking, this is an important function of conversation (evolutionarily and currently). So I still think this model gets at an interesting dynamic.