Popular Comments

Recent Discussion

I think you should write it. It sounds funny and a bunch of people have been calling out what they see as bad arguements that alginment is hard lately e.g. TurnTrout, QuintinPope, ZackMDavis, and karma wise they did fairly well.

I didn’t use to be, but now I’m part of the 2% of U.S. households without a television. With its near ubiquity, why reject this technology?

The Beginning of my Disillusionment

Neil Postman’s book Amusing Ourselves to Death radically changed my perspective on television and its place in our culture. Here’s one illuminating passage:

...We are no longer fascinated or perplexed by [TV’s] machinery. We do not tell stories of its wonders. We do not confine our TV sets to special rooms. We do not doubt the reality of what we see on TV [and] are largely unaware of the special angle of vision it affords. Even the question of how television affects us has receded into the background. The question itself may strike some of us as strange, as if one were

To make an analogy to diet, you essentially replaced a sugar fix from eating Snickers bars with eating strawberries. Gradation matters!

I had a similar slide with my technologies, as I explained in the post. I eventually landed on reading books. But even that became a form of intellectual procrastination as I wrote in my latest LW post.

Pretending not to see when a rule you've set is being violated can be optimal policy in parenting sometimes (and I bet it generalizes).

Example: suppose you have a toddler and a "rule" that food only stays in the kitchen. The motivation is that each time food is brough into the living room there is a small chance of an accident resulting in a permanent stain. There's cost to enforcing the rule as the toddler will put up a fight. Suppose that one night you feel really tired and the cost feels particularly high. If you enforce the rule, it will be much more p...

If you’ve ever been to Amsterdam, you’ve probably visited, or at least heard about the famous cookie store that sells only one cookie. I mean, not a piece, but a single flavor.

I’m talking about Van Stapele Koekmakerij of course—where you can get one of the world's most delicious chocolate chip cookies. If not arriving at opening hour, it’s likely to find a long queue extending from the store’s doorstep through the street it resides. When I visited the city a few years ago, I watched the sensation myself: a nervous crowd awaited as the rumor of ‘out of stock’ cookies spreaded across the line.

The store, despite becoming a landmark for tourists, stands for an idea that seems to...

Produced as part of the MATS Winter 2024 program, under the mentorship of Alex Turner (TurnTrout).

TL,DR: I introduce a method for eliciting latent behaviors in language models by learning unsupervised perturbations of an early layer of an LLM. These perturbations are trained to maximize changes in downstream activations. The method discovers diverse and meaningful behaviors with just one prompt, including perturbations overriding safety training, eliciting backdoored behaviors and uncovering latent capabilities.

Summary In the simplest case, the unsupervised perturbations I learn are given by unsupervised steering vectors - vectors added to the residual stream as a bias term in the MLP outputs of a given layer. I also report preliminary results on unsupervised steering adapters - these are LoRA adapters of the MLP output weights of a given...

I think it's easier to see the significance if you imagine the neural networks as a human-designed system. In e.g. a computer program, there's a clear distinction between the code that actually runs and the code that hypothetically could run if you intervened on the state, and in order to explain the output of the program, you only need to concern yourself with the former, rather than also needing to consider the latter.

For neural networks, I sort of assume there's a similar thing going on, except it's quite hard to define it precisely. In technical terms,...

Sure, I just prefer a native bookmarking function.

There are two main areas of catastrophic or existential risk which have recently received significant attention; biorisk, from natural sources, biological accidents, and biological weapons, and artificial intelligence, from detrimental societal impacts of systems, incautious or intentional misuse of highly capable systems, and direct risks from agentic AGI/ASI. These have been compared extensively in research, and have even directly inspired policies. Comparisons are often useful, but in this case, I think the disanalogies are much more compelling than the analogies. Below, I lay these out piecewise, attempting to keep the pairs of paragraphs describing first biorisk, then AI risk, parallel to each other.

While I think the disanalogies are compelling, comparison can still be useful as an analytic tool - while keeping in mind that the ability to directly...

This book argues (convincingly IMO) that it’s impossible to communicate, or even think, anything whatsoever, without the use of analogies.

- If you say “AI runs on computer chips”, then the listener will parse those words by conjuring up their previous distilled experience of things-that-run-on-computer-chips, and that previous experience will be helpful in some ways and misleading in other ways.

- If you say “AI is a system that…” then the listener will parse those words by conjuring up their previous distilled experience of so-called “systems”, and that previo

Happy May the 4th from Convergence Analysis! Cross-posted on the EA Forum.

As part of Convergence Analysis’s scenario research, we’ve been looking into how AI organisations, experts, and forecasters make predictions about the future of AI. In February 2023, the AI research institute Epoch published a report in which its authors use neural scaling laws to make quantitative predictions about when AI will reach human-level performance and become transformative. The report has a corresponding blog post, an interactive model, and a Python notebook.

We found this approach really interesting, but also hard to understand intuitively. While trying to follow how the authors derive a forecast from their assumptions, we wrote a breakdown that may be useful to others thinking about AI timelines and forecasting.

In what follows, we set out our interpretation of...

The point of the paragraph that the above quote was taken from is, I think, better summarised in its first sentence:

although Epoch takes an approach to forecasting TAI that is quite different to others in this space, its resulting probability distribution is not vastly dissimilar to those produced by other influential models

It is fair to question whether these two forecasts are “not vastly dissimilar” to one another. In some senses, two decades is a big difference between medians: for example, we suspect that a future where TAI arrives in the 2030s lo...

Introduction

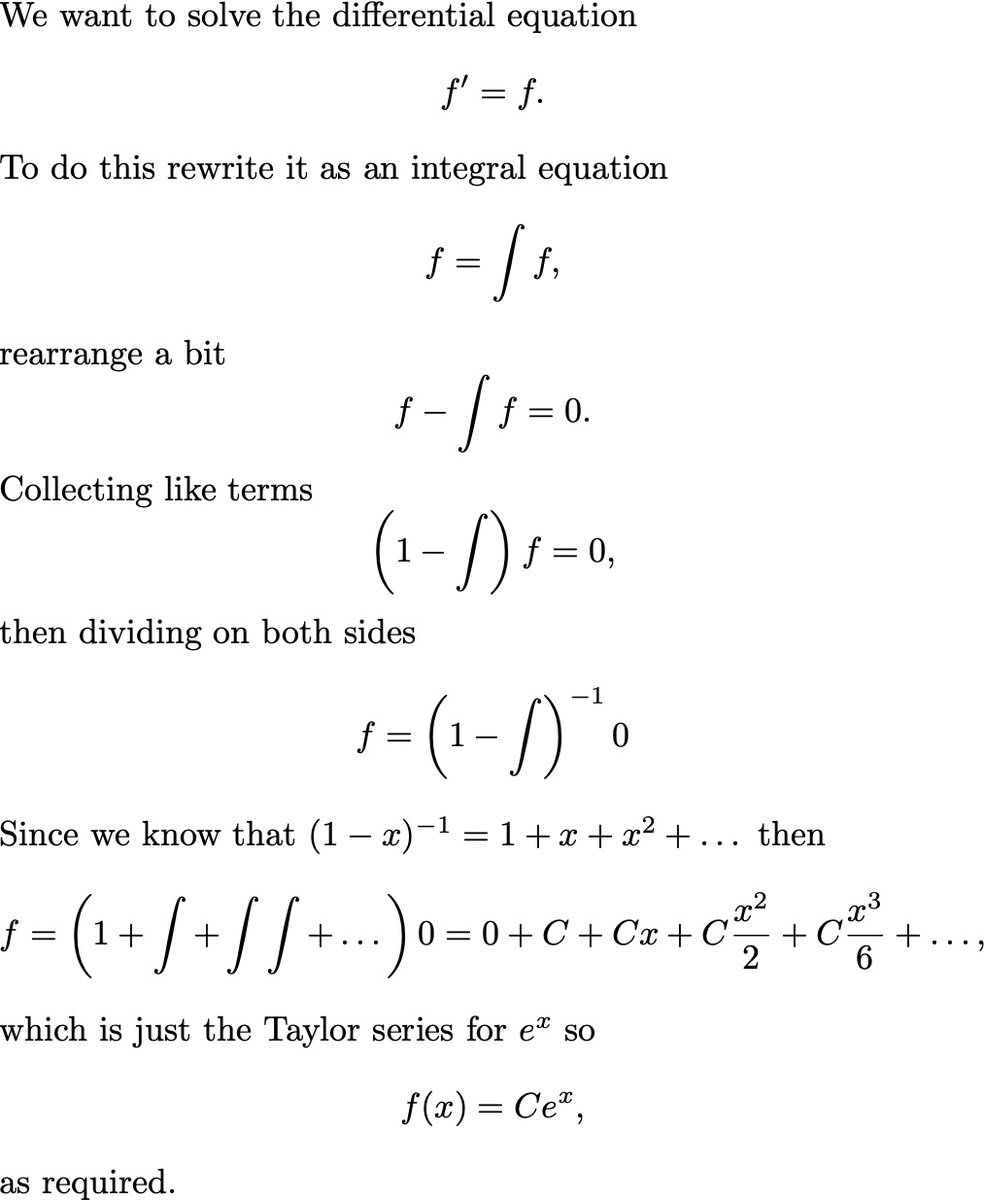

A recent popular tweet did a "math magic trick", and I want to explain why it works and use that as an excuse to talk about cool math (functional analysis). The tweet in question:

This is a cute magic trick, and like any good trick they nonchalantly gloss over the most important step. Did you spot it? Did you notice your confusion?

Here's the key question: Why did they switch from a differential equation to an integral equation? If you can use when , why not use it when ?

Well, lets try it, writing for the derivative:

So now you may be disappointed, but relieved: yes, this version fails, but at least it fails-safe, giving you the trivial solution, right?

But no, actually can fail catastrophically, which we can see if we try a nonhomogeneous equation...

Very nice! Notice that if you write as , and play around with binomial coefficients a bit, we can rewrite this as:

which holds for as well, in which case it becomes the derivative product rule. This also matches the formal power series expansion of , which one can motivate directly

(By the way, how do you spoiler tag?)