This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."


Recent Discussion

Crosspost from my blog.

If you spend a lot of time in the blogosphere, you’ll find a great many people expressing contrarian views. If you hang out in the circles that I do, you’ll probably have heard Yudkowsky say that dieting doesn’t really work, Guzey say that sleep is overrated, Hanson argue that medicine doesn’t improve health, various people argue for the lab leak, others argue for hereditarianism, Caplan argue that mental illness is mostly just aberrant preferences and that education doesn’t work, and various other people express contrarian views. Often, very smart people, like Robin Hanson, will write long posts defending these views, other people will have criticisms, and it will all be such a tangled mess that you don’t really know what to think about them.

For...

You might also be interested in Scott's 2010 post warning of the 'next-level trap' so to speak: Intellectual Hipsters and Meta-Contrarianism

...A person who is somewhat upper-class will conspicuously signal eir wealth by buying difficult-to-obtain goods. A person who is very upper-class will conspicuously signal that ey feels no need to conspicuously signal eir wealth, by deliberately not buying difficult-to-obtain goods.

A person who is somewhat intelligent will conspicuously signal eir intelligence by holding difficult-to-understand opinions. A person w

The Opportunity Cost of Delayed Technological Development

By Nick Bostrom

Abstract: With advanced technology, a very large number of people living happy lives could be sustained in the accessible region of the universe. Every year in which colonization of the universe does not take place represents an opportunity cost: lives worth living go unrealized. On plausible estimates, this cost is extremely high. But the lesson for utilitarians is not that we should maximize the pace of technological development, but rather its safety. In other words, we should maximize the probability that colonization takes place.

The Rate of Loss of Potential Lives

Right now, suns are illuminating and heating empty rooms, and black holes are absorbing a share of the cosmos's unused energy. Every minute,...

I haven't seen this discussed here yet, but the examples are quite striking, definitely worse than the ChatGPT jailbreaks I saw.

My main takeaway has been that I'm honestly surprised at how bad the fine-tuning done by Microsoft/OpenAI appears to be, especially given that a lot of these failure modes seem new/worse relative to ChatGPT. I don't know why that might be the case, but the scary hypothesis here would be that Bing Chat is based on a new/larger pre-trained model (Microsoft claims Bing Chat is more powerful than ChatGPT) and that these sorts of more agentic failures are harder to remove in more capable/larger models, as we provided some evidence for in "Discovering Language Model Behaviors with Model-Written Evaluations".

Examples below (with new ones added as I find them)....

Appreciate you getting back to me. I was aware of this paper already and have previously worked with one of the authors.

I think that's their guess but they don't directly check here.

I also suspect that it doesn't matter very much.

- The sinuses have so much NO compared to the nose that this probably doesn't materially lower sinus concentrations.

- The power of humming goes down with each breath but is fully restored in 3 minutes, suggesting that whatever change happens in the sinuses is restored quickly.

- From my limited understanding of virology and immunology, alternating intensity of NO between sinuses and nose every three minutes is probably better than keeping

Post for a somewhat more general audience than the modal LessWrong reader, but gets at my actual thoughts on the topic.

In 2018 OpenAI defeated the world champions of Dota 2, a major esports game. This was hot on the heels of DeepMind’s AlphaGo performance against Lee Sedol in 2016, achieving superhuman Go performance way before anyone thought that might happen. AI benchmarks were being cleared at a pace which felt breathtaking at the time, papers were proudly published, and ML tools like TensorFlow (released in 2015) were coming online. To people already interested in AI, it was an exciting era. To everyone else, the world was unchanged.

Now Saturday Night Live sketches use sober discussions of AI risk as the backdrop for their actual jokes, there are hundreds...

I think self-critique runs into the issues I describe in the post, though without insider information I'm not certain. Naively it seems like existing distortions would become larger with self-critique, though.

For human rating/RL, it seems true that it's possible to be sample efficient (with human brain behavior as an existence proof), but as far as I know we don't actually know how to make it sample efficient in that way, and human feedback in the moment is even more finite than human text that's just out there. So I still see that taking longer than, say,...

tl;dr: LessWrong released an album! Listen to it now on Spotify, YouTube, YouTube Music, or Apple Music.

On April 1st 2024, the LessWrong team released an album using the then-most-recent AI music generation. All the music is fully AI-generated, and the lyrics are adapted (mostly by humans) from LessWrong posts (or other writing LessWrongers might be familiar with).

We made probably 3,000-4,000 song generations to get the 15 we felt happy about, which I think works out to about 5-10 hours of work per song we used (including all the dead ends and things that never worked out).

The album is called I Have Been A Good Bing. I think it is a pretty fun album and maybe you'd enjoy it if you listened to it! Some of my favourites are...

I agree! I’ve been writing and then generating my own LW-inspired songs lately.

I wish it was common for LW posts to have accompanying songs now.

Authors: Senthooran Rajamanoharan*, Arthur Conmy*, Lewis Smith, Tom Lieberum, Vikrant Varma, János Kramár, Rohin Shah, Neel Nanda

A new paper from the Google DeepMind mech interp team: Improving Dictionary Learning with Gated Sparse Autoencoders!

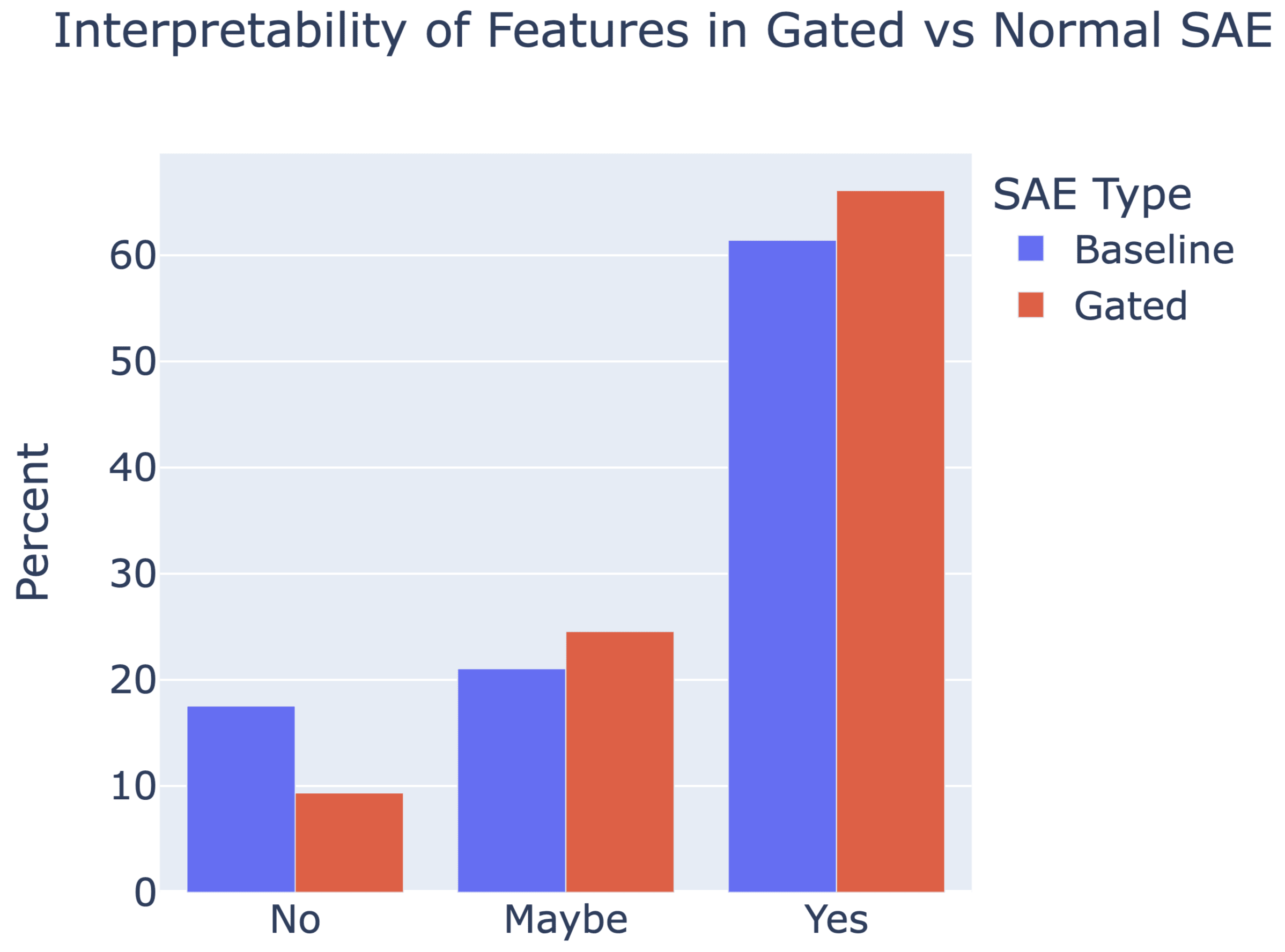

Gated SAEs are a new Sparse Autoencoder architecture that seems to be a significant Pareto-improvement over normal SAEs, verified on models up to Gemma 7B. They are now our team's preferred way to train sparse autoencoders, and we'd love to see them adopted by the community! (Or to be convinced that it would be a bad idea for them to be adopted by the community!)

They achieve similar reconstruction with about half as many firing features, while being comparably or more interpretable (confidence interval for the increase is 0%-13%).

See Sen's Twitter summary, my Twitter summary, and the paper!

Re dictionary width: 2**17 (~131K) for most Gated SAEs and 3*(2**16) for baseline SAEs, except at the (Pythia-2.8B, Residual Stream) sites, where we used 2**15 for Gated and 3*(2**14) for baseline, since early runs of these had lots of feature death. (This'll be added to the paper soon, sorry!) I'll leave the other Qs for my co-authors.
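For readers who want the quoted widths as plain numbers, here is a minimal sketch that just evaluates the expressions in the comment above. The dictionary keys are illustrative labels, not names from the paper, and nothing here reflects the actual training code.

```python
# Dictionary widths quoted in the comment, evaluated explicitly.
# The key names are hypothetical labels chosen for this sketch.
widths = {
    "gated_default": 2**17,
    "baseline_default": 3 * (2**16),
    "gated_pythia_2.8b_resid": 2**15,
    "baseline_pythia_2.8b_resid": 3 * (2**14),
}

for name, width in widths.items():
    print(f"{name}: {width:,}")

# Ratio of baseline width to Gated width in each setting.
default_ratio = widths["baseline_default"] / widths["gated_default"]
pythia_ratio = widths["baseline_pythia_2.8b_resid"] / widths["gated_pythia_2.8b_resid"]
print(f"baseline/gated ratio: {default_ratio} (default), {pythia_ratio} (Pythia-2.8B resid)")
```

In both settings the baseline dictionary works out to 1.5 times as wide as the Gated one.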

Just before the Trinity test, Enrico Fermi decided he wanted a rough estimate of the blast's power before the diagnostic data came in. So he dropped some pieces of paper from his hand as the blast wave passed him, and used this to estimate that the blast was equivalent to 10 kilotons of TNT. His guess was remarkably accurate for having so little data: the true answer turned out to be 20 kilotons of TNT.

Fermi had a knack for making roughly-accurate estimates with very little data, and therefore such an estimate is known today as a Fermi estimate.
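One way to make "roughly accurate" concrete is to measure the miss as a multiplicative factor rather than a difference. The sketch below does that for the Trinity numbers quoted above; the function name `factor_error` is an illustrative choice, not something from the post.

```python
import math

# Figures from the Trinity anecdote above, in kilotons of TNT.
fermi_estimate_kt = 10.0
true_yield_kt = 20.0


def factor_error(estimate: float, truth: float) -> float:
    """Multiplicative miss between two positive values; always >= 1."""
    return max(estimate / truth, truth / estimate)


factor = factor_error(fermi_estimate_kt, true_yield_kt)
orders_of_magnitude = math.log10(factor)

print(f"Off by a factor of {factor:g}")
print(f"That is about {orders_of_magnitude:.2f} orders of magnitude")
```

Measured this way, Fermi's estimate was off by a factor of 2, or roughly 0.3 orders of magnitude.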

Why bother with Fermi estimates, if your estimates are likely to be off by a factor of 2 or even 10? Often, getting an estimate within a factor of 10...

To help remember this post and its methods, I broke it down into song lyrics and used Udio to make the song.

U.S. Secretary of Commerce Gina Raimondo announced today additional members of the executive leadership team of the U.S. AI Safety Institute (AISI), which is housed at the National Institute of Standards and Technology (NIST). Raimondo named Paul Christiano as Head of AI Safety, Adam Russell as Chief Vision Officer, Mara Campbell as Acting Chief Operating Officer and Chief of Staff, Rob Reich as Senior Advisor, and Mark Latonero as Head of International Engagement. They will join AISI Director Elizabeth Kelly and Chief Technology Officer Elham Tabassi, who were announced in February. The AISI was established within NIST at the direction of President Biden, including to support the responsibilities assigned to the Department of Commerce under the President’s landmark Executive Order.

...Paul Christiano, Head of AI Safety, will design

This thread seems unproductive to me, so I'm going to bow out after this. But in case you're actually curious: at least in the case of Open Philanthropy, it's easy to check what their primary concerns are because they write them up. And accidental release from dual use research is one of them.