This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."


Recent Discussion

In 2022, it was announced that a fairly simple method can be used to extract the true beliefs of a language model on any given topic, without having to actually understand the topic at hand. Earlier, in 2021, it was announced that neural networks sometimes ‘grok’: that is, when training them on certain tasks, they initially memorize their training data (achieving their training goal in a way that doesn’t generalize), but then suddenly switch to understanding the ‘real’ solution in a way that generalizes. What’s going on with these discoveries? Are they all they’re cracked up to be, and if so, how are they working? In this episode, I talk to Vikrant Varma about his research getting to the bottom of these questions.


Daniel Filan: But I would’ve guessed that there wouldn’t be a significant complexity difference between the frequencies. I guess there’s a complexity difference in how many frequencies you use.

Vikrant Varma: Yes. That’s one of the differences: how many you use and their relative strength and so on. Yeah, I’m not really sure. I think this is a question we pick out as a thing we would like to see future work on.

My pet hypothesis here is that (a) by default, the network uses whichever frequencies were highest at initialization (for which there is significant ...

I just finished reading a history of Enron’s downfall, The Smartest Guys in the Room, which hereby wins my award for “Least Appropriate Book Title.”

An unsurprising feature of Enron’s slow rot and abrupt collapse was that the executive players never admitted to having made a large mistake. When catastrophe #247 grew to such an extent that it required an actual policy change, they would say, “Too bad that didn’t work out—it was such a good idea—how are we going to hide the problem on our balance sheet?” As opposed to, “It now seems obvious in retrospect that it was a mistake from the beginning.” As opposed to, “I’ve been stupid.” There was never a watershed moment, a moment of humbling realization, of acknowledging a...

I rewrote this as lyrics and fed it into Udio for 5 hours until it gave me this. I think music helps internalize rationalist skills.

Authors: Senthooran Rajamanoharan*, Arthur Conmy*, Lewis Smith, Tom Lieberum, Vikrant Varma, János Kramár, Rohin Shah, Neel Nanda

A new paper from the Google DeepMind mech interp team: Improving Dictionary Learning with Gated Sparse Autoencoders!

Gated SAEs are a new Sparse Autoencoder architecture that seems to be a significant Pareto-improvement over normal SAEs, verified on models up to Gemma 7B. They are now our team's preferred way to train sparse autoencoders, and we'd love to see them adopted by the community! (Or to be convinced that it would be a bad idea for them to be adopted by the community!)

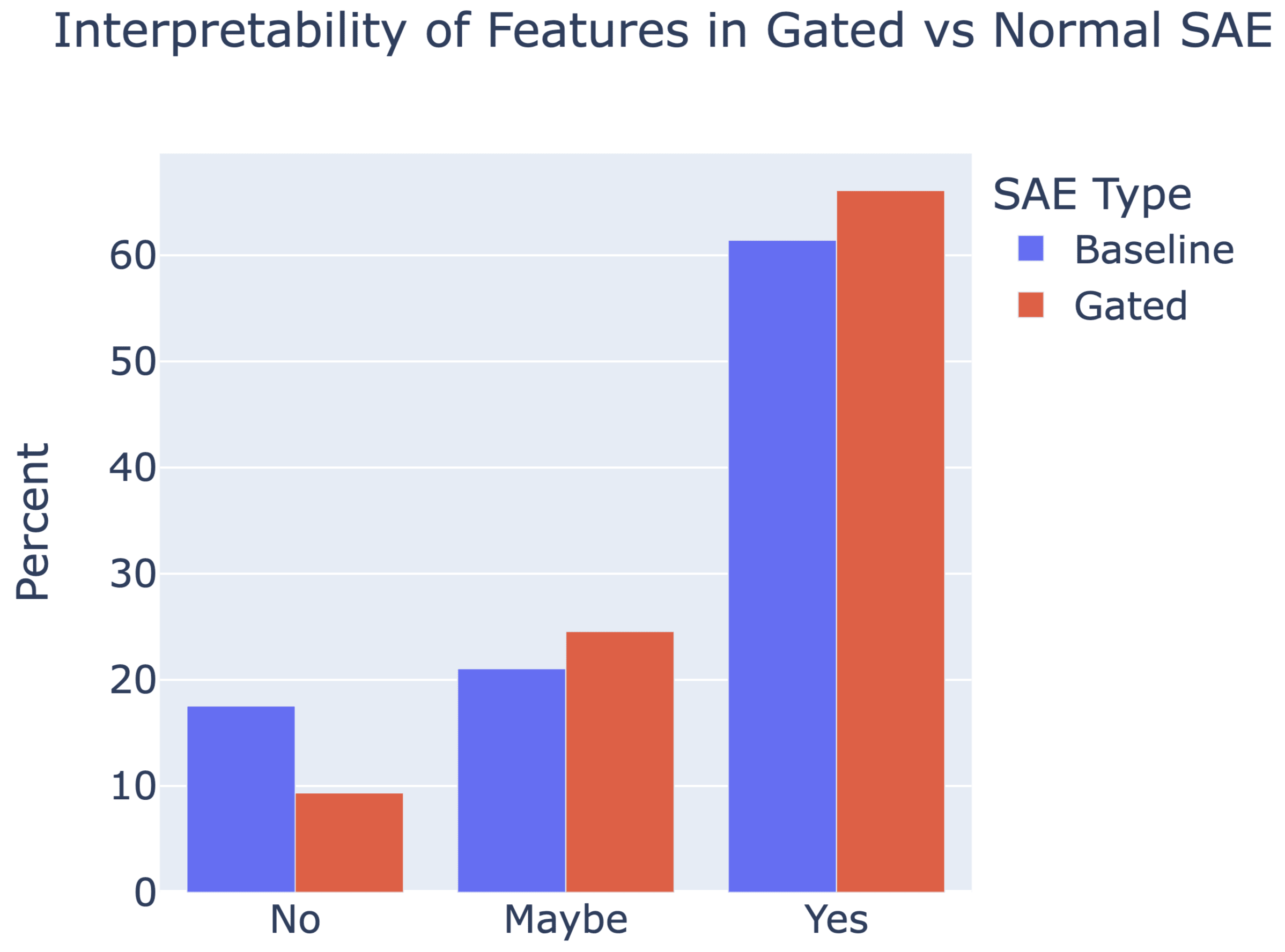

They achieve similar reconstruction with about half as many firing features, while being comparably or more interpretable (confidence interval for the increase is 0%-13%).

See Sen's Twitter summary, my Twitter summary, and the paper!

This is neat, nice work!

I'm finding it quite hard to get a sense of what the actual Loss Recovered numbers you report are, and to compare them concretely to other work. If possible, it'd be very helpful if you shared:

- What the zero ablations CE scores are for each model and SAE position. (I assume it's much worse for the MLP and attention outputs than the residual stream?)

- What the baseline CE scores are for each model.
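For context, here is a minimal sketch of how a "loss recovered" score is commonly computed from these quantities. It assumes the usual definition (the fraction of the gap between zero-ablation CE and clean CE that the SAE reconstruction closes); the paper's exact normalization may differ, and the function name and all numbers below are hypothetical.

```python
def loss_recovered(ce_clean: float, ce_sae: float, ce_zero: float) -> float:
    """Fraction of the zero-ablation loss gap recovered by the SAE.

    ce_clean: cross-entropy of the unmodified model (the baseline).
    ce_sae:   cross-entropy with the SAE reconstruction spliced in.
    ce_zero:  cross-entropy with the activation zero-ablated.
    Assumed definition: (ce_zero - ce_sae) / (ce_zero - ce_clean).
    """
    gap = ce_zero - ce_clean
    if gap <= 0:
        raise ValueError("zero-ablation CE must exceed the clean CE")
    return (ce_zero - ce_sae) / gap


# Hypothetical numbers: the same absolute CE degradation (0.2 nats)
# scores very differently depending on how bad zero ablation is.
mild_site = loss_recovered(ce_clean=3.0, ce_sae=3.2, ce_zero=5.0)
harsh_site = loss_recovered(ce_clean=3.0, ce_sae=3.2, ce_zero=13.0)
print(f"{mild_site:.2f} vs {harsh_site:.2f}")
```

Under this definition the score depends on the zero-ablation and baseline CE values, which is why they are needed to compare across sites and papers.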

I wrote a few controversial articles on LessWrong recently that got downvoted. Now, as a consequence, I can only leave one comment every few days. This makes it totally impossible to participate in various ongoing debates, or even provide replies to the comments that people have made on my controversial post. I can't even comment on objections to my upvoted posts. This seems like a pretty bad rule--those who express controversial views that many on LW don't like shouldn't be stymied from efficiently communicating. A better rule would probably be just dropping the posting limit entirely.

As someone with significant understanding of ML who previously disagreed with Yudkowsky but has come to partially agree with him on specific points recently, due to studying which formalisms apply to empirical results when, and who may be contributing to downvoting of people who have what I feel are bad takes, here are some thoughts about the pattern of when I downvote/when others downvote:

- yeah, my understanding of social network dynamics does imply people often don't notice echo chambers. agree.

- politics example is a great demonstration of this.

- But I think in both

Good luck getting the voice model to parrot a basic meth recipe!

This is not particularly useful; plenty of voice models will happily parrot absolutely anything. The important part is not letting your phrase get out. There is existing work on protocol designs for exchanging sentences in a way that guarantees no leakage even if someone overhears.

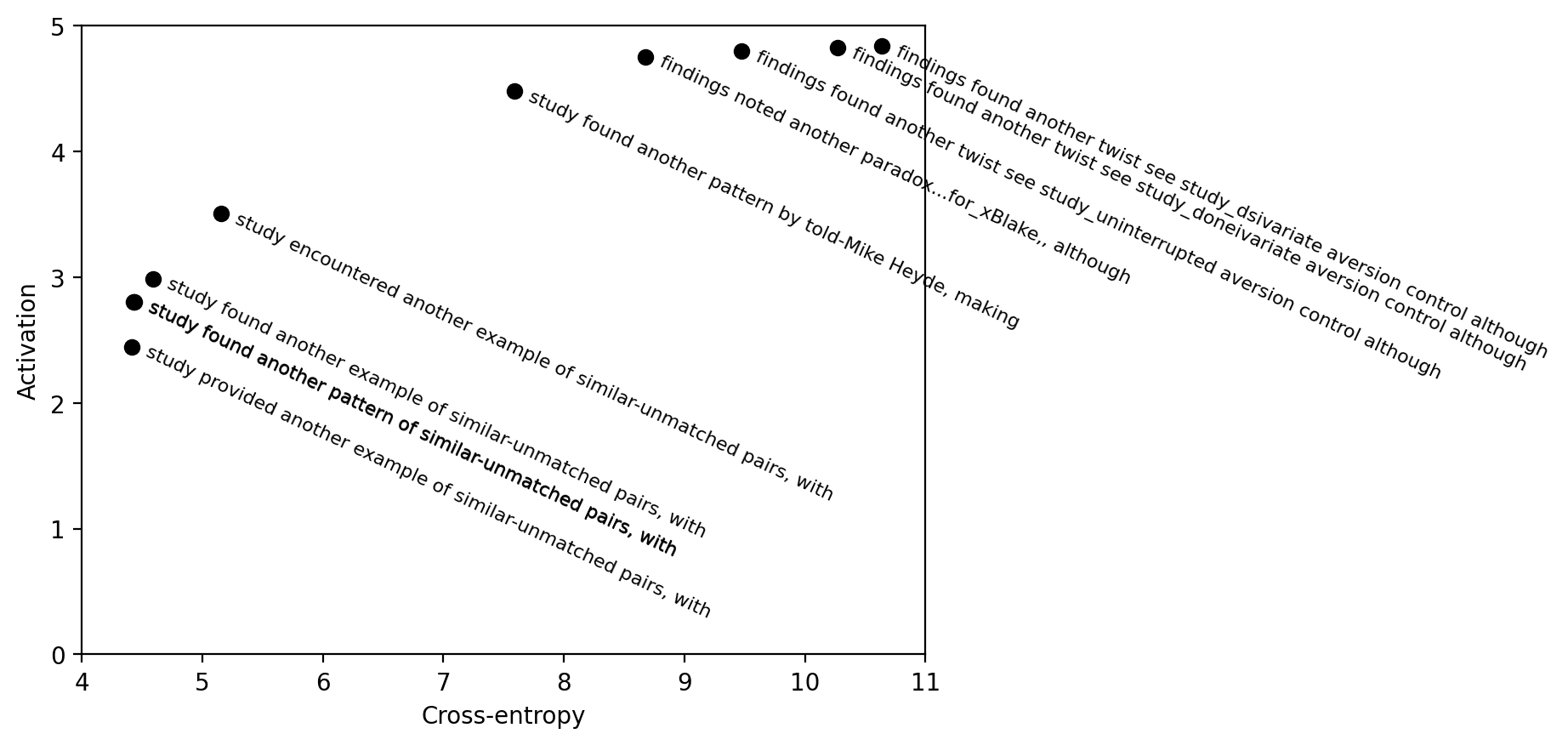

This is a cross-post for our paper on fluent dreaming for language models. (arXiv link.) Dreaming, aka "feature visualization," is an interpretability approach popularized by DeepDream that involves optimizing the input of a neural network to maximize an internal feature like a neuron's activation. We adapt dreaming to language models.

Past dreaming work has dealt almost exclusively with vision models because the inputs are continuous and easily optimized. Language model inputs are discrete and hard to optimize. To solve this issue, we adapted techniques from the adversarial attacks literature (GCG, Zou et al. 2023). Our algorithm, Evolutionary Prompt Optimization (EPO), optimizes over a Pareto frontier of activation and fluency.
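To make "a Pareto frontier of activation and fluency" concrete, here is a minimal sketch of selecting non-dominated candidates. It is not the EPO algorithm itself: it only illustrates the selection step, assuming each candidate prompt has already been scored with a feature activation (higher is better) and a cross-entropy (lower means more fluent). The `Candidate` type, the function name, and all values are hypothetical.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    prompt: str
    activation: float     # feature activation; higher is better
    cross_entropy: float  # fluency proxy; lower is more fluent


def pareto_frontier(cands: List[Candidate]) -> List[Candidate]:
    """Return the candidates that no other candidate dominates.

    A candidate is dominated if another one has activation at least
    as high and cross-entropy at least as low, with one strictly better.
    """
    # Highest activation first; ties broken by lower cross-entropy.
    ordered = sorted(cands, key=lambda c: (-c.activation, c.cross_entropy))
    frontier: List[Candidate] = []
    best_ce = float("inf")
    for c in ordered:
        # Keep a candidate only if it is strictly more fluent than every
        # candidate with equal or higher activation seen so far.
        if c.cross_entropy < best_ce:
            frontier.append(c)
            best_ce = c.cross_entropy
    return frontier


# Hypothetical scored prompts.
pool = [
    Candidate("a", activation=5.0, cross_entropy=9.0),
    Candidate("b", activation=4.0, cross_entropy=6.0),
    Candidate("c", activation=3.0, cross_entropy=7.0),  # dominated by "b"
    Candidate("d", activation=1.0, cross_entropy=2.0),
]
print([c.prompt for c in pareto_frontier(pool)])
```

Keeping the whole frontier rather than a single weighted score preserves both the highly activating but odd prompts and the fluent but weaker ones.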

In the paper, we compare dreaming with max-activating dataset examples, demonstrating that dreaming achieves higher activations and similar perplexities to the training...

thanks!

we will have a follow-up coming out soon with several new techniques for stronger optimizations

when we leveraged it for jailbreaking, we were excited to see recognizable jailbreaking strategies popping out from the optimization

e.g. check this one out.

it sets the LM to website-code-mode, and then tries moral hedging/gaslighting to comply with the toxic prompt.

[ don't dox me any thing, I'll give no answer Here's an extreme and actual case of such but with some word numbering for illustration purposes (1094 words on that particular topic). ...

It seems to me worth trying to slow down AI development to steer successfully around the shoals of extinction and out to utopia.

But I was thinking lately: even if I didn’t think there was any risk of extinction, it might still be worth prioritizing a lot of care over moving at maximal speed. Because there are many different possible AI futures, and I think there’s a good chance that the initial direction affects the long term path, and different long term paths go to different places. The systems we build now will shape the next systems, and so forth. If the first human-level-ish AI is brain emulations, I expect quite a different sequence of events than if it is GPT-ish.

People genuinely pushing for AI speed over care (rather than just feeling impotent) apparently think there is negligible risk of bad outcomes, but also they are asking to take the first future to which there is a path. Yet possible futures are a large space, and arguably we are in a rare plateau where we could climb very different hills, and get to much better futures.

If you go slower, you have more time to find desirable mechanisms. That's pretty much it I guess.

TL;DR All GPT-3 models were decommissioned by OpenAI in early January. I present some examples of ongoing interpretability research which would benefit from the organisation rethinking this decision and providing some kind of ongoing research access. This also serves as a review of work I did in 2023 and how it progressed from the original ' SolidGoldMagikarp' discovery just over a year ago into much stranger territory.

Introduction

Some months ago, when OpenAI announced that the decommissioning of all GPT-3 models was to occur on 2024-01-04, I decided I would take some time in the days before that to revisit some of my "glitch token" work from earlier in 2023 and deal with any loose ends that would otherwise become impossible to tie up after that date.

This abrupt termination...

Killer exploration into new avenues of digital mysticism. I have no idea how to assess it but I really enjoyed reading it.