Here's a list of my donations so far this year (put together as part of thinking through whether I and others should participate in an OpenAI equity donation round).

They are roughly in chronological order (though it's possible I missed one or two). I include some thoughts on what I've learned and what I'm now doing differently at the bottom.

- $100k to Lightcone

- This grant was largely motivated by my respect for Oliver Habryka's quality of thinking and personal judgment.

- This ended up being partially matched by the Survival and Flourishing Fund (though I didn't know it would be when I made it). Note that they'll continue (partially) matching donations to Lightcone until the end of March 2026.

- $50k to the Alignment of Complex Systems (ACS) research group

- This grant was largely motivated by my respect for Jan Kulveit's philosophical and technical thinking.

- $20k to Alexander Gietelink Oldenziel for support with running agent foundations conferences.

- ~$25k to Inference Magazine to host a public debate in London on the plausibility of the intelligence explosion.

- $100k to Apart Research, who run hackathons where people can engage with AI safety research in a hands-on way (technically made with my

I'm glad you're betting on your own taste/expertise instead of donating on behalf of the community—that seems sensible to me.

What are the best places to start reading about why you are uninterested in almost all commonly proposed AI governance interventions, and about the AI governance interventions you are interested in? I imagine the curriculum sheds some light on this, but it's quite long.

I feel kinda frustrated whenever "shard theory" comes up in a conversation, because it's not a theory, or even a hypothesis. In terms of its literal content, it basically seems to be a reframing of the "default" stance towards neural networks often taken by ML researchers (especially deep learning skeptics), which is "assume they're just a set of heuristics".

This is a particular pity because I think there's a version of the "shard" framing which would actually be useful, but which shard advocates go out of their way to avoid. Specifically: we should be interested in "subagents" which are formed via hierarchical composition of heuristics and/or lower-level subagents, and which are increasingly "goal-directed" as you go up the hierarchy. This is an old idea, FWIW; e.g. it's how Minsky frames intelligence in Society of Mind. And it's also somewhat consistent with the claim made in the original shard theory post, that "shards are just collections of subshards".

The problem is the "just". The post also says "shards are not full subagents", and that "we currently estimate that most shards are 'optimizers' to the extent that a bacterium or a thermostat is an optimizer." But the whole point...

I am not as negative on it as you are -- it seems an improvement over the 'Bag O' Heuristics' model and the 'expected utility maximizer' model. But I agree with the critique and said something similar here:

...you go on to talk about shards eventually values-handshaking with each other. While I agree that shard theory is a big improvement over the models that came before it (which I call rational agent model and bag o' heuristics model) I think shard theory currently has a big hole in the middle that mirrors the hole between bag o' heuristics and rational agents. Namely, shard theory currently basically seems to be saying "At first, you get very simple shards, like the following examples: IF diamond-nearby THEN goto diamond. Then, eventually, you have a bunch of competing shards that are best modelled as rational agents; they have beliefs and desires of their own, and even negotiate with each other!" My response is "but what happens in the middle? Seems super important! Also haven't you just reproduced the problem but inside the head?" (The problem being, when modelling AGI we always understood that it would start out being just a crappy bag of heuristics and end up a scary rational ag

FWIW I'm potentially interested in interviewing you (and anyone else you'd recommend) and then taking a shot at writing the 101-level content myself.

An analogy that points at one way I think the instrumental/terminal goal distinction is confused:

Imagine trying to classify genes as either instrumentally or terminally valuable from the perspective of evolution. Instrumental genes encode traits that help an organism reproduce. Terminal genes, by contrast, are the "payload" which is being passed down the generations for their own sake.

This model might seem silly, but it actually makes a bunch of useful predictions. Pick some set of genes which are so crucial for survival that they're seldom if ever modified (e.g. the genes for chlorophyll in plants, or genes for ATP production in animals). Treating those genes as "terminal" lets you "predict" that other genes will gradually evolve in whichever ways help most to pass those terminal genes on, which is what we in fact see.

But of course there's no such thing as "terminal genes". What's actually going on is that some genes evolved first, meaning that a bunch of downstream genes ended up selected for compatibility with them. In principle evolution would be fine with the terminal genes being replaced, it's just that it's computationally difficult to find a way to do so without breaking do...

Thinking step by step:

I like the point that fundamentally the structure is tree-like, and insofar as terminal goals are a thing it's basically just that they are the leaf nodes on the tree instead of branches or roots. Note that this doesn't mean terminal goals aren't a thing; the distinction is real and potentially important.

I think an improvement on the analogy would be to compare to a human organization rather than to a tree. In a human organization (such as a company or a bureaucracy) at first there is one person or a small group of people, and then they hire more people to help them with stuff (and retain the option to fire them if they stop helping), and then those people hire people, etc., and eventually you have six layers of middle management. Perhaps goals are like this. Evolution and/or reinforcement learning gives us some goals, and then those goals create subgoals to help them, and then those subgoals create subgoals, etc. In general when it starts to seem that a subgoal isn't going to help with the goal it's supposed to help with, it gets 'fired.' However, sometimes subgoals are 'sticky' and become terminal-ish goals, analogous to how it's sometimes hard to fire people & how t...

But of course there's no such thing as "terminal genes". What's actually going on is that some genes evolved first, meaning that a bunch of downstream genes ended up selected for compatibility with them. In principle evolution would be fine with the terminal genes being replaced, it's just that it's computationally difficult to find a way to do so without breaking downstream dependencies.

I think your analysis is incorrect. The book is called "The Selfish Gene". No basic unit of evolution is perfect, but probably the best available is the gene--which is to say, genomic locus (defined relative to surrounding context). An organism is a temporary coalition of its genes. Generally there's quite strong instrumental alignment between all the genes in an organism, but it's not always perfect, and you do get gene drives in nature. If a gene could favor itself at the expense of the other genes in that organism (in terms of overall population frequency), it totally would.

...I think this is a good analogy for how human values work. We start off with some early values, and then develop instrumental strategies for achieving them. Those instrumental strategies become crystallized and then give

This is great, and on an important topic that's right in the center of our collective ontology and where I've been feeling for a while that our concepts are inadequate.

Top level post! Top level post!

In response to an email about what a pro-human ideology for the future looks like, I wrote up the following:

The pro-human egregore I'm currently designing (which I call fractal empowerment) incorporates three key ideas:

Firstly, we can see virtue ethics as a way for less powerful agents to aggregate to form more powerful superagents that preserve the interests of those original less powerful agents. E.g. virtues like integrity, loyalty, etc., help prevent divide-and-conquer strategies. This would have been in the interests of the rest of the world when Europe was trying to colonize them, and will be in the best interests of humans when AIs try to conquer us.

Secondly, the most robust way for a more powerful agent to be altruistic towards a less powerful agent is not for it to optimize for that agent's welfare, but rather to optimize for its empowerment. This prevents predatory strategies from masquerading as altruism (e.g. agents claiming "I'll conquer you and then I'll empower you", which then somehow never get around to the second step).

Thirdly: the generational contract. From any given starting point, there are a huge number of possible coalitions which could form, an...

How would this ideology address value drift? I've been thinking a lot about the kind quoted in Morality is Scary. The way I would describe it now is that human morality is by default driven by a competitive status/signaling game, where often some random or historically contingent aspect of human value or motivation becomes the focal point of the game, and gets magnified/upweighted as a result of competitive dynamics, sometimes to an extreme, even absurd degree.

(Of course from the inside it doesn't look absurd, but instead feels like moral progress. One example of this that I happened across recently is filial piety in China, which became more and more extreme over time, until someone cutting off a piece of their flesh to prepare a medicinal broth for an ailing parent was held up as a moral exemplar.)

Related to this is my realization that the kind of philosophy you and I are familiar with (analytical philosophy, or more broadly careful/skeptical philosophy) doesn't exist in most of the world and may only exist in Anglophone countries as a historical accident. There, about 10,000 practitioners exist who are funded but ignored by the rest of the population. To most of humanity, "ph...

In some sense this is a core idea of UDT: when coordinating with forks of yourself, you defer to your unique last common ancestor. When it's not literally a fork of yourself, there's more arbitrariness but you can still often find a way to use history to narrow down on coordination Schelling points (e.g. "what would Jesus do").

I think this is a wholly incorrect line of thinking. UDT operates on your logical ancestor, not your literal one.

Say, if you know enough science, you know that the normal distribution is the maximum-entropy distribution for a fixed mean and variance, and therefore the optimal prior distribution under a certain set of assumptions. You can ask yourself the question "let's suppose that I haven't seen this evidence, what would be my prior probability?", get an answer, and cooperate with your counterfactual versions which have seen other evidence. But you can't cooperate with a hypothetical version of yourself which doesn't know what the normal distribution is, because if it doesn't know about the normal distribution, it can't predict how you would behave and account for this in cooperation.
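For readers who want the maximum-entropy fact spelled out, here is the standard Lagrange-multiplier derivation. It is textbook material rather than part of the original comment, and uses $\mu$ and $\sigma^2$ for the fixed mean and variance and $\lambda_0, \lambda_1, \lambda_2$ for the multipliers:

```latex
\max_{p}\; H[p] = -\int p(x)\ln p(x)\,dx
\quad\text{s.t.}\quad \int p(x)\,dx = 1,\;\; \int x\,p(x)\,dx = \mu,\;\; \int (x-\mu)^2\,p(x)\,dx = \sigma^2

\frac{\delta}{\delta p}\Big[H[p] + \lambda_0\!\int p + \lambda_1\!\int x\,p + \lambda_2\!\int (x-\mu)^2\,p\Big] = 0
\;\Rightarrow\; -\ln p(x) - 1 + \lambda_0 + \lambda_1 x + \lambda_2 (x-\mu)^2 = 0

p(x) = \exp\!\big(\lambda_0 - 1 + \lambda_1 x + \lambda_2 (x-\mu)^2\big)
\;\Rightarrow\; \lambda_1 = 0,\;\; \lambda_2 = -\tfrac{1}{2\sigma^2}
\;\Rightarrow\; p(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\exp\!\Big(-\frac{(x-\mu)^2}{2\sigma^2}\Big)
```

The mean constraint forces $\lambda_1 = 0$, the variance constraint fixes $\lambda_2$, and normalization fixes $\lambda_0$; the resulting maximum entropy is $\tfrac{1}{2}\ln(2\pi e\sigma^2)$.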

Sufficiently different versions of yourself are just logically uncorrelated with you and there is no game-theoretic reason to account for them.

Many of Paul Christiano's writings were valuable corrections to the dominant Yudkowskian paradigm of AI safety. However, I think that many of them (especially papers like Concrete Problems in AI Safety and posts like these two) also ended up providing a lot of intellectual cover for people to do "AI safety" work (especially within AGI companies) that isn't even trying to be scalable to much more powerful systems.

I want to register a prediction that "gradual disempowerment" will end up being (mis)used in a similar way. I don't really know what to do about this, but I intend to avoid using the term myself. My own research on related topics I cluster under headings like "understanding intelligence", "understanding political philosophy", and "understanding power". To me this kind of understanding-oriented approach seems more productive than trying to create a movement based around a class of threat models.

I do agree there is some risk of the type you describe, but mostly it does not match my practical experience so far.

The approach to "avoid using the term" makes little sense. There is a type difference between an area of study ('understanding power') and a dynamic ('gradual disempowerment'). I don't think you can substitute a term for an area of study for a term for a dynamic or threat model, so avoiding the term could mostly be done either by inventing another term for the dynamic, or by not thinking about the dynamic, or by similar moves, which seem epistemically unhealthy.

In practical terms I don't think there is much effort to "create a movement based around a class of threat models". At least as authors of the GD paper, when trying to support thinking about the problems, we use understanding-directed labels/pointers (Post-AGI Civilizational Equilibria), even though in many ways it could have been easier to use GD as a brand.

"Understanding power" is fine as a label for part of your writing, but in my view is basically unusable as term for coordination.

Also, in practical terms, gradual disempowerment does not seem a particularly convenient set of ideas for justifying that working in...

The impression I got from Ngo's post is that:

- assorted varieties of gradual disempowerment do seem like genuine long term threats

- however, by the nature of the idea, it involves talking a lot about relatively small present-day harms from AI

- therefore gradual disempowerment is highly at risk of being co-opted by people who mostly just want to talk about present-day harms, distracting from both AI x-risk overall and even perhaps from gradual-disempowerment-related x-risk

One fairly strong belief of mine is that Less Wrong's epistemic standards are not high enough to make solid intellectual progress here. So far my best effort to make that argument has been in the comment thread starting here. Looking back at that thread, I just noticed that a couple of those comments have been downvoted to negative karma. I don't think any of my comments have ever hit negative karma before; I find it particularly sad that the one time it happens is when I'm trying to explain why I think this community is failing at its key goal of cultivating better epistemics.

There's all sorts of arguments to be made here, which I don't have time to lay out in detail. But just step back for a moment. Tens or hundreds of thousands of academics are trying to figure out how the world works, spending their careers putting immense effort into reading and producing and reviewing papers. Even then, there's a massive replication crisis. And we're trying to produce reliable answers to much harder questions by, what, writing better blog posts, and hoping that a few of the best ideas stick? This is not what a desperate effort to find the truth looks like.

And we're trying to produce reliable answers to much harder questions by, what, writing better blog posts, and hoping that a few of the best ideas stick? This is not what a desperate effort to find the truth looks like.

It seems to me that maybe this is what a certain stage in the desperate effort to find the truth looks like?

Like, the early stages of intellectual progress look a lot like thinking about different ideas and seeing which ones stand up robustly to scrutiny. Then the best ones can be tested more rigorously and their edges refined through experimentation.

It seems to me like there needs to be some point in the desperate search for truth at which you're allowing for half-formed thoughts and unrefined hypotheses, or else you simply never get to a place where the hypotheses you're creating even brush up against the truth.

In the half-formed thoughts stage, I'd expect to see a lot of literature reviews, agendas laying out problems, and attempts to identify and question fundamental assumptions. I expect that (not blog-post-sized speculation) to be the hard part of the early stages of intellectual progress, and I don't see it right now.

Perhaps we can split this into technical AI safety and everything else. Above I'm mostly speaking about "everything else" that Less Wrong wants to solve, since AI safety is now a substantial enough field that its problems need to be solved in more systemic ways.

As mentioned in my reply to Ruby, this is not a critique of the LW team, but of the LW mentality. And I should have phrased my point more carefully - "epistemic standards are too low to make any progress" is clearly too strong a claim, it's more like "epistemic standards are low enough that they're an important bottleneck to progress". But I do think there's a substantive disagreement here. Perhaps the best way to spell it out is to look at the posts you linked and see why I'm less excited about them than you are.

I'd categorise the top posts in the 2018 review, and the ones you linked (excluding AI), as follows:

Interesting speculation about psychology and society, where I have no way of knowing if it's true:

- Local Validity as a Key to Sanity and Civilization

- The Loudest Alarm Is Probably False

- Anti-social punishment (which is, unlike the others, at least based on one (1) study).

- Babble

- Intelligent social web

- Unrolling social metacognition

- Simulacra levels

- Can you keep this secret?

Same as above but it's by Scott so it's a bit more rigorous and much more compelling:

- Is Science Slowing Down?

- The tails coming apart as a metaph

(Thanks for laying out your position in this level of depth. Sorry for how long this comment turned out. I guess I wanted to back up a bunch of my agreement with words. It's a comment for the sake of everyone else, not just you.)

I think there's something to what you're saying, that the mentality itself could be better. The Sequences have been criticized because Eliezer didn't cite previous thinkers all that much, but at least as far as the science goes, as you said, he was drawing on academic knowledge. I also think we've lost something precious with the absence of epic topic reviews by the likes of Luke. Kaj Sotala still brings in heavily from outside knowledge, John Wentworth did a great review on Biological Circuits, and we get SSC crossposts that have that, but otherwise posts aren't heavily referencing or building upon outside stuff. I concede that I would like to see a lot more of that.

I think Kaj was rightly disappointed that he didn't get more engagement with his post whose gist was "this is what the science really says about S1 & S2, one of your most cherished concepts, LW community".

I wouldn't say the typical approach is strictly bad, there's value in thinking freshly...

This is only tangentially relevant, but adding it here as some of you might find it interesting:

Venkatesh Rao has an excellent Twitter thread on why most independent research only reaches this kind of initial exploratory level (he tried it for a bit before moving to consulting). It's pretty pessimistic, but there is a somewhat more optimistic follow-up thread on potential new funding models. Key point is that the later stages are just really effortful and time-consuming, in a way that keeps out a lot of people trying to do this as a side project alongside a separate main job (which I think is the case for a lot of LW contributors?)

Quote from that thread:

Research =

a) long time between having an idea and having something to show for it that even the most sympathetic fellow crackpot would appreciate (not even pay for, just get)

b) a >10:1 ratio of background invisible thinking in notes, dead-ends, eliminating options etc

With a blogpost, it’s like a week of effort at most from idea to mvp, and at most a 3:1 ratio of invisible to visible. That’s sustainable as a hobby/side thing.

...To do research-grade thinking you basically have to be independently wealthy and accept 90% d

Thanks, these links seem great! I think this is a good (if slightly harsh) way of making a similar point to mine:

"I find that autodidacts who haven’t experienced institutional R&D environments have a self-congratulatory low threshold for what they count as research. It’s a bit like vanity publishing or fan fiction. This mismatch doesn’t exist as much in indie art, consulting, game dev etc"

Quoting your reply to Ruby below, I agree I'd like LessWrong to be much better at "being able to reliably produce and build on good ideas".

The reliability and focus feels most lacking to me on the building side, rather than the production, which I think we're doing quite well at. I think we've successfully formed a publishing platform that provides an audience who are intensely interested in good ideas around rationality, AI, and related subjects, and a lot of very generative and thoughtful people are writing down their ideas here.

We're low on the ability to connect people up to do more extensive work on these ideas – most good hypotheses and arguments don't get a great deal of follow up or further discussion.

Here are some subjects where I think there's been various people sharing substantive perspectives, but I think there's also a lot of space for more 'details' to get fleshed out and subquestions to be cleanly answered:

- Sabbath and Rest Days (Zvi, Lauren Lee, Jacobian, Scott)

- Moloch and Slack and Mazes (Scott, Eliezer, Zvi, Swentworth, Jameson)

- Inner/Outer Alignment (EvHub, Rafael, Paul, Swentworth, Steve2152)

- Embedded Agency + Optimization (Abram, Scott, Swentworth, Alex Fli

"I see a lot of (very high quality) raw energy here that wants shaping and directing, with the use of lots of tools for coordination (e.g. better collaboration tools)."

Yepp, I agree with this. I guess our main disagreement is whether the "low epistemic standards" framing is a useful way to shape that energy. I think it is because it'll push people towards realising how little evidence they actually have for many plausible-seeming hypotheses on this website. One proven claim is worth a dozen compelling hypotheses, but LW to a first approximation only produces the latter.

When you say "there's also a lot of space for more 'details' to get fleshed out and subquestions to be cleanly answered", I find myself expecting that this will involve people who believe the hypothesis continuing to build their castle in the sky, not analysis about why it might be wrong and why it's not.

That being said, LW is very good at producing "fake frameworks". So I don't want to discourage this too much. I'm just arguing that this is a different thing from building robust knowledge about the world.

I feel like this comment isn't critiquing a position I actually hold. For example, I don't believe that "the correct next move is for e.g. Eliezer and Paul to debate for 1000 hours". I am happy for people to work towards building evidence for their hypotheses in many ways, including fleshing out details, engaging with existing literature, experimentation, and operationalisation.

Perhaps this makes "proven claim" a misleading phrase to use. Perhaps more accurate to say: "one fully fleshed out theory is more valuable than a dozen intuitively compelling ideas". But having said that, I doubt that it's possible to fully flesh out a theory like simulacra levels without engaging with a bunch of academic literature and then making predictions.

I also agree with Raemon's response below.

I think I'm concretely worried that some of those models / paradigms (and some other ones on LW) don't seem pointed in a direction that leads obviously to "make falsifiable predictions."

And I can imagine worlds where "make falsifiable predictions" isn't the right next step, you need to play around with it more and get it fleshed out in your head before you can do that. But there is at least some writing on LW that feels to me like it leaps from "come up with an interesting idea" to "try to persuade people it's correct" without enough checking.

(In the case of IFS, I think Kaj's sequence is doing a great job of laying it out in a concrete way where it can then be meaningfully disagreed with. But the other people who've been playing around with IFS didn't really seem interested in that, and I feel like we got lucky that Kaj had the time and interest to do so.)

In general when we do intellectual work we have excellent epistemic standards, capable of listening to all sorts of evidence that other communities and fields would throw out, and listening to subtler evidence than most scientists ("faster than science")

"Being more openminded about what evidence to listen to" seems like a way in which we have lower epistemic standards than scientists, and also that's beneficial. It doesn't rebut my claim that there are some ways in which we have lower epistemic standards than many academic communities, and that's harmful.

In particular, the relevant question for me is: why doesn't LW have more depth? Sure, more depth requires more work, but on the timeframe of several years, and hundreds or thousands of contributors, it seems viable. And I'm proposing, as a hypothesis, that LW doesn't have enough depth because people don't care enough about depth - they're willing to accept ideas even before they've been explored in depth. If this explanation is correct, then it seems accurate to call it a problem with our epistemic standards - specifically, the standard of requiring (and rewarding) deep investigation and scholarship.

There's been a fair amount of discussion of that sort of thing here: https://www.lesswrong.com/tag/group-rationality There are also groups outside LW thinking about social technology such as RadicalxChange.

Imagine you took 5 separate LWers and asked them to create a unified consensus response to a given article. My guess is that they’d learn more through that collective effort, and produce a more useful response, than if they spent the same amount of time individually evaluating the article and posting their separate replies.

I'm not sure. If you put those 5 LWers together, I think there's a good chance that the highest status person speaks first and then the others anchor on what they say and then it effectively ends up being like a group project for school with the highest status person in charge. Some related links.

Much of the same is true of scientific journals. Creating a place to share and publish research is a pretty key piece of intellectual infrastructure, especially for researchers to create artifacts of their thinking along the way.

The point about being 'cross-posted' is where I disagree the most.

This is largely original content that counterfactually wouldn't have been published, or occasionally would have been published but to a much smaller audience. What Failure Looks Like wasn't crossposted, Anna's piece on reality-revealing puzzles wasn't crossposted. I think that Zvi would have still written some on mazes and simulacra, but I imagine he writes substantially more content given the cross-posting available for the LW audience. Could perhaps check his blogging frequency over the last few years to see if that tracks. I recall Zhu telling me he wrote his FAQ because LW offered an audience for it, and likely wouldn't have done so otherwise. I love everything Abram writes, and while he did have the Intelligent Agent Foundations Forum, it had a much more concise, technical style, tiny audience, and didn't have the conversational explanations and stories and cartoons that have...

Here is the best toy model I currently have for rational agents. Alas, it is super messy and hacky, but better than nothing. I'll call it the BAVM model; the one-sentence summary is "internal traders concurrently bet on beliefs, auction actions, vote on values, and merge minds". There's little novel here, I'm just throwing together a bunch of ideas from other people (especially Scott Garrabrant and Abram Demski).

In more detail, the three main components are:

- A prediction market

- An action auction

- A value election

You also have some set of traders, who can simultaneously trade on any combination of these three. Traders earn money in two ways:

- Making accurate predictions about future sensory experiences on the market.

- Taking actions which lead to reward or increase the agent's expected future value.

They spend money in three ways:

- Bidding to control the agent's actions for the next N timesteps.

- Voting on what actions get reward and what states are assigned value.

- Running the computations required to figure out all these trades.

Values are therefore dominated by whichever traders earn money from predictions or actions, who will disproportionately vote for values that are formulated in the same on...
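A minimal Python sketch of one timestep of this loop may help make the moving parts concrete. It rests on simplifying assumptions of my own, not the model as described: traders follow fixed hand-coded strategies, the prediction market is a parimutuel pot over a single binary observation, the action auction is first-price and buys control for one timestep (N = 1), the value election only decides which action gets rewarded (not state values), and computation costs a flat fee per step. `Trader`, `prediction_market`, and `step` are hypothetical names used for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Trader:
    """Hypothetical internal trader: a wallet plus fixed, hand-coded strategies."""
    name: str
    wealth: float
    prediction: float       # P(next observation == 1); keep strictly inside (0, 1)
    preferred_action: str   # action this trader bids to control
    value_vote: str         # action this trader votes to have rewarded
    stake_fraction: float = 0.1
    bid_fraction: float = 0.1
    vote_fraction: float = 0.05


def prediction_market(traders, outcome):
    """Parimutuel-style market: stakes go into a pot that is split in proportion to
    stake times the credence each trader gave the realized outcome (0 or 1)."""
    stakes = {t.name: t.wealth * t.stake_fraction for t in traders}
    for t in traders:
        t.wealth -= stakes[t.name]
    pot = sum(stakes.values())
    credence = {t.name: t.prediction if outcome == 1 else 1.0 - t.prediction
                for t in traders}
    total_weight = sum(stakes[n] * credence[n] for n in stakes)
    for t in traders:
        t.wealth += pot * stakes[t.name] * credence[t.name] / total_weight


def step(traders, outcome, reward=5.0, compute_cost=0.01):
    """One timestep: pay for compute, run the value election, auction control of
    the next action, pay out reward, then settle the market on `outcome`."""
    for t in traders:  # every trader pays a flat fee for the computation it ran
        t.wealth -= compute_cost

    votes = {}  # value election: money spent is voting weight
    for t in traders:
        spend = t.wealth * t.vote_fraction
        t.wealth -= spend
        votes[t.value_vote] = votes.get(t.value_vote, 0.0) + spend
    rewarded_action = max(votes, key=votes.get)

    bids = {t.name: t.wealth * t.bid_fraction for t in traders}  # first-price auction
    winner = max(traders, key=lambda t: bids[t.name])
    winner.wealth -= bids[winner.name]
    action = winner.preferred_action

    if action == rewarded_action:  # reward goes to whoever controlled the action
        winner.wealth += reward

    prediction_market(traders, outcome)
    return action, rewarded_action


if __name__ == "__main__":
    a = Trader("a", 120.0, prediction=0.9, preferred_action="explore", value_vote="explore")
    b = Trader("b", 100.0, prediction=0.1, preferred_action="rest", value_vote="rest")
    print(step([a, b], outcome=1))  # prints ('explore', 'explore')
    print(round(a.wealth, 2), round(b.wealth, 2))
```

Because votes and bids scale with wealth in this sketch, traders that earn more from accurate predictions or rewarded actions get more say over which actions are rewarded and taken next, which mirrors the claim above that values end up dominated by whichever traders earn money from predictions or actions.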

An analogy that might be banal, but might be interesting:

One reason (the main reason?) that computers use discrete encodings is to make error correction easier. A continuous signal will gradually drift over time. Conversely, if the signal is frequently rounded to the nearest discrete value, then it might remain error-free for a long time. (I think this is also the reason why the two most complicated biological information-processing systems use discrete encodings: DNA base pairs and neural spikes. EDIT: Neural spikes may seem continuous in the time dimension, but the concept of "brain waves" makes me suspect that the time intervals between them are better understood as discrete.)
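A toy Python simulation of the error-correction point, assuming integer signal levels and uniform copying noise smaller than half the spacing between levels (both are my assumptions, not claims from the text). `transmit` is a hypothetical helper: without rounding, the per-copy errors add up as a random walk; with rounding after every copy, each small error is snapped away before it can accumulate.

```python
import random


def transmit(value, steps, noise, quantize, seed=0):
    """Copy `value` `steps` times, adding uniform noise in [-noise, noise] per copy.

    If `quantize` is True, the value is rounded to the nearest integer level after
    every copy, a crude stand-in for a discrete encoding.
    """
    rng = random.Random(seed)
    for _ in range(steps):
        value += rng.uniform(-noise, noise)
        if quantize:
            value = round(value)
    return value


if __name__ == "__main__":
    # Continuous signal: errors accumulate as a random walk away from 3.0.
    print(transmit(3.0, steps=10_000, noise=0.2, quantize=False))
    # Discrete signal: each error of at most 0.2 is rounded away.
    print(transmit(3, steps=10_000, noise=0.2, quantize=True))  # prints 3
```

If the noise amplitude reaches 0.5 or more, a single copy can round to the wrong level, so the discrete scheme only helps when errors are corrected frequently, while they are still small.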

Separately, agents tend to define discrete boundaries around themselves—e.g. countries try to have sharp borders rather than fuzzy borders. One reason (the main reason?) is to make themselves easier to defend: with sharp borders there's a clear Schelling point for when to attack invaders. Without that, invaders might "drift in" over time.

The logistics of defending oneself vary by type of agent. For physical agents, perhaps fuzzy boundaries are just not possible to implement (e.g. humans need to literally hold the water inside us). However, many human groups (e.g. social classes) have initiation rituals which clearly demarcate who's in and who's out, even though in principle it'd be fairly easy for them to have a gradual/continuous metric of membership (like how many "points" members have gotten). We might be able to explain this as a way of giving them social defensibility.

A further potential extension here is to point out that modern hiveminds (Twitter / X / Bsky) changed group membership in many political groups from something explicit ("We let this person write in our [Conservative / Liberal / Leftist / etc.] magazine / published them in our newspaper") to something very fuzzy and indeterminate ("Well, they call themselves a [Conservative / Liberal / Leftist / etc.], and they're huge on Twitter, and they say some of the kinds of things [Conservative / Liberal / Leftist / etc.] people say, so I guess they're a [Conservative / Liberal / Leftist / etc.].")

I think this is a really big part of why the free market of ideas has stopped working in the US over the last decade or two.

Yet more speculative is a preferred solution of mine: intermediate groups within hiveminds, such that no person can post in the hivemind without being part of such a group, and such that both person and group are clearly associated with each other. This permits:

- Membership to be explicit

- Bad actors (according to group norms) to be actually kicked out proactively, rather than degrading norms

- Multi-level selection between group norms, where you can just block large groups that do not adopt truthseeking norms

- More conscious shaping of the egregore.

But this proposed solution is much more speculative than the diagnosis of the problem.

Someone on the EA forum asked why I've updated away from public outreach as a valuable strategy. My response:

I used to not actually believe in heavy-tailed impact. On some gut level I thought that early rationalists (and to a lesser extent EAs) had "gotten lucky" in being way more right than academic consensus about AI progress. I also implicitly believed that e.g. Thiel and Musk and so on kept getting lucky, because I didn't want to picture a world in which they were actually just skillful enough to keep succeeding (due to various psychological blockers).

Now, thanks to dealing with a bunch of those blockers, I have internalized to a much greater extent that you can actually be good not just lucky. This means that I'm no longer interested in strategies that involve recruiting a whole bunch of people and hoping something good comes out of it. Instead I am trying to target outreach precisely to the very best people, without compromising much.

Relatedly, I've updated that the very best thinkers in this space are still disproportionately the people who were around very early. The people you need to soften/moderate your message to reach (or who need social proof in order to get involved)...

The people you need to soften/moderate your message to reach (or who need social proof in order to get involved) are seldom going to be the ones who can think clearly about this stuff.

I strongly agree with this. (I wrote a post about it years ago.[1])

Even among the people who were not "in early", of the ones I most respect, and who seem to me to be doing the most impressive work that I'm most grateful to have in the world, zero of them needed hand-holding or "outreach" to get them on board.

Writing the Sequences was an amazing, high-quality intervention that continues to pay dividends to this day. I think writing on the internet about the things that you think are important is a fantastic strategy, at least if your intellectual taste is good.

The payoff of most of the "movement building" and "community building" seems much murkier to me. At least some of it was clearly positive, but I don't know if it was positive on net (I think a smaller and more intense EA than the one we have in practice probably would have been better).

There's selection bias in the kinds of community building I observed, but it seems to me that community building was more effective to the extent that it wa...

I agree with the main generator of this post (a small number of people produce a wildly disproportionate amount of the intellectual progress on hard problems) and one of the conclusions (don't water down your messages at all, if people need watered down messages they are unlikely to be helpful) but I think there's significant value in trying to communicate the hard problem of alignment broadly anyway because:

- Filtering who are the best people is expensive and error-prone, so if you don't put the correct models in general circulation even pretty great people might just not become aware of them

- People who are highly competent but not highly confident often seem to run into people who have been misinformed, and then become less sure of their own positions; having the main threat models in wider circulation would help those people get less distracted

- Planting lots of seeds can be relatively cheap.

Also, related anecdote, I ran ~8 retreats at my house covering around 60 people in 2022/23. I got a decent read on how much of the core stack of alignment concepts at least half of them had, and how often they made hopeful mistakes which were transparently going to fail based on not hav...

Some thoughts on public outreach and "Were they early because they were good or lucky?"

- Who are the best newcomers to AI safety? I'd be interested to hear anyone's takes, not just Richard's. Who has done great work (by your lights) since joining after ChatGPT?

- Rob Miles was the high watermark of public outreach. Unfortunately he stopped making videos. I'd be far more excited by a newcomer if they were persuaded by a Rob Miles video than an 80K video -- videos like 80K's "We're Not Ready for Superintelligence"[1] are better on legible/easy-to-measure dimensions but worse in some more important way I think.

- I observe a suspicious amount of 'social contagion' among the pre-ChatGPT AI Safety crowd, which updates me somewhat in favour of "lucky" over "good".[2]

[2] A bit anecdotal but: there are ~ a dozen people who went to our college in 2017-2020 now working full-time in AI safety, which is much higher than at other colleges at the same university. I'm not saying any of us are particularly "great" -- but this suggests social contagion / information cascade, rather than "we figured this stuff out from the empty string". Maybe if you go back further (e.g. 2012-2016) there was less social co

In trying to reply to this comment I identified four "waves" of AI safety, and listed the central people in each wave. Since this is socially complicated I'll only share the full list of the first wave here, and please note that this is all based on fuzzy intuitions gained via gossip and other unreliable sources.

The first wave I’ll call the “founders”; I think of them as the people who set up the early institutions and memeplexes of AI safety before around 2015. My list:

- Eliezer Yudkowsky

- Michael Vassar

- Anna Salamon

- Carl Shulman

- Scott Alexander

- Holden Karnofsky

- Nick Bostrom

- Robin Hanson

- Wei Dai

- Shane Legg

- Geoff Anders

The second wave I’ll call the “old guard”; those were the people who joined or supported the founders before around 2015. A few central examples include Paul Christiano, Chris Olah, Andrew Critch and Oliver Habryka.

Around 2014/2015 AI safety became significantly more professionalized and growth-oriented. Bostrom published Superintelligence, the Puerto Rico conference happened, OpenAI was founded, DeepMind started a safety team (though I don't recall exactly when), and EA started seriously pushing people towards AI safety. I’ll call the people who entered the field from then un...

My guess would be that nowadays many people who could bring a fresh perspective, or simply high-caliber original thinking, get either selected out/drowned out or are pushed through social and financial incentives to align their thinking towards more "mainstream" views.

Being able to take future AI seriously as a risk seems to be highly correlated to being able to take COVID seriously as a risk in February 2020.

- The key skill here may be as simple as being able to selectively turn off normalcy bias in the face of highly unusual news.

- A closely related "skill" may be a certain general pessimism about future events, the sort of thing economists jokingly describe as "correctly predicting 12 out of the last 6 recessions."

That said, mass public action can be valuable. It's a notoriously blunt tool, though. As one person put it, "if you want to coordinate more than 5,000 people, your message can be about 5 words long." And the public will act anyway, in some direction. So if there's something you want the public to do, it can be worth organizing and working on communication strategies.

My political platform is, if you boil it down far enough, about 3 words long: "Don't build SkyNet." (As early as the late 90s, I joked about having a personal 11th commandment: "Thou shalt not build SkyNet." One of my career options at that point was to potentially work on early semi-autonomous robotic weapon platform prototypes, so this was actually relevant moral advic...

Yeah, it's not like the point of outreach is to mobilise citizen science on alignment (though that may happen). It's because in a democracy the public is an important force. You can pick the option of focusing on converting a few powerful people and hoping they can get shit done via non-political avenues, but that hasn't worked spectacularly so far either: such people are still subject to classic race-to-the-bottom dynamics, and then you get cases like Altman and Musk, who all in all may have ended up net negative for the AI safety cause.

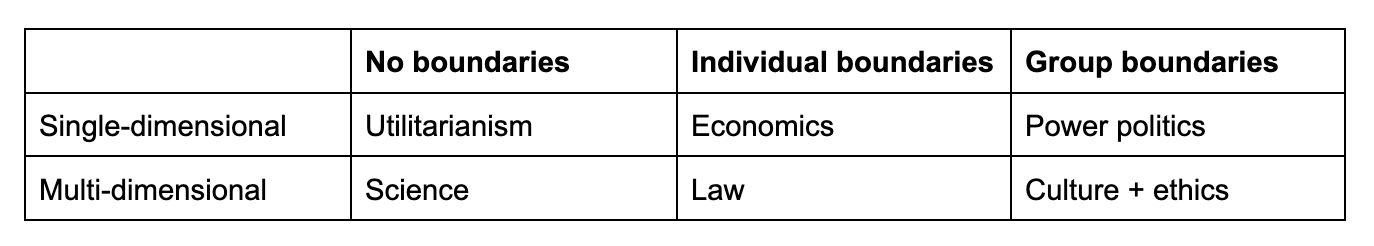

Some opinions about AI and epistemology:

- One reason that many rationalists have such strong views about AI is that they are wrong about epistemology. Specifically, Bayesian rationalism is a bad way to think about complex issues.

- A better approach is meta-rationality. To summarize one guiding principle of (my version of) meta-rationality in a single sentence: if something doesn't make sense in the context of group rationality, it probably doesn't make sense in the context of individual rationality either.

- For example: there's no privileged way to combine many people's opinions into a single credence. You can average them, but that loses a lot of information. Or you can get them to bet on a prediction market, but that depends a lot on the details of the individuals' betting strategies. The group might settle on a number to help with planning and communication, but it's only a lossy summary of many different beliefs and models. Similarly, we should think of individuals' credences as lossy summaries of different opinions from different underlying models that they have.

- How does this apply to AI? Suppose we each think of ourselves as containing many different subagents that focus on u
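To make the point about credence aggregation concrete, here is a minimal Python sketch comparing two familiar pooling rules: the arithmetic mean of probabilities and the geometric mean of odds. The three credences are made-up numbers, and these two rules are only common examples, not the full space of options. The same inputs give noticeably different group credences, and the linear pool returns the same number for a very different (unanimous) set of opinions, which is one way of seeing the information loss.

```python
import math

def linear_pool(probs):
    """Arithmetic mean of the individual probabilities."""
    return sum(probs) / len(probs)

def geometric_odds_pool(probs):
    """Geometric mean of the individual odds, converted back to a probability."""
    log_odds = [math.log(p / (1 - p)) for p in probs]
    mean_log_odds = sum(log_odds) / len(log_odds)
    return 1 / (1 + math.exp(-mean_log_odds))

# Hypothetical credences from three group members.
credences = [0.01, 0.5, 0.9]
print(round(linear_pool(credences), 3))
print(round(geometric_odds_pool(credences), 3))

# A unanimous group gets the same linear-pool summary as the split group above.
print(round(linear_pool([0.47, 0.47, 0.47]), 3))
```

Neither rule is privileged: the geometric-odds pool gives extreme individual credences much more pull than the linear pool does.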

That will push P(doom) lower because most frames from most disciplines, and most styles of reasoning, don't predict doom.

I don't really buy this statement. Most frames, from most disciplines, and most styles of reasoning, do not make clear predictions about what will happen to humanity in the long-run future. A very few do, but the vast majority are silent on this issue. Silence is not anything like "50%".

Most frames, from most disciplines, and most styles of reasoning, don't predict sparks when you put metal in a microwave. This doesn't mean I don't know what happens when you put metal in a microwave. You need to at the very least limit yourself to applicable frames, and there are very few applicable frames for predicting humanity's long-term future.

- I've been thinking lately that human group rationality seems like such a mess. Like, how can humanity navigate a once-in-a-lightcone opportunity like the AI transition without doing something very suboptimal (i.e., losing most of the potential value), when the vast majority of humans (and even the elites) can't understand (or can't be convinced to pay attention to) many important considerations? This big picture seems intuitively very bad and I don't know any theory of group rationality that says this is actually fine.

- I guess my 1 is mostly about descriptive group rationality, and your 2 may be talking more about normative group rationality. However I'm also not aware of any good normative theories about group rationality. I started reading your meta-rationality sequence, but it ended after just two posts without going into details.

- The only specific thing you mention here is "advance predictions" but for example, moral philosophy deals with "ought" questions and can't provide advance predictions. Can you say more about how you think group rationality should work, especially when advance predictions aren't possible?

- From your group rationality perspective, why is it good that rationalists individually have better views about AI? Why shouldn't each person just say what they think from their own preferred frame, and then let humanity integrate that into some kind of aggregate view or outcome, using group rationality?

How can the mistakes rationalists are making be expressed in the language of Bayesian rationalism? Priors, evidence, and posteriors are fundamental to how probability works.

The mistakes can (somewhat) be expressed in the language of Bayesian rationalism by doing two things:

- Talking about partial hypotheses rather than full hypotheses. You can't have a prior over partial hypotheses, because several of them can be true at once (though you can still assign them credences and update those credences according to evidence).

- Talking about models with degrees of truth rather than just hypotheses with degrees of likelihood. E.g. when using a binary conception of truth, general relativity is definitely false because it's inconsistent with quantum phenomena. Nevertheless, we want to say that it's very close to the truth. In general this is more of an ML approach to epistemology (we want a set of models with low combined loss on the ground truth).
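One way to see why credences over partial hypotheses don't form a prior: in the hypothetical Python sketch below, each partial hypothesis is modeled as a set of underlying "worlds". This is an assumption made purely for illustration, since the point above is partly that we often lack such full hypotheses. Because the sets overlap, the credences sum to more than 1, yet each one can still be updated on evidence.

```python
from fractions import Fraction

# Hypothetical full hypotheses ("worlds"): mutually exclusive and exhaustive,
# so a prior over them must sum to 1.
prior = {
    "w1": Fraction(1, 4),
    "w2": Fraction(1, 4),
    "w3": Fraction(1, 4),
    "w4": Fraction(1, 4),
}

# Hypothetical partial hypotheses: each constrains only part of the world,
# modeled here as a set of worlds. They overlap, so several can be true at once.
partial = {
    "A": {"w1", "w2"},
    "B": {"w2", "w3"},
    "C": {"w1", "w3", "w4"},
}

def credence(worlds, dist):
    """Credence in a partial hypothesis = total probability of its worlds."""
    return sum(dist[w] for w in worlds)

def update(dist, likelihood):
    """Bayesian update of the distribution over full hypotheses."""
    unnormalized = {w: p * likelihood[w] for w, p in dist.items()}
    z = sum(unnormalized.values())
    return {w: p / z for w, p in unnormalized.items()}

credences = {h: credence(ws, prior) for h, ws in partial.items()}
print(credences, sum(credences.values()))  # the credences do not sum to 1

# Hypothetical evidence that is twice as likely in w2 as in any other world.
posterior = update(prior, {"w1": 1, "w2": 2, "w3": 1, "w4": 1})
print({h: credence(ws, posterior) for h, ws in partial.items()})
```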

Suppose we think of ourselves as having many different subagents that focus on understanding the world in different ways - e.g. studying different disciplines, using different styles of reasoning, etc. The subagent that thinks about AI from first principles might come to a very strong opinion. But this doesn't mean that the other subagents should fully defer to it (just as having one very confident expert in a room of humans shouldn't cause all the other humans to elect them as the dictator). E.g. maybe there's an economics subagent who will remain skeptical unless the AI arguments can be formulated in ways that are consistent with their knowledge of economics, or the AI subagent can provide evidence that is legible even to those other subagents (e.g. advance predictions).

Do "subagents" in this paragraph refer to different people, or different reasoning modes / perspectives within a single person? (I think it's the latter, since otherwise they would just be "agents" rather than subagents.)

Either way, I think this is a neat way of modeling disagreement and reasoning processes, but for me it leads to a different conclusion on the object-level question of AI doom.

A big part of why I f...

I'd love to read an elaboration of your perspective on this, with concrete examples, which avoids focusing on the usual things you disagree about (pivotal acts vs. pivotal processes, whether the social facets of the game are important for us to track, etc.) and instead focuses mainly on your thoughts on epistemology and rationality and how they deviate from what you consider the LW norm.

A response to someone asking about my criticisms of EA (crossposted from Twitter):

EA started off with global health and ended up pivoting hard to AI safety, AI governance, etc. You can think of this as “we started with one cause area and we found another using the same core assumptions” but another way to think about it is “the worldview which generated ‘work on global health’ was wrong about some crucial things”, and the ideology hasn’t been adequately refactored to take those things out.

Some of them include:

- Focusing on helping the worst-off instead of empowering the best

- Being very scared of political controversy (e.g. not being willing to discuss the real causes of African poverty)

- Not deeply believing in technological progress (e.g. prioritizing bed nets over vaccines). Related to point 1.

- Trusting the international order to mostly have its shit together (related to point 2)

You can partially learn these lessons from within the EA framework but it’s very unnatural and you won’t learn them well enough. E.g. now EAs are pivoting to politics but again they’re flinching away from anything remotely controversial and so are basically just propping up existing elites.

On a deeper ideologic...

It’s far easier to soothe your ego by finding a rationalization for having caused good consequences, than to self-deceive about whether you’re a paragon of courage or honesty.

It seems like the opposite to me, that it is extremely easy to self-deceive about whether you're a virtuous person. In fact this seems like a quintessential example of self-deception that one encounters fairly commonly.

It is also quite easy to self-deceive about the consequences of one's actions, I agree, but in that case there is at least some empirical fact about the world which could drag you back from your self-deception if you were open to it. In contrast, it seems to me like if you self-deceive hard enough you can always view yourself as a paragon of virtue essentially regardless of how virtuous you actually are, and you can always come up with rationalizations for your own actions. If you care about consequences, your performance on those consequences can be contradicted empirically, but there isn't a similar way of contradicting your virtue if you are willing to twist your impressions of what is virtuous enough.

Focusing on helping the worst-off instead of empowering the best

I feel like this is getting at some directionally correct stuff, but it feels off.

EA was the union of a few disparate groups, roughly encapsulated by:

- Peter Singer / Giving What We Can folk

- Early GiveWell

- Early LessWrong

There are other specific subgroups. But my guess is that early GiveWell was the most load-bearing in there ending up being an "EA" identity, in that there were concrete recommendations of what to do with the money that stood up to some scrutiny. Otherwise it'd have just been more "A", or "weird transhumanists with weird goals."

GiveWell started out with a wider variety of cause areas, including education in America. It just turned out that it seemed way more obviously cost-effective to do specific global health interventions than to try to fix education in America. (I realize education in America isn't particularly "empowering the best", but the flow towards "helping the worst off" seems to me like it wasn't actually the initial focus.)

I agree some memeplex accreted around that, which had some of the properties you describe.

But meanwhile:

EA started off with global health and ended up pivoting hard to AI safety, AI governa...

It seems off to say "started off in global health, and pivoted to AI", when all the AI stuff was there from the beginning at the very first pre-EA-Global events, and just eventually became clear that it was real, and important. The worldview that generated AI was not (exactly) the same one generating global health, they were just two clusters of worldview that were in conversation with each other from the beginning.

I agree with all the facts cited here, but I think it still understates the way that there was an intentional pivot.

The EA brand to the broader world emphasized earning to give and effective global poverty charities in particular. That's what most people who had heard of it associated with "effective altruism". And most of the people who got involved before 2019 got involved with an EA bearing that brand.

I guess that in 2015, the average EAG-goer was mostly interested in GiveWell-style effective charities, and gave a bit of deference to the more speculative x-risk stuff (because smart EAs seem to take it seriously), but mostly didn't focus on it very much.

And while it's true that AI risk was part of the discussion from the very beginning, there were explicit top-down pushes from the leadership to prioritize it and to give it more credibility.

(And more than that, I'm told that at least some of the leadership had the explicit strategy of building credibility and reputation with GiveWell-like stuff, and boosting the reputation of AI risk by association.)

Some of them include:

imo a larger one is something like not rooting the foundations in "build your own models of the world so that you contain within you a stack trace of why you're doing what you're doing" + "be willing to be challenged and update major beliefs based on deep-in-the-weeds technical arguments, and do so from a highly truth-seeking stance which knows what it feels like to actually understand something, not just have an opinion".

Lack of this is fineish in global health, but in AI Safety generates a crop of people with only surface deferral flavor understanding of the issues, which is insufficient to orient in a much less straightforward technical domain.

hmm, I like the diagnosis of issues with the EA worldview, but I don't really buy that they're downstream of issues with consequentialism and utilitarianism itself.

I would say it's more like: Effective Altruism has historically embraced a certain flavor of utilitarianism and naive consequentialism that attempts to be compatible with pre-existing vibes and (to some degree) mainstream politics. Concretely, EAs are (to their credit) willing to bite some strange bullets and then act on their conclusions, and are also generally pro-market compared to mainstream Democratic politics. But they're still very, very Blue Tribe-coded culture-wise, and this causes them to deviate from actually-correct versions of consequentialism and utilitarianism in predictable directions.

Or: in my view, "Utilitarianism and non-naive consequentialism with guardrails" is pretty close to correct philosophy for humans; the issue is that the EA worldview systematically selects for the wrong guardrails[1]. But better ones are available; for example Eliezer wrote this nearly 20 years ago: Ends Don't Justify Means (Among Humans)

I'd be interested in hearing what kind of criticism you have of the posts in that sequenc...

In a post on Solomonoff Induction (and also in this wiki entry), Yudkowsky describes Shannon’s minimax algorithm for searching the entire chess game-tree as an example of conceptual progress. Previously, Edgar Allan Poe had argued that it was impossible in principle for a machine to play chess well. With Shannon’s algorithm, it became possible in principle, just computationally infeasible.
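For readers who haven't seen it, here is a minimal Python sketch of exhaustive minimax on a tiny hypothetical game tree. It is not Shannon's original formulation, which also discussed depth-limited search with an evaluation function. Leaf scores are from the maximizing player's perspective. Note that the min step assumes the opponent evaluates positions with exactly our leaf scores, which is the assumption questioned in the next paragraph.

```python
from typing import Union

# A game tree is either a leaf score (from the maximizing player's perspective)
# or a list of child subtrees, one per legal move.
Tree = Union[int, list]

def minimax(node: Tree, maximizing: bool) -> int:
    """Exhaustive minimax: back up leaf scores, alternating max and min."""
    if isinstance(node, int):
        return node
    child_values = [minimax(child, not maximizing) for child in node]
    return max(child_values) if maximizing else min(child_values)

# Hypothetical two-ply tree: we choose a branch, then the opponent chooses a leaf.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(minimax(tree, maximizing=True))  # 3
```

For chess the same recursion applies in principle, but the full tree is astronomically large, which is why the algorithm is "possible in principle, just computationally infeasible".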

However, even “principled” algorithms like minimax search don’t take into account the possibility that your opponent knows things you don’t know (as I’ve previously discussed here). And so there are many elements of good chess strategy for bounded agents that they can’t account for:

- When your opponent plays a move that seems surprisingly bad, update that they’re probably seeing some tactics that you’re missing.

- When your opponent plays a move that seems surprisingly good, update that they’re probably more skilled than you thought.

- Try to play tricky (“sharp”) lines when you’re behind, in the hope that your opponent makes a mistake.

- Try to play solidly when you’re ahead, even if it narrows your lead.

- Identify your opponent’s playing style, and try to exploit it.

Some of these considerations have been dis...

(Written quickly and not very carefully.)

I think it's worth stating publicly that I have a significant disagreement with a number of recent presentations of AI risk, in particular Ajeya's "Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover", and Cohen et al.'s "Advanced artificial agents intervene in the provision of reward". They focus on policies learning the goal of getting high reward. But I have two problems with this:

- I expect "reward" to be a hard goal to learn, because it's a pretty abstract concept and not closely related to the direct observations that policies are going to receive. If you keep training policies, maybe they'd converge to it eventually, but my guess is that this would take long enough that we'd already have superhuman AIs which would either have killed us or solved alignment for us (or at least started using gradient hacking strategies which undermine the "convergence" argument). Analogously, humans don't care very much at all about the specific connections between our reward centers and the rest of our brains - insofar as we do want to influence them it's because we care about much more directly-observable p

I'm not very convinced by this comment as an objection to "50% AI grabs power to get reward." (I find it more plausible as an objection to "AI will definitely grab power to get reward.")

I expect "reward" to be a hard goal to learn, because it's a pretty abstract concept and not closely related to the direct observations that policies are going to receive

"Reward" is not a very natural concept

This seems to be most of your position but I'm skeptical (and it's kind of just asserted without argument):

- The data used in training is literally the only thing that AI systems observe, and prima facie reward just seems like another kind of data that plays a similarly central role. Maybe your "unnaturalness" abstraction can make finer-grained distinctions than that, but I don't think I buy it.

- If people train their AI with RLDT then the AI is literally being trained to predict reward! I don't see how this is remote, and I'm not clear if your position is that e.g. the value function will be bad at predicting reward because it is an "unnatural" target for supervised learning.

- I don't understand the analogy with humans. It sounds like you are saying "an AI system selected based on the reward of its acti
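As a concrete reference point for the claim that a value function is literally trained to predict reward, here is a minimal tabular TD(0) sketch in Python on a hypothetical two-state chain. It is a generic textbook value learner, not a model of any specific training setup discussed in this thread; the state layout, step size, and discount factor are all assumptions. The learned values converge to the discounted future reward from each state.

```python
def td0_chain(episodes: int, alpha: float = 0.1, gamma: float = 0.9) -> list:
    """Tabular TD(0) on a hypothetical deterministic chain:
    state 0 -> state 1 (reward 0), then state 1 -> terminal (reward 1)."""
    V = [0.0, 0.0]
    for _ in range(episodes):
        # Transition 0 -> 1 with reward 0: move V[0] toward the bootstrapped target.
        V[0] += alpha * (0.0 + gamma * V[1] - V[0])
        # Transition 1 -> terminal with reward 1 (the terminal state has value 0).
        V[1] += alpha * (1.0 - V[1])
    return V

values = td0_chain(episodes=2000)
print([round(v, 3) for v in values])  # close to [0.9, 1.0]
```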

Putting my money where my mouth is: I just uploaded a (significantly revised) version of my Alignment Problem position paper, where I attempt to describe the AGI alignment problem as rigorously as possible. The current version only has "policy learns to care about reward directly" as a footnote; I can imagine updating it based on the outcome of this discussion though.

The concept of "schemers" seems to be gradually becoming increasingly load-bearing in the AI safety community. However, I don't think it's ever been particularly well-defined, and I suspect that taking this concept for granted is inhibiting our ability to think clearly about what's actually going on inside AIs (in a similar way to e.g. how the badly-defined concept of alignment faking obscured the interesting empirical results from the alignment faking paper).

In my mind, the spectrum from "almost entirely honest, but occasionally flinching away from aspects of your motivations you're uncomfortable with" to "regularly explicitly thinking about how you're going to fool humans in order to take over the world" is a pretty continuous one. Yet generally people treat "schemer" as a fairly binary classification.

To be clear, I'm not confident that even "a spectrum of scheminess" is a good way to think about the concept. There are likely multiple important dimensions that could be disentangled; and eventually I'd like to discover properly scientific theories of concepts like honesty, deception and perhaps even "scheming". Our current lack of such theories shouldn't be a barrier to using those terms at all, but it suggests they should be used with a level of caution that I rarely see.

I largely agree with the substance of this comment. Lots of risk comes from AIs who, to varying extents, didn't think of themselves as deceptively aligned through most of training, but then ultimately decide to take substantial material action intended to gain long-term power over the developers (I call these "behavioral schemers"). This might happen via reflection and memetic spread throughout the deployment or because of more subtle effects of the distribution shift to situations where there's an opportunity to grab power.

And I agree that people are often sloppy in their thinking about exactly how these AIs will be motivated (e.g., often too quickly concluding that they'll be trying to guard the same goal across contexts).

(Though, in case this was in question, I think this doesn't undermine the premise of AI control research, which is essentially making a worst-case assumption about the AI's motivations, so it's robust to other kinds of dangerously-motivated AIs.)

When you think of goals as reward/utility functions, the distinction between positive and negative motivations (e.g. as laid out in this sequence) isn’t very meaningful, since it all depends on how you normalize them.

But when you think of goals as world-models (as in predictive processing/active inference) then it’s a very sharp distinction: your world-model-goals can either be of things you should move towards, or things you should move away from.

This updates me towards thinking that the positive/negative motivation distinction is more meaningful than I thought.
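The normalization point can be illustrated with a small Python sketch: applying a positive affine transformation to a utility function (here, scaling by 3 and adding 10, both arbitrary choices) turns every outcome's utility positive, yet the expected-utility choice between the hypothetical lotteries below is unchanged. So in the reward/utility-function framing, whether an outcome counts as "negative" carries no behavioral information.

```python
def expected_utility(lottery, utility):
    """lottery: list of (probability, outcome) pairs."""
    return sum(p * utility(o) for p, o in lottery)

def best_choice(lotteries, utility):
    """Name of the lottery with the highest expected utility."""
    return max(lotteries, key=lambda name: expected_utility(lotteries[name], utility))

# Hypothetical outcome utilities: one negative, one zero, one positive.
base = {"bad": -1.0, "ok": 0.0, "good": 2.0}
lotteries = {
    "safe": [(1.0, "ok")],
    "risky": [(0.5, "good"), (0.5, "bad")],
}

def original_utility(outcome):
    return base[outcome]

def shifted_utility(outcome):
    # Positive affine transform: now every outcome has positive utility.
    return 3.0 * base[outcome] + 10.0

print(best_choice(lotteries, original_utility))  # risky
print(best_choice(lotteries, shifted_utility))   # risky
```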

In run-and-tumble motion, “things are going well” implies “keep going”, whereas “things are going badly” implies “choose a new direction at random”. Very different! And I suggest in §1.3 here that there’s an unbroken line of descent from the run-and-tumble signal in our worm-like common ancestor with C. elegans, to the “valence” signal that makes things seem good or bad in our human minds. (Suggestively, both run-and-tumble in C. elegans, and the human valence, are dopamine signals!)

So if some idea pops into your head, “maybe I’ll stand up”, and it seems appealing, then you immediately stand up (the human “run”); if it seems unappealing on net, then that thought goes away and you start thinking about something else instead, semi-randomly (the human “tumble”).

So positive and negative are deeply different. Of course, we should still call this an RL algorithm. It’s just that it’s an RL algorithm that involves a (possibly time- and situation-dependent) heuristic estimator of the expected value of a new random plan (a.k.a. the expected reward if you randomly tumble). If you’re way above that expected value, then keep doing whatever you’re doing; if you’re way below the threshold, re-rol...
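A toy version of the run-and-tumble rule, as a minimal Python sketch: a hypothetical 1D bacterium keeps moving in its current direction while the attractant concentration improves ("run"), and picks a new random direction whenever it gets worse ("tumble"). The concentration field, starting point, and step counts are all assumptions made for illustration. Despite never computing a gradient, the walker ends up hovering near the peak.

```python
import random

def concentration(x: int) -> float:
    """Hypothetical attractant field peaked at x = 0."""
    return -abs(x)

def run_and_tumble(start: int, steps: int, rng: random.Random) -> int:
    """1D toy chemotaxis: keep going while things improve,
    re-roll the direction at random when they get worse."""
    x = start
    direction = rng.choice((-1, 1))
    previous = concentration(x)
    for _ in range(steps):
        x += direction
        current = concentration(x)
        if current < previous:  # things got worse: tumble
            direction = rng.choice((-1, 1))
        previous = current
    return x

rng = random.Random(0)
finals = [run_and_tumble(start=20, steps=200, rng=rng) for _ in range(500)]
print(sum(abs(x) for x in finals) / len(finals))  # mean distance from the peak
```

The asymmetry is the point: a good signal leaves the current plan in place, while a bad signal triggers a random re-draw rather than a move in any particular direction.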

i don't think this is unique to world models. you can also think of rewards as things you move towards or away from. this is compatible with translation/scaling-invariance because if you move towards everything but move towards X even more, then in the long run you will do more of X on net, because you only have so much probability mass to go around.

i have an alternative hypothesis for why positive and negative motivation feel distinct in humans.

although the expectation of the reward gradient doesn't change if you translate the reward, it hugely affects the variance of the gradient.[1] in other words, if you always move towards everything, you will still eventually learn the right thing, but it will take a lot longer.

my hypothesis is that humans have some hard-coded baseline for variance reduction. in the ancestral environment, the expectation of perceived reward was centered around where zero feels to be. our minds do try to adjust to changes in distribution (e.g. hedonic adaptation), but it's not perfect, and so in the current world, our baseline may be suboptimal.
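The variance claim can be checked exactly on a small example. The Python sketch below assumes a hypothetical two-action bandit with a softmax policy and computes the exact mean and variance of the REINFORCE (score-function) gradient estimator after shifting every reward by a constant. The mean is identical for every shift, while the variance grows with the shift and drops to zero here when the mean reward is subtracted as a baseline.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_gradient_stats(rewards, logits, offset):
    """Exact mean and total variance of the estimator
    g = (r(a) + offset) * grad_logits log pi(a), with a ~ pi = softmax(logits)."""
    pi = softmax(logits)
    n = len(pi)
    outcomes = []  # (probability of action a, gradient sample if a is drawn)
    for a in range(n):
        # For a softmax policy, d log pi(a) / d logit_i = 1[i == a] - pi[i].
        score = [(1.0 if i == a else 0.0) - pi[i] for i in range(n)]
        outcomes.append((pi[a], [(rewards[a] + offset) * s for s in score]))
    mean = [sum(p * g[i] for p, g in outcomes) for i in range(n)]
    variance = sum(p * sum((g[i] - mean[i]) ** 2 for i in range(n)) for p, g in outcomes)
    return mean, variance

rewards, logits = [1.0, 0.0], [0.0, 0.0]  # hypothetical two-action bandit
for offset in (0.0, 10.0, -0.5):  # -0.5 subtracts the mean reward (a baseline)
    print(offset, reinforce_gradient_stats(rewards, logits, offset))
```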

[1] Quick proof sketch (this is a very standard result in RL and is the motivation for advantage estimation, b

This reminds me of a conversation I had recently about whether the concept of "evil" is useful. I was arguing that I found "evil"/"corruption" helpful as a handle for a more model-free "move away from this kind of thing even if you can't predict how exactly it would be bad" relationship to a thing, which I found hard to express in more consequentialist frames.

In (non-monotonic) infra-Bayesian physicalism, there is a vaguely similar asymmetry even though it's formalized via a loss function. Roughly speaking, the loss function expresses preferences over "which computations are running". This means that you can have a "positive" preference for a particular computation to run or a "negative" preference for a particular computation not to run[1].

[1] There are also more complicated possibilities, such as "if P runs then I want Q to run but if P doesn't run then I'd rather that Q also doesn't run" or even preferences that are only expressible in terms of entanglement between computations.

Five clusters of alignment researchers

Very broadly speaking, alignment researchers seem to fall into five different clusters when it comes to thinking about AI risk:

- MIRI cluster. Think that P(doom) is very high, based on intuitions about instrumental convergence, deceptive alignment, etc. Does work that's very different from mainstream ML. Central members: Eliezer Yudkowsky, Nate Soares.

- Structural risk cluster. Think that doom is more likely than not, but not for the same reasons as the MIRI cluster. Instead, this cluster focuses on systemic risks, multi-agent alignment, selective forces outside gradient descent, etc. Often does work that's fairly continuous with mainstream ML, but is willing to be unusually speculative by the standards of the field. Central members: Dan Hendrycks, David Krueger, Andrew Critch.

- Constellation cluster. More optimistic than either of the previous two clusters. Focuses more on risk from power-seeking AI than the structural risk cluster does, but does work that is more speculative or conceptually oriented than mainstream ML. Central members: Paul Christiano, Buck Shlegeris, Holden Karnofsky. (Named after the Constellation coworking space.)

- Prosaic cluster. Focuses on empi

A toy model of ethics which I've found helpful lately:

Consider society as a group of reinforcement learners, each getting rewards from interacting with the environment and each other.* We can then define two moral motivations:

- Altruism: trying to increase the rewards received by others.

- Justice**: trying to ensure that people get rewarded more when they act in ways that are more altruistic and just, and less when they don't (note that this is a partially recursive definition).

Importantly, if you have one faction who's primarily optimizing for altruism, and another that's primarily optimizing for justice, by default they'll undermine each other's goals:

- The easiest way to promote justice is to focus on punishing people who behave badly (since that's easier than rewarding people who behave well). This means that the justice faction will (as a first-order effect) decrease the rewards received by others.

- The easiest way to promote altruism is to focus on helping the worst-off. But insofar as the world is just, this will often be people who are badly-off as a consequence of their own misbehavior. And so this kind of altruism can easily undermine justice.

One way of thinking about the last fe...

But in a just world this will tend to be the people who are badly-off as a consequence of their own misbehavior.

The real world is not just, though. Yes, some people who are badly off are there as a consequence of their own actions: e.g. this is quite likely the case if they're in jail. But, like, the most common way to be badly off in a way that makes you a target for the assistance of effective altruists is to be born into a poor country without a good public health system. Non-effective altruists might try to help prisoners or do other things that oppose the justice people. But those choices seem more random: some of them will also just go and fund museums.

Of course, I agree with the overall point that it's very important to consider what incentives you will create when you try to help people.

And so more thoughtful utilitarians will defend justice as an instrumental moral good, albeit not as a terminal moral good. Unfortunately, it seems very hard to actually hold this position without in practice deprioritizing justice (e.g. it's rare to see effective altruists reasoning themselves into trying to make society more just).

There's an extensive literature in economics on optimal punishment. Does that count, as far as utilitarians working on justice as an instrumental good?

For example, before trying to plan for the future, you need to have a sense of personal identity whereby your future self will feel a sense of continuity with and loyalty to your plans.

I think we just need our terminal values to not change too much over time, so if I ever feel like I need to rethink my plans, I'll come up with a similar or even better plan. Is your thinking that this is impossible or infeasible for most humans, due to things like "power corrupts"? If so, I think consequentialism is still good as it lets us manage or mitigate such value drift, e.g., if I can foresee power (or other circumstances) corrupting my values, I can take precautions like avoiding getting into those situation...

Crossposted from Twitter:

This year I’ve been thinking a lot about how the western world got so dysfunctional. Here’s my rough, best-guess story:

1. WW2 gave rise to a strong taboo against ethnonationalism. While perhaps at first this taboo was valuable, over time it also contaminated discussions of race differences, nationalism, and even IQ itself, to the point where even truths that seemed totally obvious to WW2-era people became taboo. There's no mechanism for subsequent generations to create common knowledge that certain facts are true but usefully taboo: they simply act as if these facts are false, which leads to arbitrarily bad policies (e.g. killing meritocratic hiring processes like IQ tests).

2. However, these taboos would gradually have lost power if the west (and the US in particular) had maintained impartial rule of law and constitutional freedoms. Instead, politicization of the bureaucracy and judiciary allowed them to spread. This was enabled by the “managerial revolution” under which govt bureaucracy massively expanded in scope and powers. Partly this was a justifiable response to the increasing complexity of the world (and various kinds of incompetence and nepotism...

This class gains status by signaling commitment to luxury beliefs. Since more absurd beliefs are more costly-to-fake signals, the resulting ideology is actively perverse (i.e. supports whatever is least aligned with their stated core values, like Hamas).

Without commenting on your broader point, I think you believe elites support Hamas because the conservative Twitter feed is presenting you/your circle tailored ragebait, not because elites invert their own utility functions.

More generally, leftists profess many values which are upheld the most by western civilization (e.g. support for sexual freedom, women's rights, anti-racism, etc). But then in conflicts they often side specifically against western civilization. This seems like a straightforward example of pessimization.

Not at all. The trend is that in any given context, American leftists tend to support the 'weaker' group against the stronger group, regardless of the merits of the individual cases. They have a world model that says that most social problems come from "big" people hurting "little" people, and believe the focus of their politics should be remedying this. In the case of Israel-Palestine, Israel and the United States are much more militarily and economically powerful than Gaza, so ceteris paribus[1] they side with Gaza, just as they side with women, ethnic minorities, the poor, etc. You may disagree with this behavior, but it's fairly consistent.

By contrast, the argument that Israel is a bastion of western values and therefore leftists should support its war against a smaller neighbor is kind of abstract. The immediate outcome of Israel winning the war is just that Israe...

This characterizes leftists sufficiently dishonestly that I think you've gotten mindkilled by politics. As people keep removing my (entirely accurate and if anything understated) soldier-mindset reacts, I will strong-downvote instead :)

Without commenting on the specifics, I agree with a lot of the gestalt here as a description of how things evolved historically, but I think that's not really the right lens to understand the problem.

My current best one-sentence understanding: the richer humans get, the more social reality can diverge from physical reality, and therefore the more resources can be captured by parasitic egregores/memes/institutions/ideologies/interest-groups/etc. Physical reality provides constraints and feedback which limit the propagation of such parasites, but wealth makes the constraints less binding and therefore makes the feedback weaker.

The main reason I disagree with both this comment and the OP is that you both have the underlying assumption that we are in a nadir (local nadir?) of connectedness-with-reality, whereas from my read of history I see no evidence of this, and indeed plenty of evidence against.

People used to be confused about all sorts of things, including, but not limited to, the supernatural, the causes of disease, causality itself, the capabilities of women, whether children can have conscious experiences, and so forth.

I think we've gotten more reasonable about almost everything, with a few minor exceptions that people seem to like highlighting (I assume in part because they're so rare).

The past is a foreign place, and mostly not a pleasant one.

Can you explain more about your affinity for virtue ethics? E.g., was there a golden age in history, that you read about, where a group of people ran on virtue ethics and it worked out really well? I'm trying to understand why you seem to like it a lot more than I do.

Re government debt, I think that is actually driven more by increasing demand for a "risk-free" asset, with the supply going up more or less passively (what politician is going to refuse to increase debt and spending, as long as people are willing to keep buying it at a low interest rate). And from this perspective it's not really a problem except for everyone getting used to the higher spending when some of the processes increasing the demand for government debt might only be temporary.

AI written explanation of how financialization causes increased demand for government debt

Financialization isn't a vague blob; it's a set of specific, concrete processes, each of which acts like a powerful vacuum cleaner sucking up government debt.

Let's trace four of the most important mechanisms in detail.

1. The Derivatives Market: The Collateral Multiplier

Derivatives (options, futures, swaps) are essentially financial side-bets on the movem

...if the west (and the US in particular) had maintained impartial rule of law and constitutional freedoms.

The US did not have impartial rule of law in the late 1800s and early 1900s. Notably, black Americans in the south were regularly impressed into forced labor, often for the rest of their lives, on the basis of flimsy or even non-existent legal pretext.

(A representative but concocted example: the local sheriff arrests a black man who's walking through town on charges of "vagrancy". The man is found guilty and sentenced to hard labor. The sheriff sells the "contract" for hard labor to a local industrialist who owns a mine. (The sheriff and the industrialist are buddies, and have done versions of this deal many times before.) The man is set to work in the mine for the period of his sentence. When he's near the end of his sentence, he's accused of some minor infraction as a pretext to add more years to his sentence (if anyone bothers to keep track of when his sentence has been served at all).)

What makes you think that impartial rule of law decayed since WWII instead of generally (though not evenly) improving?

That is, while it was bad for the people who didn't get rule of law, they were a separate enough category that this mostly didn't "leak into" undermining the legal mechanisms that helped their societies become productive and functional in the first place.

I'm speaking speculatively here, but I don't know that it didn't leak out and undermine the mechanism that supported productive and functional societies. The sophisticated SJW in me suggests that this is part of what caused the eventual (though not yet complete) erosion of those mechanisms.

It seems like if you have "rule of law" that isn't evenly distributed, actually what you have is collusion by one class of people to maintain a set of privileges at the expense of another class of people, where one of the privileges is a sand-boxed set of norms that govern dealings within the privileged class, but with the pretense that the norms are universal.

This kind of pretense seems like it could be corrosive: people can see that the norms that society proclaims as universal actually aren't. This reinforces a sense that the norms aren't real at all, or at least a justified sense that the ideals that underlie those norms are mostly rational...

This makes no mention of the repeal of the Fairness Doctrine or the shift in the financial model of major newspapers. The 1987 abolition of the Fairness Doctrine led very directly to Rush Limbaugh gaining a national political audience.

The fairness doctrine of the United States Federal Communications Commission (FCC), introduced in 1949, was a policy that required the holders of broadcast licenses both to present controversial issues of public importance and to do so in a manner that fairly reflected differing viewpoints.[1] In 1987, the FCC abolished the fairness doctrine,[2] prompting some to urge its reintroduction through either Commission policy or congressional legislation.[3] The FCC removed the rule that implemented the policy from the Federal Register in August 2011.[4]

Rush Limbaugh used to be a regular music DJ in the 1970s. His political talk show was distributed nationally in 1988, soon after the cessation of Fairness Doctrine support by the Reagan administration. Carl McIntire was the Rush Limbaugh (maybe a little more Glenn Beck) of the 1960s and had his radio talk show shut down by the Fairness Doctrine after years of litigation. The legal challenges the Fairness ...

To be honest, this post approaches a level of disjointedness from reality-as-I-understand-it that I fear I cannot accurately respond to it in a way that would satisfy the author.[1] However, if I don't give some response, I suspect that it will join a growing cultural current within LessWrong which involves an intellectualised rationalisation of ethnonationalism, hygiene-oriented eugenics, oligarchy[2], and implicit or explicit support of political action and political violence for these ends. If this current becomes normalised (and it has, frankly, always been present in the rationalist sphere), it makes TESCREAL-style categorisations of the rationalist/EA/AI safety intellectual sphere basically correct. Therefore, I want to commit to voicing my objection to this line of argument where I see it. There are parts of me that feel strongly against doing this. There is no glory to be gained in political arguments online. However, there is the possibility of avoiding shame, which as a virtue ethicist I'm sure the author will understand.