I have preordered, and am looking forward to reading my copy when it arrives. Seems like a way to buy lightcone-control-in-expectation-points very cheaply.

I admit I'm worried about the cover design. It looks a bit... slap-dash; at first I thought the book was self-published. I'm not sure how much control you and Eliezer have over this, but I think improving it would go a long way toward convincing people to spread it & its ideas as mainstream, reasonable, inside-the-Overton-window.

+1 on the cover looking outright terrible. To make this feedback more specific and actionable:

- If you care about the book bestseller lists, why doesn't this book cover look like previous bestsellers? To get a sense of what those look like, here is an "interactive map of over 5,000 book covers" from the NYT "Best Selling" and "Also Selling" lists between 2008 and 2019.

- In particular, making all words the same font size seems very bad, and making title and author names the same size and color is a baffling choice.

- Why is the subtitle in the same font size as the title?

- And why are your author names so large, anyway? Is this book called "If Anyone Builds It, Everyone Dies", or is it called "Eliezer Yudkowsky & Nate Soares"?

- Plus someone with a 17-character name like "Eliezer Yudkowsky" simply can't have such a large author font. You're spending three lines of text on the author names!

- Plus I would understand making the author names so large if you had a humongous pre-existing readership (when you're Stephen King or J. K. Rowling, the title of your book is irrelevant). But even Yudkowsky doesn't have that, and Nate certainly doesn't. So why not make the author names smaller, and let the ti

The "lightcone-eating" effect on the website is quite cool. The immediate obvious idea is to have that as a background and write the title inside the black area.

If you wanted to be cute, you could even make the expansion vaguely skull-shaped; perhaps like so?

I imagine most disagreement comes from the first paragraph.

The problem with assuming that since the publisher is famous their design is necessarily good is that even huge companies make baffling design decisions all the time, and in this case one can see the design directly and judge that it's not great; the weak outside-view evidence that prestigious companies usually do good work doesn't shift that judgment very much.

Yes, my disagreement was mostly with the first paragraph, which read to me like "who are you going to believe, the expert or your own lying eyes". I'm not an expert, but I do have a sense of aesthetics, that sense of aesthetics says the cover looks bad, and many others agree. I don't care if the cover was designed by a professional; to shift my opinion as a layperson, I would need evidence that the cover is well-received by many more people than dislike it, plus A/B tests of alternative covers that show it can't be easily improved upon.

That said, I also disagreed somewhat with the fourth paragraph, because when it comes to AI Safety, MIRI really needs no introduction or promotion of their authors. They're well-known; the labs just ignore their claim that "if anyone builds it, everyone dies".

I used to do graphic design professionally, and I definitely agree the cover needs some work.

I put together a few quick concepts, just to explore some possible alternate directions they could take it:

https://i.imgur.com/zhnVELh.png

https://i.imgur.com/OqouN9V.png

https://i.imgur.com/Shyezh1.png

These aren't really finished quality either, but the authors should feel free to borrow and expand on any ideas they like if they decide to do a redesign.

It's important that the cover not make the book look like fiction, which I think these do. The difference in style is good to keep in mind.

Other MIRI staff report that the book helped them fit the whole argument in their head better, or that it made sharp some intuitions they had that were previously vague. So you might get something out of it even if you’ve been around a while.

Can confirm! I've followed this stuff for forever, but always felt at the edge of my technical depth when it came to alignment. It wasn’t until I read an early draft of this book a year ago that I felt like I could trace a continuous, solid line from “superintelligence grown by a blind process...” to “...develops weird internal drives we could not have anticipated”. Before, I was like, "We don't have justifiable confidence that we can make something that reflects our values, especially over the long haul," and now I'm like, "Oh, you can't get there from here. Clear as day."

As for why this spells disaster if anyone builds it, I didn't need any new lessons, but they are here, and they are chilling, even for someone who was already convinced we were in trouble.

Having played some small part in helping this book come together, I would like to attest to the sheer amount of iteration it has gone through over the last year. Nate and co. have been relen...

You convinced me to pre-order it. In particular, these lines:

> It wasn’t until I read an early draft of this book a year ago that I felt like I could trace a continuous, solid line from “superintelligence grown by a blind process...” to “...develops weird internal drives we could not have anticipated”. Before, I was like, "We don't have justifiable confidence that we can make something that reflects our values, especially over the long haul," and now I'm like, "Oh, you can't get there from here. Clear as day."

I read an advance copy of the book; I liked it a lot. I think it's worth reading even if you're well familiar with the overall argument.

I think there's often been a problem, in discussing something for ~20 years, that the material is all 'out there somewhere', but unless you've been reading through all of it, it's hard to have it in one spot. I think this book is good at presenting a unified story without getting so bogged down in handling objections that it stops reading smoothly or quickly. (Hopefully, the linked online discussions will manage to cover the remaining space in a more appropriately non-sequential fashion.)

In my experience, "normal" folks are often surprisingly open to these arguments, and I think the book is remarkably normal-person-friendly given its topic. I'd mainly recommend telling your friends what you actually think, and using practice to get better at it.

Context: One of the biggest bottlenecks on the world surviving, IMO, is the amount (and quality!) of society-wide discourse about ASI. As a consequence, I already thought one of the most useful things most people can do nowadays is to just raise the alarm with more people, and raise the bar on the quality of discourse about this topic. I'm treating the book as an important lever in that regard (and an important lever for other big bottlenecks, like informing the national security community in particular). Whether you have a large audience or just a network of friends you're talking to, this is how snowballs get started.

If you're just looking for text you can quote to get people interested, I've been using:

...As the AI industry scrambles to build increasingly capable and general AI, two researchers speak out about a disaster on the horizon.

In 2023, hundreds of AI scientists and leaders in the field, including the three mos

You could also try sending your friends an online AI risk explainer, e.g., MIRI's The Problem or Ian Hogarth's We Must Slow Down the Race to God-Like AI (requires Financial Times access) or Gabriel Alfour's Preventing Extinction from Superintelligence.

There's also AIsafety.info's shorter-form and long-form explainers.

One notable difficulty with talking to ordinary people about this stuff is that often, you lay out the basic case and people go "That's neat. Hey, how about that weather?" There's a missing mood, a sense that the person listening didn't grok the implications of what they're hearing. Now, of course, maybe they just don't believe you or think you're spouting nonsense. But in those cases, I'd expect more resistance to the claims, more objections or even claims like "that's crazy". Not bland acceptance.

> One notable difficulty with talking to ordinary people about this stuff is that often, you lay out the basic case and people go "That's neat. Hey, how about that weather?" There's a missing mood, a sense that the person listening didn't grok the implications of what they're hearing.

I kinda think that people are correct to do this, given the normal epistemic environment. My model is this: Everyone is pretty frequently bombarded with wild arguments and beliefs that have crazy implications. Like conspiracy theories, political claims, spiritual claims, get-rich-quick schemes, scientific discoveries, news headlines, mental health and wellness claims, alternative medicine, claims about which lifestyles are better. We don't often have the time (nor expertise or skill or sometimes intelligence) to evaluate them properly. So we usually keep track of a bunch of these beliefs and arguments, and talk about them, but usually require nearby social proof in order to attach the arguments/beliefs to actions and emotions. Rationalists (and the more culty religions and many activist groups, etc.) are extreme in how much they change their everyday lives based on their beliefs.

I think it's probably oka...

I preordered my copy.

Something about the tone of this announcement feels very wrong, though. You cite Rob Bensinger and other MIRI staff being impressed. But obviously, those people are highly selected for already agreeing with you! How much did you engage with skeptical and informed prereaders? (I'm imagining people in the x-risk-reduction social network who are knowledgeable about AI, acknowledge the obvious bare-bones case for extinction risk, but aren't sold on the literal stated-with-certainty headline claim, "If anyone builds it, everyone dies.")

If you haven't already done so, is there still time to solicit feedback from such people and revise the text? (Sorry if the question sounds condescending, but the tone of the announcement really worries me. It would be insane not to commission red team prereaders, but if you did, then the announcement should be talking about the red team's reaction, not Rob's!)

We're targeting a broad audience, and so our focus groups have been more like completely uninformed folks than like informed skeptics. (We've spent plenty of time honing arguments with informed skeptics, but that sort of content will appear in the accompanying online resources, rather than in the book itself.) I think that the quotes the post leads with speak to our ability to engage with our intended audience.

I put in the quote from Rob solely for the purpose of answering the question of whether regular LW readers would have anything to gain personally from the book -- and I think that they probably would, given that even MIRI employees expressed surprise at how much they got out of it :-)

(I have now edited the post to make my intent more clear.)

This strikes me as straightforwardly not the purpose of the book. This is a general-audience book that makes the case, as Nate and Eliezer see it, for both the claim in the title and the need for a halt. This isn’t inside baseball on the exact probability of doom, whether the risks are acceptable given the benefits, whether someone should work at a lab, or any of the other favorite in-group arguments. This is For The Out Group.

Many people (like >100 is my guess), with many different viewpoints, have read the book and offered comments. Some of those comments can be shared publicly and some can’t, as is normal in the publishing industry. Some of those comments shaped the end result, some didn’t.

OK, but is there a version of the MIRI position, more recent than 2022, that's not written for the outgroup?

I'm guessing MIRI's answer is probably something like, "No, and that's fine, because there hasn't been any relevant new evidence since 2022"?

But if you're trying to make the strongest case, I don't think the state of debate in 2022 ever got its four layers.

Take, say, Paul Christiano's 2022 "Where I Agree and Disagree With Eliezer", disagreement #18:

> I think that natural selection is a relatively weak analogy for ML training. The most important disanalogy is that we can deliberately shape ML training. Animal breeding would be a better analogy, and seems to suggest a different and much more tentative conclusion. For example, if humans were being actively bred for corrigibility and friendliness, it looks to me like they would quite likely be corrigible and friendly up through the current distribution of human behavior. If that breeding process was continuously being run carefully by the smartest of the currently-friendly humans, it seems like it would plausibly break down at a level very far beyond current human abilities.

If Christiano is right, that seems like a huge bl...

I'm replying in an awkward superposition, here:

- MIRI staff member, modestly senior (but not a technical researcher), this conversation flagged to my attention in a work Slack msg

- The take I'm about to offer is my own, and iirc has not been seen or commented on by either Nate or Eliezer, and my shoulder-copies of them are lukewarm about it at best

- Nevertheless I think it is essentially true and correct, and likely at least mostly representative of "the MIRI position" insofar as any single coherent one exists; I would expect most arguments about what I'm about to say to be more along the lines of "eh, this is misleading in X or Y way, or will likely imply A or B to most readers that I don't think is true, or puts its emphasis on M when the true problem is N" as opposed to "what? Wrong."

But all things considered, it still seems better to try to speak a little bit on MIRI's behalf, here, rather than pretending that I think this is "just my take" or giving back nothing but radio silence. Grains of salt all around.

The main reason why the selective breeding objection seems to me to be false is something like "tiger fur coloration" + "behavior once outside of the training environm...

An objection I didn't have time for in the above piece is something like "but what about Occam, though, and k-complexity? Won't you most likely get the simple, boring, black shape, if you constrain it as in the above?"

This is why I'm concerned about deleterious effects of writing for the outgroup: I'm worried you end up optimizing your thinking for coming up with eloquent allegories to convey your intuitions to a mass audience, and end up not having time for the actual, non-allegorical explanation that would convince subject-matter experts (whose support would be awfully helpful in the desperate push for a Pause treaty).

I think we have a lot of intriguing theory and evidence pointing to a story where the reason neural networks generalize is because the parameter-to-function mapping is not a one-to-one correspondence, and is biased towards simple functions (as Occam and Solomonoff demand): to a first approximation, SGD is going to find the simplest function that fits the training data (because simple functions correspond to large "basins" of approximately equal loss which are easy for SGD to find because they use fewer parameters or are more robust to some parameters being wrong)...
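A toy way to see what "the parameter-to-function mapping is not one-to-one and is biased towards simple functions" means in practice: sample random parameters for a tiny ReLU network on 3-bit inputs and count how often each of the 256 possible Boolean functions comes out. This is a minimal sketch, not the commenter's own evidence; the network width, the N(0, 1) initialization, and the sample count are arbitrary assumptions, and random sampling is only a stand-in for SGD, so it says nothing by itself about what SGD finds or how strong the bias is at scale.

```python
import itertools
import random
from collections import Counter

# The 8 points of the 3-dimensional Boolean cube {0, 1}^3.
INPUTS = list(itertools.product([0, 1], repeat=3))


def random_network(width, rng):
    """Sample a one-hidden-layer ReLU network with i.i.d. N(0, 1) weights and biases (assumed init)."""
    hidden = [([rng.gauss(0, 1) for _ in range(3)], rng.gauss(0, 1)) for _ in range(width)]
    readout = [rng.gauss(0, 1) for _ in range(width)]
    out_bias = rng.gauss(0, 1)
    return hidden, readout, out_bias


def truth_table(net):
    """Threshold the output at 0 on all 8 inputs; the 8-bit string identifies the Boolean function."""
    hidden, readout, out_bias = net
    bits = []
    for x in INPUTS:
        acts = [max(0.0, sum(w * xi for w, xi in zip(ws, x)) + b) for ws, b in hidden]
        y = sum(v * a for v, a in zip(readout, acts)) + out_bias
        bits.append("1" if y > 0 else "0")
    return "".join(bits)


def function_frequencies(samples=5000, width=8, seed=0):
    """Estimate how often each Boolean function appears when parameters are drawn at random."""
    rng = random.Random(seed)
    counts = Counter(truth_table(random_network(width, rng)) for _ in range(samples))
    return {f: c / samples for f, c in counts.items()}


if __name__ == "__main__":
    freqs = function_frequencies()
    print(f"uniform baseline: {1 / 256:.4f} per function")
    for f, p in sorted(freqs.items(), key=lambda kv: -kv[1])[:5]:
        print(f, f"{p:.4f}")
```

If every function were equally likely, each would show up about 1/256 of the time; in runs like this a few simple functions (typically the constant ones) take a share many times larger. That is the "bias toward" being debated below, not a measure of whether it is strong enough to settle the question.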

I do not see you as failing to be a team player re: existential risk from AI.

I do see you as something like ... making a much larger update on the bias toward simple functions than I do. Like, it feels vaguely akin to ... when someone quotes Ursula K. Le Guin's opinion as if that settles some argument with finality?

I think the bias toward simple functions matters, and is real, and is cause for marginal hope and optimism, but "bias toward" feels insufficiently strong for me to be like "ah, okay, then the problem outlined above isn't actually a problem."

I do not, to be clear, believe that my essay contains falsehoods that become permissible because they help idiots or children make inferential leaps. I in fact thought the things that I said in my essay were true (with decently high confidence), and I still think that they are true (with slightly reduced confidence downstream of stuff like the link above).

(You will never ever ever ever ever see me telling someone a thing I know to be false because I believe that it will result in them outputting a correct belief or a correct behavior; if I do anything remotely like that I will headline explicitly that that's what I'm...

All dimensions that turn out to matter for what? Current AI is already implicitly optimizing people to use the word "delve" more often than they otherwise would, which is weird and unexpected, but not that bad in the grand scheme of things. Further arguments are needed to distinguish whether this ends in "humans dead, all value lost" or "transhuman utopia, but with some weird and unexpected features, which would also be true of the human-intelligence-augmentation trajectory." (I'm not saying I believe in the utopia, but if we want that Pause treaty, we need to find the ironclad arguments that convince skeptical experts, not just appeal to intuition.)

Not sure what he's done on AI since, but Tim Urban's 2015 AI blog post series mentions how he was new to AI or AI risk and spent a little under a month studying and writing those posts. I re-read them a few months ago and immediately recommended them to some other people with no prior AI knowledge, because they have held up remarkably well.

I'm relatively OOTL on AI since GPT-3. My friend is terrified that we need to halt it urgently: I couldn't understand his point of view; he mentioned this book to me. I see a number of pre-readers saying the version they read is well-suited exactly for convincing people like me. At which point: if you believe the threat is imminent, why delay the book four months? I'll read a digital copy today if you point me to it.

I think they are delaying so people can pre-order early, which affects how many books the publisher prints and distributes, which affects how many people ultimately read it and how much it breaks into the Overton window. Getting this conversation mainstream is an important instrumental goal.

If you are looking for info in the mean time you could look at PauseAI:

Or if you want fewer facts and quotes and more discussion, I recall that Yudkowsky’s Coming of Age is what changed my view from "orthogonality kinda makes sense" to "orthogonality is almost certainly correct and the implication is alignment needs more care than humanity is currently giving it".

You might also be better off discussing this more with your friend or the various online communities.

You can also preorder. I'm hopeful that none of the AI labs will destroy the world before the book's release : )

Yeah, I think the book is going to be (by a very large margin) the best resource in the world for this sort of use case. (Though I'm potentially biased as a MIRI employee.) We're not delaying; this is basically as fast as the publishing industry goes, and we expected the audience to be a lot smaller if we self-published. (A more typical timeline would have put the book another 3-20 months out.)

If Eliezer and Nate could release it sooner than September while still gaining the benefits of working with a top publishing house, doing a conventional media tour, etc., then we'd definitely be releasing it immediately. As is, our publisher has done a ton of great work already and has been extremely enthusiastic about this project, in a way that makes me feel way better about this approach. "We have to wait till September" is a real cost of this option, but I think it's a pretty unavoidable cost given that we need this book to reach a lot of people, not just the sort of people who would hear about it from a friend on LessWrong.

I do think there are a lot of good resources already online, like MIRI's recently released intro resource, "The Problem". It's a very different beast from If Anyone Bu...

Note that IFP (a DC-based think tank) recently had someone deliver 535 copies of their new book, one to every US Congressional office.

Note also that my impression is that DC people (even staffers) are much less "online" than tech audiences. Whether or not you copy IFP, I would suggest thinking about in-person distribution opportunities for DC.

I would note that this is, indeed, a very common move in DC. I would also note that many of these copies end up in, e.g., Little Free Libraries and at the Goodwill. (For example, I currently have downstairs a copy of a book by the President of Microsoft's Board, literally still with the letter inside saying "Dear Congressman XYZ, I hope you enjoy my book...")

I am not opposed to MIRI doing this, but just want to flag that this is a regular move in DC. (Which might mean you should absolutely do it since it has survivorship bias as a good lindy idea! Just saying it ain't, like, a brand new strat.)

Would be nice if you can get a warm intro for the book to someone high up in the Vatican too, as well as other potentially influential groups.

Are there any plans for Russian translation? If not, I'm interested in creating it (or even in organizing a truly professional translation, if someone gives me money for it).

We're still in the final proofreading stages for the English version, so the translators haven't started translating yet. But they're queued up.

Given the potentially massive importance of a Chinese version, it may be worth burning $8,000 to start the translation before proofreading is done, particularly if your translators come back with questions that are better clarified in the English text. I'd pay money to help speed this up if that's the bottleneck[1]. When I was in China I didn't have a good way of explaining what I was doing and why.

[1] I'm working mostly off savings and wouldn't especially want to, but I would do it to make it happen.

Something I've done in the past is to run text I intended to have translated through machine translation and then back, with low latency, to gain confidence in the semantic stability of the process.

Rewrite english, click, click.

Rewrite english, click, click.

Rewrite english... click, click... oh! Now it round trips with high fidelity. Excellent. Ship that!
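The rewrite-and-round-trip loop described above is easy to script. A minimal sketch, assuming you have some machine-translation function to plug in: `toy_translate` below is a hypothetical word-for-word stand-in (not any real MT service's API), and word-level `difflib` similarity is a crude, assumed proxy for "semantic stability" that still needs a human reading the result.

```python
from difflib import SequenceMatcher
from typing import Callable, Iterable, Optional, Tuple

# (text, source_lang, target_lang) -> translated text
Translator = Callable[[str, str, str], str]


def round_trip(text: str, translate: Translator, source: str = "en", pivot: str = "zh") -> Tuple[str, float]:
    """Translate into the pivot language and back; score word-level overlap with the original (0 to 1)."""
    there = translate(text, source, pivot)
    back = translate(there, pivot, source)
    score = SequenceMatcher(None, text.lower().split(), back.lower().split()).ratio()
    return back, score


def first_stable_draft(drafts: Iterable[str], translate: Translator, threshold: float = 0.95) -> Optional[str]:
    """Return the first rewrite whose round trip scores at or above threshold, else None."""
    for draft in drafts:
        _, score = round_trip(draft, translate)
        if score >= threshold:
            return draft
    return None


# Hypothetical stand-in for a real MT service: word-for-word lookup that silently drops unknown words.
_EN_TO_XX = {"ai": "ia", "could": "podria", "kill": "matar", "everyone": "todos"}
_XX_TO_EN = {v: k for k, v in _EN_TO_XX.items()}


def toy_translate(text: str, source: str, target: str) -> str:
    table = _EN_TO_XX if source == "en" else _XX_TO_EN
    return " ".join(table[w] for w in text.lower().split() if w in table)


if __name__ == "__main__":
    drafts = ["AI could wipe out everyone", "AI could kill everyone"]
    print(first_stable_draft(drafts, toy_translate))
```

Here the first draft loses "wipe out" on the way there and back (score 0.75), so the loop moves on to the second, which round-trips exactly. With a real translator you would swap in its call and eyeball the back-translations rather than trust the score alone.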

Hi, I've pre-ordered it on the UK Amazon, I hope that works for you. Let me know if I should do something different.

I have a number of reasonably well-respected friends in the University of Cambridge and its associated tech-sphere, I can try to get some of them to give endorsements if you think that will help and can send me a pdf.

This will be a huge help when talking to political representatives. When reaching out to politicians as an AI safety volunteer over the past 6 months, I got a range of reactions:

- They're aware of this issue but can't get traction with fellow politicians, it needs visible public support first

- They're aware but this issue is too complex for the public to understand

- They're unaware but also the public is focused on immediate issues like housing and cost of living

- They're unaware, sounds important, but they lack the resources to look into it

Having a professionally published book will help with all those responses. I am preordering!

I just pre-ordered.

I agree that the cover art seems notably bad. The white text on black background in that font looks like some sort of autogenerated placeholder. I understand you feel over-constrained -- this is just another nudge to think creatively about how to overcome your constraints, e.g. route around your publisher and hire various artists on your own, then poll your friends on the best design.

I would encourage you to send free review copies to prominent nontechnical people who are publicly complaining about AI, if you're not already doing so. Here are some examples I saw in the past few days; I'm sure a dedicated search could turn up lots more (and I encourage people to reply to this comment with more examples):


I would offer both Ted Gioia and the new Pope advance copies. Edit: Pope John XXIII's letter sent during the Cuban Missile Crisis could be an interesting case study here.


This was retweeted by Emma Ashford

Edit: Come to think of it, perhaps there is no reason to preferentially send copies to those who are more inclined to agree? Engaging skeptics of AI risk like e.g. Tyler Cowen might be a good opportunity to show them a better argument and leave them a...

I have pre-ordered it! Hopefully a German pre-order from a local bookstore will make a difference. :-)

For those who can't wait, and most people here probably already know, here is Eliezer's latest interview on that topic: https://www.youtube.com/watch?v=0QmDcQIvSDc. I'm halfway through it and I really like how clearly he thinks and makes his argument; it's still deeply disturbing though.

If you want to hear a younger, more optimistic Eliezer, here's the recording of his Hard AI Future Salon from many years ago, way back in 2006. :-)

https://archive.org/details/FutureSalon_02_2006

He starts his talk at minute 12. There are excellent questions from the audience as well.

I don't know anyone who has thought about and tried to steer us in the right direction on this problem more deeply or for longer than him.

Any info on what counts as "bulk"? I share an Amazon Prime account with my family, so if we each want to buy copies, does it need to be separate orders, separate shipping/billing addresses, separate accounts, or separate websites to not count as "bulk"?

Is an audiobook version also planned, by any chance? Could preordering that one also help?

Given Stephen Fry's endorsement and, as far as I've seen, his long-standing interest in the topic, perhaps a deal could be made (maybe even an eager one) for him to narrate it? Unless some other choice would be better for either party, of course. I also understand if negotiations or existing agreements prevent anyone from confirming anything on this front; I'd be happy just to hear whether an audio version is planned at all, and when, if that can be known.

There is indeed an audiobook version; the site links to https://www.audible.com/pd/If-Anyone-Builds-It-Everyone-Dies-Audiobook/B0F2B8J9H5 (where it says it'll be available September 30) and https://libro.fm/audiobooks/9781668652657-if-anyone-builds-it-everyone-dies (available September 16).

Any updates on the cover? It seems to matter quite a bit; this market has a trading volume of 11k mana and 57 different traders:

https://manifold.markets/ms/yudkowsky-soares-change-the-book-co?r=YWRlbGU

Writing a book is an excellent idea! I found other AI books like Superintelligence much more convenient and thorough than navigating blog posts. I've pre-ordered the book and I'm looking forward to reading it when it comes out.

I just pre-ordered 10 copies. Seems like the most cost effective way to help that I've seen in a long time. (Though yes I'm also going to try to distribute my copies.)

I think that's what they meant you should not do when they said [edit to add: directly quoting a now-modified part of the footnote] "Bulk preorders don’t count, and in fact hurt."

My guess is that "I'm excited and want a few for my friends and family!" is fine if it's happening naturally, and that "I'll buy a large number to pump up the sales" just gets filtered out. But it's hard to say; the people who compile best-seller lists are presumably intentionally opaque about this. I wouldn't sweat it too much as long as you're not trying to game it.

Online advertising can be used to promote books. Unlike many authors, you are not trying to make a profit and can pay for advertising beyond the point where the publisher's marginal cost equals marginal revenue. Do you:

- Have online advertising campaigns set up by your publisher and can absorb donations to spend on more advertising (an LLM I asked doubts that Little, Brown and Company lets authors spend more money)

- Have $$$ to spend on an advertising campaign but don't have the managerial bandwidth to set one up. You'd need logistics support to set up an effective advertising campaign.

- Need both money and logistics for an advertising campaign.

- Alphabet and Meta employees get several hundred dollars per month to spend on advertising (as an incentive to dogfood their products). If LessWrong users employed at those companies set up many $300/month advertising campaigns, that sounds like a worthwhile investment

- Need neither help setting up an advertising campaign nor funds for more advertising (though donations to MIRI are of course always welcome)

We have an advertising campaign planned, and we'll be working with professional publicists. We have a healthy budget for it already :-)

Are there planned translations in general, or is that something that is discussed only after actual success?

Quick note that I can't open the webpage via my institution (same issue on multiple browsers). Their restrictions can be quite annoying and get triggered a lot. I can view it myself easily enough on my phone, but if you want this to get out, beware trivial inconveniences and all that...

Firefox message is below.

Secure Connection Failed

An error occurred during a connection to ifanyonebuildsit.com. Cannot communicate securely with peer: no common encryption algorithm(s).

Error code: SSL_ERROR_NO_CYPHER_OVERLAP

- The page you are trying to view cannot be shown because t

I initially read the title of the post as

Eliezer and I wrote a book: If Anyone Reads It, Everyone Dies

Quite intimidating!

Very exciting; thanks for writing!

I know this is minor, but the image at the bottom of the website looks distractingly wrong to me: the lighting doesn't match where real population centers are. It would be a lot better as something either clearly adapted from the real world or clearly invented; as it is, it's pretty uncanny-valley.

Here in Australia I can only buy the paperback/hardcover versions. Any chance you can convince your publisher/publishers to release the e-book here too?

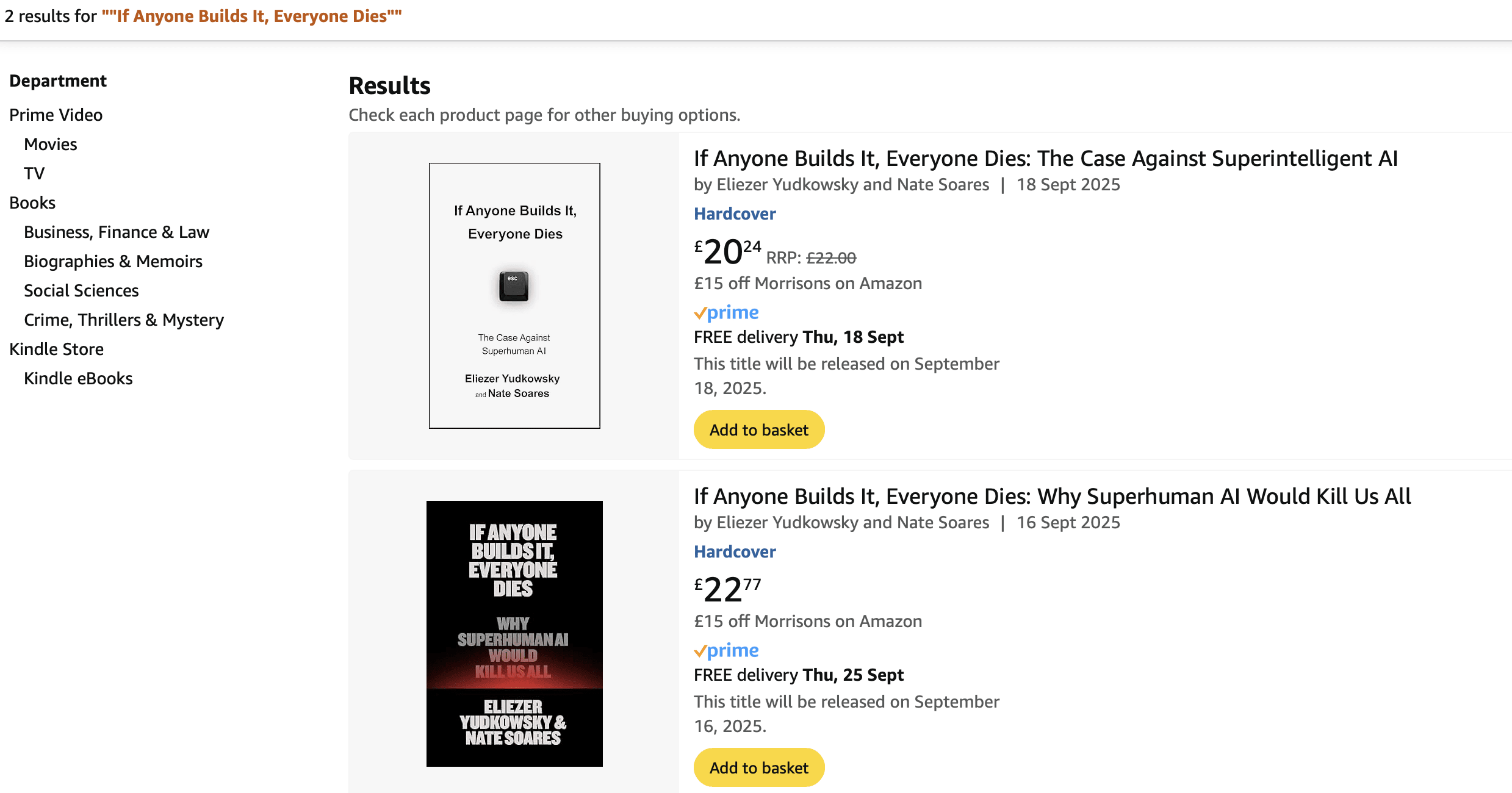

Why do I see two different versions on amazon.co.uk? Both hardcover, same title but different subtitles, different publication dates. The second one has the same cover and title as the one on amazon.com, so presumably that is the one to go for.

There's not a short answer; subtitles and cover art are over-constrained and the choices have many stakeholders (and authors rarely have final say over artwork). The differences reflect different input from different publishing-houses in different territories, who hopefully have decent intuitions about their markets.

Are you American? Because as a British person I would say that the first version looks a lot better to me, and certainly fits the standards for British non-fiction books better.

Though I do agree that the subtitle isn't quite optimal.

I am British. I'm not much impressed by either graphic design, but I'm not a graphic designer and can't articulate why.

> We’re told that 10,000 pre-orders constitutes a good chance of being on the best-seller list (depending on the competition), and 20,000 would be a big deal.

Does hardcover vs. ebook matter here?

Online, I'm seeing several sources say that pre-orders actually hurt on Amazon, because the Amazon algorithm cares about sales and reviews after launch and doesn't count pre-orders. Anyone know about this? If I am buying on Amazon should I wait til launch, or conversely if I'm pre-ordering should I buy elsewhere?

If I want to pre-order but don't use Internet marketplaces and don't have a credit card, are there options for that (e.g. going to a physical store and asking them to pre-order)?

How come B&N can ship to a ton of different countries including San Marino and the Vatican but not Italy???

On the German bookstore website, I can order either the American or the UK version. I assume it does not make a difference for the whole preorder argument? The American epub is cheaper and is published two days earlier:

Amazon's best-seller standings. I wouldn't make too much of this; their categorization is wonky. (I also have no clue what the lookback window is, what they make of preorders, etc.)

#5 in "Technology"

#4,537 in all books

#11 in engineering

#14 in semantics and AI (how is this so much lower than "technology"?)

In short: showing up! It could be grabbing someone's eye right now. Still drowned out by Yuval Noah Harari, Ethan Mollick, Ray Kurzweil, et al.

From the MIRI announcement:

Our big ask for you is: If you have any way to help this book do shockingly, absurdly well— in ways that prompt a serious and sober response from the world — then now is the time.

sober response from the world

sober response

Uh... this is debatably a lot to ask of the world right now.

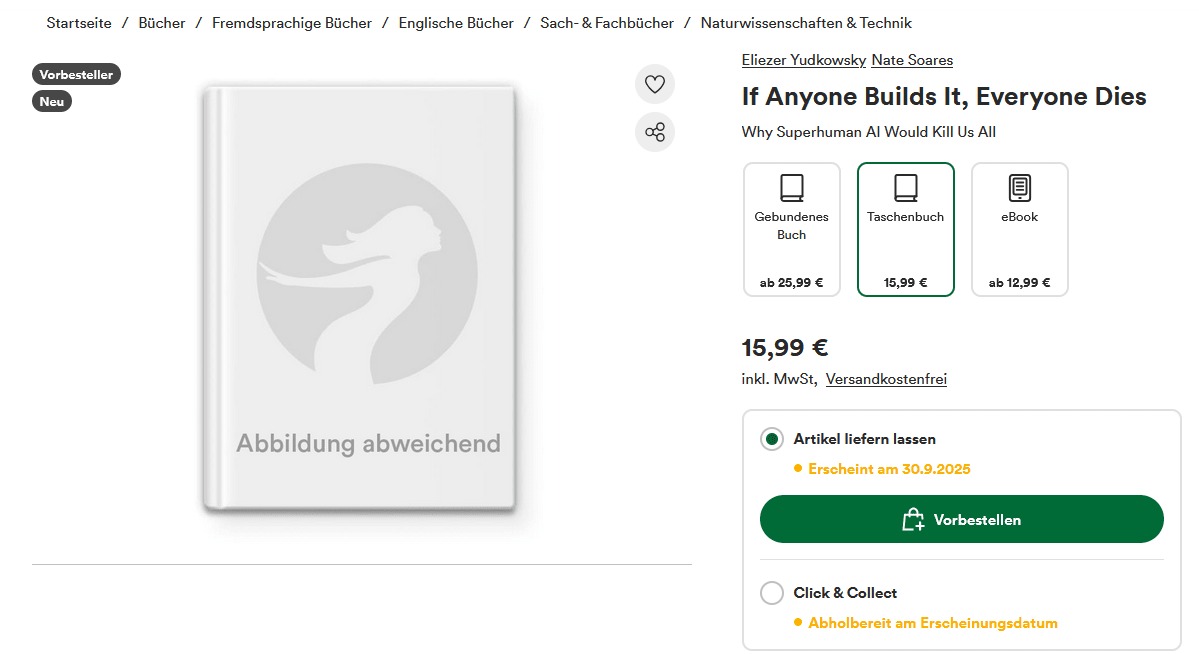

A German bookseller claims there is a softcover (Taschenbuch) version available for preorder: https://www.thalia.de/shop/home/artikeldetails/A1075128502

Is that correct? It does not seem to be available on any US website.

Can you please make it available at a cheaper rate in India?🙏 I'm very interested in alignment, but I'm still a student, and the USD price is too expensive :(

Eliezer and I wrote a book. It’s titled If Anyone Builds It, Everyone Dies. Unlike a lot of other writing either of us has done, it’s being professionally published. It’s hitting shelves on September 16th.

It’s a concise (~60k word) book aimed at a broad audience. It’s been well-received by people who received advance copies, with some endorsements including:

- Stephen Fry, actor, broadcaster, and writer

- Tim Urban, co-founder, Wait But Why

- Yishan Wong, former CEO of Reddit

Lots of people are alarmed about AI, and many of them are worried about sounding alarmist. With our book, we’re trying to break that logjam, and bring this conversation into the mainstream.

This is our big push to get the world onto a different track. We’ve been working on it for over a year. The time feels ripe to me. I don’t know how many more chances we’ll get. MIRI’s dedicating a lot of resources towards making this push go well. If you share any of my hope, I’d be honored by you doing whatever you can to help the book make a huge splash, once it hits shelves.

One thing that our publishers tell us would help is preorders. Preorders count towards first-week sales, which determine a book’s ranking on the best-seller list, which has a big effect on how many people read it. And, inconveniently, early pre-orders affect the number of copies that get printed, which affects how much stock publishers and retailers wind up having on hand, which affects how much they promote the book and display it prominently. So preorders are valuable,[1][2] and they’re especially valuable before the first print (mid-June) and the second print (mid-July). We’re told that 10,000 pre-orders constitutes a good chance of being on the best-seller list (depending on the competition), and 20,000 would be a big deal. Those numbers seem to me like they’re inside the range of possibility, and they’re small enough that each individual preorder makes a difference.

If you’ve been putting off sharing your views on AI with your friends and family, this summer might be a good time for it. Especially if your friends and family are the sort of people who’d pre-order a book in June even if it won’t hit shelves until September.

Another thing that I expect to help is discussing the book once it comes out, to help generate buzz that helps increase the impact. Especially if you have a social media platform. If you’ve got a big or interesting platform, I’d be happy to coordinate about what timings are most impactful (according to the publicists) and perhaps even provide an advance copy (if you want to have content queued up), though we can't offer that to everyone.

Some of you have famous friends that might provide endorsements to match or exceed the ones above. Extra endorsements would be especially valuable if they come in before May 30, in which case they could be printed in or on the book; but they’re still valuable later for use on the website and in promotional material. If you have an idea, I invite you to DM me and we might be able to share an advance copy with your contact.

(And, of course, maybe you don’t share my hope that this book can bring the conversation to the mainstream, or are reserving judgement until you’ve read the dang thing. To state the obvious, that’d make sense too.)

I’ve been positively surprised by the reception the book has gotten thus far. If you're a LessWrong regular, you might wonder whether the book contains anything new for you personally. The content won’t come as a shock to folks who have read or listened to a bunch of what Eliezer and I have to say, but it nevertheless contains some new articulations of our arguments, that I think are better articulations than we’ve ever managed before. For example, Rob Bensinger (of MIRI) read a draft and said:

Other MIRI staff report that the book helped them fit the whole argument in their head better, or that it made sharp some intuitions they had that were previously vague. So you might get something out of it even if you’ve been around a while. And between these sorts of reactions among MIRI employees and the reactions from others quoted at the top of this post, you might consider that this book really does have a chance of blowing the Overton window wide open.

As Rob said in the MIRI newsletter recently:

That’s what we’re going for. And seeing the reception of early drafts, I have a glimmer of hope. Perhaps humanity can yet jolt into action and change our course before it’s too late. If you, too, see that glimmer of hope, I’d be honored by your aid.

Also we have a stellar website made by LessWrong’s very own Oliver Habryka, where you can preorder the book today: IfAnyoneBuildsIt.com.

Bulk preorders don’t count. The people who compile bestseller lists distinguish and discount bulk preorders. ↩︎

We're told that hardcover preorders count for a little more than e-book preorders, if it's all the same to you. I mostly recommend just buying whichever versions you actually want. ↩︎