The Best of LessWrong

For the years 2018, 2019 and 2020 we also published physical books with the results of our annual vote, which you can buy and learn more about here.

Rationality

Optimization


World

Practical

AI Strategy

Technical AI Safety


If you're looking for ways to help with the whole “the world looks pretty doomed” business, here's my advice: look around for places where we're all being total idiots. Look around for places where something seems incompetently run, or hopelessly inept, and where some part of you thinks you can do better.

Then do it better.

As resources become abundant, the bottleneck shifts to other resources. Power or money are no longer the limiting factors past a certain point; knowledge becomes the bottleneck. Knowledge can't be reliably bought, and acquiring it is difficult. Therefore, investments in knowledge (e.g. understanding systems at a gears-level) become the most valuable investments.

The author argues that it may be possible to significantly enhance adult intelligence through gene editing. They discuss potential delivery methods, editing techniques, and challenges. While acknowledging uncertainties, they believe this could have a major impact on human capabilities and potentially help with AI alignment. They propose starting with cell culture experiments and animal studies.

John Wentworth argues that becoming one of the best in the world at *one* specific skill is hard, but it's not as hard to become the best in the world at the *combination* of two (or more) different skills. He calls this being "Pareto best" and argues it can circumvent the generalized efficient markets principle.

We're used to the economy growing a few percent per year. But this is a very unusual situation. Zooming out to all of history, we see that growth has been accelerating, that it's near its historical high point, and that it's faster than it can be for all that much longer. There aren't enough atoms in the galaxy to sustain this rate of growth for even another 10,000 years!

What comes next – stagnation, explosion, or collapse?
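The atoms-in-the-galaxy claim can be checked with back-of-the-envelope arithmetic. The sketch below is only illustrative: the 2% annual growth rate and the figure of 10^70 atoms are assumptions chosen for the example (the blurb says only "a few percent" and gives no atom count), and `years_to_multiply` is a hypothetical helper name.

```python
import math

# Both numbers below are assumptions for illustration, not figures from the post.
ASSUMED_GROWTH_RATE = 0.02      # 2% per year, standing in for "a few percent"
ASSUMED_ATOMS_IN_GALAXY = 1e70  # a generous order-of-magnitude guess


def years_to_multiply(factor: float, rate: float) -> float:
    """Years of compound growth at `rate` needed to grow by `factor`."""
    return math.log(factor) / math.log(1 + rate)


years = years_to_multiply(ASSUMED_ATOMS_IN_GALAXY, ASSUMED_GROWTH_RATE)
print(f"Economy grows by a factor of 1e70 after about {years:,.0f} years")
```

Under these assumptions, the economy would multiply by the assumed number of atoms in roughly 8,100 years, which is inside the 10,000-year window the blurb mentions. A higher growth rate or a smaller atom count only shortens that horizon.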

"Some of the people who have most inspired me have been inexcusably wrong on basic issues. But you only need one world-changing revelation to be worth reading."

Scott argues that our interest in thinkers should not be determined by their worst idea, or even their average idea, but by their best ideas. Some of the best thinkers in history believed ludicrous things, like Newton believing in Bible codes.

The "tails coming apart" is a phenomenon where two variables can be highly correlated overall, but at extreme values they diverge. Scott Alexander explores how this applies to complex concepts like happiness and morality, where our intuitions work well for common situations but break down in extreme scenarios.

According to Zvi, people have a warped sense of justice. For any harm you cause, regardless of intention or motive, you earn "negative points" that merit punishment. At least implicitly, however, people want to reward the good outcomes a person causes only if their sole goal was being altruistic. Curing illness to make a profit? No "positive points" for you!

Jenn spent 5000 hours working at non-EA charities and learned a number of things about working with more mature organizations in more mature ecosystems that may not be obvious to effective altruists.

Prediction markets are a potential way to harness wisdom of crowds and incentivize truth-seeking. But they're tricky to set up correctly. Zvi Mowshowitz, who has extensive experience with prediction markets and sports betting, explains the key factors that make prediction markets succeed or fail.

Back in the early days of factories, workplace injury rates were enormous. Over time, safety engineering took hold, various legal reforms were passed (most notably liability law), and those rates dramatically dropped. This is the story of how factories went from death traps to relatively safe.

Many of the most profitable jobs and companies are primarily about solving coordination problems. This suggests "coordination problems" are an unusually tight bottleneck for productive economic activity. John explores implications of looking at the world through this lens.

John made his own COVID-19 vaccine at home using open source instructions. Here's how he did it and why.

Democratic processes are important loci of power. It's useful to understand the dynamics of the voting methods used in real-world elections. My own ideas of ethics and of fun theory are deeply informed by my decades of interest in voting theory.

It's wild to think that humanity might expand throughout the galaxy in the next century or two. But it's also wild to think that we definitely won't. In fact, all views about humanity's long-term future are pretty wild when you think about it. We're in a wild situation!

One winter a grasshopper, starving and frail, approaches a colony of ants drying out their grain in the sun to ask for food, having spent the summer singing and dancing.

Then, various things happen.

Nonprofit boards have great power, but low engagement, unclear responsibility, and no accountability. There's also a shortage of good guidance on how to be an effective board member. Holden gives recommendations on how to do it well, but the whole structure is inherently weird and challenging.

Scott Alexander's "Meditations on Moloch" paints a gloomy picture of the world being inevitably consumed by destructive forces of competition and optimization. But Zvi argues this isn't actually how the world works - we've managed to resist and overcome these forces throughout history.

Success is supposed to open doors and broaden horizons. But often it can do the opposite - trapping people in narrow specialties or roles they've outgrown. This post explores how success can sometimes be the enemy of personal freedom and growth, and how to maintain flexibility as you become more successful.

A book review examining Elinor Ostrom's "Governance of the Commons", in light of Eliezer Yudkowsky's "Inadequate Equilibria." Are successful local institutions for governing common pool resources possible without government intervention? Under what circumstances can such institutions emerge spontaneously to solve coordination problems?

Jeff argues that people should fill in some of the San Francisco Bay, south of the Dumbarton Bridge, to create new land for housing. This would allow millions of people to live closer to jobs, reducing sprawl and traffic. While there are environmental concerns, the benefits of dense urban housing outweigh the localized impacts.

Crawford looks back on past celebrations of achievements like the US transcontinental railroad, the Brooklyn Bridge, electric lighting, the polio vaccine, and the Moon landing. He then asks: Why haven't we celebrated any major achievements lately? He explores some hypotheses for this change.

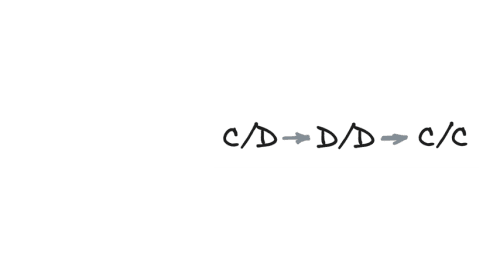

You've probably heard about the "tit-for-tat" strategy in the iterated prisoner's dilemma. But have you heard of the Pavlov strategy? The simple strategy performs surprisingly well in certain conditions. Why don't we talk about Pavlov strategy as much as Tit-for-Tat strategy?
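One condition where the difference shows up is noise. The following is a minimal sketch, not taken from the post: it assumes the usual description of Pavlov as "win-stay, lose-shift" (cooperate exactly when both players made the same move last round), and it injects a single accidental defection. The function names and the `flip_round` parameter are hypothetical.

```python
C, D = "C", "D"


def tit_for_tat(my_last, their_last):
    """Cooperate first, then copy the opponent's previous move."""
    return C if their_last is None else their_last


def pavlov(my_last, their_last):
    """Win-stay, lose-shift: cooperate iff both players moved alike last round."""
    if my_last is None:
        return C
    return C if my_last == their_last else D


def play(strategy_a, strategy_b, rounds, flip_round):
    """Play two strategies; player A's move is flipped once at flip_round."""
    history = []
    a_last = b_last = None
    for r in range(rounds):
        a = strategy_a(a_last, b_last)
        b = strategy_b(b_last, a_last)
        if r == flip_round:  # simulate one execution error by player A
            a = D if a == C else C
        history.append((a, b))
        a_last, b_last = a, b
    return history


tft_history = play(tit_for_tat, tit_for_tat, rounds=8, flip_round=2)
pavlov_history = play(pavlov, pavlov, rounds=8, flip_round=2)
print("TFT vs TFT:      ", tft_history)
print("Pavlov vs Pavlov:", pavlov_history)
```

In this toy setup, two Tit-for-Tat players echo the single mistake back and forth and never return to mutual cooperation, while two Pavlov players defect together once and then resume cooperating.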

A thoughtful exploration of the risks and benefits of sharing information about biosecurity and biological risks. The authors argue that while there are real risks to sharing sensitive information, there are also important benefits that need to be weighed carefully. They provide frameworks for thinking through these tradeoffs.

What if our universe's resources are just a drop in the bucket compared to what's out there? We might be able to influence or escape to much larger universes that are simulating us or can otherwise be controlled by us. This could be a source of vastly more potential value than just using the resources in our own universe.

AI Impacts investigated dozens of technological trends, looking for examples of discontinuous progress (where more than a century of progress happened at once). They found ten robust cases, such as the first nuclear weapons, and the Great Eastern steamship.

They hope the data can inform expectations about discontinuities in AI development.

Harmful people often lack explicit malicious intent. It’s worth deploying your social or community defenses against them anyway.

It might be that some elements of human intelligence (at least at the civilizational level) are culturally/memetically transmitted. All fine and good in theory. Except the social hypercompetition between people and the intense selection pressure on ideas online might be eroding our world's intelligence. Eliezer wonders if he's only who he is because he grew up reading old science fiction from before the current era's memes.

A counterintuitive concept: Sometimes people choose the worse option, to signal their loyalty or values in situations where that loyalty might be in question. Zvi explores this idea of "motive ambiguity" and how it can lead to perverse incentives.

A person wakes up from cryonic freeze in a post-apocalyptic future. A "scissor" statement – an AI-generated statement designed to provoke maximum controversy – has led to massive conflict and destruction. The survivors are those who managed to work with people they morally despise.

The Amish relationship to technology is not "stick to technology from the 1800s", but rather "carefully think about how technology will affect your culture, and only include technology that does what you want." Raemon explores how these ideas could potentially be applied in other contexts.

It is often stated (with some justification, IMO) that AI risk is an “emergency.” Various people have explained to me that they put various parts of their normal life’s functioning on hold on account of AI being an “emergency.” In the interest of people doing this sanely and not confusedly, let's take a step back and seek principles around what kinds of changes a person might want to make in an “emergency” of different sorts.

Power allows people to benefit from immoral acts without having to take responsibility or even be aware of them. The most powerful person in a situation may not be the most morally culpable, as they can remain distant from the actual "crime". If you're not actively looking into how your wants are being met, you may be unknowingly benefiting from something unethical.

You've probably heard that a nuclear war between major powers would cause human extinction. This post argues that while nuclear war would be incredibly destructive, it's unlikely to actually cause human extinction. The main risks come from potential climate effects, but even in severe scenarios some human populations would likely survive.

All sorts of everyday practices in the legal system, medicine, software, and other areas of life involve stating things that aren't true. But calling these practices "lies" or "fraud" seems to be perceived as an attack rather than a straightforward description. This makes it difficult to discuss and analyze these practices without provoking emotional defensiveness.

An in-depth overview of Georgism, a school of political economy that advocates for a Land Value Tax (LVT). The tax aims to discourage land speculation and rent-seeking behavior, promote more efficient use of land, make housing more affordable, and make taxation more efficient.

Said argues that there's no such thing as a real exception to a rule. If you find an exception, this means you need to update the rule itself. The "real" rule is always the one that already takes into account all possible exceptions.

Under conditions of perfectly intense competition, evolution works like water flowing down a hill – it can never go up even the tiniest elevation. But if there is slack in the selection process, it's possible for evolution to escape local minima. "How much slack is optimal?" is an interesting question, which Scott explores in various contexts.

Elizabeth argues that veganism comes with trade-offs, including potential health issues, that are often downplayed or denied by vegan advocates. She calls for more honesty about these challenges from the vegan community.

Smart people are failing to provide strong arguments for why blackmail should be illegal. Robin Hanson is explicitly arguing it should be legal. Zvi Mowshowitz argues this is wrong, and gives his perspective on why blackmail is bad.

Nuclear power once seemed to be the energy of the future, but has failed to live up to that promise. Why? Jason Crawford summarizes Jack Devanney's book "Why Nuclear Power Has Been a Flop", which blames overregulation driven by unrealistic radiation safety models.

The credit assignment problem – the challenge of figuring out which parts of a complex system deserve credit for good or bad outcomes – shows up just about everywhere. Abram Demski describes how credit assignment appears in areas as diverse as AI, politics, economics, law, sociology, biology, ethics, and epistemology.

Robin Hanson asked "Why do people like complex rules instead of simple rules?" and gave 12 examples.

Zvi responds with a detailed analysis of each example, suggesting that the desire for complex rules often stems from issues like Goodhart's Law, the Copenhagen Interpretation of Ethics, power dynamics, and the need to consider factors that can't be explicitly stated.
